Check out our coverage of DesignCon 2024.

The computational demands of AI are immense. But it’s connectivity, not computing, that’s becoming a limiting factor on the future of AI and high-performance computing (HPC) applications in data centers.

Synopsys pushes the limits of high-bandwidth, low-latency connectivity with the first complete set of IP for 1.6-Tb/s Ethernet. Using PAM4 signaling, the IP delivers up to 1.6 Tb/s of bandwidth. That is double what's possible with today's 800-Gb/s Ethernet, at half the power, according to the company. The IP includes 224-Gb/s Ethernet PHYs designed to meet the performance requirements of chip-to-chip, chip-to-module, long-reach copper cable, optical, and PCB backplane connections over distances from as short as 1 m to more than 1 km.
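To see how 224-Gb/s PHYs add up to a 1.6-Tb/s port, here's a back-of-envelope sketch. It makes two assumptions of our own, not figures from Synopsys. First, the port has 8 electrical lanes. Second, 224 Gb/s is the raw per-lane line rate, so anything above the 200 Gb/s of payload per lane is headroom for FEC and encoding overhead. The sketch also shows why PAM4 matters: carrying 2 bits per symbol halves the symbol rate compared with two-level NRZ signaling.

```python
# Back-of-envelope lane math for a 1.6-Tb/s Ethernet port.
# Assumptions (not from Synopsys): an 8-lane port, with 224 Gb/s taken as
# the raw per-lane SerDes line rate.

PAM4_BITS_PER_SYMBOL = 2  # four amplitude levels -> log2(4) = 2 bits per symbol
NRZ_BITS_PER_SYMBOL = 1   # two amplitude levels -> 1 bit per symbol


def per_lane_payload_gbps(port_gbps: float, lanes: int) -> float:
    """Payload bandwidth each lane must carry."""
    return port_gbps / lanes


def symbol_rate_gbaud(line_rate_gbps: float, bits_per_symbol: int) -> float:
    """Symbol (baud) rate needed to carry a given line rate."""
    return line_rate_gbps / bits_per_symbol


PORT_GBPS = 1600.0
LANES = 8            # assumed lane count
SERDES_GBPS = 224.0  # assumed raw per-lane line rate

payload = per_lane_payload_gbps(PORT_GBPS, LANES)                 # 200.0 Gb/s per lane
headroom = SERDES_GBPS - payload                                   # 24.0 Gb/s above the payload rate
pam4_baud = symbol_rate_gbaud(SERDES_GBPS, PAM4_BITS_PER_SYMBOL)   # 112.0 GBd
nrz_baud = symbol_rate_gbaud(SERDES_GBPS, NRZ_BITS_PER_SYMBOL)     # 224.0 GBd

print(f"payload per lane: {payload} Gb/s, headroom: {headroom} Gb/s")
print(f"PAM4: {pam4_baud} GBd vs. NRZ: {nrz_baud} GBd")
```

Halving the symbol rate also roughly halves the analog bandwidth the channel must pass. That trade is what lets PAM4 push per-lane rates this high, at the cost of smaller spacing between signal levels.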

“The massive compute demands of hyperscale data centers require significantly faster Ethernet speeds to enable emerging AI workloads,” said John Koeter, SVP of marketing and strategy for IP at Synopsys.

Synopsys said the IP forms a complete, general-purpose solution for 1.6T Ethernet that everyone from chip designers to systems companies can build into next-gen AI accelerators and other data-center silicon.

The Ethernet controller IP is also backward compatible. Beyond 1.6T, it supports Ethernet rates from 10G up through 200G, 400G, and 800G, according to the semiconductor IP leader.

While Synopsys is widely regarded as one of the leading players in EDA software for chip design, the Silicon Valley-based company is also a major supplier of IP that can be plugged into larger systems-on-chip (SoCs), including IP for high-bandwidth data connectivity. Synopsys presented the 224G Ethernet PHY at DesignCon in January before rolling out the full 1.6T Ethernet solution last month.

Faster Ethernet for the Future of AI

Ethernet has been the networking backbone of the data center for decades. It's primarily used to span short to long distances, linking servers and switches within a rack and from one rack to the next.

While the technology industry at large is racing to roll out 400-Gb/s Ethernet to stay a step ahead of the huge amounts of data entering data centers, cloud providers and other companies on the forefront of the AI era are pushing the pace even faster. They’re building the largest data centers in the world and upgrading to ultra-fast connectivity based on 800-Gb/s Ethernet to unclog the sprawling networks behind them.

But AI, and the vast amounts of data used in training and inference, will eventually bog down even state-of-the-art Ethernet. High-bandwidth, low-latency networking is a must-have to handle the large language models (LLMs) at the heart of the AI boom. Google's Gemini, Meta's Llama, and other high-end models can have hundreds of billions of parameters or more. These must be stored in memory and run on large clusters of AI accelerators.
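A bit of arithmetic shows why link speed matters at this scale. The sketch below computes the size of a model's weights and how long they would take to cross a single Ethernet link. It rests on three assumptions. The model has 70 billion parameters, the size of some Llama models. Each parameter takes 2 bytes, as in BF16/FP16. The link is idealized and runs at its full nominal rate with zero protocol overhead.

```python
# Illustrative arithmetic: how big is a model's weight set, and how long
# would it take to move it over one Ethernet link?
# Assumptions: 70 billion parameters, 2 bytes per parameter (BF16/FP16),
# and an idealized link running at its nominal rate with no overhead.

BYTES_PER_PARAM_BF16 = 2


def weights_bytes(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_BF16) -> float:
    """Memory needed just to hold the model weights."""
    return num_params * bytes_per_param


def transfer_seconds(num_bytes: float, link_bps: float) -> float:
    """Ideal time to push num_bytes through a link of link_bps bits per second."""
    return num_bytes * 8 / link_bps


size = weights_bytes(70e9)
print(f"weights: {size / 1e9:.0f} GB")  # weights: 140 GB
for name, rate in [("400G", 400e9), ("800G", 800e9), ("1.6T", 1.6e12)]:
    # 2.80 s, 1.40 s, 0.70 s respectively
    print(f"{name}: {transfer_seconds(size, rate):.2f} s")
```

Each doubling of link rate halves the ideal transfer time. In a cluster that repeatedly exchanges data of this order of magnitude between accelerators, that time adds up.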

GPUs such as NVIDIA's Blackwell are the gold standard for AI computation in the data center. But they rarely work alone. To train and run the largest, most intricate models, up to tens of thousands of chips are lashed together into clusters that must act, as much as possible, like a single system. Within a server, and across servers in the same rack, they communicate over short-reach links such as PCIe. Ethernet is an ideal protocol for bridging the longer distances between the racks themselves.

Besides AI hardware, everything from networking to memory is also evolving to be disaggregated. These building blocks are placed in separate boxes that interact with each other using copper or optical interconnects or across backplanes on the printed circuit board (PCB). Since Ethernet is the de facto standard interface for box-to-box connectivity, the risk is that it becomes a speed bottleneck for the future of AI.

“AI [and] ML servers will expand from eight GPUs today to 16 and 32 GPUs over the next several years,” said Alan Weckel, co-founder of 650 Group, a technology research firm. “To enable those systems to scale efficiently, 1.6T Ethernet will be a crucial driver to the interconnect.”

But boosting Ethernet from 800 Gb/s to 1.6 Tb/s is a daunting task. Steep challenges must be solved in everything from power efficiency and signal integrity to latency and cost.

PHY and Controller IP Unlocks Ultra-Fast Ethernet

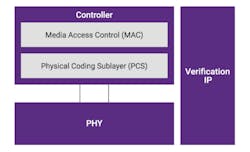

Synopsys is trying to tackle these challenges by giving its customers everything from the controller IP to the PHY to verification IP, creating a complete subsystem that can handle the complexities of ultra-fast 1.6T Ethernet.

The controller comprises the media access control (MAC) layer and other key building blocks of the Ethernet protocol. It bakes everything required to handle the complexities of high-speed connectivity into silicon.
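As a rough illustration of what a MAC does, the sketch below covers two of its classic jobs. The first is framing: destination and source addresses, an EtherType, and zero-padding short frames to the 64-byte minimum. The second is appending the frame check sequence (FCS), a CRC-32 the receiver uses to detect corrupted frames. This is a simplified software model. It omits the preamble, VLAN tags, the physical coding sublayer, and FEC, and it is not Synopsys's design. A 1.6T controller does this work in hardware at vastly higher rates. The function names `build_frame` and `fcs_ok` are hypothetical.

```python
import struct
import zlib

MIN_FRAME_NO_FCS = 60  # 64-byte minimum Ethernet frame minus the 4-byte FCS


def build_frame(dst: bytes, src: bytes, ethertype: int, payload: bytes) -> bytes:
    """Assemble a basic untagged Ethernet frame and append its FCS."""
    if len(dst) != 6 or len(src) != 6:
        raise ValueError("MAC addresses must be 6 bytes")
    body = dst + src + struct.pack("!H", ethertype) + payload
    if len(body) < MIN_FRAME_NO_FCS:
        body += bytes(MIN_FRAME_NO_FCS - len(body))  # zero-pad short frames
    # The Ethernet FCS uses the same CRC-32 that zlib.crc32 computes;
    # it is sent least-significant byte first, hence "<I".
    fcs = struct.pack("<I", zlib.crc32(body) & 0xFFFFFFFF)
    return body + fcs


def fcs_ok(frame: bytes) -> bool:
    """Recompute the CRC over everything but the FCS and compare."""
    body, fcs = frame[:-4], frame[-4:]
    return struct.pack("<I", zlib.crc32(body) & 0xFFFFFFFF) == fcs


frame = build_frame(bytes.fromhex("ffffffffffff"), bytes.fromhex("020000000001"),
                    0x0800, b"hello")
print(len(frame), fcs_ok(frame))  # 64 True
```

The CRC only *detects* errors, and a frame that fails the check is dropped. Correcting errors on the wire is the job of FEC.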

The more advanced signaling and faster speeds behind 1.6T Ethernet come with a catch: the high-speed signal can be distorted or corrupted in transmission, raising the bit error rate (BER). Given the speed of the signals traveling over each physical link, one of the core features of the 1.6T Ethernet controller IP is forward error correction (FEC). FEC adds redundant bits so that the receiver can detect and correct errors without requesting a retransmission.
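The principle behind FEC fits in a few lines of code. High-speed Ethernet uses Reed-Solomon codes, such as the RS(544,514) code in 400G and 800G Ethernet, and Synopsys says its own FEC algorithms are patented. The toy below instead swaps in a far simpler code, Hamming(7,4), purely to show the idea. Three parity bits protect four data bits, and the receiver can locate and flip back any single corrupted bit in the 7-bit codeword.

```python
# Toy FEC: Hamming(7,4). This is a teaching stand-in, NOT the Reed-Solomon
# FEC used in high-speed Ethernet. Codeword positions 1..7 hold
# p1 p2 d1 p3 d2 d3 d4 (parity bits at the power-of-two positions).


def hamming74_encode(d: list[int]) -> list[int]:
    """Encode 4 data bits into a 7-bit codeword."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(c: list[int]) -> list[int]:
    """Correct up to one flipped bit, then return the 4 data bits."""
    c = list(c)
    # Re-check each parity group; the three results form the 1-based
    # position of a single-bit error (0 means no error detected).
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    pos = s1 + 2 * s2 + 4 * s3
    if pos:
        c[pos - 1] ^= 1  # flip the bad bit back
    return [c[2], c[4], c[5], c[6]]


sent = hamming74_encode([1, 0, 1, 1])
received = list(sent)
received[5] ^= 1                     # the channel flips one bit
print(hamming74_decode(received))    # [1, 0, 1, 1]
```

Real Ethernet FEC works on multi-bit symbols and can repair many errors per codeword. It still follows the same pattern: spend some bandwidth on redundancy to buy a lower post-correction BER.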

The PHY translates the digital data from the controller to analog signals and then physically shoots all the signals from Point A to Point B in a system. High performance and low latency are critical in PHYs.
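Here is a minimal sketch of the digital-to-analog mapping a PAM4 PHY performs. Each pair of bits becomes one of four amplitude levels. The receiver "slices" each noisy sample to the nearest level and maps it back to bits. The sketch uses Gray coding, in which neighboring levels differ by a single bit, as Ethernet's PAM4 PHYs do; a small slicing error therefore usually costs only one bit. The normalized levels of -3, -1, +1, and +3 are our assumption. Equalization, clock recovery, and real noise are all left out.

```python
# Idealized Gray-coded PAM4 mapper and slicer (no equalization, no noise model).
# Levels normalized to -3, -1, +1, +3 for illustration.
GRAY_PAM4 = {(0, 0): -3, (0, 1): -1, (1, 1): 1, (1, 0): 3}
LEVEL_TO_BITS = {level: bits for bits, level in GRAY_PAM4.items()}


def pam4_modulate(bits: list[int]) -> list[int]:
    """Map each pair of bits to one PAM4 amplitude level."""
    if len(bits) % 2:
        raise ValueError("PAM4 needs an even number of bits")
    return [GRAY_PAM4[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


def pam4_slice(samples: list[float]) -> list[int]:
    """Pick the nearest level for each received sample, then unmap it to bits."""
    bits: list[int] = []
    for x in samples:
        level = min(LEVEL_TO_BITS, key=lambda lv: abs(x - lv))
        bits.extend(LEVEL_TO_BITS[level])
    return bits


symbols = pam4_modulate([0, 0, 0, 1, 1, 1, 1, 0])
print(symbols)                                # [-3, -1, 1, 3]
print(pam4_slice([-2.8, -0.7, 1.2, 2.6]))    # [0, 0, 0, 1, 1, 1, 1, 0]
```

Four levels packed into the same voltage swing sit closer together than NRZ's two. That tighter spacing is why PAM4 links lean so heavily on FEC and on channel margin.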

Synopsys said the Ethernet PHY, which is based on 224-Gb/s SerDes, uses PAM4 signaling to sustain signal integrity at high speeds without adding to the post-FEC BER. The IP also provides extra margin for the losses that accumulate as the signal travels out of the IC package, across the PCB or backplane, and through the connectors and cables in the system. Each of these weakens the signal as it traverses the channel.
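"Margin" can be made concrete with a simple loss budget. Expressed in decibels, the insertion losses of cascaded segments (package, PCB, connector, cable) just add. The margin is whatever remains between that sum and the budget the PHY can tolerate. Every number below is a hypothetical placeholder, not a Synopsys or IEEE 802.3 figure. Real channel specifications are frequency-dependent and also account for effects such as reflections and crosstalk.

```python
# Minimal insertion-loss budget. In dB, losses of cascaded segments add.
# All values are hypothetical placeholders for illustration only.


def total_loss_db(segment_losses_db: dict[str, float]) -> float:
    """Sum the losses of every segment in the channel."""
    return sum(segment_losses_db.values())


def margin_db(budget_db: float, segment_losses_db: dict[str, float]) -> float:
    """Remaining margin; a negative value means the channel exceeds the budget."""
    return budget_db - total_loss_db(segment_losses_db)


def amplitude_ratio(loss_db: float) -> float:
    """Fraction of the transmitted signal amplitude that survives a given loss."""
    return 10 ** (-loss_db / 20)


channel = {
    "TX package": 2.0,
    "PCB trace": 10.0,
    "connector": 1.5,
    "cable": 15.0,
    "RX package": 2.0,
}
BUDGET_DB = 35.0  # hypothetical end-to-end budget

print(f"total loss: {total_loss_db(channel)} dB")    # total loss: 30.5 dB
print(f"margin: {margin_db(BUDGET_DB, channel)} dB")  # margin: 4.5 dB
print(f"amplitude left: {amplitude_ratio(total_loss_db(channel)):.3f}")
```

A PHY that tolerates more loss leaves more of that budget free for longer traces, extra connectors, or cheaper materials.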

According to Synopsys, the complete set of IP can cut total Ethernet power consumption by 50% compared with existing SoCs. The company said its new multi-channel, multi-rate Ethernet controllers handle 1.6 Tb/s while reducing latency by 40% and silicon area by up to 50% relative to the 800-Gb/s controllers in today's SoCs, primarily thanks to patented FEC algorithms inside the IP.
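A "multi-channel, multi-rate" controller can split its aggregate bandwidth across several ports running at different Ethernet speeds. The hypothetical check below captures that idea in its simplest form. Every requested port rate must be supported, and together the ports must fit within 1.6 Tb/s and a channel limit. The 25G, 50G, and 100G rates filling the gap between 10G and 200G are our assumption. So is the 16-channel limit, which is invented for illustration. Real controllers also impose lane-alignment rules that are not modeled here.

```python
# Hypothetical sketch: can one multi-rate, multi-channel controller with
# 1.6 Tb/s of aggregate capacity serve a given mix of ports?
# Assumed rate list and channel limit; lane-alignment rules are not modeled.

SUPPORTED_GBPS = {10, 25, 50, 100, 200, 400, 800, 1600}
CAPACITY_GBPS = 1600
MAX_CHANNELS = 16  # hypothetical


def fits(ports_gbps: list[int], capacity: int = CAPACITY_GBPS,
         max_channels: int = MAX_CHANNELS) -> bool:
    """True if every port rate is supported and the mix fits the controller."""
    unsupported = [p for p in ports_gbps if p not in SUPPORTED_GBPS]
    if unsupported:
        raise ValueError(f"unsupported port rates: {unsupported}")
    return len(ports_gbps) <= max_channels and sum(ports_gbps) <= capacity


print(fits([1600]))                # True: one full-rate port
print(fits([800, 400, 200, 200]))  # True: exactly 1.6 Tb/s across four ports
print(fits([800, 800, 100]))       # False: 1.7 Tb/s exceeds capacity
```

Sharing one controller across many port configurations is part of why area and power per controller matter: the same block may be replicated many times on a single chip.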

These space and power savings are valuable for companies designing high-density AI silicon or networking switches. Such chips can contain tens of Ethernet controllers, so the costs add up quickly.

The final industry standard for 1.6T Ethernet is years out. But not everyone is willing to wait. Synopsys said it’s already supplying new ultra-fast Ethernet PHY and controller IP to several lead customers.