The concept of ray tracing has been bandied about for more than a generation. Since 1968, it has been viewed as a promising technology, especially for visual arts disciplines and, in particular, the entertainment industry.

Why? Because the ray-tracing algorithm, as developed into its recursive form, can closely model the behavior of light in the real world, so shadows, reflections, and indirect illumination are available to the artist without any special effort.

These effects, which we take for granted in life, are available only with a great deal of explicit effort in a scanline rendering environment. So, a practical, economical way of generating them implicitly in real time is highly desirable.

Unfortunately, the computational and system cost of all implementations to date has limited ray tracing mostly to offline rendering or to very high-cost, high-power systems that can run in real time but lack interactivity. In fact, the wait for practical ray tracing has been so long that at last year’s influential SIGGRAPH conference in Anaheim, the headline session on ray tracing was wryly titled “Ray tracing is the future and ever will be.”

What a difference a year makes! Today, there’s a novel, comprehensive approach to real-time, interactive ray tracing that addresses these issues and proposes a scalable, cost-efficient solution appropriate for a range of application segments including game consoles and mobile consumer devices.

This new hardware architecture will start to be available over the coming months. But what does it provide to the artist? How will it fit into existing workflows? And, what sort of effects does it make possible?

A Brief Primer

The process is simple. Once the model is transformed into world space (the 3D coordinate system used for animation and manipulation of models) and a viewport has been defined, a ray is traced from the camera through each pixel position in the viewport into the volume occupied by the model and intersected with the closest object.
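As a minimal sketch of that first step, the Python function below builds the ray through the center of one pixel. It assumes a pinhole camera at the world-space origin looking down the negative Z axis with a square-pixel viewport; the function name `primary_ray`, its parameters, and the 60-degree default field of view are illustrative choices, not part of any particular renderer.

```python
import math

def primary_ray(px, py, width, height, fov_deg=60.0):
    """Return (origin, direction) for the ray through the center of pixel (px, py).

    Assumption: pinhole camera at the origin, looking down -Z,
    with +X to the right and +Y up. Pixel (0, 0) is the top-left corner.
    """
    aspect = width / height
    half_height = math.tan(math.radians(fov_deg) / 2.0)
    # Map the pixel center to [-1, 1] in both axes (Y flipped so up is positive).
    ndc_x = (px + 0.5) / width * 2.0 - 1.0
    ndc_y = 1.0 - (py + 0.5) / height * 2.0
    # Point on the image plane at distance 1 in front of the camera.
    d = (ndc_x * aspect * half_height, ndc_y * half_height, -1.0)
    length = math.sqrt(d[0] ** 2 + d[1] ** 2 + d[2] ** 2)
    return (0.0, 0.0, 0.0), (d[0] / length, d[1] / length, d[2] / length)
```

Calling this once per pixel yields the full set of primary rays for the viewport; each one is then intersected with the scene to find the closest object.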

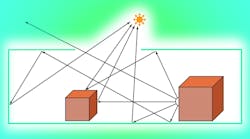

This primary ray determines the visibility of objects from the perspective of the camera. Assuming an intersection is found, new rays are generated: a reflection ray, a refraction ray if appropriate, and one illumination ray for each light source (Fig. 1). These secondary rays are then traced and intersected, and new rays are generated until a light source is intersected or some other limit is reached.

At each bounce, a color contribution to the surface is computed and added into an accumulation buffer. When all rays are resolved, the buffer contains the final image. Once the model is created, the material properties are defined, and the lights are placed, all of the lighting effects occur automatically during the rendering process.
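The recursion described above can be sketched in a few lines of Python. This is an illustrative toy, not a production renderer: the scene holds only spheres, refraction is omitted, shading is plain diffuse, and the returned sum stands in for the accumulation buffer. The dictionary keys (`center`, `radius`, `color`, `reflectivity`, `position`, `intensity`) and the `max_depth` limit are assumptions made for the example.

```python
import math

def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def add(a, b): return tuple(x + y for x, y in zip(a, b))
def scale(a, s): return tuple(x * s for x in a)
def dot(a, b): return sum(x * y for x, y in zip(a, b))
def normalize(a): return scale(a, 1.0 / math.sqrt(dot(a, a)))

def hit_sphere(origin, direction, center, radius):
    """Nearest hit distance along a unit-length ray, or None on a miss."""
    oc = sub(origin, center)
    b = dot(oc, direction)
    disc = b * b - (dot(oc, oc) - radius * radius)
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-4:  # small offset avoids re-hitting the starting surface
            return t
    return None

def closest_hit(origin, direction, spheres):
    """Return (distance, sphere) for the nearest intersection, or None."""
    best = None
    for s in spheres:
        t = hit_sphere(origin, direction, s["center"], s["radius"])
        if t is not None and (best is None or t < best[0]):
            best = (t, s)
    return best

def trace(origin, direction, spheres, lights, depth=0, max_depth=3):
    """Color seen along a ray: direct light plus recursively traced reflection."""
    hit = closest_hit(origin, direction, spheres)
    if hit is None or depth > max_depth:
        return (0.0, 0.0, 0.0)  # background, or recursion limit reached
    t, s = hit
    point = add(origin, scale(direction, t))
    normal = normalize(sub(point, s["center"]))
    color = (0.0, 0.0, 0.0)
    # One illumination (shadow) ray per light source.
    for light in lights:
        to_light = sub(light["position"], point)
        dist = math.sqrt(dot(to_light, to_light))
        l_dir = scale(to_light, 1.0 / dist)
        blocker = closest_hit(point, l_dir, spheres)
        if blocker is None or blocker[0] > dist:
            diffuse = max(dot(normal, l_dir), 0.0)
            color = add(color, scale(s["color"], diffuse * light["intensity"]))
    # One reflection ray, traced recursively.
    if s["reflectivity"] > 0:
        r_dir = sub(direction, scale(normal, 2.0 * dot(direction, normal)))
        bounce = trace(point, r_dir, spheres, lights, depth + 1, max_depth)
        color = add(color, scale(bounce, s["reflectivity"]))
    return color
```

Note that nothing in `trace` is specific to shadows or reflections as separate features; both fall out of following the secondary rays, which is the sense in which the effects come without special effort.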

The general acceptance of ray tracing as a desirable technique is illustrated by its use alongside a number of ray-tracing-like techniques in the offline light map baking process used in today’s content generation middleware packages. These processes are used to generate the input images for light map baking and point the way to an incremental method of introducing ray tracing into existing real-time rendering systems, such as OpenGL and DirectX, without abandoning the overall structure of those systems.

This is obviously desirable, since existing tools and runtimes, as well as all of the sophisticated techniques already known to developers, can then be preserved and enhanced, providing a low-impact migration path to the newer techniques.

How It Plays Out For Developers And Gamers

From a graphical developer’s perspective, the barrier to using ray tracing is lowest if it is possible to retain all of the currently used development flow, including tools and application programming interfaces (APIs). This means that, rather than switch wholesale to a new rendering scheme (such as the primary ray method of visibility determination described above), it is desirable to create a hybrid system where an incremental scanline algorithm is used for visibility determination but ray tracing can be selectively added to implement specific effects.

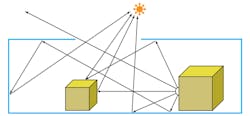

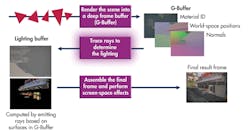

A graphical API such as OpenGL does not have the primary ray concept used to determine visibility in a pure ray tracing renderer. The scanline rasterization algorithm that takes place in the screen space coordinate system performs this function, whereas rays must be cast in world space. One way to resolve this issue is to use a multipass rendering technique commonly used in game engine runtimes known as deferred shading (Fig. 2).

This technique consists of a first geometry pass that performs the visibility determination followed by a second shading pass that executes the shader programs attached to the visible geometry. Its objective is to reduce the overhead of shading objects that are invisible, but it also can be used to cast the starting rays in a hybrid system. Since the intermediate information stored after the first pass includes world space coordinates as well as things like surface normals, the shader program has everything needed to cast the first rays.

This method benefits from the fact that only visible pixels will cast rays. But it also means that the developer has the choice of casting rays only for selected objects and can therefore easily control how effects are used and where the ray budget is spent. It also fits easily into existing game engine runtimes, so the workflow remains the same and the investment in all existing game assets is preserved. This level of control means it is possible to progressively move assets from incremental techniques to ray-tracing techniques, giving the developer the flexibility needed to successfully manage that transition.
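The hybrid idea can be illustrated with a small Python sketch of the second (shading) pass. It assumes the geometry pass has already produced a G-buffer holding a world-space position, a surface normal, and an object identifier for each visible pixel, with `None` where nothing is visible. The names `shading_pass`, `ray_traced_ids`, and `occluded`, and the use of spheres as occluders, are hypothetical; a real engine would do this work in shader programs rather than in Python.

```python
import math

def _sub(a, b): return tuple(x - y for x, y in zip(a, b))
def _dot(a, b): return sum(x * y for x, y in zip(a, b))

def occluded(point, light_pos, spheres, eps=1e-4):
    """True if any (center, radius) sphere blocks the segment point -> light."""
    to_light = _sub(light_pos, point)
    dist = math.sqrt(_dot(to_light, to_light))
    d = tuple(x / dist for x in to_light)
    for center, radius in spheres:
        oc = _sub(point, center)
        b = _dot(oc, d)
        disc = b * b - (_dot(oc, oc) - radius * radius)
        if disc < 0:
            continue
        root = math.sqrt(disc)
        if any(eps < t < dist for t in (-b - root, -b + root)):
            return True
    return False

def shading_pass(gbuffer, ray_traced_ids, spheres, light_pos):
    """Shade each G-buffer entry; cast a shadow ray only for selected objects.

    Returns (intensities, rays_cast) so the ray budget can be inspected.
    """
    intensities, rays_cast = [], 0
    for texel in gbuffer:
        if texel is None:  # nothing visible here: no shading, no rays
            intensities.append(0.0)
            continue
        to_light = _sub(light_pos, texel["position"])
        dist = math.sqrt(_dot(to_light, to_light))
        lambert = max(_dot(texel["normal"], to_light) / dist, 0.0)
        # Only objects opted in to ray tracing spend any of the ray budget.
        if texel["object_id"] in ray_traced_ids:
            rays_cast += 1
            if occluded(texel["position"], light_pos, spheres):
                lambert = 0.0
        intensities.append(lambert)
    return intensities, rays_cast
```

Adding or removing an identifier in `ray_traced_ids` is the sketch's stand-in for moving an asset between the incremental path and the ray-traced path; the rest of the pass is unchanged.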

Some Compelling Use Cases

The most obvious use of ray tracing in a game is to implement fully dynamic lights with shadowing and reflections generated at runtime. The improvements in realism and interactivity that this makes possible include shadows and reflections that are free of sampling artifacts and the ability to remove constraints on the player’s freedom of movement. This enhanced freedom of movement is an enabling technology for applications such as virtual and augmented reality and opens up whole new applications in areas such as online shopping.

In addition, the developer now can access a broad range of other capabilities that would either be impossible, low quality, or too inefficient using standard techniques, such as:

• Lens effects: By the simple expedient of placing a lens model with the appropriate characteristics between the eye point and the scene rendered, effects like depth of field, fisheye distortion, or spherical aberration can be created or corrected.

• Stereo and lenticular display rendering: Newly popular computational photography techniques such as light field rendering require the user to generate a number of images of the scene from different viewpoints: two in the case of stereo, but many more for use with multi-viewpoint lenticular displays. Scanline rendering incurs the overhead of transforming the geometry multiple times into the various viewports, whereas ray tracing can reuse the transformation results and simply cast rays from each needed viewpoint.

• Targeted rendering: Targeted rendering to points of interest is easily implemented by varying the number of rays spent on each area of the scene, depending on whether it is the main focus of attention or not.

• Line-of-sight calculations: Line-of-sight calculations are not necessarily a graphical technique, but they can be used to improve the artificial intelligence of actors in a game by allowing them to cast a ray to establish if they are visible to another actor or to a light source. In another use, casting rays can be useful in implementing collision detection.
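To make the line-of-sight case from the list above concrete, here is a short Python sketch. It treats obstacles as axis-aligned boxes and tests the segment between two actors against each box with the standard slab method; the function names and the box representation as `(min_corner, max_corner)` pairs are assumptions for illustration, and a game would normally query its own scene structure instead.

```python
def segment_hits_box(p0, p1, box_min, box_max):
    """Slab test: True if the segment p0 -> p1 touches the axis-aligned box."""
    t_enter, t_exit = 0.0, 1.0  # parametric range covered by the segment
    for axis in range(3):
        delta = p1[axis] - p0[axis]
        if abs(delta) < 1e-12:
            # Segment is parallel to this slab: it misses if it starts outside.
            if p0[axis] < box_min[axis] or p0[axis] > box_max[axis]:
                return False
            continue
        t0 = (box_min[axis] - p0[axis]) / delta
        t1 = (box_max[axis] - p0[axis]) / delta
        if t0 > t1:
            t0, t1 = t1, t0
        t_enter = max(t_enter, t0)
        t_exit = min(t_exit, t1)
        if t_enter > t_exit:
            return False
    return True

def has_line_of_sight(observer, target, obstacles):
    """True if no obstacle box lies between the observer and the target."""
    return not any(segment_hits_box(observer, target, lo, hi)
                   for lo, hi in obstacles)
```

The same query answers "can this actor see that actor?" and "is this actor lit by that light?"; only the endpoints change.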

Conclusion

This hardware ray-tracing solution is available today for silicon implementation in a cost and power profile suitable for handheld and mobile devices, which are today’s dominant platforms for games as well as other consumer-centric activities.

The performance and features it offers, along with a low-risk migration path, are very attractive to developers who want to simplify their content creation flow while creating more compelling and realistic games. As this technology is rolled out over the coming months, it soon will be possible to say that the promise of ray tracing is being fulfilled. The future will finally have arrived.

Peter McGuinness is director of multimedia technology marketing for Imagination Technologies Group plc (LSE:IMG; www.imgtec.com). Imagination Technologies creates and licenses processor solutions including graphics, video, vision, CPU and embedded processing, multi-standard communications, cross-platform V.VoIP and VoLTE, and cloud connectivity.