We still have much to learn about how the biological brain works, but what we do know is leading to breakthroughs in the implementation of artificial intelligence. Technology is approaching an inflection point in history, at which humankind can begin to recreate nature’s greatest achievement and produce systems that emulate the way we process information.

Research into artificial neural networks is pursuing multiple avenues, two of which show great promise: convolutional neural networks (CNNs) and spiking neural networks (SNNs). While both CNNs and SNNs are inspired by the way the brain processes information, they differ in some significant ways in their implementation.

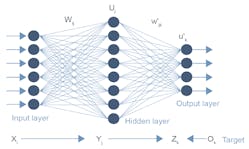

The input stimuli to the brain, whether visual, auditory, olfactory, or in any other form, are processed through successive (hierarchical) layers of neurons, interconnected by a dense network of synapses (Fig. 1). A given stimulus activates some neurons while others remain unresponsive, creating a highly discriminating filter of sorts that is able to detect, identify, and store information.

1. Conceptually, CNNs and SNNs are similar in their organization, but they differ in the nature of the data they process and how they process it. (Source: Ziff Davis ExtremeTech)

CNN Implementation

For CNNs, this functionality is emulated using a purely mathematical (linear algebra) approach. The input data is multiplied by the respective (synaptic) weights, the products are summed, and the result is passed through a nonlinear activation function (for example, a rectified linear unit). In a CNN, most of these weighted sums are computed as convolutions, with small filters slid across the input. This is repeated over many layers, resulting in a “deep” neural network.
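The weighted-sum-plus-activation step can be sketched in a few lines. This is a minimal illustration under stated assumptions: it uses a fully connected layer for brevity (a convolutional layer performs the same multiply-accumulates but shares its weights across input positions), ReLU as the activation, and hypothetical function names and weights.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def relu(x: float) -> float:
    """Rectified linear unit: a deterministic, nonlinear activation."""
    return x if x > 0.0 else 0.0


def dense_layer(inputs: Vector, weights: Matrix, biases: Vector) -> Vector:
    """One fully connected layer: each output neuron computes a weighted sum
    of the inputs (a chain of multiply-accumulates), adds a bias, and applies ReLU."""
    outputs = []
    for row, bias in zip(weights, biases):
        acc = bias
        for x, w in zip(inputs, row):
            acc += x * w  # one multiply-accumulate (MAC)
        outputs.append(relu(acc))
    return outputs


def forward(inputs: Vector, layers: List[Tuple[Matrix, Vector]]) -> Vector:
    """Feed the data through each layer in turn; stacking many layers is what makes a network 'deep'."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


if __name__ == "__main__":
    # Hypothetical two-layer network: 2 inputs -> 2 hidden neurons -> 1 output.
    layers = [
        ([[0.5, -0.25], [1.0, 1.0]], [0.0, -1.0]),
        ([[1.0, 2.0]], [0.5]),
    ]
    print(forward([2.0, 4.0], layers))  # [10.5]
```

In the example, the first hidden neuron’s weighted sum is exactly zero, so ReLU leaves it inactive; only the second hidden neuron passes a value to the output.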

In the training phase, this operation is repeated for each data sample in a dataset that can amount to terabytes, and each output is compared to the expected correct output (the label). The discrepancy between the label and the calculated output is accumulated into a cost function. Training minimizes this cost: back-propagation computes how much each weight contributed to the discrepancy, and every weight is then adjusted in the direction that reduces it.
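The compare-accumulate-minimize loop can be illustrated on the smallest possible model. The sketch below assumes a single linear neuron, a mean-squared-error cost, and plain full-batch gradient descent; for one neuron, back-propagation collapses to a single application of the chain rule, whereas a deep network applies the same rule layer by layer. The dataset, learning rate, and function names are hypothetical.

```python
from typing import List, Tuple

Sample = Tuple[List[float], float]  # (inputs, label)


def predict(weights: List[float], bias: float, x: List[float]) -> float:
    """Calculated output of a single linear neuron."""
    return bias + sum(w * xi for w, xi in zip(weights, x))


def cost(weights: List[float], bias: float, data: List[Sample]) -> float:
    """Mean squared discrepancy between calculated outputs and labels."""
    return sum((predict(weights, bias, x) - y) ** 2 for x, y in data) / len(data)


def train(data: List[Sample], epochs: int = 1000, lr: float = 0.1) -> Tuple[List[float], float]:
    """Full-batch gradient descent. For one neuron, back-propagation reduces to
    a single application of the chain rule: d(err^2)/dw_i = 2 * err * x_i."""
    n_inputs = len(data[0][0])
    weights, bias = [0.0] * n_inputs, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_inputs, 0.0
        for x, y in data:
            err = predict(weights, bias, x) - y  # discrepancy for this sample
            for i, xi in enumerate(x):
                grad_w[i] += 2.0 * err * xi / len(data)
            grad_b += 2.0 * err / len(data)
        # Step every parameter against its gradient to reduce the cost.
        weights = [w - lr * g for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b
    return weights, bias


if __name__ == "__main__":
    # Toy labeled dataset generated from y = 2*x0 - x1 + 1.
    data = [([0.0, 0.0], 1.0), ([1.0, 0.0], 3.0), ([0.0, 1.0], 0.0), ([1.0, 1.0], 2.0)]
    print("initial cost:", cost([0.0, 0.0], 0.0, data))  # 3.5
    w, b = train(data)
    print("learned:", w, b, "final cost:", cost(w, b, data))
```

Even this toy problem needs hundreds of passes over the data to converge, which hints at why training real networks on terabyte-scale datasets is so compute-intensive.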

Needless to say, this repetitive process may take a very long time, depending on the complexity of the network, and demands enormous computing power. Today, these tasks are performed by powerful servers in the cloud, using CPUs, GPUs, FPGAs, and custom-designed ASICs (such as Google’s TPU), and may take hours, days, or even weeks.

The basic processing operation in this methodology, a multiply-accumulate (MAC), is typically performed on 32-bit floating-point weights and data (Fig. 2). This is why GPUs, although not originally designed for CNNs, have become very popular for executing such training tasks. The approach is costly in software development and execution time, and even costlier when implemented in silicon: MAC units consume a large amount of power and occupy a significant amount of silicon real estate.
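The scale of the MAC workload is easy to estimate. The sketch below performs a single-channel, stride-1, unpadded (“valid”) convolution with every MAC written out and counted; `conv_layer_macs` gives the general count for a layer with `c_in` input channels, `c_out` output channels, and a k×k kernel. Both function names are hypothetical, and because Python floats are 64-bit, this models the operation count rather than 32-bit floating-point arithmetic.

```python
from typing import List, Tuple

Image = List[List[float]]


def conv2d_valid(image: Image, kernel: Image) -> Tuple[Image, int]:
    """Single-channel, stride-1, unpadded 2-D convolution (implemented as
    cross-correlation, as most CNN frameworks do). Returns the output feature
    map and the number of MACs performed."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    macs = 0
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]  # one MAC
                    macs += 1
            row.append(acc)
        out.append(row)
    return out, macs


def conv_layer_macs(out_h: int, out_w: int, c_in: int, c_out: int, k: int) -> int:
    """MACs for a full convolutional layer: every one of the out_h * out_w * c_out
    output values needs k * k * c_in multiply-accumulates."""
    return out_h * out_w * c_out * k * k * c_in


if __name__ == "__main__":
    image = [[1.0] * 4 for _ in range(4)]
    kernel = [[1.0] * 3 for _ in range(3)]
    print(conv2d_valid(image, kernel))  # ([[9.0, 9.0], [9.0, 9.0]], 36)
    print(conv_layer_macs(224, 224, 64, 64, 3))  # 1849688064
```

For a layer with a 3×3 kernel, 64 input and 64 output channels, and a 224×224 output map, the count is 1,849,688,064: nearly 1.85 billion MACs for a single layer and a single image.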

2. The CNN executes this type of operation many times. The operation is usually A × W + B. Hardware acceleration requires MAC units, which GPUs provide in abundance.

There’s a growing trend toward reducing the precision of the synaptic weights to 8-bit integers and, more recently, to single bits (binary networks). However, the data usually remains coded in at least 8-bit integers, if not 16 or 32 bits.
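Reduced-precision weights work because a floating-point tensor can be approximated by small integers that share one scale factor. The sketch below assumes symmetric per-tensor int8 quantization (one common scheme among several) for both weights and data, plus sign-only binarization for the binary-network case. The function names are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map floats onto integers in [-127, 127]
    that share a single floating-point scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0.0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def int8_dot(x_q: List[int], w_q: List[int]) -> int:
    """Integer MACs. Products are accumulated in a wider integer; Python ints are
    unbounded, whereas hardware typically uses a 32-bit accumulator."""
    return sum(a * b for a, b in zip(x_q, w_q))


def binarize(weights: List[float]) -> List[int]:
    """Binary-network style weights: keep only the sign, as +1 or -1."""
    return [1 if w >= 0.0 else -1 for w in weights]


if __name__ == "__main__":
    x = [1.0, 2.0, 3.0]    # activations (data)
    w = [0.5, -1.0, 0.25]  # synaptic weights
    x_q, x_scale = quantize_int8(x)
    w_q, w_scale = quantize_int8(w)
    exact = sum(a * b for a, b in zip(x, w))          # -0.75 in floating point
    approx = int8_dot(x_q, w_q) * x_scale * w_scale  # rescale the integer result
    print(exact, approx, binarize(w))
```

The integer dot product, rescaled once at the end, lands within about 0.01 of the floating-point result here. Note that the data still has to be quantized to 8 bits; binarizing only the weights leaves the activations multi-bit.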

Neuron-Like SNNs

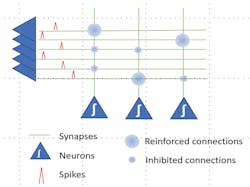

By contrast, spiking neural networks don’t use the same functions as CNNs; they more closely emulate neuron functionality. The retina in the eye, the cochlea in the ear, and other sensory organs generate trains of spikes, but the brain doesn’t perform mathematical operations on the incoming spikes. Depending on how often and how many spikes are received, input neurons will be stimulated, and the synaptic weights will favor certain connections while inhibiting or de-emphasizing others (Fig. 3). The result is a discriminating filter of sorts, very similar to that of the CNN, except that each data element is simply whether or not a spike occurred, and the network learns by either reinforcing or inhibiting synapses. The operation of an SNN therefore remains more faithful to the way the biological brain functions.
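The spike-driven behavior described above is often modeled with a leaky integrate-and-fire (LIF) neuron. The sketch below assumes that model, a common textbook choice and not necessarily the neuron used in any particular product. Each input is a binary spike train; on every time step the membrane potential decays by a leak factor, the weights of inputs that spiked are added, and the neuron fires and resets when the potential crosses a threshold. The function name and default parameters are hypothetical.

```python
from typing import List


def lif_neuron(spike_trains: List[List[int]], weights: List[float],
               threshold: float = 1.0, leak: float = 0.9) -> List[int]:
    """Discrete-time leaky integrate-and-fire neuron.
    spike_trains[i][t] is 1 if input i spiked at time step t, else 0.
    Returns the neuron's own output spike train."""
    steps = len(spike_trains[0])
    potential = 0.0
    out = []
    for t in range(steps):
        potential *= leak  # potential decays toward zero (once per neuron, not per synapse)
        for train, w in zip(spike_trains, weights):
            if train[t]:        # only inputs that actually spiked contribute
                potential += w  # add the synaptic weight; the spike itself carries no value
        if potential >= threshold:
            out.append(1)
            potential = 0.0     # reset after firing
        else:
            out.append(0)
    return out


if __name__ == "__main__":
    # The same synapse driven at two different spike rates.
    print(lif_neuron([[1, 1, 1, 1]], [0.6]))  # [0, 1, 0, 1]
    print(lif_neuron([[1, 0, 1, 0]], [0.6]))  # [0, 0, 1, 0]
```

The example shows the rate dependence described in the text: a frequently spiking input drives the neuron to fire twice in four steps, while the same input spiking half as often lets the potential leak away and produces only one output spike.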

3. SNNs more closely emulate neuron functionality. Depending on how often and how many spikes are received, input neurons will be stimulated, and the synaptic weights will favor certain connections, while they inhibit or de-emphasize others.

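However, the goal isn’t to remain blindly faithful to the way the brain functions, but to leverage its structure for low power and operational efficiency, and SNNs achieve this in two ways. First, they eliminate the costly multiply-accumulate operation by replacing it with a neuron function: because each input is a binary spike, weighting it reduces to adding the synaptic weight whenever a spike arrives, so the neuron needs mostly additions and threshold comparisons. Second, incoming data is transformed into trains of spikes, and because those spikes are typically sparse, only the inputs that actually fire trigger any computation. This data-to-spike conversion therefore helps reduce the overall calculation required.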
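Both savings can be made concrete. The sketch below assumes a simple deterministic rate code for the data-to-spike conversion (many SNNs use other encodings, such as Poisson-distributed spikes, and the conversion used in any given product is not described here). On each time step, only the weights of inputs that spiked are summed, so the work scales with the number of spikes and involves no multiplications. All function names are hypothetical.

```python
from typing import List, Tuple


def rate_encode(value: float, steps: int) -> List[int]:
    """Deterministic rate coding: a value in [0, 1] becomes a spike train
    whose spike count is about value * steps."""
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must be in [0, 1]")
    train, phase = [], 0.0
    for _ in range(steps):
        phase += value
        if phase >= 1.0:
            train.append(1)
            phase -= 1.0
        else:
            train.append(0)
    return train


def event_driven_sum(active_inputs: List[int], weights: List[float]) -> Tuple[float, int]:
    """Sum the weights of the inputs that spiked. Returns (total, additions performed)."""
    total = 0.0
    for i in active_inputs:
        total += weights[i]  # an addition, not a multiply-accumulate
    return total, len(active_inputs)


def run(values: List[float], weights: List[float], steps: int) -> Tuple[List[float], int]:
    """Encode every input, then process one time step at a time, touching only active inputs."""
    trains = [rate_encode(v, steps) for v in values]
    sums, additions = [], 0
    for t in range(steps):
        active = [i for i, train in enumerate(trains) if train[t]]
        s, n = event_driven_sum(active, weights)
        sums.append(s)
        additions += n
    return sums, additions


if __name__ == "__main__":
    print(rate_encode(0.25, 8))  # [0, 0, 0, 1, 0, 0, 0, 1]
    print(run([0.0, 0.5, 0.25, 1.0], [1.0, 1.0, 1.0, 1.0], 4))  # ([1.0, 2.0, 1.0, 3.0], 7)
```

A zero-valued input generates no spikes and therefore no work at all. Because rate coding spreads each value over several time steps, the net savings depend on how sparse the spikes are and how many steps the encoding needs.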

SNNs enable accuracy similar to that of CNNs with structures that are much smaller and easier to configure, which results in faster performance, lower power, and lower cost. SNNs can offer one to two orders of magnitude lower power consumption while performing as fast as CNNs and costing much less.

Another critical benefit of SNNs is that they can be trained without supervision, much like the human brain. To learn different classes of objects, SNNs don’t need to be trained on billions of data samples; a few samples suffice for the SNN to learn and retain the objects it’s intended to classify. Labels must still be attached to the learned classes, however, so that in deployment the SNN produces the same kind of labeled results as a CNN.
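One widely studied way for spiking networks to learn without labels is spike-timing-dependent plasticity (STDP). It is named here as an illustrative technique, not as the learning rule of any particular product. The sketch below assumes a pair-based STDP rule: if an input spike precedes an output spike, the synapse is reinforced; if it follows, the synapse is weakened, with the effect decaying exponentially as the time gap grows. The function name and parameter values are hypothetical.

```python
import math
from typing import List


def stdp_update(weight: float, pre_times: List[int], post_times: List[int],
                a_plus: float = 0.05, a_minus: float = 0.055,
                tau: float = 20.0, w_max: float = 1.0) -> float:
    """Pair-based spike-timing-dependent plasticity over all pre/post spike pairs.
    pre_times: time steps at which the input (presynaptic) neuron spiked.
    post_times: time steps at which the output (postsynaptic) neuron spiked."""
    dw = 0.0
    for t_pre in pre_times:
        for t_post in post_times:
            dt = t_post - t_pre
            if dt > 0:    # input spike preceded the output spike: reinforce the synapse
                dw += a_plus * math.exp(-dt / tau)
            elif dt < 0:  # input spike arrived after the output spike: inhibit it
                dw -= a_minus * math.exp(dt / tau)
    # Keep the weight within its allowed range.
    return min(w_max, max(0.0, weight + dw))


if __name__ == "__main__":
    print(stdp_update(0.5, pre_times=[10], post_times=[15]))  # > 0.5: reinforced
    print(stdp_update(0.5, pre_times=[15], post_times=[10]))  # < 0.5: weakened
```

No label appears anywhere in the update: the weight changes only according to the relative timing of spikes. That is what allows this kind of rule to learn from unlabeled data, with labels attached to the learned classes afterward.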

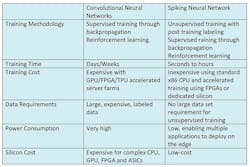

The table summarizes the differences between the two network architectures. SNNs hold a tremendous advantage for inference in edge applications such as autonomous vehicles, robotics, IoT, and video surveillance, where cost, power consumption, and speed of execution are critical factors. In addition, thanks to their capacity for unsupervised training and the flexibility with which they can be reconfigured, SNNs enable upgrades in the field and avoid costly reprogramming and/or obsolescence.

Spiking neural networks represent the next step in artificial intelligence, and thanks to their many benefits, they are particularly applicable to edge processing in the IoT. This represents the new frontier of machine learning.

Robert Beachler is Senior VP of Marketing and Business Development at BrainChip.