From Code Quality to Total Security

This article series is in the Improving Software Code Quality topic within our Series Library

What you'll learn:

- Security issues go beyond buffer overflows.

- How standards help with commonly exploited weaknesses.

- Improving code quality and safety with code-analysis tools.

Poor code quality is a widespread problem, and considerable evidence supports the claim that bad coding practices lead directly to vulnerabilities. While this isn’t new, perhaps the first time people truly became aware of it was in 2001, when the Code Red worm exploited a buffer overflow in Microsoft’s Internet Information Services (IIS).1 Although the first documented buffer overflow attack came in 1988, against the Unix finger daemon (fingerd), it was greatly limited in its ability to affect the general population. Thus, it didn’t make headlines.

On the other hand, as Code Red caused massive internet slowdowns and was widely covered by news outlets, we saw a pervasive increase of buffer overflow attacks virtually overnight. It seemed like security researchers and hackers alike were finding these bugs in a wide range of systems—including embedded systems—everywhere.

This type of attack lets a hacker run arbitrary code on an affected system by targeting code that uses fixed-length buffers to hold text or data. The hacker fills the buffer to capacity and keeps writing, placing executable code just past the end of the legitimate buffer space. The system under attack can then be made to execute that code, which in many cases allows the attacker to do anything they want.2

These attacks became an urgent concern because it wasn’t common coding practice to check and enforce the limits of buffers. Now, many coding standards like the Common Weakness Enumeration (CWE) from mitre.org recommend checking buffers for this type of vulnerability.3

Unfortunately, it still isn’t common practice for developers to look for this problem when writing code. It often takes code-analysis tools to find these flaws and alert developers so that they can fix them. Because a simple code-quality improvement like this removes one of the most common avenues of attack, it greatly improves the security of the code. A good coding practice, therefore, is to check and enforce the length of buffers in the code.
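As a minimal sketch of what checking and enforcing a buffer length can look like in C, the function below rejects any input that doesn't fit the destination rather than writing past its end. The name `copy_bounded` and the return-code convention are assumptions made for illustration; they don't come from any particular standard or library.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical helper: copies the string src into dst only if it fits,
 * including the terminating NUL. Returns 0 on success and -1 if the input
 * is rejected. Nothing is ever written beyond dst_size bytes. */
int copy_bounded(char *dst, size_t dst_size, const char *src)
{
    if (dst == NULL || src == NULL || dst_size == 0u) {
        return -1;
    }

    /* Measure the input, but never look further than the buffer can hold. */
    size_t len = 0u;
    while (len < dst_size && src[len] != '\0') {
        ++len;
    }

    if (len == dst_size) {
        /* No room for the terminator: refuse instead of overflowing. */
        dst[0] = '\0';
        return -1;
    }

    memcpy(dst, src, len + 1u); /* len + 1 <= dst_size, so this stays in bounds */
    return 0;
}
```

A caller would pass the real size of the destination, for example `copy_bounded(buf, sizeof buf, input)`, and treat a return of -1 as invalid input rather than truncating silently.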

Not Just Buffer Overflows

Don’t misunderstand: The problem isn’t simply buffer overflows. It’s a systemic problem. Sloppy coding practices, in general, lead to countless security holes that hackers can use to compromise a system. A paper published by the Software Engineering Institute (SEI) puts it very clearly:

“…Quality performance metrics establish a context for determining very high quality products and predicting safety and security outcomes. Many of the Common Weakness Enumerations (CWEs), such as the improper use of programming language constructs, buffer overflows, and failures to validate input values, can be associated with poor quality coding and development practices. Improving quality is a necessary condition for addressing some software security issues.”4

The paper continues to show that security issues—since many of them are caused by buggy software—can be treated like more ordinary coding defects. As a result, applying traditional Quality Assurance techniques will help address at least some of these security issues.

Normal software quality assurance processes allow you to estimate the number of defects remaining in the system. Can the same be done with security vulnerabilities? While the SEI stops short of confirming a mathematical relationship between code quality and security, it does state that 1% to 5% of software defects are security vulnerabilities. According to the SEI, its evidence indicates that when security vulnerabilities are tracked, the level of code quality in the system can be estimated accurately.4

This strongly suggests that code quality is a necessary (but not sufficient) condition for security. It undercuts the notion that security can be treated as a bolt-on at the end of development. Rather, security must be threaded through the DNA of a project, from design, to code, and all the way to production.

Coding Standards Help

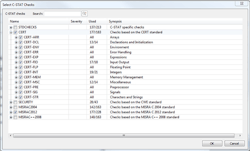

Many of the most common security holes are addressed in coding standards like the Common Weakness Enumeration (CWE) from mitre.org. They point out additional areas of concern like divide-by-zero, data injection, loop irregularities, null pointer exploits, and string parsing errors. MISRA C and MISRA C++ also promote safe and reliable coding practices to prevent security vulnerabilities from creeping into code.

While these can catch many of the commonly exploited weaknesses, a developer must think bigger when writing their code (Fig. 1): How can a hacker exploit what I just wrote? Where are the holes? Am I making assumptions about what the inputs will look like and how the outputs will be used?

A good rule of thumb is that if you’re making assumptions, those assumptions should be turned into code that ensures what you’re expecting is actually what you’re getting. If you don’t do it, then a hacker will do it for you.
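To illustrate turning assumptions into code, here is a small sketch in C. The function `mean_of_samples`, the limit `MAX_SAMPLES`, and the error convention are all hypothetical. Each unstated assumption (valid pointers, at least one sample, a bounded sample count) becomes an explicit check before any data is touched.

```c
#include <stddef.h>
#include <stdint.h>

#define MAX_SAMPLES 1024u /* hypothetical upper bound chosen for this sketch */

/* Hypothetical helper: writes the integer mean of `count` samples to
 * *mean_out. Returns 0 on success, -1 if any assumption is violated. */
int mean_of_samples(const uint16_t *samples, size_t count, uint16_t *mean_out)
{
    /* Assumption: both pointers are valid. */
    if (samples == NULL || mean_out == NULL) {
        return -1;
    }
    /* Assumption: there is at least one sample (avoids divide-by-zero). */
    if (count == 0u) {
        return -1;
    }
    /* Assumption: the count is small enough that the 32-bit sum
     * cannot overflow (1024 * 65535 fits comfortably in uint32_t). */
    if (count > MAX_SAMPLES) {
        return -1;
    }

    uint32_t sum = 0u;
    for (size_t i = 0u; i < count; ++i) {
        sum += samples[i];
    }

    *mean_out = (uint16_t)(sum / count);
    return 0;
}
```

The checks are ordinary `if` statements rather than `assert()` calls because assertions are typically compiled out of release builds, which is exactly where hostile input arrives.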

But what about open-source software? The typical case for using open-source components in a design relies on the “proven in use” argument: So many people use it, it must be good. In the same paper, the SEI addresses this:

“One of the benefits touted of Open Source, besides being free, has been the assumption that ‘having many sets of eyes on the source code means security problems can be spotted quickly and anyone can fix bugs; you're not reliant on a vendor.’ However, the reality is that without a disciplined and consistent focus on defect removal, security bugs and other bugs will be in the code.”4

In other words, the SEI says that the “proven in use” argument carries little weight on its own. When it comes to applying quality assurance to open-source code, it calls to mind the story about Anybody, Somebody, Nobody, and Everybody: Everybody assumes Somebody is doing the work, so Nobody does it. Moreover, testing isn’t enough to prove out the code.

The SEI says that code-quality standards like the CWE find issues in code that typically go undetected in standard testing and are usually found only when hackers exploit the vulnerability.4 As an illustration, in May 2020, researchers from Purdue University demonstrated 26 vulnerabilities in open-source USB stacks that are used in Linux, macOS, Windows, and FreeBSD.5 When it comes to security, code quality is key and all code matters.

Code-Analysis Tools Help to Comply with Standards

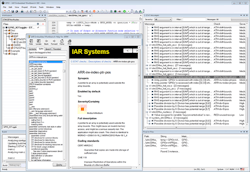

What can software engineers do to address code quality and improve application security? The easy answer is to use code-analysis tools, which come in two basic flavors. Static analysis looks only at the source code of the application. Runtime (or dynamic) analysis instruments the code looking for weaknesses like null pointers and data injection methods (Fig. 2).
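As a small, hedged illustration of the kind of defect such tools aim to surface, consider a library call whose result may be NULL. The function name `separator_offset` and the "key:value" input format are assumptions made for this sketch, and it isn't the output of any particular analysis tool.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical helper: returns the offset of the first ':' in a
 * "key:value" line, or -1 if the line is NULL or has no separator.
 *
 * A risky variant would compute (strchr(line, ':') - line) directly.
 * That works in every test that uses well-formed input and only
 * misbehaves when the separator is missing, because strchr() then
 * returns NULL. */
long separator_offset(const char *line)
{
    if (line == NULL) {
        return -1;
    }

    const char *sep = strchr(line, ':');
    if (sep == NULL) {
        return -1; /* the check the risky variant forgets */
    }

    return (long)(sep - line);
}
```

Because the failing path is only reached with malformed input, a defect like this can pass ordinary functional testing, which is why the unchecked return value is worth catching through analysis.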

High-quality code-analysis tools include checks for CWE, MISRA, and CERT C, the last being another coding standard designed to promote secure coding practices. Together, these three rulesets form a strong combination of coding practices that promote security. The rulesets overlap in places, but each also contributes unique rules that help ensure a high degree of security in the code. Using these standards also helps raise overall code quality, and the checks may even uncover latent defects in the code.

High-Quality Code is Secure Code

You can’t have security unless you have code quality, and you can’t pass the code quality buck onto someone else because their bugs are likely to become your security nightmare. There’s hope because code-analysis tools can help you quickly identify issues before they bite you.

The road to security always passes through the gateway of code quality.


References

1. https://www.caida.org/research/security/code-red/

2. https://malware.wikia.org/wiki/Buffer_overflow

3. https://cwe.mitre.org/data/definitions/121.html

4. https://resources.sei.cmu.edu/asset_files/TechnicalNote/2014_004_001_428597.pdf

5. https://www.techradar.com/news/usb-systems-may-have-some-serious-security-flaws-especially-on-linux

About the Author

Shawn Prestridge

Senior Field Applications Engineer/U.S. Field Applications Engineer Team Leader, IAR Systems

Shawn Prestridge is the U.S. Field Applications Engineer Team Leader for IAR Systems, where in addition to managing daily operations for his team, he trains customers and partners in using IAR’s products more effectively so that they can rapidly deliver embedded systems to the market. Shawn has worked with IAR for 12 years. He earned his degree at Southern Methodist University in Dallas, Texas.