RF communications technology is evolving at a rapid pace, and we are all enjoying the benefits. If you have flown on a commercial airline lately, you may have connected to in-flight high-speed Internet via satellite. Perhaps you have watched a cable news reporter deliver a live high-definition broadcast from the other side of the world. Maybe you have heard about the jam-resistant communications links on which the military relies for secure communications.

Applications like these, demanding higher data throughput rates and link reliability, are pushing modem and radio manufacturers to reinvent their products. These advances come in the form of firmware features like adaptive coding and modulation (ACM) or new hardware modules capable of generating ultra-wideband signals that deliver massive amounts of data.

Such technologies are effective—and complicated—methods for optimizing data-link efficiency. Of course, sophisticated designs require equally sophisticated testing methodologies. Are the techniques employed in modern test labs capable of adequately testing tomorrow’s technology?

Traditional Test Methods: Are They Enough?

Since the early days of RF communications systems, static test techniques have proven effective for validating performance. A static test applies a fixed impairment to the communications link for some period of time while the system response is measured. For example, to characterize receiver sensitivity, test engineers may insert a step attenuator between the transmitter and the receiver and record the bit error rate (BER) at each signal power level. Likewise, tunable mixers apply fixed frequency offsets to emulate Doppler shift, and fixed delay coils emulate propagation delay for ranging tests.
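
A static sensitivity test reduces to a simple loop: set one attenuation, hold it, record one BER point. The sketch below assumes a hypothetical reference Eb/N0 of 14 dB at 0 dB attenuation and substitutes the theoretical BPSK-in-AWGN curve, BER = 0.5·erfc(√(Eb/N0)), for the measured value a bit error rate tester would report. The function names are illustrative, not an instrument API.

```python
import math

def bpsk_ber(ebn0_db: float) -> float:
    """Theoretical BPSK bit error rate in AWGN: 0.5 * erfc(sqrt(Eb/N0))."""
    ebn0_linear = 10 ** (ebn0_db / 10)
    return 0.5 * math.erfc(math.sqrt(ebn0_linear))

def static_sensitivity_sweep(ref_ebn0_db: float, step_db: float, n_steps: int):
    """Emulate a step-attenuator sweep, one (attenuation_dB, Eb/N0_dB, BER) point per step.

    Assumptions (hypothetical): attenuation maps one-for-one onto Eb/N0 because
    the receiver noise floor is fixed, and the theoretical BPSK curve stands in
    for a measured BER.
    """
    points = []
    for i in range(n_steps):
        atten_db = i * step_db
        ebn0_db = ref_ebn0_db - atten_db
        points.append((atten_db, ebn0_db, bpsk_ber(ebn0_db)))
    return points

if __name__ == "__main__":
    # Hypothetical setup: 14 dB Eb/N0 with no attenuation, 1 dB attenuator steps.
    for atten, ebn0, ber in static_sensitivity_sweep(14.0, 1.0, 8):
        print(f"atten {atten:4.1f} dB  Eb/N0 {ebn0:5.1f} dB  BER {ber:.2e}")
```

Each row is a single point in time: the channel is held constant while the BER is recorded, which is precisely the property the rest of this article calls into question.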

Because these static methods have worked well in the past, it is not surprising that they are still widely used. However, although these tests simulate a variety of environmental effects, each does so at a single point in time. Recent events highlight the importance of testing under the dynamic, time-varying conditions that RF links encounter in actual operation.

Consider, for example, the Cassini-Huygens mission, whose Huygens probe landed on Saturn’s moon Titan in 2005. The spacecraft was halfway across the solar system before engineers discovered a potentially fatal flaw in the communications system caused by dynamic signal distortions due to Doppler shift [1]. This problem limited the data bandwidth available to the probe, and its severity required engineers to alter the flight path of the spacecraft to work around the issue. The traditional static Doppler test methods used by the engineering team did not model the dynamic Doppler compression encountered on the mission.

Modern Technologies Driving New Testing Methods

The Doppler problem that plagued Cassini-Huygens is even more pronounced in the wideband carriers used in today's communications designs. Doppler shift is proportional to frequency, so the upper edge of a wideband carrier shifts more than its lower edge; when platforms travel at velocities common to space missions, the signal can suffer significant spectral compression. As a result, less bandwidth is available to those carriers, and link throughput decreases.
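
To first order (radial velocity v much smaller than the speed of light c, with v > 0 for a receding platform), every frequency component is scaled by the same factor. The band edges of a carrier centered at f_c with bandwidth B therefore move by different absolute amounts, and the occupied bandwidth shrinks in proportion to v/c:

```latex
f_r \approx f\left(1 - \frac{v}{c}\right), \qquad
f_d = f_r - f \approx -\frac{v}{c}\, f

B_r = \left(f_c + \tfrac{B}{2}\right)\left(1 - \tfrac{v}{c}\right)
    - \left(f_c - \tfrac{B}{2}\right)\left(1 - \tfrac{v}{c}\right)
    = B\left(1 - \frac{v}{c}\right)

\Delta B = B - B_r = B\,\frac{v}{c}
```

The same scaling applies to the symbol rate, which is why compression shows up as lost throughput; an approaching platform (v < 0) expands the spectrum instead.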

For example, when using a wideband digital carrier that occupies 250 MHz of bandwidth, the actual bit rate can decrease by more than 28,000 b/s due to Doppler compression. Despite this significant signal distortion, the static, single-point-in-time test methodologies employed today lack the realism to model the effects of Doppler compression. Engineers verifying wideband equipment must qualify their systems under the dynamic conditions that produce this kind of bandwidth swing, observing how the system behaves under the continual bandwidth fluctuations encountered during a live mission.
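
The static-versus-dynamic distinction is easy to see numerically. The sketch below applies the first-order scaling R_r ≈ R(1 − v/c) to a hypothetical data rate over a hypothetical flyby in which radial velocity ramps linearly from −v_max (approaching) to +v_max (receding). The 250 Mb/s rate, the 30 km/s peak velocity, and the function names are assumptions for illustration, not mission figures. A static test would pick one row of this profile; a dynamic test plays the whole profile.

```python
C_M_PER_S = 299_792_458.0  # speed of light in m/s

def doppler_scaled_rate(rate_bps: float, radial_velocity_m_s: float) -> float:
    """First-order Doppler scaling of a data rate.

    Positive velocity = receding (rate compressed); negative = approaching.
    Valid only for |v| << c.
    """
    return rate_bps * (1.0 - radial_velocity_m_s / C_M_PER_S)

def flyby_rate_profile(rate_bps: float, v_max_m_s: float, n_points: int):
    """Hypothetical flyby: radial velocity ramps linearly from -v_max to +v_max.

    Returns (velocity_m_s, received_rate_bps) pairs sampled across the pass.
    """
    profile = []
    for i in range(n_points):
        v = -v_max_m_s + 2.0 * v_max_m_s * i / (n_points - 1)
        profile.append((v, doppler_scaled_rate(rate_bps, v)))
    return profile

if __name__ == "__main__":
    profile = flyby_rate_profile(250e6, 30e3, 101)
    rates = [r for _, r in profile]
    print(f"compression at +30 km/s: {250e6 - rates[-1]:,.0f} b/s")
    print(f"total swing over the pass: {max(rates) - min(rates):,.0f} b/s")
```

Under these assumptions the receding end of the pass costs about 25,000 b/s and the full pass swings by roughly twice that, so the receiver's timing loops must track a rate that never holds still.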

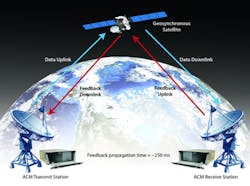

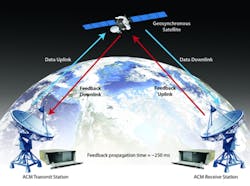

Additionally, any RF communications technology that relies on a feedback loop needs particular attention during testing. For instance, ACM maximizes link throughput by using state information returned from the receiver to adjust digital modulation and coding parameters. When the receiver detects high signal strength on a clear day, it signals the transmitter to use an efficient modulation and coding scheme for maximum throughput. If a rainstorm obstructs the path and the signal degrades, the receiver tells the transmitter to fall back to a more robust, lower-throughput scheme. In this way, the closed-loop system actively and dynamically adapts to the environment.
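
The receiver side of an ACM loop can be sketched as a threshold lookup: given a reported SNR, pick the most efficient modulation and coding scheme (MODCOD) whose required SNR plus a safety margin still fits. The table below is hypothetical; the names follow common MODCOD naming, but the thresholds and the 1 dB default margin are illustrative values, not taken from DVB-S2 or any other standard.

```python
# Hypothetical MODCOD table: (name, required SNR in dB, info bits per symbol),
# sorted from most robust to most efficient. Thresholds are illustrative only;
# real values depend on the standard, the FEC implementation, and the target error rate.
MODCODS = [
    ("QPSK 1/2", 1.0, 1.0),
    ("QPSK 3/4", 4.0, 1.5),
    ("8PSK 2/3", 7.0, 2.0),
    ("16APSK 3/4", 10.5, 3.0),
]

def select_modcod(reported_snr_db: float, margin_db: float = 1.0) -> str:
    """Return the most efficient MODCOD whose threshold + margin <= reported SNR.

    Falls back to the most robust entry when nothing qualifies (e.g. a deep rain fade).
    """
    best = MODCODS[0]
    for entry in MODCODS:
        _name, required_db, _bits_per_symbol = entry
        if required_db + margin_db <= reported_snr_db:
            best = entry  # table is sorted by threshold, so keep the last fit
    return best[0]

if __name__ == "__main__":
    print(select_modcod(12.0))  # clear sky -> "16APSK 3/4"
    print(select_modcod(5.0))   # moderate rain fade -> "QPSK 3/4"
    print(select_modcod(-3.0))  # deep fade -> "QPSK 1/2" (fallback)
```

The margin is the designer's hedge against channel changes between the SNR report and its use; the next paragraphs explain why a fixed margin can be overwhelmed when that interval is long.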

ACM systems are easy to test when signal conditions change slowly, but complexity ramps up when power levels fluctuate rapidly. A test engineer may choose to model atmospheric scintillation by placing a high-speed programmable attenuator between the devices and toggling the amplitude. However, this tests only one component of the actual scenario. The test must also account for the significant time delay that may exist between the two devices; this can exceed 0.25 second when radio waves propagate from Earth to a geosynchronous satellite and back to Earth.
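
The 0.25 second figure follows from geometry. The sketch below computes the Earth-satellite-Earth propagation delay from each ground station's elevation angle, using the law-of-cosines slant-range relation for a spherical Earth. The constants are nominal values (equatorial radius 6,378.137 km, geostationary altitude 35,786 km), and modem processing delay is ignored.

```python
import math

C_M_PER_S = 299_792_458.0      # speed of light
EARTH_RADIUS_M = 6_378_137.0   # nominal equatorial radius
GEO_ALTITUDE_M = 35_786_000.0  # nominal geostationary altitude

def slant_range_m(elevation_deg: float) -> float:
    """Ground-station-to-GEO distance for a spherical Earth (law of cosines)."""
    el = math.radians(elevation_deg)
    r_orbit = EARTH_RADIUS_M + GEO_ALTITUDE_M
    return (math.sqrt(r_orbit**2 - (EARTH_RADIUS_M * math.cos(el)) ** 2)
            - EARTH_RADIUS_M * math.sin(el))

def geo_hop_delay_s(uplink_el_deg: float, downlink_el_deg: float) -> float:
    """Earth -> GEO -> Earth propagation delay in seconds (no processing delay)."""
    total_m = slant_range_m(uplink_el_deg) + slant_range_m(downlink_el_deg)
    return total_m / C_M_PER_S

if __name__ == "__main__":
    print(f"both stations at 90 deg: {geo_hop_delay_s(90, 90):.4f} s")
    print(f"both stations at 10 deg: {geo_hop_delay_s(10, 10):.4f} s")
```

Directly beneath the satellite the hop takes about 0.239 s; with both stations at 10° elevation it grows to roughly 0.27 s. A feedback message returning over a similar satellite path adds a comparable delay.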

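Consider a period of heavy scintillation in which received power levels oscillate 10 times per second, so each fade cycle lasts about 0.1 second. A feedback report delayed by 0.25 second arrives two and a half cycles late, when the channel may be in the opposite phase of the fade. By the time the receiver’s feedback signal reaches the transmitter, conditions at the receive site may have changed again, rendering the new signal parameters ineffective in the current environment.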
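
The effect of a stale report can be sketched with a toy closed-loop model. Every parameter here is hypothetical: SNR oscillates sinusoidally around 8 dB with a ±3 dB swing at 10 Hz, the transmitter applies the MODCOD chosen from the SNR observed one loop delay earlier, and the threshold table is illustrative. The function reports the fraction of time the transmitter is using a MODCOD the channel can no longer support.

```python
import math

# Illustrative required-SNR thresholds in dB, most robust first (hypothetical values).
THRESHOLDS_DB = [1.0, 4.0, 7.0, 10.5]

def snr_db(t: float, mean_db: float = 8.0, swing_db: float = 3.0,
           rate_hz: float = 10.0) -> float:
    """Hypothetical scintillating channel: sinusoidal SNR in dB."""
    return mean_db + swing_db * math.sin(2.0 * math.pi * rate_hz * t)

def chosen_threshold(reported_db: float) -> float:
    """Highest threshold the reported SNR supports (most robust if none fits)."""
    fits = [th for th in THRESHOLDS_DB if th <= reported_db]
    return max(fits) if fits else THRESHOLDS_DB[0]

def outage_fraction(loop_delay_s: float, duration_s: float = 1.0,
                    dt_s: float = 0.001) -> float:
    """Fraction of samples where the MODCOD in use needs more SNR than the channel offers."""
    n = int(round(duration_s / dt_s))
    failures = 0
    for k in range(n):
        t = k * dt_s
        in_use = chosen_threshold(snr_db(t - loop_delay_s))  # decided on stale feedback
        if in_use > snr_db(t):
            failures += 1
    return failures / n

if __name__ == "__main__":
    for delay in (0.0, 0.25):
        print(f"loop delay {delay:.2f} s -> outage fraction {outage_fraction(delay):.2f}")
```

With instantaneous feedback, outage_fraction(0.0) is 0.0 because each MODCOD is always applied to the SNR it was chosen for. With a 0.25 s delay the report describes the opposite phase of the fade, and in this toy model the transmitter is overcommitted about 39% of the time.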

To ensure proper operation of the receive equipment, tests must vary both the effects that trigger adaptive adjustments and the time delay on the forward and feedback links. Testing static configurations between the equipment ignores the complex timing issues that occur during actual operation in the field.
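
One way to follow that advice is to script the impairment and the loop delay together rather than separately. The sketch below generates a hypothetical time-stamped profile (attenuation plus forward- and return-link delay) that a dynamic channel simulator could play back. The row layout, the raised-cosine fade shape, the `return_extra_s` parameter, and the delay range are assumptions for illustration, not any vendor's file format or API.

```python
import math

def dynamic_test_profile(duration_s, step_s, scint_hz, scint_peak_db,
                         delay_min_s, delay_max_s, return_extra_s=0.0):
    """Build rows of (time_s, attenuation_db, forward_delay_s, return_delay_s).

    Attenuation: raised-cosine fades between 0 and scint_peak_db at scint_hz.
    Delay: drifts linearly from delay_min_s to delay_max_s over the run, so
    adaptive decisions are exercised at every delay in the range. The return
    link adds a hypothetical fixed offset, return_extra_s.
    """
    n = int(round(duration_s / step_s)) + 1
    rows = []
    for k in range(n):
        t = k * step_s
        atten = 0.5 * scint_peak_db * (1.0 - math.cos(2.0 * math.pi * scint_hz * t))
        delay = delay_min_s + (delay_max_s - delay_min_s) * (t / duration_s)
        rows.append((t, atten, delay, delay + return_extra_s))
    return rows

if __name__ == "__main__":
    # Hypothetical run: 2 s, 10 ms steps, 10 Hz fades up to 6 dB, 0.24-0.28 s hop delay.
    for row in dynamic_test_profile(2.0, 0.01, 10.0, 6.0, 0.24, 0.28)[:6]:
        print("t={:.2f}s atten={:.2f}dB fwd={:.4f}s ret={:.4f}s".format(*row))
```

Sweeping the delay while the fades continue exposes timing interactions that a fixed-delay, fixed-attenuation configuration cannot reveal.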

The Future of Testing

The thirst for higher bandwidth and the adoption of ACM as a standard RF modem feature will continue to drive advances in test and measurement technology. Fortunately for communications system engineers, the test and measurement industry has been working on this problem for several years, developing dynamic test methodologies and channel simulation equipment that impart the realistic effects of communications links.

Dynamic channel simulators are becoming standard fixtures in test labs as engineers realize that accurate testing of complex hardware, firmware, and software requires equally complex modeling. These new test methodologies will succeed because of their realism; they model a continuous, subtly changing environment that aligns with the physics of radio-wave propagation.

To thoroughly verify the next generation of RF communications equipment, test engineers must move beyond traditional best practices and adopt a new approach, one which exercises signals the same way nature distorts them: dynamically.

Reference

1. Lombardi, M.R., “Communications Test Tools: A Study of the Cassini-Huygens Mission,” EE-Evaluation Engineering, June 2010, pp. 14-22.

About the Author

Michael Clonts is a product manager in the Signal Instrumentation group at RT Logic. He has more than 10 years of software and firmware development experience in the SATCOM and data-storage industries. Clonts received a B.S. in computer engineering from Texas A&M University.