Supercomputing, or high-performance computing (HPC), has massive storage needs. Hyperscale architecture takes a modular approach, using high-speed connectivity to link computing, network, and storage resources. This allows resources to be added and adjusted as needed while simplifying management of the system.

These days the fastest processor interface, short of the memory channel, is PCI Express. The Non-Volatile Memory Express (NVMe) interface is based on PCI Express. NVMe is latency-optimized and it is becoming the preferred way to access flash memory because it has less overhead than conventional disk interfaces like SAS and SATA. NVMe performance can also scale because of PCIe’s multilane approach. Typically NVMe systems have one (x1) to eight lanes (x8). For example, the M.2 socket supports x4 PCIe/NVMe devices.
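To make the lane-scaling point concrete, here is a rough back-of-the-envelope sketch. The per-lane transfer rates and the 128b/130b encoding efficiency are assumptions taken from the PCI Express Gen3 and Gen4 specifications, not from this article, and the function name is purely illustrative. The result is raw link bandwidth in one direction; real NVMe throughput will be lower once protocol overhead is included.

```python
# Rough PCIe link-bandwidth estimate. The generation figures below are
# assumptions (PCIe Gen3/Gen4 signaling rates), not values from the article.
PCIE_GENERATIONS = {
    # generation: (transfer rate per lane in GT/s, line-encoding efficiency)
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
}


def link_bandwidth_gbytes(generation: int, lanes: int) -> float:
    """Approximate one-direction link bandwidth in GB/s, before protocol overhead."""
    rate_gt, efficiency = PCIE_GENERATIONS[generation]
    # Each transfer carries one bit per lane; divide by 8 to convert bits to bytes.
    return rate_gt * efficiency / 8 * lanes


if __name__ == "__main__":
    for lanes in (1, 2, 4, 8):
        print(f"Gen3 x{lanes}: {link_bandwidth_gbytes(3, lanes):.2f} GB/s")
```

Under these assumptions, an x4 M.2 device on a Gen3 link tops out at just under 4 GB/s, and doubling the lane count doubles the ceiling.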

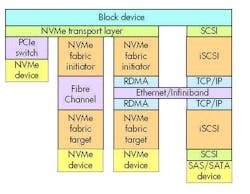

NVMe can provide high-speed connectivity to flash memory, but other fabrics like Ethernet and InfiniBand provide longer-distance connections than PCI Express. These fabrics have been used to support storage protocols like iSCSI, but these protocols tend to have higher overhead than NVMe, so it made sense to develop NVMe over Fabrics (NVMe-oF). NVMe-oF uses the same NVMe transport layer as a native NVMe implementation.

NVMe devices can be accessed through direct connections (left) or using NVMe over Fabrics with Fibre Channel, or with RDMA over Ethernet or InfiniBand. NVMe-oF has significantly less overhead than conventional fabric-based connections like iSCSI (right), which employs TCP/IP.

NVMe-oF can take advantage of the remote direct memory access (RDMA) support that is available with fabrics like Ethernet and InfiniBand. It also works with other fabrics like Fibre Channel, whose native transport system can handle NVMe without the need for RDMA support. Each NVMe-oF fabric has its advantages and disadvantages, but operating systems and applications can use any of them transparently since they share a common NVMe transport layer.
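The idea of a common NVMe layer sitting above interchangeable fabrics can be sketched in a few lines. This is a toy model, not a driver: the `Transport` classes and their `submit` method are hypothetical names invented for illustration, and the command layout is a simplified reading of the 64-byte NVMe submission queue entry, with the data pointer left at zero because a real transport would fill it in.

```python
import struct
from typing import Protocol

NVME_OPC_READ = 0x02  # Read opcode in the NVM command set


def build_read_sqe(cid: int, nsid: int, slba: int, nblocks: int) -> bytes:
    """Pack a simplified 64-byte NVMe Read command (data pointer left zero)."""
    cdw0 = NVME_OPC_READ | (cid << 16)   # opcode in bits 7:0, command ID in bits 31:16
    cdw12 = (nblocks - 1) & 0xFFFF       # number of logical blocks is zero-based
    return struct.pack(
        "<IIQQQQQIIII",
        cdw0, nsid,
        0,            # dwords 2-3: reserved
        0,            # metadata pointer
        0, 0,         # data pointer (set by the transport in a real system)
        slba,         # dwords 10-11: starting logical block address
        cdw12, 0, 0, 0,
    )


class Transport(Protocol):
    """Hypothetical interface: anything that can carry a 64-byte NVMe command."""
    name: str

    def submit(self, sqe: bytes) -> str: ...


class PcieQueue:
    """Stand-in for a directly attached device with in-memory queues."""
    name = "PCIe"

    def submit(self, sqe: bytes) -> str:
        return f"{self.name}: placed {len(sqe)}-byte command in submission queue"


class FabricCapsule:
    """Stand-in for a fabric (RDMA or Fibre Channel) that wraps commands in capsules."""

    def __init__(self, name: str) -> None:
        self.name = name

    def submit(self, sqe: bytes) -> str:
        return f"{self.name}: sent {len(sqe)}-byte command in a capsule"


def read_blocks(transport: Transport, slba: int, nblocks: int) -> str:
    # The caller builds the same command regardless of which fabric carries it.
    return transport.submit(build_read_sqe(cid=1, nsid=1, slba=slba, nblocks=nblocks))


if __name__ == "__main__":
    for t in (PcieQueue(), FabricCapsule("RDMA/Ethernet"), FabricCapsule("Fibre Channel")):
        print(read_blocks(t, slba=2048, nblocks=8))
```

The point of the sketch is that `read_blocks` never changes; only the object that carries the command does, which is why the choice of fabric can stay invisible to applications.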

NVMe-oF promises to provide the scalability needed for hyperscale environments. It provides a significant boost in performance compared to non-NVMe solutions. The choice of fabric will likely depend on the existing infrastructure. NVMe is already pushing conventional drive interfaces out because of its performance advantages, and NVMe-oF will be doing the same but on a much larger scale.

NVMe-oF is relatively new, and replacing existing storage area networks (SANs) will take time, but if the rapid adoption of NVMe is any indication, NVMe-oF will likely follow a similar pattern. Providing a unified block storage environment simplifies system configuration and management while providing better performance.

NVM Express Inc. manages the NVMe-oF specification. This includes the use of RDMA with Ethernet and InfiniBand. NVMe over Fabrics using Fibre Channel (FC-NVMe) was developed by the T11 committee of the International Committee for Information Technology Standards (INCITS).

NVMe-oF solutions were presented at this year’s Flash Memory Summit. Unfortunately, a fire prevented the show floor from opening, so most attendees were unable to see the systems in action, but the systems themselves are available. Still, it will be a few years before NVMe-oF becomes commonplace, although it will likely become the norm for large systems, including HPC applications.

About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF and I work with a great team of editors to provide engineers, programmers, developers and technical managers with interesting and useful articles and videos on a regular basis.

I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a Masters in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK. I do a bit of PHP programming for Drupal websites. I have posted a few Drupal modules.

I still get hands-on with software and electronic hardware. Some of this can be found on our Kit Close-Up video series. You can also see me on many of our TechXchange Talk videos. I am interested in a range of projects from robotics to artificial intelligence.