Data-Center Networking: What’s Next Beyond 10 Gigabit Ethernet?

The rising scale of cloud computing, Big Data, and mega data centers has network administrators continually scrambling to recalibrate capacity requirements and balance those requirements with budget limitations. The traffic created by increasing numbers of tenants, applications, and services in data centers pushes the limits of standard 10 Gigabit Ethernet (10GbE) links. This challenge has led to the development of new Ethernet technologies, optimized to fine-tune network capacity to meet bandwidth demands of today and tomorrow.


The 25 Gigabit Ethernet Consortium was established to promote industry adoption of newly developed 25GbE and 50GbE specifications for faster and more cost-efficient connectivity between a data center’s server network interface controller (NIC) and top-of-rack (ToR) switch. The 25/50GbE standard provides more options for optimizing network interconnect architecture on top of already established IEEE 10/40/100GbE standards. By opening up the 25/50GbE specification royalty-free to any vendor/consumer that joins, the Consortium provides the industry faster access to data-center interconnect innovation based on multi-sourced, interoperable technologies.

The 25/50GbE specification fully leverages the physical- and link-layer characteristics that IEEE has already defined for 10GbE, 40GbE, and 100GbE. The latest 100GbE connection standards use four serializer/deserializer (SerDes) lanes between link partners, with each lane running at 25 Gb/s. The Consortium's 25GbE spec uses just one such lane and its 50GbE spec uses two, making them a better fit for next-generation connections between server and storage endpoints and the ToR switch. Using 25GbE and 50GbE for ToR switch downlinks also pairs naturally with 100GbE ToR uplinks, because all three can use the same 25-Gb/s-per-lane SerDes technology in the switch silicon and the same front-panel connector type.
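
The lane arithmetic behind this paragraph can be tabulated in a few lines. The sketch below is illustrative only: the `EthernetRate` class and `RATES` list are hypothetical names, and the figures are nominal per-lane data rates that ignore line-coding overhead (the actual signaling rate on the wire is slightly higher).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EthernetRate:
    name: str
    lanes: int          # SerDes lanes per direction
    lane_gbps: float    # nominal data rate per lane in Gb/s (coding overhead ignored)

    @property
    def link_gbps(self) -> float:
        # Aggregate link rate is simply lanes x per-lane rate
        return self.lanes * self.lane_gbps


RATES = [
    EthernetRate("10GbE", 1, 10),
    EthernetRate("40GbE", 4, 10),
    EthernetRate("25GbE", 1, 25),
    EthernetRate("50GbE", 2, 25),
    EthernetRate("100GbE", 4, 25),
]

if __name__ == "__main__":
    for r in RATES:
        print(f"{r.name:>7}: {r.lanes} x {r.lane_gbps:g} Gb/s = {r.link_gbps:g} Gb/s")
```

The 25GbE, 50GbE, and 100GbE entries share one 25-Gb/s lane rate, which is what lets a single SerDes design serve both server downlinks and network uplinks.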

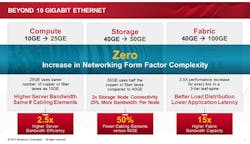

The value of 25GbE technology is clear in comparison to the existing 40GbE standard, which IEEE 802.3 has defined as the next higher link speed after 10GbE (see the figure). A 40GbE link uses four 10-Gb/s physical lanes to communicate between link partners. Using a four-lane interface between the server NIC and ToR switch instead of a single-lane interface translates into four times more SerDes channels consumed in switch and NIC silicon, four times more interface real estate on NIC and switch hardware, and four times more copper wiring in the cables that connect them.

Looking at it another way, a data-center operator deploying 40GbE to the server gets 60% more bandwidth than with 25GbE (40 Gb/s versus 25 Gb/s), but pays for it by quadrupling the physical connectivity elements. Moreover, if data-center workloads are only inching above the 10-Gb/s threshold per endpoint, that extra 60% may go to waste. For applications that need substantially more throughput at the endpoint, 50GbE, which uses only two lanes instead of four, is a better alternative to 40GbE in both link performance and physical lane efficiency.
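
To make the trade-off concrete, the following sketch compares link types by bandwidth ratio, SerDes lane ratio, and bandwidth per lane, using only the nominal rates and lane counts given in the text. The helpers `relative` and `gbps_per_lane` are hypothetical names; `Fraction` keeps the ratios exact.

```python
from fractions import Fraction

# Each link is (nominal Gb/s, SerDes lanes), as described in the text
GBE25 = (25, 1)
GBE40 = (40, 4)
GBE50 = (50, 2)


def relative(a, b):
    """Return (bandwidth ratio, lane ratio) of link a relative to link b."""
    (a_gbps, a_lanes), (b_gbps, b_lanes) = a, b
    return Fraction(a_gbps, b_gbps), Fraction(a_lanes, b_lanes)


def gbps_per_lane(link):
    """Lane efficiency: nominal bandwidth delivered per SerDes lane."""
    gbps, lanes = link
    return Fraction(gbps, lanes)


if __name__ == "__main__":
    bw, lanes = relative(GBE40, GBE25)
    print(f"40GbE vs 25GbE: {float(bw):.2f}x bandwidth, {float(lanes):.2f}x lanes")
    bw, lanes = relative(GBE50, GBE40)
    print(f"50GbE vs 40GbE: {float(bw):.2f}x bandwidth, {float(lanes):.2f}x lanes")
    for name, link in [("25GbE", GBE25), ("40GbE", GBE40), ("50GbE", GBE50)]:
        print(f"{name}: {float(gbps_per_lane(link)):g} Gb/s per lane")
```

Run as a script, it prints `40GbE vs 25GbE: 1.60x bandwidth, 4.00x lanes` and `50GbE vs 40GbE: 1.25x bandwidth, 0.50x lanes`, which restates the paragraph's argument: 40GbE buys 60% more bandwidth with four times the lanes, while 50GbE beats 40GbE with half the lanes.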

Fundamentally, the 25GbE/50GbE standard delivers 2.5 times the performance per SerDes lane over twinax copper that existing 10G and 40G connections provide. (Twinax cable, also used in 10G/40G networks, has two inner conductors instead of the single conductor in conventional coaxial cable.) A 25GbE link using a single switch/NIC SerDes lane therefore provides 2.5 times the bandwidth of a 10GbE link over the same number of twinax copper pairs (two) used in today's SFP+ direct-attach copper (DAC) cables. A 50GbE link using two switch/NIC SerDes lanes at 25 Gb/s each delivers 25% more bandwidth than a 40GbE link while needing half as many twinax copper pairs (four instead of eight).
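
The per-pair comparison can be checked with a small calculation. This sketch assumes two twinax pairs per SerDes lane (one per direction), which matches the pair counts cited above for SFP+ (two) and 50GbE (four); the dictionary and function names are illustrative, not taken from any specification.

```python
# Assumption: each SerDes lane uses two twinax pairs, one per direction.
PAIRS_PER_LANE = 2

# name: (nominal link rate in Gb/s, SerDes lanes)
LINKS = {
    "10GbE": (10, 1),
    "40GbE": (40, 4),
    "25GbE": (25, 1),
    "50GbE": (50, 2),
}


def twinax_pairs(lanes: int) -> int:
    """Twinax copper pairs in a direct-attach cable carrying `lanes` lanes."""
    return lanes * PAIRS_PER_LANE


def gbps_per_pair(gbps: float, lanes: int) -> float:
    """Nominal link rate delivered per twinax pair in the cable."""
    return gbps / twinax_pairs(lanes)


if __name__ == "__main__":
    for name, (gbps, lanes) in LINKS.items():
        print(f"{name}: {twinax_pairs(lanes)} pairs, "
              f"{gbps_per_pair(gbps, lanes):g} Gb/s per pair")
    # 25G-lane links carry 12.5 Gb/s per pair vs 5 Gb/s for 10G-lane links
    print(gbps_per_pair(*LINKS["25GbE"]) / gbps_per_pair(*LINKS["10GbE"]))
```

Both 25-Gb/s-lane links land at 12.5 Gb/s per pair and both 10-Gb/s-lane links at 5 Gb/s per pair, so the 2.5x advantage holds whether the comparison is 25GbE versus 10GbE or 50GbE versus 40GbE.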

This 25/50GbE advantage translates into 50% to 70% savings in rack interconnect cost per unit of bandwidth, making a significantly positive impact on a mega data-center operator’s bottom line. To be sure, the switch and NIC silicon combination runs faster and consumes slightly more power at 25 Gb/s per lane. However, when normalized for bandwidth, the cost and power advantage is expected to be substantial.

The advantages of 25/50GbE are also evident when considering interface real estate and cabling topology between the endpoint and ToR. NICs implementing these new standards can deliver single-lane 25GbE or dual-lane 50GbE ports over compact SFP28 or half-populated QSFP28 interfaces. Single- or dual-lane interfaces are known to be more cost-effective for LAN-on-motherboard (LOM) style NICs than four-lane interfaces.

On the ToR switch front panel, the same SFP28/QSFP28 interfaces can be deployed for one-to-one or breakout cable connections to the NICs, at lengths of up to three meters without forward error correction (FEC). The advantage of populating the entire switch front panel with QSFP28 interfaces is that the user can freely assign each port to 25/50GbE server downlinks or 100GbE network uplinks. Copper or optics can then be chosen port by port, and the network oversubscription ratio at the ToR can be adjusted just as flexibly.
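
The oversubscription ratio is the total server-facing bandwidth divided by the total uplink bandwidth. The sketch below assumes a hypothetical 1U switch with 32 QSFP28 cages (four 25-Gb/s lanes each), where each cage is used either as a 100GbE uplink or broken out into 4x25GbE or 2x50GbE downlinks; `tor_oversubscription` is an illustrative name, not a vendor API.

```python
LANE_GBPS = 25        # nominal per-lane rate of QSFP28
LANES_PER_CAGE = 4    # a QSFP28 cage carries four lanes


def tor_oversubscription(total_cages: int, uplink_cages: int, breakout: int):
    """Return (downlink port count, Gb/s per downlink, oversubscription ratio).

    breakout is the number of ports per downlink cage (4 for 4x25GbE,
    2 for 2x50GbE, 1 for 1x100GbE). Every uplink cage is assumed to run
    as a single 100GbE port.
    """
    if breakout not in (1, 2, 4):
        raise ValueError("breakout must be 1, 2, or 4 ports per cage")
    if not 0 < uplink_cages < total_cages:
        raise ValueError("need at least one uplink cage and one downlink cage")
    down_ports = (total_cages - uplink_cages) * breakout
    down_gbps = (LANES_PER_CAGE // breakout) * LANE_GBPS
    uplink_bw = uplink_cages * LANES_PER_CAGE * LANE_GBPS
    return down_ports, down_gbps, down_ports * down_gbps / uplink_bw


if __name__ == "__main__":
    # 24 cages broken out to 25GbE servers, 8 cages as 100GbE uplinks
    print(tor_oversubscription(32, 8, 4))   # (96, 25, 3.0)
    # Same split with 50GbE servers
    print(tor_oversubscription(32, 8, 2))   # (48, 50, 3.0)
    # Fewer uplinks give a higher oversubscription ratio
    print(tor_oversubscription(32, 4, 4))   # (112, 25, 7.0)
```

Because every cage is the same QSFP28 type, changing the ratio is a matter of reassigning ports rather than swapping hardware.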

In essence, the cabling topologies are the same as those used today with high-density SFP+ and QSFP+ switches. However, because 25GbE and 50GbE take advantage of faster silicon SerDes and can use inexpensive two- or four-pair twinax copper cables for rack-level connections, data-center operators can achieve highly economical cabling on a price-per-unit-of-bandwidth basis.

Perhaps the most important benefit of 25/50GbE technology to data-center operators is that switch platforms can maximize bandwidth and port density within the space constraints of a small 1U front panel. Today's ToR switches populated entirely with pluggable QSFP+ cages typically support no more than 32 ports of 40GbE. Migrating these interfaces to QSFP28 with per-lane breakout capability raises the achievable port density in a 1U faceplate to 128 ports of 25GbE or 64 ports of 50GbE, versus 32 ports of 40GbE. That capability will enable connectivity to the highest rack-server densities being deployed now and in the future, and will maximize the network's radix (the number of ports each switch can connect, which governs how far the network can scale) in both the uplink and downlink directions.
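
The port-density figures follow from simple lane arithmetic. Assuming the 32-cage 1U faceplate cited above and four lanes per cage, this hypothetical `faceplate` helper returns the port count and total faceplate bandwidth for each port type (nominal rates only).

```python
CAGES = 32           # front-panel cages in a 1U ToR (typical figure from the text)
LANES_PER_CAGE = 4   # QSFP+ and QSFP28 cages each carry four lanes


def faceplate(lanes_per_port: int, lane_gbps: int):
    """Return (port count, total faceplate bandwidth in Gb/s) with per-lane breakout."""
    ports = CAGES * LANES_PER_CAGE // lanes_per_port
    return ports, ports * lanes_per_port * lane_gbps


if __name__ == "__main__":
    for name, lanes, rate in [
        ("40GbE (QSFP+)", 4, 10),
        ("25GbE (QSFP28 breakout)", 1, 25),
        ("50GbE (QSFP28 breakout)", 2, 25),
        ("100GbE (QSFP28)", 4, 25),
    ]:
        ports, total = faceplate(lanes, rate)
        print(f"{name}: {ports} ports, {total / 1000:g} Tb/s")
```

The 25GbE and 50GbE port counts match the 128 and 64 cited above, and every QSFP28 configuration offers 3.2 Tb/s of faceplate bandwidth, 2.5 times the 1.28 Tb/s of the all-40GbE case.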


Network operators in both enterprise and cloud environments must build out their networks along carefully planned technology roadmaps that scale with changing needs and deliver optimal performance at the lowest possible CAPEX/OPEX. Recognizing 25GbE's compelling benefits for the data center, the IEEE formed a Study Group to standardize the interface in the same 802.3 forum as other Ethernet speeds.

Members of the 25 Gigabit Ethernet Consortium have supported this IEEE effort. The goal isn't to redefine Ethernet connectivity, but rather to build on existing Ethernet standards to address a key performance/cost pain point in data-center rack-level connectivity. In the process, the consortium's 25/50GbE specs aim to give the industry a more complete roadmap for access-layer speeds beyond 10GbE, one that coincides with the adoption of 100GbE in the core and aggregation layers. As such, 25/50GbE technologies are shaping up to create a major inflection point in the performance and cost curve for Ethernet connectivity.

About the Author

Rochan Sankar

Director of Product Management and Marketing

Rochan Sankar serves as director of Product Management and Marketing for Broadcom Corp.'s Infrastructure and Networking Group (ING). Prior to Broadcom, Sankar held senior positions in chip architecture, strategic marketing, and business development at a variety of semiconductor firms. He earned a bachelor's degree in applied science and engineering from the University of Toronto and an MBA from The Wharton School, University of Pennsylvania. He holds five U.S. patents in the area of high-performance chip architectures.