This article is part of the TechXchange: Addressing Chip Verification Challenges

What you’ll learn:

- What are hardware emulators and FPGA prototypes?

- Who are the main players in this market?

- What are the two modes that HAV platforms operate in, and which is preferable?

Editor’s Note: Following the recent announcements from Siemens EDA (formerly Mentor Graphics), Cadence, and Synopsys about hardware-assisted verification (HAV) platforms, it seems like a good time to identify and dispel a few recurring myths. Electronic Design turns to Expert Columnist Dr. Lauro Rizzatti for answers. Today, Siemens EDA, Cadence, and Synopsys are the three providers of HAV platforms, a result of industry consolidation.

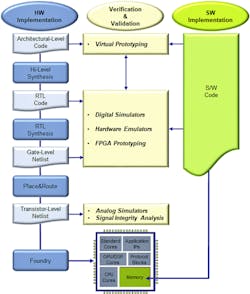

Two classes of tools—hardware emulators and field-programmable gate-array (FPGA) prototypes—fall into the HAV category.

Emulators verify the hardware of system-on-chip (SoC) designs of any size and type, and they integrate that hardware with its software. They can also give a head start to software validation and to final system validation of the entire SoC.

By comparison, FPGA prototypes run an order of magnitude faster than emulators on the same design size, which makes them ideal for software and final system validation ahead of silicon. They come in two basic configurations. One is the desktop board, which serves a single user and has an upper design-capacity limit of about 100 million gates. The other is the enterprise platform, which supports multiple concurrent users and reaches a maximum capacity of more than 10 billion gates.

Below are some of the myths that have surfaced regarding HAV platforms, and explanations that help debunk them:

1. HAV replaces simulation.

Not so fast! While it’s true that HAV is mandatory for software and full-system validation, hardware description language (HDL) simulation is still required to perform thorough hardware verification at the intellectual property (IP), block, and subsystem levels. As long as the design under test (DUT) stays below roughly 100 million gates, the size at which a simulator slows to a crawl, the interactivity, fast turnaround time (TAT), and ease of use of the simulator make it the preferred approach for hardware debug.

2. Only experienced verification engineers or teams of engineers can adopt HAV because it’s too complicated a methodology.

This was the case with earlier versions of the technology. Installing, operating, and maintaining a platform required a large number of specialized engineers. Over time, progress and innovation smoothed the path to adoption. New architectures, new capabilities, and simpler usage have eased the deployment of HAV platforms in all segments of the semiconductor industry, where they shorten the verification cycle and increase design quality.

Adopting an HAV methodology today isn’t an option. It’s a requirement.

3. HAV may be useful for emerging applications, such as computing and storage, AI/ML, 5G, networking, and automotive, but not for the functional verification of current applications.

Emerging applications set the trends in design capacity, low power consumption, and high performance, characteristics that mandate the use of HAV. Yet current applications face the same time-to-tapeout (TTT) pressure as emerging ones.

Aggressive competition and shrinking profits force electronics providers to bring new devices to market ahead of their rivals, regardless of the devices’ size. Consider the universe of IoT gadgets. While HDL simulation can easily and effectively verify their hardware, any design that includes software may benefit from deploying an HAV platform to accelerate TTT.

HAV systems are highly scalable. Smaller configurations that accommodate IoT designs may fit within the verification budget and save the day.

4. The shift-left verification methodology works for software testing, not hardware verification.

The shift-left verification methodology accelerates the entire SoC design verification/validation process, not just software validation. The methodology calls for adopting a modern HAV system suite, including emulation and prototyping deployed in virtual mode.

A comprehensive test environment supported by virtual peripherals, such as VirtuaLAB, which is incorporated in the Veloce HAV system from Siemens EDA, can mimic the functional behavior of the physical target system where the SoC ultimately will be plugged in. The environment is conducive to executing real-world software workloads and industry benchmarks before silicon is available. It helps shrink the TTT and enables more testing, which increases the quality of the design.

5. It’s close to impossible to integrate emulation and FPGA prototyping.

This was true until recently. Advances in compilation technologies, ease of use, and expansion of use models enhanced the deployment of emulation and prototyping, removing integration bottlenecks. Sharing the front-end compilation flow between the two platforms ensures consistency of the DUT database running in either platform. Loading and offloading the DUT between the two platforms allows for DUT debugging using the emulator and for rapid software workload execution using the prototype.

6. Power analysis is an infeasible verification task for an HAV platform.

That’s far from the truth. In fact, it’s just the opposite.

Best-in-class HAV platforms enable early evaluation of power consumption by generating activity plots and hotspot maps of the DUT described at a high level of abstraction. A quick review of plots and maps reveals the DUT areas of excessive power consumption and the time windows when peak power usage happens during workload processing. Focusing on those areas and time windows, the same HAV platform then can generate detailed DUT activity data at the register transfer level (RTL) to feed a power estimation tool. Some HAV platforms bypass Fast Signal Database (FSDB) and Switching Activity Interchange Format (SAIF) file generation and directly feed the power tool to speed up the process and reduce storage requirements.

While analysis at high levels of abstraction may produce discrepancies of 20% versus the actual silicon, the differences drop to 5% at RTL.
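The two-step flow described above, first spotting the time window of peak activity and then computing power from detailed switching data, can be sketched in Python. This is a minimal illustration, not how any HAV platform or power tool is implemented: the function names and input formats are hypothetical, the per-cycle toggle totals stand in for an activity plot, and the power step uses the common first-order CMOS model in which each toggle of a net with capacitance C at supply voltage V dissipates on average ½·C·V², ignoring glitches, short-circuit current, and leakage.

```python
from typing import List, Sequence, Tuple


def peak_activity_window(toggles_per_cycle: Sequence[int], window: int) -> Tuple[int, int]:
    """Return (start_cycle, total_toggles) for the window with the most toggles.

    toggles_per_cycle[i] is the number of nets that switched in clock cycle i,
    i.e. one point of a coarse activity plot (hypothetical input format).
    """
    if not 1 <= window <= len(toggles_per_cycle):
        raise ValueError("window must be between 1 and the trace length")
    current = sum(toggles_per_cycle[:window])
    best_start, best_total = 0, current
    for start in range(1, len(toggles_per_cycle) - window + 1):
        # Slide the window by one cycle: add the newest cycle, drop the oldest.
        current += toggles_per_cycle[start + window - 1] - toggles_per_cycle[start - 1]
        if current > best_total:
            best_start, best_total = start, current
    return best_start, best_total


def dynamic_power_watts(nets: List[Tuple[int, float]], vdd: float,
                        freq_hz: float, cycles: int) -> float:
    """First-order dynamic power averaged over a window of `cycles` clock cycles.

    nets holds (toggle_count, capacitance_farads) per net, similar in spirit
    to the toggle counts recorded in a SAIF file. Each toggle is assumed to
    dissipate 0.5 * C * V^2 on average.
    """
    if cycles <= 0:
        raise ValueError("cycles must be positive")
    total = 0.0
    for toggle_count, cap in nets:
        toggles_per_cycle = toggle_count / cycles
        total += 0.5 * cap * vdd ** 2 * freq_hz * toggles_per_cycle
    return total


if __name__ == "__main__":
    # Locate the busiest 2-cycle window in a toy activity trace.
    print(peak_activity_window([1, 2, 9, 8, 1, 0], window=2))  # → (2, 17)
```

In a real flow, only the nets and cycles inside the peak window would be captured at RTL and handed to a dedicated power estimation tool, either through SAIF or FSDB files or through a direct link, as noted above.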

7. FPGA prototyping can replace hardware emulators.

This is incorrect. While some emulators use FPGA devices in their architectures, the differences between the two tools are numerous.

For instance, FPGA prototypes are designed to achieve the highest possible execution speed at the expense of fast DUT mapping and compilation, DUT debugging capabilities, and deployment versatility. Emulators, regardless of their architectures (custom processor-based, custom emulator-on-chip-based, or commercial FPGA-based), share several characteristics that set them apart from FPGA prototypes. These include:

- The time spent on DUT mapping and compilation with a modern emulator ranges from one day to several days, versus several weeks with an FPGA prototyping system.

- Emulators provide 100% visibility into the design without requiring probe compilation. While all emulators support this capability, commercial FPGA-based emulators deliver it at a lower execution speed. Such differences among emulators pale in comparison with the debug limitations of an FPGA prototyping system.

- Emulators can be used in several modes of operation and support a spectrum of verification objectives, from hardware verification and hardware/software integration to firmware/operating-system testing all the way to system validation. They can be used for multi-power-domain design verification and generate switching activity for power estimation. Prototypes are focused on software validation and full system testing.

Emulators and FPGA prototypes have specific strengths that, when combined, benefit the verification team, as in the shift-left verification methodology.

8. HAV can only be used with physical traffic, not software testbenches.

Not anymore. Emulators and FPGA prototypes were originally conceived to test a pre-silicon design with physical traffic. Called in-circuit emulation (ICE) mode, this approach was an attractive proposition that promised more thorough testing of the DUT than a software testbench could achieve. The practice came with strings attached. The entire setup was laborious and subject to several hardware dependencies. Among other things, it required a speed adapter to slow the real traffic down to the slower speed of the HAV platform. Further, it didn’t allow for corner-case testing.

Transaction-based communication between a software testbench and the DUT mapped in the HAV platform opened a world of possibilities, including new verification tasks such as power analysis. Today, ICE is still used for its inherent advantage of exercising the DUT with real-world traffic, though it’s no longer the main deployment mode.

9. Using HAV requires on-site attendance from the verification group, a nonstarter during a pandemic and as more engineers opt to work remotely.

The opposite is true, with a caveat: The platform should be deployed in virtual mode to avoid hardware dependencies such as mounting speed adapters on I/O channels.

To recap, an HAV platform can be used in two modes: ICE mode and virtual mode. In ICE mode, the DUT mapped inside the emulator is driven by the physical target system, where the taped-out chip eventually resides. In virtual mode, the physical target system is replaced by a virtual, software-based equivalent target system that communicates with the DUT inside the HAV via a set of protocol-dependent transactors.

Among its benefits, the virtual mode allows for deployment of the platform in data centers without requiring manual assistance. Other benefits include the ability to perform corner-case analysis, what-if analysis, and more, none of which is possible in ICE mode.
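The role of a protocol-dependent transactor in virtual mode, translating untimed transactions from a software testbench into the cycle-by-cycle pin activity the DUT expects, can be sketched in Python. This is a toy model under stated assumptions: the single-cycle write bus, the class names, and the one-idle-cycle spacing are all hypothetical. In commercial platforms the cycle-level half of a transactor typically runs inside the emulator and exchanges messages with the testbench over an interface such as Accellera’s SCE-MI; here both halves are plain Python.

```python
from dataclasses import dataclass
from typing import Dict, Iterator, List


@dataclass
class WriteTxn:
    """Untimed, protocol-level transaction issued by the software testbench."""
    addr: int
    data: int


@dataclass
class PinState:
    """Signal values driven onto the DUT's ports for one clock cycle."""
    valid: int
    addr: int
    data: int


class WriteBusTransactor:
    """Hypothetical transactor for a toy single-cycle write bus."""
    IDLE_CYCLES = 1  # assumption: one idle cycle between consecutive writes

    def to_cycles(self, txns: List[WriteTxn]) -> Iterator[PinState]:
        for txn in txns:
            # One active cycle carries the whole write...
            yield PinState(valid=1, addr=txn.addr, data=txn.data)
            # ...followed by idle cycles with the bus deasserted.
            for _ in range(self.IDLE_CYCLES):
                yield PinState(valid=0, addr=0, data=0)


class ToyRegisterFileDut:
    """Stand-in for the DUT mapped in the HAV platform: latches a write when valid is high."""

    def __init__(self) -> None:
        self.regs: Dict[int, int] = {}
        self.cycle = 0

    def clock(self, pins: PinState) -> None:
        if pins.valid:
            self.regs[pins.addr] = pins.data
        self.cycle += 1


def run(txns: List[WriteTxn]) -> ToyRegisterFileDut:
    """Drive the DUT through the transactor; the testbench never handles cycles itself."""
    dut = ToyRegisterFileDut()
    for pins in WriteBusTransactor().to_cycles(txns):
        dut.clock(pins)
    return dut


if __name__ == "__main__":
    dut = run([WriteTxn(0x10, 0xAB), WriteTxn(0x14, 0xCD)])
    print(dut.regs, dut.cycle)  # → {16: 171, 20: 205} 4
```

Because the testbench speaks only in transactions, swapping the virtual target system for a different workload or injecting a corner case means changing a list of transactions rather than rewiring physical hardware.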

10. An HAV platform is an expensive line item for all but the most complex chip design projects and well-funded startups.

Relative to the acquisition cost of an HDL simulator, the statement is certainly correct, though misleading. The acquisition cost of a modern HAV platform pales when considered in relation to the verification power and flexibility of the tool. The HAV platform has the performance and capacity necessary to tackle even the most complicated debugging scenarios, which often include embedded software content.

As strange as it may sound, the tool’s versatility makes hardware emulation the cheapest verification solution when measured on a per-cycle basis.
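One way to read the per-cycle claim is as simple amortization: total cost divided by the number of DUT clock cycles the platform executes while in use. Because an emulator executes far more cycles per second than a simulator on a large design, a much higher price can still work out cheaper per cycle. The Python sketch below captures only that arithmetic; the function and parameter names are hypothetical, and the inputs in the usage lines are unitless placeholders, not real prices or speeds.

```python
SECONDS_PER_HOUR = 3600


def cost_per_cycle(total_cost: float, clock_hz: float, hours_in_use: float,
                   utilization: float = 1.0) -> float:
    """Cost divided by the DUT clock cycles executed while the platform is in use.

    utilization is the fraction of in-use hours actually spent running the DUT.
    """
    if clock_hz <= 0 or hours_in_use <= 0:
        raise ValueError("clock_hz and hours_in_use must be positive")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    cycles = clock_hz * hours_in_use * SECONDS_PER_HOUR * utilization
    return total_cost / cycles


if __name__ == "__main__":
    # Placeholder inputs: platform B costs 100x more than A but runs 1000x faster.
    a = cost_per_cycle(total_cost=1.0, clock_hz=1.0, hours_in_use=1.0)
    b = cost_per_cycle(total_cost=100.0, clock_hz=1000.0, hours_in_use=1.0)
    print(round(b / a, 6))  # → 0.1
```

The ratio depends only on relative cost and relative speed, which is why a higher acquisition price does not by itself settle the comparison.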

The total cost of ownership also has dropped significantly. Gone are the days when, figuratively, the emulator was delivered with a team of application engineers in the box to operate and maintain it. Multi-user support shares the acquisition cost among team members. The improved reliability of the product further reduces the cost of maintenance by orders of magnitude.

11. In the future, all HAV platforms will be based on commercial FPGAs.

While this has been and will continue to be true for prototyping platforms, it’s incorrect for emulators.

The three emulator providers adopt three different architectures: proprietary emulator-on-chips, custom processors, and commercial FPGAs. They claim to believe in the benefits of their architectures and will continue development to enhance them. For the proprietary/custom approaches, the path forward lies in re-spinning their chips on smaller process nodes. For the FPGA-based approach, the future is in the hands of the FPGA suppliers. This raises the question: Will the two main FPGA providers that recently changed ownership (Altera to Intel and Xilinx to AMD) continue to develop devices with the properties required by prototyping applications? The jury is out.

About the Author

Lauro Rizzatti

Business Advisor to VSORA

Lauro Rizzatti is a business advisor to VSORA, an innovative startup offering silicon IP solutions and silicon chips, and a noted verification consultant and industry expert on hardware emulation. Previously, he held positions in management, product marketing, technical marketing and engineering.