ATI’s Radeon 8500: First GPU With Hardware Tessellation

This article is part of the Electronics History series: The Graphics Chip Chronicles.

The Radeon 8500 AIB, launched by ATI in August 2001, used the R200 GPU (code-named "Chaplin"), built on a 150-nm manufacturing process. The AIB supported the DirectX 8.1 and OpenGL 1.3 APIs.

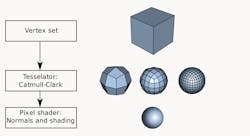

The R200 introduced several new and enhanced features, but the most noteworthy was ATI's "TruForm" feature. TruForm was a semiconductor intellectual property (IP) block developed by ATI (now AMD) for accelerating tessellation in hardware. The following diagram is a simple example of a tessellation pipeline rendering a sphere from a crude cubic vertex set.

The tessellation level can be varied according to an object's distance from the viewer to adjust level of detail. This lets objects close to the viewer (the camera) have fine detail, while objects farther away use coarser meshes yet appear comparable in quality. It also reduces the bandwidth required for a mesh, because a coarse mesh can be sent to the GPU and refined once inside the shader units.
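Distance-based level of detail can be illustrated with a minimal Python sketch. It assumes a simple linear falloff between a hypothetical near distance (maximum tessellation) and far distance (minimum tessellation). The function name and all parameters are illustrative only and do not come from any ATI API.

```python
def tessellation_level(distance, near=1.0, far=100.0, max_level=16, min_level=1):
    """Map viewer distance to an integer tessellation level.

    Hypothetical linear falloff: max_level at `near`, min_level at `far`.
    """
    # Clamp so the interpolation factor t stays within [0, 1].
    d = min(max(distance, near), far)
    t = (d - near) / (far - near)
    level = max_level - t * (max_level - min_level)
    return int(round(level))


for d in (1.0, 25.0, 100.0):
    print(d, tessellation_level(d))  # 16, 12, 1 respectively
```

Real engines often use screen-space edge length rather than raw distance. Linear falloff was chosen here only because it is the simplest mapping to read.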

ATI's TruForm created a new type of surface composed of curved rather than flat triangles, called N-patches or PN triangles. The approach generated these surfaces entirely within the graphics processor, without requiring significant changes to existing 3D artwork composed of flat triangles. According to ATI, that would make the technology more accessible to developers and avoid breaking compatibility with older graphics processors, while still offering high-quality visuals. The technology was supported through DirectX 8's N-patches, which compute how to use triangles to build a curved surface.
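The following minimal Python sketch makes the idea of a curved triangle concrete. It follows the cubic PN-triangle construction as commonly published: a flat triangle's three vertices and unit vertex normals define ten control points of a cubic Bézier triangle, and that patch is then evaluated at barycentric coordinates. It illustrates the math only. It is not ATI's hardware or driver implementation, the helper names are hypothetical, and the quadratic normal patch that PN triangles also use for shading is omitted.

```python
from math import factorial


# Small vector helpers on 3-tuples.
def add(*vs):
    return tuple(sum(c) for c in zip(*vs))


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def scale(v, s):
    return tuple(c * s for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def edge_point(pi, pj, ni):
    # Point one third of the way from pi to pj, projected onto the tangent plane at pi.
    w = dot(sub(pj, pi), ni)
    return scale(sub(add(scale(pi, 2), pj), scale(ni, w)), 1 / 3)


def pn_control_points(p, n):
    """Ten cubic Bezier control points from vertices p and unit normals n."""
    (p1, p2, p3), (n1, n2, n3) = p, n
    b = {
        (3, 0, 0): p1, (0, 3, 0): p2, (0, 0, 3): p3,
        (2, 1, 0): edge_point(p1, p2, n1), (1, 2, 0): edge_point(p2, p1, n2),
        (0, 2, 1): edge_point(p2, p3, n2), (0, 1, 2): edge_point(p3, p2, n3),
        (1, 0, 2): edge_point(p3, p1, n3), (2, 0, 1): edge_point(p1, p3, n1),
    }
    edges = [cp for k, cp in b.items() if 3 not in k]
    e = scale(add(*edges), 1 / 6)        # average of the six edge points
    c = scale(add(p1, p2, p3), 1 / 3)    # centroid of the flat triangle
    b[(1, 1, 1)] = add(e, scale(sub(e, c), 0.5))
    return b


def pn_evaluate(b, u, v):
    """Evaluate the cubic patch at barycentric (u, v, w = 1 - u - v)."""
    w = 1.0 - u - v
    point = (0.0, 0.0, 0.0)
    for (i, j, k), cp in b.items():
        coeff = factorial(3) / (factorial(i) * factorial(j) * factorial(k))
        point = add(point, scale(cp, coeff * u**i * v**j * w**k))
    return point


tri = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
up = (0.0, 0.0, 1.0)
print(pn_evaluate(pn_control_points(tri, (up, up, up)), 1 / 3, 1 / 3))  # z stays 0.0
```

When all normals match the face normal, the patch reduces to the original flat triangle. Tilting the normals away from the face normal pulls the control points out of the plane, and that is what produces the curvature.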

ATI's R200 GPU was an average-sized chip for its time, at 120 mm². It incorporated 60 million transistors and featured four pixel shaders, two vertex shaders, eight texture mapping units (TMUs), and four ROPs. ATI said at the time that the R200 was more complex than Intel's Pentium III processors.

The Radeon 8500 ran at 275 MHz and was paired with 64 MB of DDR memory on a 128-bit bus. It was a single-slot AIB and didn't need an additional power connector, since it consumed only 23 W. The AIB had an AGP 4x interface and offered three display outputs: DVI, VGA, and S-Video.

The R200 was ATI's second-generation GPU to carry the Radeon brand. Like most AIBs of the time, the 8500 also included 2D GUI acceleration for Windows and offered video acceleration with a built-in MPEG codec.

Whereas the R100 had two rendering pipelines, the R200 featured four. ATI branded the wider pixel pipeline Pixel Tapestry II. The new design raised the AIB's peak fill rate to more than 1 gigapixel/s.

ATI built the original Radeon chip with three texture units per pipeline so it could apply three textures to a pixel in a single clock cycle. However, game developers chose not to support that feature. Instead of wasting transistors on an unsupported feature, ATI decided to build the R200 with only two texture units per pipeline. That matched the Nvidia GeForce3 and made developers happy.
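The peak fill-rate figures follow from simple arithmetic on the numbers given here: a 275-MHz clock, four pipelines, and two texture units per pipeline, which together account for the eight TMUs. This Python sketch assumes each pipeline outputs one pixel per clock and each texture unit samples one texel per clock. These are theoretical peaks only, and delivered rates depend on memory bandwidth and workload.

```python
CORE_CLOCK_HZ = 275_000_000   # Radeon 8500 core clock
PIXEL_PIPELINES = 4           # R200 rendering pipelines
TEXTURE_UNITS_PER_PIPE = 2    # texture units per pipeline


def peak_fill_rates(clock_hz, pipelines, tmus_per_pipe):
    """Theoretical peaks: one pixel per pipeline and one texel per TMU each clock."""
    pixels_per_s = clock_hz * pipelines
    texels_per_s = pixels_per_s * tmus_per_pipe
    return pixels_per_s, texels_per_s


pix, tex = peak_fill_rates(CORE_CLOCK_HZ, PIXEL_PIPELINES, TEXTURE_UNITS_PER_PIPE)
print(f"{pix / 1e9:.1f} Gpixel/s, {tex / 1e9:.1f} Gtexel/s")  # 1.1 Gpixel/s, 2.2 Gtexel/s
```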

But ATI was clever and enabled Pixel Tapestry II to apply six textures in a single pass. Legendary game developer John Carmack, who was working on the upcoming Doom 3, said at the time, "The standard lighting model in Doom, with all features enabled, but no custom shaders, takes five passes on a GF1/2 or Radeon." He said that the same lighting model would take "either two or three passes on a GF3, and should be possible in a clear + single pass on ATI's new part."

With the original Radeon, ATI introduced its "Charisma" hardware transform and lighting engine. The R200's Charisma Engine II was the company's second-generation, hardware-accelerated, fixed-function transform and lighting engine, and it benefited from the R200's higher clock speed.

ATI overhauled the vertex shader in the R200 and branded it the "Smartshader" engine. Smartshader is a programmable vertex shader and was functionally equivalent to Nvidia's GeForce3 vertex shader, since both companies conformed to the DirectX 8 vertex shader specification.

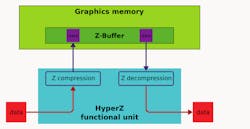

In 2000, before the rollout of the Radeon 8500/R200, ATI introduced its "HyperZ" technology with the original Radeon. HyperZ was essentially a scheme for reducing Z-buffer bandwidth. ATI boasted that HyperZ could deliver an effective fill rate of 1.5 gigatexels per second, even though the original Radeon's theoretical maximum was only 1.2 gigatexels per second. HyperZ did provide a measurable performance improvement during testing.

ATI's HyperZ technology consisted of three features that worked together to provide an effective "increase" in memory bandwidth.

ATI's HyperZ piggybacked on several concepts from the deferred rendering process developed by Imagination Technologies for its PowerVR tiling engine.

Repeatedly accessing the Z-buffer to determine which pixels, if any, are in front of the one being rendered requires a large amount of memory bandwidth. The first step in the HyperZ process was to check the Z-buffer before a pixel was sent to the rendering pipeline. This approach removed hidden pixels before the R200 rendered them.
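Early rejection of this kind is often implemented as a coarse per-block depth test, commonly called hierarchical Z. The GPU keeps the farthest depth in each block and discards an entire block of incoming pixels when even the nearest of them lies behind that value. This is a minimal Python sketch that assumes 4×4 blocks, a "smaller is closer" depth convention, and a strict less-than depth test. The class and method names are hypothetical, and the sketch does not represent ATI's undocumented hardware logic.

```python
TILE = 4  # assumed block edge length in pixels


class HierarchicalZ:
    """Per-pixel depth buffer plus the farthest depth of each TILE x TILE block.

    Convention assumed here: smaller depth is closer, and a fragment passes
    the depth test only if its depth is strictly less than the stored value.
    """

    def __init__(self, width, height, far=1.0):
        self.width, self.height = width, height
        self.depth = [[far] * width for _ in range(height)]
        tiles_x = (width + TILE - 1) // TILE
        tiles_y = (height + TILE - 1) // TILE
        self.tile_max = [[far] * tiles_x for _ in range(tiles_y)]

    def block_hidden(self, tx, ty, nearest_incoming):
        # If even the nearest incoming pixel is no closer than the farthest
        # stored pixel, every pixel in the block fails the depth test.
        return nearest_incoming >= self.tile_max[ty][tx]

    def write(self, x, y, z):
        if z >= self.depth[y][x]:
            return False  # per-pixel depth test failed
        self.depth[y][x] = z
        tx, ty = x // TILE, y // TILE
        x0, y0 = tx * TILE, ty * TILE
        # Refresh the block's farthest depth from the per-pixel values.
        self.tile_max[ty][tx] = max(
            self.depth[yy][xx]
            for yy in range(y0, min(y0 + TILE, self.height))
            for xx in range(x0, min(x0 + TILE, self.width))
        )
        return True
```

A single comparison per block replaces up to 16 per-pixel Z-buffer reads when a block is hidden, and that saving is where the bandwidth reduction comes from.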

Next, the Z-data was passed through a lossless compression process. That reduced the memory space needed for the Z-data and conserved bandwidth whenever the Z-buffer was accessed.
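ATI did not detail its compression algorithm, so the following Python sketch shows only one common lossless approach and is an assumption, not ATI's method. Post-projection depth varies linearly in screen space across a single triangle, so a tile can often be stored as a plane (an anchor value plus x and y gradients) with small per-pixel residuals. When the residuals do not fit, the sketch falls back to raw storage. The 24-bit depth and 4-bit residual widths are also assumptions.

```python
def compress_tile(tile, residual_bits=4):
    """Losslessly pack a tile (list of rows of integer depths, at least 2x2).

    Predicts each depth from a plane through the top-left pixel and its right
    and lower neighbours; stores small residuals, or falls back to raw data.
    """
    z0 = tile[0][0]
    dzdx = tile[0][1] - z0
    dzdy = tile[1][0] - z0
    limit = 1 << (residual_bits - 1)
    residuals = []
    for y, row in enumerate(tile):
        for x, z in enumerate(row):
            r = z - (z0 + x * dzdx + y * dzdy)
            if not -limit <= r < limit:
                return ("raw", [list(t) for t in tile])
            residuals.append(r)
    return ("plane", z0, dzdx, dzdy, len(tile[0]), residuals)


def decompress_tile(packed):
    if packed[0] == "raw":
        return [list(row) for row in packed[1]]
    _, z0, dzdx, dzdy, width, residuals = packed
    rows = []
    for i, r in enumerate(residuals):
        y, x = divmod(i, width)
        if x == 0:
            rows.append([])
        rows[-1].append(z0 + x * dzdx + y * dzdy + r)
    return rows


def size_bits(packed, depth_bits=24, residual_bits=4):
    if packed[0] == "raw":
        return depth_bits * sum(len(row) for row in packed[1])
    # Anchor and two gradients at full precision, plus one residual per pixel.
    return 3 * depth_bits + residual_bits * len(packed[5])


planar = [[1000 + 3 * x + 7 * y for x in range(4)] for y in range(4)]
packed = compress_tile(planar)
print(packed[0], size_bits(packed), "bits vs", size_bits(("raw", planar)))  # plane 136 bits vs 384
```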

Once an image had been rendered and its Z-data was no longer needed, a Fast Z-Clear process emptied the Z-buffer. ATI's Z-buffer clearing process was particularly efficient for its time.
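A common way to make clears cheap is to mark whole tiles as "cleared" in a small flag array instead of writing the clear value into every depth location. This minimal Python sketch assumes that approach. The class name, 4×4 tile size, and write counter are hypothetical and are meant only to show where the memory traffic is saved.

```python
class FastClearZBuffer:
    """Hypothetical sketch: per-tile 'cleared' flags instead of rewriting every depth."""

    def __init__(self, width, height, tile=4, clear_value=1.0):
        self.width, self.height, self.tile = width, height, tile
        self.clear_value = clear_value
        self.depth = [[clear_value] * width for _ in range(height)]
        tiles_x = (width + tile - 1) // tile
        tiles_y = (height + tile - 1) // tile
        self.cleared = [[True] * tiles_x for _ in range(tiles_y)]
        self.memory_writes = 0  # depth-buffer writes, for illustration

    def clear(self):
        # Only the small flag array is touched; stale depth values stay in memory.
        for row in self.cleared:
            for i in range(len(row)):
                row[i] = True

    def read(self, x, y):
        if self.cleared[y // self.tile][x // self.tile]:
            return self.clear_value
        return self.depth[y][x]

    def write(self, x, y, z):
        tx, ty = x // self.tile, y // self.tile
        if self.cleared[ty][tx]:
            # First write to a cleared tile: materialize the clear value for that tile only.
            for yy in range(ty * self.tile, min((ty + 1) * self.tile, self.height)):
                for xx in range(tx * self.tile, min((tx + 1) * self.tile, self.width)):
                    self.depth[yy][xx] = self.clear_value
                    self.memory_writes += 1
            self.cleared[ty][tx] = False
        self.depth[y][x] = z
        self.memory_writes += 1
```

Clearing an 8×8 buffer here costs four flag updates rather than 64 depth writes, and tiles that are never drawn to in the next frame are never written at all.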

The first Radeon's HyperZ worked on 8×8 pixel blocks. To decrease the bandwidth needed, ATI reduced the block size to 4×4 in the R200, which could discard 64 pixels per clock instead of eight. (The GeForce3 could discard 16 pixels per clock.)

ATI also implemented an improved Z-Compression algorithm that, according to the datasheet, gave them a 20% boost in Z-Compression performance.

Jim Blinn introduced the concept of bump mapping in 1978. The approach created the illusion of depth by perturbing the surface normals used in lighting calculations, without changing the underlying geometry. However, most game developers didn't start using bump mapping until the early 2000s; Halo (2001) was one of the first games to use it.
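The core idea of bump mapping fits in a short Python sketch. A height map tilts the normal used for lighting while the surface itself stays flat. The sketch is a simplified height-field special case of Blinn's formulation. It assumes the surface lies in the xy-plane with +z as the unperturbed normal, uses central differences for slopes, and applies simple Lambertian (diffuse) shading. The function names and the `strength` parameter are hypothetical.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def bumped_normal(height, x, y, strength=1.0):
    """Perturbed normal at (x, y) from a height map (rows of floats)."""
    h, w = len(height), len(height[0])
    xl, xr = max(x - 1, 0), min(x + 1, w - 1)
    yd, yu = max(y - 1, 0), min(y + 1, h - 1)
    # Central differences (one-sided at the borders) give the surface slope.
    dhdx = (height[y][xr] - height[y][xl]) / max(xr - xl, 1)
    dhdy = (height[yu][x] - height[yd][x]) / max(yu - yd, 1)
    # Tilt the flat +z normal against the slope; the geometry is unchanged.
    return normalize((-strength * dhdx, -strength * dhdy, 1.0))


def lambert(normal, light_dir):
    """Diffuse intensity in [0, 1] for a unit normal and a light direction."""
    l = normalize(light_dir)
    return max(0.0, sum(n * c for n, c in zip(normal, l)))


ramp = [[float(x) for x in range(3)] for _ in range(3)]  # height rises along +x
n = bumped_normal(ramp, 1, 1)
print(lambert(n, (-1, 0, 1)), lambert(n, (1, 0, 1)))  # lit from -x vs from +x
```

Because only the normal changes, the same flat polygon is lit brightly from one side and darkly from the other, which creates the impression of relief.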

Putting It All Together

Prior to the adoption of bump, normal, and parallax mapping to simulate higher mesh detail, 3D shapes required large quantities of triangles. The more triangles used, the more realistic surfaces you could create.

Tessellation was employed to reduce the number of triangles that had to be modeled and sent to the GPU. TruForm tessellated 3D surfaces by starting from the existing triangles and generating additional triangles from them, adding detail to a polygonal model. As a result, TruForm improved image quality without significantly impacting frame rates.
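The step of generating additional triangles can be sketched in Python with the simplest possible pattern, uniform subdivision. Each edge of an input triangle is split into `level` segments, which yields `level**2` smaller triangles. The vertices are returned as barycentric coordinates, ready to be fed into a surface evaluation such as a curved N-patch. The uniform pattern and the function name are assumptions for illustration, and real tessellators, including TruForm, may use different patterns.

```python
def subdivide_triangle(level):
    """Return (vertices, triangles) for a triangle split into level**2 sub-triangles.

    Vertices are barycentric (u, v, w) tuples; triangles are index triples.
    """
    index = {}
    vertices = []
    for i in range(level + 1):
        for j in range(level + 1 - i):
            index[(i, j)] = len(vertices)
            vertices.append((i / level, j / level, (level - i - j) / level))
    triangles = []
    for i in range(level):
        for j in range(level - i):
            # "Upright" sub-triangle.
            triangles.append((index[(i, j)], index[(i + 1, j)], index[(i, j + 1)]))
            # "Inverted" sub-triangle filling the gap, where one exists.
            if j < level - i - 1:
                triangles.append((index[(i + 1, j)], index[(i + 1, j + 1)], index[(i, j + 1)]))
    return vertices, triangles


verts, tris = subdivide_triangle(4)
print(len(verts), len(tris))  # 15 16
```

Only the original three vertices cross the bus. The extra vertices and triangles are created on the chip, which is why tessellation saves bandwidth.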

However, game developers seldom used TruForm because it required models to work outside of DirectX 8.1. Without widespread industry support, most developers simply ignored it. On top of that, in 2000 Nvidia had eclipsed ATI in AIB market share, and developers were less willing to invest in a unique feature from the No. 2 supplier. By the time ATI (now part of AMD) introduced the Radeon X1000 series in 2005, TruForm was no longer a hardware feature.

On the Radeon 9500, the render-to-vertex-buffer feature could be used for tessellation applications. Hardware supporting Microsoft's Shader Model 3.0 helped further. Tessellation in dedicated hardware returned in ATI's Xenos GPU for the Xbox 360 and in the Radeon R600 GPUs.

Hardware tessellation became mandatory only with Direct3D 11 and OpenGL 4. Among AMD products, tessellation as defined in those APIs is supported only by the TeraScale 2 (VLIW5) products introduced in 2009 and by GCN-based products (available from January 2012). In AMD's Graphics Core Next (GCN) architecture, tessellation is handled by the geometry processor.

When the Radeon 8500 came out, ATI's software group was going through a difficult management shakeup, and the drivers the company issued were buggy. To make matters worse, the company cheated on some benchmarks and reported higher scores than reviewers could attain. ATI also had problems with its Smoothvision antialiasing.

TruForm, the feature that should have propelled ATI to a leadership position, was lost due to mismanagement and lackluster marketing. The technology leadership ATI had built up through its development was wasted.

Read more articles in the Electronics History series: The Graphics Chip Chronicles.

About the Author

Jon Peddie

President

Dr. Jon Peddie heads up Tiburon, Calif.-based Jon Peddie Research. Peddie lectures at numerous conferences on topics pertaining to graphics technology and the emerging trends in digital media technology. He is the former president of Siggraph Pioneers, and is also the author of several books. Peddie was recently honored by the CAD Society with a lifetime achievement award. Peddie is a senior and lifetime member of IEEE (joined in 1963), and a former chair of the IEEE Super Computer Committee.