Single-Neuron-Based AI Reacts to the Human Voice

This article is part of the 2022 April 1st series in the Humor topic within our Series Library.

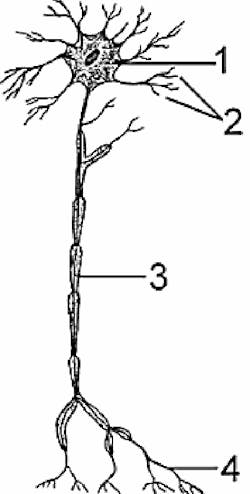

Artificial intelligence (AI), in the modern sense, is mostly about predicting and responding to input by using a lookup table of predefined responses. That’s all it was, until now. In a breakthrough, scientists from SHUT and Harvard universities have created a single artificial neuron that has shown an autonomous reaction to a human voice (Fig. 1).

A multinational team led by Ph.D. S. Goolie (Sapientia Hungarian University of Transylvania, or SHUT) and Ph.D. C.F.A. Twell (Harvard AI research labs) set out to recreate the entire human brain and “see what happens.” Goolie explains, “There’s something like 86 [billion] neurons in a human brain. Funding at the time was based on the number of neurons we created. I requested funding for 172 million [sic] neurons. We only got the budget for one. We set on to manufacture that one.”

Twell, on the creation of neurons, said, “The human brain is the most complex neural network in existence. The human brain is action-packed with neurons. So many, it’s mind… boggling. Starting with one was probably the best foot forward. A lot of work otherwise.” Many attempts returned varying results.

Some existed and seemed to power on for mere moments.

Twell continued, “Merriam-Webster defines a neuron… well, I’ll just read it here. ‘A grayish or reddish granular cell that is the fundamental functional unit of nervous tissue transmitting and receiving nerve impulses and having cytoplasmic processes which are highly differentiated frequently as multiple dendrites or usually as solitary axons which conduct impulses to and away from the cell body.’

“It was that grayish reddish color that was the key. Before, most things we made were blue. We hammered out a color agreement ahead of time. Blue was calming, but we were not in the right area of colors. Luckily, I had my trusty dictionary. Really pushed our research in the right direction.”

“The grayish reddish device powered on and stayed on. We were amazed,” said Goolie. The artificial neuron’s power consumption, on the other hand, was a bit more than “the parameters we were expecting,” continued Goolie. “The neuron consumed about 4338.808 joules per minute. Roughly the amount of calories in a whole chicken, we used to joke. It was a hungry neuron.”

Goolie paused momentarily, trying hard to contain his pride in the joke. “The real issue was getting an ascertainable result from a single neuron.”
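As a rough sanity check on the power figure Goolie quotes, the unit conversion can be sketched in a few lines of Python. The function names are made up for illustration, and the sketch assumes the thermochemical calorie (4.184 joules); it is not taken from the paper.

```python
# Hypothetical helper names; only the 4338.808 J/min figure comes from the article.
JOULES_PER_MINUTE = 4338.808   # consumption quoted by Goolie
JOULES_PER_CALORIE = 4.184     # thermochemical calorie (assumption)


def watts(joules_per_minute: float) -> float:
    """Convert an energy rate in joules per minute to watts (joules per second)."""
    return joules_per_minute / 60.0


def small_calories(joules: float) -> float:
    """Convert joules to small (gram) calories."""
    return joules / JOULES_PER_CALORIE


def kilocalories(joules: float) -> float:
    """Convert joules to kilocalories, the dietary 'Calorie' on food labels."""
    return small_calories(joules) / 1000.0


if __name__ == "__main__":
    print(f"Power draw: {watts(JOULES_PER_MINUTE):.2f} W")
    print(f"Per minute: {small_calories(JOULES_PER_MINUTE):.0f} cal")
    print(f"Per minute: {kilocalories(JOULES_PER_MINUTE):.3f} kcal")
```

Under that assumption, the quoted figure works out to roughly 72 watts of continuous draw, and 1037 small calories (about 1.037 kilocalories) per minute.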

From the research paper:

More than one node in a neural network is not enough to produce a parallel with the human brain. More neurons produce more results. We developed a method of allowing the single node to do more by creating something akin to a deep-neural-network (DNN) feedforward-single-neural (FFSN) network loop (NL), or DNNFFSNNL. Time could multiply the results via a temporal sequentialization of operations.

This device needed input and output. The team attached a microphone input to the DNNFFSNNL. Output was simply recording and digital output.
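One way to picture the “temporal sequentialization” the paper describes is a loop that reuses the same neuron several times per input sample, feeding its last output back in. The following is a minimal Python sketch under that assumption, not the team’s design: the `SingleNeuron` class, the weights, the tanh activation, the number of passes, and the hex formatting of the digital output are all hypothetical.

```python
import math


class SingleNeuron:
    """One tanh neuron with an input weight, a feedback weight, and a bias.

    All parameter values are arbitrary placeholders for illustration.
    """

    def __init__(self, w_in: float = 0.8, w_fb: float = 0.5, bias: float = 0.1):
        self.w_in = w_in
        self.w_fb = w_fb
        self.bias = bias

    def fire(self, sample: float, previous: float) -> float:
        """Combine the current input with the neuron's previous output."""
        return math.tanh(self.w_in * sample + self.w_fb * previous + self.bias)


def run_loop(neuron: SingleNeuron, samples, passes_per_sample: int = 4):
    """Reuse the one neuron several times per sample, one pass after another.

    Each pass stands in for a 'layer', so depth is traded for time.
    """
    state = 0.0
    outputs = []
    for sample in samples:
        for _ in range(passes_per_sample):
            state = neuron.fire(sample, state)
        outputs.append(state)
    return outputs


def to_hex(value: float) -> str:
    """Map an activation in [-1, 1] to a two-digit hexadecimal byte."""
    byte = round((value + 1.0) / 2.0 * 255)
    return f"{byte:02X}"


if __name__ == "__main__":
    # Stand-in for normalized microphone samples.
    microphone = [0.0, 0.3, -0.2, 0.9]
    for activation in run_loop(SingleNeuron(), microphone):
        print(to_hex(activation))
```

The design choice here is simply that one node plus a feedback path can be stepped repeatedly, so the number of passes plays the role that extra neurons would play in a larger network.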

Listening In

“The DNNFFF [sic] neuron was listening, I suppose,” said Goolie. “We tried several sources of input. Music, ambient sound. Human speech was what we discovered delivered the most reaction. Twell talking seemed to give the most response.”

Twell explained, “I was walking into the lab telling Goolie about a dream I had where I was being chased by zombies when the DNFFSFS [sic] single neuron returned a hexadecimal response to a screen. We saw that, and it was a jaw-drop moment for sure.”

Goolie continued, “It wasn’t just Twell’s voice, we hypothesized. I was talking about a kids’ birthday pool party I had to attend, and whether I wanted to wear a swimsuit or not. The results were the same. It was astonishing.”

Getting Robotic

Not getting anywhere with what the hexadecimal response meant, the team went on to give the system movement (Fig. 2): a robotic platform around the single neuron device.

Twell explained, “I looked at it like John Conway’s Game of Life. Provide input and get an evolving output. But what if we change the rules suddenly? Instead of text output, give it motion. In a rush of brains to the head, we put wheels on it.” The change in response was even more startling.

After applying a similar dream story about how he was “part of a superhero team without any discernible powers,” Twell recorded movement from the robotic platform (Fig. 3).

When we would speak, the robot would turn its back to the sound. “Perhaps in a way to stop hearing sound altogether, now that I think about it,” hypothesized Twell.

“I really think it hates Twell at this point,” confided Goolie privately. “Oh, I get it. If I heard another dream description about how he just ‘went to work and had a normal day,’ I’d want to do the same. I fully believe the neural network has developed a complete and utter disdain for Twell. I mean, if you heard a 15-minute dream description about ‘not finishing high school, and looking to get a dream GED,’ wouldn’t you feel the same way?”

Goolie concluded, “Speak only if it improves upon the silence, you know? Gandhi said that. You know what, I think I’m going to, uh, go,” he trailed off while walking out of the room.

From the research paper:

Spreading processing over a timespan allows the system to react to sound in real time. It’s akin to someone at a party switching between conversations, at the speed of light, but never escaping that one guest who won’t leave you alone. It’s the conclusion of this paper that the artificial neuron is trying to drop a hint. We concluded that the response was only one human emotion: disdain. Feeling dejected, both scientists leave the work with blinky, watery eyes.

It’s a bit of a wake-up call for us researchers and our social prowess. It can be pretty harsh when even a robot wants to spin itself out of power when you speak. Due to delicate feelings, half the research team has decided to smash the neuron. The other half was not available to make a final statement due to early departure for an extended vacation. What does this mean for AI? Like we care what AI thinks. (The paper was published in the “AI with Attitude” journal on April 1, 2022.)

If you got this far, it’s not a total loss. You can find something useful in my real 555 Timer book. Feel free to get two or more. Give as gifts or use as shims.

About the Author

Cabe Atwell

Technology Editor, Electronic Design

Engineer, Machinist, Maker, Writer. A graduate Electrical Engineer actively plying his expertise in the industry and at his company, Gunhead. When not designing/building, he creates a steady torrent of projects and content in the media world. Many of his projects and articles are online at element14 & SolidSmack, industry-focused work at EETimes & EDN, and offbeat articles at Make Magazine. Currently, you can find him hosting webinars and contributing to Electronic Design and Machine Design.

Cabe is an electrical engineer, design consultant and author with 25 years’ experience. His most recent book is “Essential 555 IC: Design, Configure, and Create Clever Circuits.”

Cabe writes the Engineering on Friday blog on Electronic Design.