What’s the Difference Between Analog and Digital Computing?


What you’ll learn:

- What is analog computing and how is it used?

- What is digital computing and how is it used?

- Why both methods provide promising results, depending on the application.

The unveiling of intelligent technologies in 2024 is already off to a hot start. With on-device artificial intelligence (AI), cloud AI, and AI inferencing moving to the forefront, engineers and designers are redefining what's possible every day. And let’s not forget the market potential.

At the 54th annual meeting of the World Economic Forum, Arvind Krishna, CEO of IBM, said that AI will generate over $4T of annual productivity by the decade’s end. Then you have IoT, which Statista estimates will reach 17.4B connected devices by the end of this year and almost 30B by 2030.

While these massive developments impact our lives, the compute methods that make them possible are often overlooked. AI and IoT expansion may be newer, but the two compute methods behind them, analog computing and digital computing, each have a long history and distinctly different use cases.

What is Analog Computing?

Analog computing has a long history. It uses continuous signals, such as electrical voltages and currents or even mechanical movement, to process information. The Antikythera mechanism, which dates back more than 2,000 years and predicted eclipses and other astronomical events, is often cited as the earliest known analog computer.

Analog computing represents information as physical quantities that vary over a continuous range of values, rather than as binary ones and zeros. For example, instead of using 32 digital wires to communicate a 32-bit value, analog computing can, in principle, use a single wire whose voltage varies over a continuous range to communicate the same value.
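Why "in principle"? A back-of-the-envelope sketch makes the trade-off concrete. It assumes a hypothetical 1-V full-scale signal with evenly spaced levels; the voltage range and the helper names (`level_spacing`, `encode`, `decode`) are illustrative, not from the article. A 32-bit value needs 2^32 distinguishable levels, so adjacent levels sit only about 0.23 nV apart, which is why practical analog designs store far fewer levels per wire or device (such as the 256 levels of 8-bit resolution discussed below).

```python
FULL_SCALE_V = 1.0  # assumed full-scale voltage on the single wire (hypothetical)

def level_spacing(bits: int, full_scale: float = FULL_SCALE_V) -> float:
    """Voltage step between adjacent levels when 2**bits levels span full_scale."""
    return full_scale / (2**bits - 1)

def encode(value: int, bits: int, full_scale: float = FULL_SCALE_V) -> float:
    """Map an unsigned integer to one voltage level on a single wire."""
    if not 0 <= value < 2**bits:
        raise ValueError("value out of range")
    return value * level_spacing(bits, full_scale)

def decode(voltage: float, bits: int, full_scale: float = FULL_SCALE_V) -> int:
    """Recover the integer by rounding to the nearest level."""
    return round(voltage / level_spacing(bits, full_scale))

for bits in (8, 32):
    print(f"{bits}-bit: {2**bits} levels, spacing {level_spacing(bits):.3e} V")
# 8-bit: 256 levels, spacing 3.922e-03 V
# 32-bit: 4294967296 levels, spacing 2.328e-10 V

# A value survives the round trip only while noise stays below half a level.
v = encode(200, 8)
print(decode(v + 0.001, 8))  # 200: 1 mV of noise is under the ~1.96 mV half-step
```

At 8 bits, a millivolt of noise is harmless; at 32 bits, the same noise would corrupt millions of levels' worth of the value.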

Fast forward to 2024, and analog computers now power high-performance and AI-capable applications. Because one continuous signal can carry the information of many bits, analog computing enables dense computing.

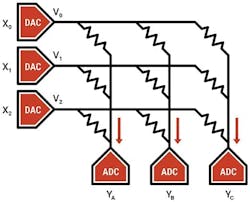

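For instance, flash memory is compact and can retain hundreds of distinct voltage levels in a single cell. If the flash-memory cells are used as tunable resistors, the inputs are supplied as voltages, and the outputs are collected as currents, the array performs vector-matrix multiplications. Each cell contributes a current equal to its conductance times its input voltage (Ohm's law), and the currents on a shared output line add together (Kirchhoff's current law), so each output current is the dot product of the input voltages with one column of stored conductances. The flash memory is also non-volatile, meaning it keeps the stored values even when the power to the chip is off. An example is Mythic’s approach of computing directly inside the memory array (see figure).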
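The two circuit laws above can be turned into a small numerical model. This is an idealized sketch, not Mythic's implementation: it assumes each cell's conductance is programmed to one of 256 levels (8 bits) between 0 and a hypothetical maximum `G_MAX`, and it ignores wire resistance, noise, and device nonlinearity. The names `program_cell` and `crossbar_currents` are illustrative.

```python
G_MAX = 1e-5   # assumed maximum cell conductance in siemens (hypothetical)
LEVELS = 256   # assumed 8-bit resolution per cell

def program_cell(weight: float) -> float:
    """Quantize a weight in [0, 1] to the nearest of LEVELS conductance steps."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be in [0, 1]")
    step = round(weight * (LEVELS - 1))
    return step * G_MAX / (LEVELS - 1)

def crossbar_currents(voltages, conductances):
    """Column currents I_j = sum_i G[i][j] * V[i] for an ideal crossbar.

    voltages: one input voltage per row.
    conductances: one list per row, one entry per output column.
    """
    currents = [0.0] * len(conductances[0])
    for v, row in zip(voltages, conductances):
        for j, g in enumerate(row):
            currents[j] += g * v  # Ohm's law per cell, summed per column (KCL)
    return currents

weights = [[0.0, 1.0],
           [0.5, 0.25],
           [1.0, 0.0]]
G = [[program_cell(w) for w in row] for row in weights]
V = [0.2, 0.1, 0.3]  # input activations encoded as voltages (volts)
I = crossbar_currents(V, G)
print(I)  # two column currents, each a weighted sum of the three inputs
```

In a real array, negative weights, the readout of the output currents, and device-to-device variation all need extra circuitry that this sketch leaves out.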

The Benefits of Analog Computing

With clever design, the signal density of analog computing translates into high energy efficiency, performance, and cost efficiency. For example, multiplying an 8-bit parameter, such as a neural-network weight, by an 8-bit input signal takes as few as one transistor in analog computing, while digital computing needs hundreds or thousands of transistors for the same operation. In the analog case, that same device also stores the parameter, so no separate memory is needed.
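To put rough numbers on the digital side, the sketch below builds an 8-bit by 8-bit unsigned array multiplier out of AND gates and full adders, checks that it produces correct products, and counts the gates it uses. The per-gate transistor counts (6 for a static CMOS AND gate, 28 for a mirror full adder, 6 for each SRAM bit that holds the weight) are common textbook figures used here as assumptions; production multipliers use other architectures, such as Booth encoding or Wallace trees, and their counts differ. Under these assumptions the total comes to about 2,000 transistors, consistent with the range above.

```python
# Assumed textbook transistor counts (illustrative, not from any specific chip)
T_AND = 6          # static CMOS NAND + inverter
T_FULL_ADDER = 28  # static CMOS mirror adder
T_SRAM_BIT = 6     # 6T SRAM cell holding one weight bit

def array_multiply(x: int, y: int, n: int = 8):
    """Multiply two n-bit unsigned ints with a ripple-carry array multiplier.

    Returns (product, gate_counts). Each partial-product bit is one AND gate;
    each row after the first is added into the running sum with n full adders.
    """
    counts = {"and": 0, "full_adder": 0}

    def and_gate(a, b):
        counts["and"] += 1
        return a & b

    def full_adder(a, b, cin):
        counts["full_adder"] += 1
        total = a ^ b ^ cin
        carry = (a & b) | (cin & (a ^ b))
        return total, carry

    xb = [(x >> j) & 1 for j in range(n)]
    yb = [(y >> i) & 1 for i in range(n)]
    acc = [0] * (2 * n)                # running sum, one bit per position

    for j in range(n):                 # first partial-product row needs no adder
        acc[j] = and_gate(xb[j], yb[0])
    for i in range(1, n):              # add each shifted partial-product row
        carry = 0
        for j in range(n):
            pp = and_gate(xb[j], yb[i])
            acc[i + j], carry = full_adder(acc[i + j], pp, carry)
        acc[i + n] = carry

    product = sum(bit << k for k, bit in enumerate(acc))
    return product, counts

product, counts = array_multiply(173, 91)
transistors = (counts["and"] * T_AND
               + counts["full_adder"] * T_FULL_ADDER
               + 8 * T_SRAM_BIT)       # separate storage for the 8-bit weight
print(product, counts, transistors)    # 15743 {'and': 64, 'full_adder': 56} 2000
```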

Combining flash memory and analog computing in this way is called analog compute-in-memory (CIM). It offers very high density (20X lower cost), ultra-low power (10X lower than digital), and performance that rivals the compute of high-end GPUs.

Memory bandwidth and density have become critically important in recent years, pushing companies like NVIDIA to include expensive High Bandwidth Memory (HBM) in their latest systems. Analog CIM addresses this bottleneck by computing directly in the memory array, so the stored weights never have to be moved to a separate processor; the result is very high effective memory bandwidth at significantly lower cost.
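A quick calculation shows why moving weights becomes the bottleneck. If a processor keeps its weights in external memory and must read every weight once per inference (an assumption; caching and batching reduce this in practice), the required bandwidth is the parameter count times the bytes per weight times the inference rate. The model sizes and rates below are hypothetical examples, not figures from the article.

```python
def weight_bandwidth_gbps(params: float, bits_per_weight: int,
                          inferences_per_s: float) -> float:
    """GB/s needed to stream every weight once per inference (1 GB = 1e9 bytes)."""
    bytes_per_inference = params * bits_per_weight / 8
    return bytes_per_inference * inferences_per_s / 1e9

# Hypothetical workloads
print(weight_bandwidth_gbps(25e6, 8, 30))  # 0.75  (25M-parameter vision model, 30 frames/s)
print(weight_bandwidth_gbps(7e9, 8, 20))   # 140.0 (7B-parameter language model, 20 tokens/s)
```

For models whose weights fit inside an analog CIM array, the weights stay in the cells that compute with them, so this per-inference traffic is avoided.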

What is Digital Computing?

John Vincent Atanasoff and Clifford E. Berry designed what is often considered the first electronic digital computer, the Atanasoff-Berry Computer (ABC), in 1942. The machine read two linear equations at a time, each with up to 29 variables and a constant, and combined them to eliminate one of the variables. By repeating this step, it eliminated the variables one at a time until the system of equations could be solved.
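The procedure described above is the core step of Gaussian elimination. The sketch below applies it in Python: `eliminate` combines two equations so that one variable cancels, and `solve` repeats that step and then back-substitutes. It uses exact rational arithmetic from the standard `fractions` module and illustrates the algorithm only; it is not a model of how the ABC's hardware carried it out.

```python
from fractions import Fraction

def eliminate(eq_a, eq_b, k):
    """Combine two equations so that the coefficient of variable k cancels.

    Each equation is [c_0, ..., c_{n-1}, constant], meaning
    c_0*x_0 + ... + c_{n-1}*x_{n-1} = constant.
    """
    fa, fb = eq_a[k], eq_b[k]
    return [fa * b - fb * a for a, b in zip(eq_a, eq_b)]

def solve(equations):
    """Solve a square linear system by pairwise elimination and back-substitution."""
    n = len(equations)
    rows = [[Fraction(v) for v in eq] for eq in equations]
    for k in range(n):
        # Pick a row whose coefficient for x_k is nonzero to eliminate with.
        pivot = next((i for i in range(k, n) if rows[i][k] != 0), None)
        if pivot is None:
            raise ValueError("system is singular")
        rows[k], rows[pivot] = rows[pivot], rows[k]
        # Pair the pivot row with every later row to drop x_k from it.
        for i in range(k + 1, n):
            rows[i] = eliminate(rows[k], rows[i], k)
    # Back-substitute from the last variable to the first.
    x = [Fraction(0)] * n
    for k in reversed(range(n)):
        rest = sum(rows[k][j] * x[j] for j in range(k + 1, n))
        x[k] = (rows[k][n] - rest) / rows[k][k]
    return x

system = [[1, 1, 1, 6],     # x + y + z = 6
          [0, 2, 5, -4],    # 2y + 5z = -4
          [2, 5, -1, 27]]   # 2x + 5y - z = 27
print(solve(system))  # [Fraction(5, 1), Fraction(3, 1), Fraction(-2, 1)]
```

Because every operation is exact, running `solve` on the same system always returns the same answer, which previews the repeatability discussed below.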

At its core, digital computing processes information in discrete form, operating on data expressed in binary code using 0s and 1s. Digital circuits still use physical signals such as voltages, but each signal only has to be recognized as a 0 or a 1, so the result does not depend on the signal's exact value as long as it stays on the correct side of a threshold.
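The threshold idea fits in a few lines. This sketch assumes hypothetical logic levels of 0 V and 1 V, a decision threshold of 0.5 V, and uniform random noise of up to 0.2 V added to each signal; the values and function names are illustrative. The digital reading recovers every bit exactly, while an analog reading of the same voltages carries the noise straight into the result.

```python
import random

THRESHOLD_V = 0.5   # assumed decision threshold between logic 0 (0 V) and 1 (1 V)
NOISE_V = 0.2       # assumed worst-case noise amplitude, inside the 0.5 V margin

def transmit(levels, rng):
    """Add bounded uniform noise to each ideal voltage level."""
    return [v + rng.uniform(-NOISE_V, NOISE_V) for v in levels]

def read_digital(voltages):
    """Snap each voltage to 0 or 1 by comparing it with the threshold."""
    return [1 if v > THRESHOLD_V else 0 for v in voltages]

bits = [1, 0, 1, 1, 0, 0, 1, 0]
rng = random.Random(42)          # fixed seed so the example is reproducible
received = transmit([float(b) for b in bits], rng)

print(read_digital(received) == bits)                    # True: bits recovered exactly
print(max(abs(r - b) for r, b in zip(received, bits)))   # analog error, nonzero, at most 0.2 V
```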

From bulky computers and mainframe servers to sleek laptops, distributed servers, smartphones, cameras, and watches, digital computing is no stranger to change. The critical turning point was the invention of the microchip, which packed entire circuits into small, coin-size components and made far smaller computers possible. As chips have advanced, so have digital devices, becoming ubiquitous and changing how society operates and interacts.

The Benefits of Digital Computing

Digital computing is repeatable: given the same inputs, it produces exactly the same answer every time it runs. This makes it critical for applications like spreadsheets and databases, whose results must be exact.

Digital computing is also highly programmable, meaning that it can run a wide variety of sequential operations. This is critical for general-purpose processors like the primary application processor in laptops, desktops, and cell phones, since they must be able to support programs written for many different types of applications.

The Verdict for Both Computing Methods

Choosing analog over digital or vice versa is application-dependent. If a system runs a vast variety of applications, it will need to be a reprogrammable digital system. Systems that require exact results, such as those running spreadsheets, must be digital.

If a system runs predictive applications, like AI, signal processing, recommendation engines, or even predicting the stock market, then it could be beneficial to deploy analog computing. These workloads can tolerate the small numerical errors that analog circuits introduce, so designers can build highly efficient, low-power, and low-cost systems for them.

The best analog systems add digital control and processing to make the system reprogrammable, enabling designers to get the best of both worlds.
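A minimal sketch of that division of labor, under stated assumptions: a noisy analog stage computes the dot products, a hypothetical 8-bit analog-to-digital converter (ADC) with a 4-V full scale digitizes them, and digital code picks the predicted class. The noise level, ADC range, weights, and function names are illustrative values, not a description of any real product. The small analog error leaves the prediction unchanged, and because the weights are just data, loading new ones reprograms the system.

```python
import random

def analog_mac(inputs, weights, noise, rng):
    """Analog stage: one dot product per class, each with additive Gaussian noise."""
    return [sum(x * w for x, w in zip(inputs, row)) + rng.gauss(0.0, noise)
            for row in weights]

def adc(values, bits=8, full_scale=4.0):
    """Quantize each value to an unsigned code in [0, 2**bits - 1], clipping at the ends."""
    top = 2**bits - 1
    return [min(top, max(0, round(v / full_scale * top))) for v in values]

def predict(inputs, weights, noise=0.0, seed=0):
    """Hybrid pipeline: analog MAC, then ADC, then digital argmax."""
    rng = random.Random(seed)
    codes = adc(analog_mac(inputs, weights, noise, rng))
    return max(range(len(codes)), key=codes.__getitem__)

# Hypothetical 3-class model: one row of weights per class
weights = [[0.9, 0.1, 0.2, 0.8],
           [0.1, 0.7, 0.9, 0.2],
           [0.3, 0.3, 0.3, 0.3]]
x = [1.0, 0.2, 0.1, 0.9]

exact = predict(x, weights)                                   # noise-free reference
noisy = [predict(x, weights, noise=0.02, seed=s) for s in range(5)]
print(exact, noisy)  # 0 [0, 0, 0, 0, 0]
```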

About the Author

Dave Fick

CEO and Co-founder, Mythic

Dave Fick leads Mythic to bring groundbreaking analog computing to the AI inference market. With a PhD in Computer Science and Engineering from the University of Michigan, he brings a wealth of knowledge and expertise to the team. Dave co-founded Mythic with Mike Henry.