Reprinted with permission from Evaluation Engineering

With all of the talk around the development of more intelligent autonomous and assisted-driving systems for the next generation of vehicles, it is easy to overlook the equally vital need for advanced sensing solutions that provide the required level of awareness. To operate properly in the real world, a smart vehicle, with or without passengers or cargo, must be able to identify its surroundings and navigate within them (Fig. 1).

ACEINNA, one company aggressively pursuing sensing solutions based on high-precision MEMS-based IMU technologies, creates a wide range of cost-effective, easily integrated autonomous navigation solutions for markets such as automotive, industrial, consumer appliances, agriculture, and construction. We spoke with Reem Malik, the company’s Business Development Manager, and James Fennelly, Product Marketing Manager, about the company’s efforts in the sensing space.

EE: When we start talking about autonomous vehicles, there's a perception and a reality and a gray area in between, wouldn't you say?

Reem Malik: Yeah, for sure. There's a lot of hype around certain areas, but maybe not a lot of insight or knowledge among the general public.

EE: Even if you think about it in general terms... Let's say, for example, you're an engineer who isn't working directly in the space. You may be able to recognize the state of the art when you see it, but you may not know what the state of the art actually is, because of the mixture of vaporware, real product promises, and bravado that's out there.

Reem Malik: That's true, and the way I see it, the term autonomous vehicle, or autonomy, is huge. It's very general, and there are so many aspects both to the technology and to actually making it a reality in different stages. It's not zero to 10 in one go.

EE: So, to a degree, you have to recognize that there are multiple levels within the definition of an autonomous vehicle?

Reem Malik: Yes, and there are so many players in the ecosystem and so many different parts that have to come together to make something work. I think it's only natural that people who are focused on one specific area may not see the whole picture, and that would be the case for us as well. We have a broad general view, but a very specific focus, so it's always interesting to get exposure to other aspects of the ecosystem.

EE: In our case, we're basically going to be talking about sensors, sensor suites, and sensor integration, which, if you think about it, is itself a huge market within that application space.

Reem Malik: When I think about it, I start at a high level. Why autonomy or autonomous vehicles? Why does anybody care about it? There are a few big drivers. One, of course, is safety, whether we're talking about automotive or mobility applications or industrial applications for agriculture, construction, et cetera. Safety means preventing accidents and saving lives.

The other aspect is, of course, cost, efficiency, and productivity: using automation and autonomy to improve all of those, which matter to businesses in any realm. When it comes to safety, knowing where you are and what's around you in 3D space requires a whole lot of different sensors that have to come together in an intelligent and coherent way.

EE: What are some of the challenges involved right now? We've got sensors for pretty much every environmental parameter you care to mention, so I'd guess making them work together in harmony is the biggest issue. Or are there other issues as well?

Reem Malik: At the level of a company like ACEINNA, our specialty is really high-performance inertial sensors and IMUs, which measure motion and help with machine control. Our focus is to provide the best performance and reliability across all operating and environmental conditions, and then to make that sensor easy to integrate into the broader system, building in enough intelligence and usability to make it easy for the system integrator to use.

EE: Isn't sensor fusion the magic word out there right now?

Reem Malik: It is a magic word, and it's not an easy thing to do, because you're combining multiple sensor inputs in an intelligent way. We do that internally in our products, where we may have multiple IMU sensors, or add a GPS input or a steering-angle input. You have to integrate all of that in the sensor fusion algorithm, which in our case is an Extended Kalman Filter. We do that at the level of our product. Then, when that product is put into a system, the system developer may have to integrate it into their own broader architecture. They may be including other inputs and doing another level of sensor fusion on top of that.
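To make "combining multiple sensor inputs in an intelligent way" concrete, the simplest case is fusing two independent estimates of the same quantity by weighting each one by the inverse of its variance. That is exactly what a one-dimensional Kalman filter measurement update does. The sketch below is illustrative only: the function name `fuse` and the numbers are hypothetical, and it is not ACEINNA's algorithm.

```python
def fuse(x1: float, var1: float, x2: float, var2: float) -> tuple[float, float]:
    """Combine two independent, unbiased estimates of the same quantity.

    Each estimate is weighted by the inverse of its variance, which is the
    scalar form of a Kalman filter measurement update.
    """
    w1 = 1.0 / var1
    w2 = 1.0 / var2
    fused_var = 1.0 / (w1 + w2)            # never larger than either input variance
    fused_x = fused_var * (w1 * x1 + w2 * x2)
    return fused_x, fused_var


# Hypothetical example: two heading estimates in degrees, variances in deg^2.
heading, variance = fuse(10.0, 4.0, 12.0, 4.0)
print(heading, variance)
```

With equal variances the result is the plain average (11.0) with half the variance (2.0); a noisier input automatically gets less weight.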

EE: Are the biggest challenges in the handoff between the capabilities you've baked in and the capabilities they're trying to build into the larger system? Or, once the capabilities have been baked into the individual sensor, is it easier to build that system?

Reem Malik: That's a good way to talk about it, and I'll give an example, because there are different levels of complexity. If we're trying to make everybody's job a little bit easier, you split that up a little. Our core competency is, as I mentioned, high-performance IMUs that are stable and reliable over temperature and all environmental conditions.

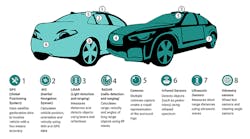

For an inertial navigation system, you need a really good IMU that you combine with data from your GPS or GNSS system. The GPS gives you an idea of where you are on the globe. The IMU measures your motion and movement, so you can track your position and location. That, in itself, is one form of sensor fusion. On their own, GPS and an IMU together can give you a couple of meters of accuracy (Fig. 2).

If you're on a highway, you generally know which road you're on, but you may not know which lane you're in, or which part of the lane. So then we add another technology called RTK, or real-time kinematics, which is based on ground-based reference stations that send you corrections for the errors and ambiguities in your GPS data. If you're able to integrate and process that data appropriately, you can get down to less than 10 centimeters of accuracy. Suddenly, you know exactly where you are, including your lane, even without any additional sensors.
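The core idea behind a reference station can be sketched with a simplified differential correction. A station at a surveyed position compares its known coordinates with what its receiver reports, and a nearby rover subtracts the same error. This is a deliberate simplification, assuming the base and rover see identical errors and working directly on positions. Real RTK works on carrier-phase measurements and must resolve integer ambiguities, which is far more involved. The function names are hypothetical.

```python
Vec = tuple[float, float]  # (east, north) in metres, local frame


def differential_correction(base_true: Vec, base_measured: Vec) -> Vec:
    """Correction computed at a base station whose true position is surveyed."""
    return (base_true[0] - base_measured[0], base_true[1] - base_measured[1])


def apply_correction(rover_measured: Vec, correction: Vec) -> Vec:
    """Rover removes the error it is assumed to share with the base station."""
    return (rover_measured[0] + correction[0], rover_measured[1] + correction[1])


# Hypothetical numbers: both receivers are off by (+1.5 m east, -0.5 m north).
corr = differential_correction((0.0, 0.0), (1.5, -0.5))
print(apply_correction((101.5, 49.5), corr))
```

The corrected rover position here is (100.0, 50.0). The correction only helps to the extent that the error really is common to both receivers, which is why corrections from a nearby station are more useful.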

But stepping back to that question of sensor fusion: I don't know the exact figures, but the filter for an IMU plus GPS might be on the order of a four-stage Kalman filter, for example. When you add RTK, that increases the complexity of the algorithm by a factor of two or three, and it takes a lot of investment and development to build that out correctly. The result is what we call a positioning engine.

An OEM or a system developer could spend a year or several years developing that kind of positioning engine, whereas we've already developed it, drawing on our background and expertise in the area, and embedded it into some of our products. That takes a load off the system integrator, who can focus on other aspects of their design.

EE: Are there different levels of awareness I would want? For example, for a human-safe system I'd obviously want as much awareness as humanly possible, but is there a minimum awareness level for, say, an unmanned autonomous vehicle? Or, once I have a good IMU and my other sensors, do I already know everything I need to know, regardless of the application?

Reem Malik: Definitely, I think there are different levels of awareness and accuracy needed for different applications or scenarios. It depends on where you are, where you're going to and coming back from, what's surrounding you, and what kind of maneuvers you have to make. That could go anywhere, so I think you have to be prepared for all levels of awareness. But say you're in an agricultural environment where you can plan out your route ahead of time, you know what the route is, you can practice that route, and there's some level of predictability around it. Then maybe the level of awareness you need is slightly lower, because you already know. James, what do you think?

James Fennelly: I'll just add a couple of comments. It really depends on the mission, the vehicle, and what you're trying to do. For example, a car is going to have LIDAR, forward-looking radar, and cameras that help with all the cues you get from a road, like lane markers, stop signs, and things like that.

That's very different from the sensor suite you might see on a drone, which doesn't have lane markers and doesn't need to look for oncoming cars, generate trajectories, or look for people. And both are very different from an agricultural or construction environment.

Where there aren't those lane cues, cameras looking for lane markers are useless. A lot depends on the mission of the autonomous vehicle and the environment it's expected to work in. Part of our solution that seems to be resonating well with the engineering community is the inertial measurement units we sell as part of our product portfolio, which rely on physics alone (Fig. 3).

They measure rotational rate, and they measure acceleration due to both gravity and motion. There are IMUs out there that you could take off with, fly across the country, and land, or even fly around the world, but their ring laser gyros are so cost-prohibitive that they only put them on satellites.

Reem Malik: Or fighter aircraft and such.

James Fennelly: Yeah, so it's not practical to deploy them in an automobile, on an excavator, on a wheel loader, or on a delivery robot. Over the last five to 10 years, the industry has really been driving up the performance of lower-cost sensors. Or, depending on how you look at it, it's been driving the higher performance needed for autonomous operation down to a cost that's acceptable for wider adoption in the marketplace. As I was alluding to, the technology exists: you can make anything autonomous and have it never hit a person, never hit a wall, and go anywhere it wants to go, but it's just impractical from a cost perspective.

One of the biggest engineering challenges for wide adoption of autonomous vehicles is getting to a cost point where all these sensors aren't cost-prohibitive, and different companies, even in the automotive space, take different tacks on how to achieve it. Tesla has been a notorious detractor of LIDAR and a big promoter of using just cameras, radar, and things like that.

But in any of these systems, there needs to be some type of IMU, because all these sensors have overlapping strengths and weaknesses, and they can all fail under certain conditions. The thing that doesn't fail is physics. If you can measure rate and acceleration, you can use simple math to calculate velocity, distance, heading, and so on. From our perspective, a big part of the sensor fusion is that we can take information from the GPS and the RTK, which we've already integrated, to correct for errors that accumulate through the integration of the rate and acceleration signals. Our sensor fusion, our Extended Kalman Filter, uses the information available to it to correct for the bias errors of the rate sensor as they drift over time, and to correct for the integration errors I was alluding to.
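The "simple math" can be sketched as planar dead reckoning. This is a minimal illustration, assuming a vehicle moving in 2D with a single yaw-rate gyro and a forward accelerometer, integrated with plain Euler steps. A real strapdown INS works in 3D with full attitude and gravity compensation. The function name `dead_reckon` and the 0.001 rad/s gyro bias are hypothetical. The example also shows why bias correction matters: an uncorrected bias becomes a steadily growing heading error, and from that a lateral drift.

```python
import math


def dead_reckon(yaw_rates, accels, dt, heading0=0.0, speed0=0.0):
    """Integrate yaw rate (rad/s) and forward acceleration (m/s^2) into a 2D track.

    Euler integration: heading from yaw rate, speed from acceleration,
    then position from speed along the current heading.
    Returns (x, y, heading, speed) after the last sample.
    """
    x = y = 0.0
    heading, speed = heading0, speed0
    for omega, a in zip(yaw_rates, accels):
        heading += omega * dt
        speed += a * dt
        x += speed * math.cos(heading) * dt
        y += speed * math.sin(heading) * dt
    return x, y, heading, speed


n, dt = 1000, 0.01  # 10 s of samples at 100 Hz, driving straight at 20 m/s
_, y_ideal, _, _ = dead_reckon([0.0] * n, [0.0] * n, dt, speed0=20.0)
# Same straight drive, but the gyro reports a hypothetical constant 0.001 rad/s bias.
_, y_biased, _, _ = dead_reckon([0.001] * n, [0.0] * n, dt, speed0=20.0)
print(f"lateral drift: ideal {y_ideal:.3f} m, with gyro bias {y_biased:.3f} m")
```

After only 10 seconds, the tiny bias has produced about 1 m of lateral error. This is the kind of drift that GNSS and RTK updates let the filter estimate and remove.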

When you integrate random noise, you intuitively think, "Well, it's all going to average to zero," but in reality it doesn't. It's a phenomenon called angle random walk, and a good analogy is standing in the middle of a football field and flipping a quarter a thousand times, taking a step toward one end zone on heads and toward the other on tails. You would think that you would end up back at the 50-yard line. But in reality, if you do this a bunch of times, you may end up in one end zone one time and in the other end zone some other time.

That's angle random walk, a phenomenon associated with integrating a random process. If you have random noise, the amount of noise determines the step size you take each time you flip the coin. You do want to try to minimize your noise level, but you'll never make it go away; there's always noise there. So, if the GPS says my velocity is really X meters per second, but my integration is telling me it's X plus some error meters per second, then I can correct for that.
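The coin-flip analogy is easy to check numerically. The sketch below simulates many 1,000-flip walks and reports the root-mean-square distance from the starting point. For a simple random walk this grows like the step size times the square root of the number of steps, not toward zero. Angle random walk is the same effect when white gyro noise is integrated into heading. The function names, seed, and trial count are arbitrary choices for illustration.

```python
import math
import random


def random_walk_endpoint(n_flips: int, step: float, rng: random.Random) -> float:
    """Position after n_flips coin flips: +step on heads, -step on tails."""
    return sum(step if rng.random() < 0.5 else -step for _ in range(n_flips))


def rms_endpoint(n_flips: int, step: float, trials: int, seed: int = 0) -> float:
    """Root-mean-square final distance from the start over many repeated walks."""
    rng = random.Random(seed)
    ends = [random_walk_endpoint(n_flips, step, rng) for _ in range(trials)]
    return math.sqrt(sum(e * e for e in ends) / trials)


rms_unit = rms_endpoint(1000, 1.0, 1000)
rms_half = rms_endpoint(1000, 0.5, 1000)  # same flips (same seed), half the step size
print(f"RMS distance from the 50-yard line: {rms_unit:.1f} steps "
      f"(sqrt(1000) = {math.sqrt(1000):.1f})")
print(f"Same flips, half the step size: {rms_half:.1f} steps")
```

Halving the step size, which corresponds to halving the noise level, halves the spread but never removes it. That is why an external reference such as GNSS velocity is needed to bound the error.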

Reem Malik: The redundancy of information is one of the positive flip sides to sensor fusion.

James Fennelly: Oh, sure! Each sensor has its own weak spot, and by overlapping them, you cover those weak spots. If you lose a sensor, you have other information to draw on to remain in a safe condition.

EE: On the nuts-and-bolts side, why don't we talk about one of your latest solutions and put it into perspective with regard to this conversation and the application? In other words, walk us through how that device helps you get your sensor fusion dialed in, your information dialed in, your safety, and all of that.

James Fennelly: I was thinking of the OpenRTK 330 LI, with its integrated inertial measurement unit, as an example.

Reem Malik: I can start talking about this a little bit. The OpenRTK 330 is a surface-mount module we have developed that integrates a high-performance dual-band GNSS receiver, basically tracking all the constellations. We've also integrated a triple-redundant IMU, so we're fusing 18 axes of motion measurement. That provides redundancy and fault tolerance, and it also improves the precision of the motion sensing (Fig. 4).
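Three six-axis IMUs give the 18 measurements mentioned here. One generic way to get fault tolerance from them is per-axis median voting: with three units, the median on each axis ignores a single faulty reading, and any unit that disagrees strongly with the median can be flagged. The interview does not describe how the OpenRTK 330 actually manages its redundant IMUs, so treat this only as an illustration. The names `vote` and `Sample`, the single threshold, and the sample values are all hypothetical.

```python
import statistics

# One IMU sample: gyro x/y/z (rad/s) followed by accelerometer x/y/z (m/s^2).
Sample = tuple[float, float, float, float, float, float]


def vote(samples: list[Sample], threshold: float) -> tuple[list[float], list[int]]:
    """Per-axis median of redundant IMU samples, plus indices of suspect IMUs.

    With three IMUs the median on every axis tolerates one faulty unit.
    A unit is flagged if any of its axes deviates from the median by more than
    `threshold` (a single hypothetical threshold; real systems would use
    separate limits for gyro and accelerometer axes).
    """
    fused = [statistics.median(axis) for axis in zip(*samples)]
    suspects = [
        i for i, s in enumerate(samples)
        if any(abs(v - m) > threshold for v, m in zip(s, fused))
    ]
    return fused, suspects


imus = [
    (0.01, 0.0, 0.10, 0.0, 0.0, 9.81),
    (0.02, 0.0, 0.11, 0.0, 0.0, 9.80),
    (0.01, 0.0, 5.00, 0.0, 0.0, 9.81),  # third unit's z gyro is stuck high
]
fused, suspects = vote(imus, threshold=0.5)
print(fused, suspects)
```

The stuck z gyro on the third unit does not reach the fused output, and that unit is reported as a suspect (index 2).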

We also have a microcontroller in there, where we've embedded our high-performance positioning engine, which fuses all of that data. You can also input data from other sensors, such as a wheel-tick or encoder sensor. You can correlate GNSS and your IMU data with external sensors, like your steering angle or wheel sensors, and add an RTK engine on top of that, where we receive RTK corrections and output a final position, velocity, and time solution.

James Fennelly: At a high level, we have this GNSS chip embedded in there. As Reem was saying, you can get a few meters of accuracy with regular standard GPS. Most of the time it's better, but at a high confidence level it's actually worse, and you have to design to the high-confidence figure. So it's a few meters of accuracy. Inside, we have this RTK positioning engine. We get RTCM corrections, which correct for ionospheric errors, and the RTK actually measures the carrier signal. There's a lot of information out there on how it does that, even on Wikipedia, but you get down to centimeter-level precision. That's happening at about a 1 Hz rate, maybe a 10 Hz rate, so there's a gap in the knowledge of a hundred milliseconds to one second. If you're traveling 60 miles per hour, that's a big distance.
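A quick calculation shows how big that distance is. At 60 mph (exactly 26.8224 m/s, since 1 mile is 1,609.344 m), the short sketch below computes how far the vehicle travels between position fixes at several update rates. The function name is illustrative.

```python
MPH_TO_MPS = 1609.344 / 3600.0  # 1 mile = 1609.344 m exactly


def distance_during_gap(speed_mph: float, gap_s: float) -> float:
    """Metres travelled at constant speed during a gap between position fixes."""
    return speed_mph * MPH_TO_MPS * gap_s


for rate_hz in (1.0, 10.0, 100.0):
    gap = 1.0 / rate_hz
    print(f"{rate_hz:>5.0f} Hz updates -> {distance_during_gap(60.0, gap):.2f} m between fixes")
```

That works out to roughly 26.8 m between 1 Hz fixes, 2.7 m at 10 Hz, and about 0.27 m at 100 Hz. This is why filling the gaps at a higher rate matters at highway speed.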

You have this cheap GPS that gets you 1 Hz data, maybe 10 Hz data. What are you doing in the time in between? Our sensor fusion algorithm uses the information from these 18 axes of motion sensing to bring that up to a 100 Hz data rate. So we're filling in the gaps, or rather, making the gaps between GNSS updates much smaller, so that you have much more confidence in where you are throughout each GNSS gap. Then, if you think about it, you can take it even a step further.
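Gap filling can be sketched with a one-dimensional linear Kalman filter, the linear special case of the Extended Kalman Filter approach described in this interview. In this sketch, IMU acceleration drives the prediction step at 100 Hz, and a GNSS position fix corrects the state once per second. The state includes an accelerometer bias, so the filter can learn and remove the bias, as James describes for the rate sensor. Everything here is a hypothetical illustration, not ACEINNA's positioning engine: the class and function names, the noise levels, the 0.05 m/s² bias, and the constant-acceleration trajectory are all assumed.

```python
import random


def matmul(a, b):
    """Plain-Python matrix product (lists of rows)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


class PosVelBiasKF:
    """1D Kalman filter with state [position, velocity, accelerometer bias]."""

    def __init__(self, accel_sigma, bias_sigma, gnss_sigma):
        self.x = [0.0, 0.0, 0.0]
        self.P = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.1]]
        self.qa = accel_sigma ** 2  # per-sample accelerometer noise variance
        self.qb = bias_sigma ** 2   # bias random-walk variance per second
        self.r = gnss_sigma ** 2    # GNSS position noise variance

    def predict(self, accel_meas, dt):
        """Propagate the state at the IMU rate using bias-compensated acceleration."""
        p, v, b = self.x
        a = accel_meas - b
        self.x = [p + v * dt + 0.5 * a * dt * dt, v + a * dt, b]
        F = [[1.0, dt, -0.5 * dt * dt], [0.0, 1.0, -dt], [0.0, 0.0, 1.0]]
        g = [0.5 * dt * dt, dt, 0.0]  # how accelerometer noise enters the state
        P = matmul(matmul(F, self.P), transpose(F))
        for i in range(3):
            for j in range(3):
                P[i][j] += g[i] * g[j] * self.qa
        P[2][2] += self.qb * dt
        self.P = P

    def update(self, gnss_pos):
        """Correct with a GNSS position fix (measurement matrix H = [1, 0, 0])."""
        s = self.P[0][0] + self.r
        k = [self.P[i][0] / s for i in range(3)]
        innov = gnss_pos - self.x[0]
        self.x = [xi + ki * innov for xi, ki in zip(self.x, k)]
        self.P = [[self.P[i][j] - k[i] * self.P[0][j] for j in range(3)] for i in range(3)]


def simulate(seconds=30, imu_hz=100, gnss_hz=1, seed=1):
    """Return (fused error, IMU-only error, estimated bias, number of fused estimates)."""
    rng = random.Random(seed)
    dt = 1.0 / imu_hz
    a_true, true_bias, accel_sigma, gnss_sigma = 0.2, 0.05, 0.1, 1.0  # hypothetical
    kf = PosVelBiasKF(accel_sigma, 1e-4, gnss_sigma)
    p_true = v_true = p_dr = v_dr = 0.0
    estimates = 0
    for step in range(1, seconds * imu_hz + 1):
        p_true += v_true * dt + 0.5 * a_true * dt * dt
        v_true += a_true * dt
        a_meas = a_true + true_bias + rng.gauss(0.0, accel_sigma)
        p_dr += v_dr * dt + 0.5 * a_meas * dt * dt  # IMU-only integration for comparison
        v_dr += a_meas * dt
        kf.predict(a_meas, dt)
        if step % (imu_hz // gnss_hz) == 0:  # a GNSS fix arrives once per second
            kf.update(p_true + rng.gauss(0.0, gnss_sigma))
        estimates += 1  # one fused output per IMU sample
    return abs(kf.x[0] - p_true), abs(p_dr - p_true), kf.x[2], estimates


kf_err, dr_err, bias_est, n_est = simulate()
print(f"fused error {kf_err:.2f} m, IMU-only error {dr_err:.2f} m, "
      f"bias estimate {bias_est:.3f} m/s^2, {n_est} estimates in 30 s")
```

The filter produces an estimate at every IMU sample, 100 per second, while only 30 GNSS fixes arrive. Integrating the biased accelerometer alone drifts by tens of meters over 30 s in this setup. The filter, by contrast, stays close to the truth because each fix also refines its bias estimate.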

Now I go into a tunnel, and it's going to take me 30 seconds to traverse it. I've lost my GNSS and my RTK. When I entered the tunnel, I knew precisely where I was, but now I need to navigate through to the other end. Part of that is using the information from the IMU to do what we call dead reckoning, continually updating the position, velocity, and heading information during that GNSS outage. The better the performance of your IMU, the longer it takes before you're out of your lane, or before you could potentially be out of it.
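A back-of-the-envelope model shows why a better IMU buys more time in a tunnel. Assume the only uncorrected error during the outage is a constant residual accelerometer bias b. The position error then grows as ½·b·t², so the time to use up a lateral budget e is t = √(2e/b). Real outages also involve heading errors from gyro bias and other terms. The 0.5 m budget and the two bias values below are hypothetical.

```python
import math


def time_to_drift(lateral_budget_m: float, accel_bias: float) -> float:
    """Seconds until a constant accelerometer bias (m/s^2) integrates into the budget.

    Position error from a constant bias b is 0.5 * b * t**2,
    so t = sqrt(2 * budget / b).
    """
    return math.sqrt(2.0 * lateral_budget_m / accel_bias)


LANE_MARGIN_M = 0.5  # hypothetical lateral error budget before leaving the lane
for bias in (0.01, 0.001):  # hypothetical residual biases, m/s^2
    print(f"bias {bias} m/s^2 -> about {time_to_drift(LANE_MARGIN_M, bias):.0f} s")
```

The time scales with 1/√b. In this model, a 10x smaller bias stretches the time from about 10 s to about 32 s, enough for the 30-second tunnel in this hypothetical example.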

In automobiles with Level 4 or Level 5 ADAS, it's not just our IMU doing that. They also have cameras looking at the lane and radar looking forward. That's a level of fusion above us: we provide our fused data, updating the position, velocity, and heading based on the last GNSS fix, and we pass that information to the system level, which then does a further level of integration with the cameras and the other sensors, such as the ultrasonic sensors and the forward-looking LIDAR, to bring it all together into a whole solution.

Reem Malik: What James is talking about is right on, and the other aspect he mentioned earlier is the cost of providing a system like this. INS with RTK has been used for this purpose, and those kinds of products have been used in construction and agriculture for many years. RTK is not new, but it's been specific to certain segments, where you have a base station: a farmer will buy and install a base station, then communicate the RTK corrections to the equipment or rovers doing the work in the field.

With the emergence and proliferation of autonomy, there is a need for very high-accuracy, very high-precision positioning and localization capabilities. The cost of RTK services is coming down, and that's part of building out the infrastructure for autonomy and autonomous vehicles. And with the OpenRTK, we've been able to maintain a certain level of performance while making it viable in terms of cost for higher-volume applications.

EE: Before I turn off the recorder, are there any other issues regarding the solution, the situation, or the application that you feel the audience should know about?

Reem Malik: Another component of this is, as I mentioned, the RTK service, and there are a few different players. There are free, government-sponsored RTK networks you can plug into. But going back to what's important in this space at a high level, there's safety, productivity, reliability, and, as you mentioned, ease of use. If you purchase an OpenRTK evaluation kit, it comes with a 30-day free trial of OpenARC, our RTK network, which plugs in and connects seamlessly. All the documentation is available, and you can be up and running within 10 or 15 minutes. Try it out.