Game of Drones Returns: Welcome to HoverGames 2

NXP’s HoverGames is now in its second iteration. The developer kit in the competition centers around the RDDRONE-FMUK66 flight management unit (FMU), which contains a Kinetis K66 SoC based on a Cortex-M4F. It’s designed to run the open-source PX4 flight management software (FMS). This system has a good bit of headroom, but a bit more horsepower is needed to apply machine learning (ML) to sensors like cameras or 3D image sensors. Quite a few of the entries in the initial competition paired the FMU with platforms like a Raspberry Pi.

HoverGames 2 takes the competition to the next level by standardizing on the NavQ stack (Fig. 1). The stack includes three boards, starting with the i.MX 8M Mini system-on-module (SOM). The middle board adds an SD card, Ethernet networking, a MIPI-CSI camera interface, and a MIPI-DSI display interface. The last board provides interfaces for drones or rovers that follow the PX4 standard, like the NXP FMU.

The i.MX 8M Mini includes a quad-core Cortex-A53 and a Cortex-M4. The SOM adds 2 GB of LPDDR4 DRAM, 16 GB of eMMC flash memory, and 32 MB of QSPI flash. There’s a PCIe M.2 interface as well. The SOM also supports Wi-Fi 802.11ac and Bluetooth 4.1.

The SOM takes the platform to the next level with ML support and the ability to run operating systems like Linux. ML models from frameworks like TensorFlow, Caffe, and PyTorch can take advantage of hardware acceleration, enabling real-time processing of sensor data as well as supporting other robotic chores like mapping and situational awareness.

In theory, the NavQ could run the PX4 FMS, but the FMU already has all of the sensors built in. The only sensor directly connected to the NavQ is the camera.

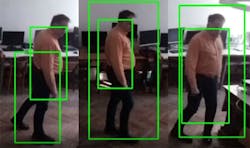

The winner of HoverGames 1 was Dobrea Dan Marius’s Autonomous Human Detector Drone. It added a Raspberry Pi 3 Model B+ to the mix along with a camera. OpenCV was used to identify people by processing the video stream from the on-board camera in real time (Fig. 2). The system was designed as a flying warning and risk-assessment tool. It could be used for search and rescue, as well as to help locate and track people in areas where there are fires. A secondary part of the project was to develop and test an ultrasonic obstacle-avoidance system.
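To give a feel for what person detection on a companion computer can look like, here is a minimal Python sketch. It is not the winning entry's code, and the detection method that project used isn't described here. The sketch assumes OpenCV's built-in HOG pedestrian detector, a camera at index 0, and a hypothetical score threshold of 0.5. The function names `keep_confident` and `detect_people` are made up for illustration.

```python
from typing import Iterable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # x, y, width, height in pixels


def keep_confident(boxes: Iterable[Sequence[int]],
                   weights: Iterable[float],
                   min_weight: float = 0.5) -> List[Box]:
    """Keep only detections whose score is at least min_weight.

    min_weight is an assumed, illustrative threshold; tune it for a real camera.
    """
    return [
        tuple(int(v) for v in box)
        for box, weight in zip(boxes, weights)
        if float(weight) >= min_weight
    ]


def detect_people(camera_index: int = 0, max_frames: int = 100) -> None:
    """Read frames from a camera and print the people found in each one."""
    # Imported here so keep_confident() can be used without OpenCV installed.
    import cv2
    import numpy as np

    # OpenCV ships a pretrained HOG + linear SVM pedestrian detector.
    hog = cv2.HOGDescriptor()
    hog.setSVMDetector(cv2.HOGDescriptor_getDefaultPeopleDetector())

    capture = cv2.VideoCapture(camera_index)
    try:
        for _ in range(max_frames):
            ok, frame = capture.read()
            if not ok:
                break  # camera unavailable or stream ended
            # A smaller frame keeps the per-frame cost down on a small board.
            frame = cv2.resize(frame, (640, 480))
            boxes, weights = hog.detectMultiScale(frame, winStride=(8, 8))
            # Flatten the scores so each one is a plain float.
            scores = np.asarray(weights, dtype=float).ravel()
            people = keep_confident(boxes, scores)
            print(f"{len(people)} person(s) detected: {people}")
    finally:
        capture.release()
```

Calling `detect_people()` on a board with OpenCV installed and a camera attached prints the bounding boxes found in each frame. A real drone application would also need to feed the detections to whatever alerting or tracking logic it uses.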

There were quite a few entries for the first HoverGames; you can find those online. Most, like the Human Detector Drone, have open-source code and schematics so that you can build on and extend the work done by the participants. I was impressed by the various tips and tricks that highlight design and implementation issues to help others, especially those participating in HoverGames 2.

The output from science and engineering competitions continues to amaze me. The combination of new hardware and sensors with machine-learning software is changing what’s possible.