Machine Learning on the Path to Focused Adoption

This article is part of TechXchange: AI on the Edge

What you’ll learn

- Who is developing machine-learning solutions?

- What types of data are being analyzed?

- What types of deployment platforms are popular?

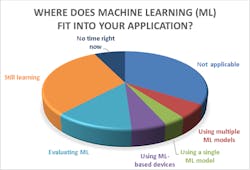

Machine learning (ML) is a hot topic, but many engineers are still climbing the learning curve or evaluating whether ML fits their application. We recently surveyed Electronic Design readers and found that a majority of respondents had concluded that ML isn't applicable to their application (Fig. 1). The number of developers using artificial intelligence (AI) to enhance their products, or to make them practical at all, is small but growing.

High-volume applications have driven wider adoption. Examples include natural-language processing in the cloud for platforms like Amazon Alexa and Apple's Siri, as well as automotive advanced driver-assistance systems (ADAS). The payoff is significant, and accelerated ML platforms are part of the design.

In the cloud, processing audio and video data in parallel makes excellent use of available resources. Platforms such as smart NICs, which serve cloud computing, make it possible for some ML models to run on the FPGAs in these adapters.

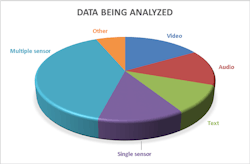

What I found most interesting was that most applications using ML employ multiple sensors (Fig. 2). Then again, this should not be much of a surprise, as correlating and analyzing complex datasets is one area where deep neural networks (DNNs) excel when properly trained.

The emphasis on data streams like audio, video, and text/language processing should not come as a surprise either. These were among the first areas addressed by research, and numerous ML models can be trained and customized for a particular application. Building a new model is much more difficult, but it can pay off in the long run.

Software-Based AI

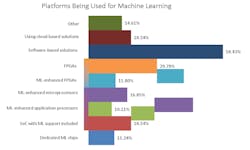

Another notable result was the dominance of software-based AI solutions (Fig. 3). All platforms employ software somewhere to run ML models, but this question asked about the platform on which the models run. It also allowed multiple answers, so the software could be running on ML-enhanced processors as well.

Still, the use of software-based solutions without hardware acceleration indicates that many applications don't require additional hardware. We do know that many useful models can run on standard microcontrollers. The trick is matching the software to the application and running it on a hardware platform that provides enough performance to make the application work. This is no different from the challenge engineers and programmers face on any project, since unlimited memory, computational power, and communication bandwidth are never an option.
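To illustrate why a model can fit on a standard microcontroller, here is a minimal sketch of one int8-quantized fully connected layer with a ReLU activation, written in portable C with integer arithmetic only. The function names, the row-major weight layout, and the power-of-two rescaling via `shift` are hypothetical choices for illustration; they do not come from the survey or from any particular vendor library.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: saturate a 32-bit accumulator to the int8 range. */
static int8_t clamp_int8(int32_t v) {
    if (v > 127) return 127;
    if (v < -128) return -128;
    return (int8_t)v;
}

/* Hypothetical int8 dense layer with ReLU:
 *   out[j] = clamp(ReLU(bias[j] + sum_i in[i] * w[j * n_in + i]) >> shift)
 * Weights are stored row-major, one row of n_in weights per output.
 * Only integer multiply-accumulate is used, so no FPU or ML accelerator
 * is required. */
void dense_relu_int8(const int8_t *in, size_t n_in,
                     const int8_t *w, const int32_t *bias,
                     int8_t *out, size_t n_out, int shift) {
    for (size_t j = 0; j < n_out; j++) {
        int32_t acc = bias[j];
        for (size_t i = 0; i < n_in; i++) {
            acc += (int32_t)in[i] * (int32_t)w[j * n_in + i];
        }
        /* Apply ReLU before shifting so the shift never touches a
         * negative value (right-shifting negatives is
         * implementation-defined in C). */
        if (acc < 0) acc = 0;
        acc >>= shift;
        out[j] = clamp_int8(acc);
    }
}

/* Hypothetical helper: index of the largest output, e.g. a class label. */
size_t argmax_int8(const int8_t *v, size_t n) {
    size_t best = 0;
    for (size_t i = 1; i < n; i++) {
        if (v[i] > v[best]) best = i;
    }
    return best;
}
```

A real deployment would chain several such layers using weights exported from a trained model, typically through a vendor or open-source inference library. The sketch only shows that the core arithmetic is modest enough for an ordinary MCU.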

The survey also showed that only a small percentage of developers rely on the cloud. This implies that most are working on standalone ML applications. It also suggests that the hardware and software AI options available to developers are sufficient to incorporate ML models into their applications.

Types of Tools

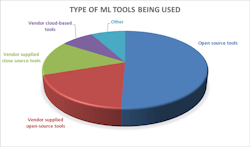

Finally, we asked about the tools developers were using (Fig. 4). I probably should have included a question about their use of development kits like those we’ve been highlighting in our Kit Close-Up video series.

Suffice it to say, every major chip vendor has an array of kits, reference designs, and tools that cater to AI applications. The main differences among them are the depth and breadth of support and the number of app notes and applications included with the kits. In general, that support is substantial and growing. It mirrors the rise of software support for processing hardware over the past couple of decades, but it has happened much faster.

Open-source software is the driving force behind this growth. The wide availability of tools shows how much sharing them boosts adoption. The array of open-source software supplied by vendors is also significant. The amount of closed-source software is smaller, but vendors often provide both types, with the closed-source support supplying the "secret sauce." These days, there's a lot of sauce to go around, since the models are key to an application's success.

Despite the rapid adoption of AI/ML, the technology is still in its infancy. That's not to say it isn't ready for prime time, but the pace of change and improvement is substantial. Tools, platforms, and applications have changed considerably over time, and they continue to change as improvements and new approaches are added to the mix.

Likewise, our understanding of where AI/ML can come into play is still growing. Unlike tools such as ray tracing for graphics, whose uses are well understood, the applicability, suitability, and practicality of AI/ML techniques for a particular application aren't necessarily obvious.

AI/ML isn't a magic bullet, and it can't address every aspect of computing. We still need to code the bulk of most applications in C or other programming languages. Nonetheless, AI/ML is simplifying many aspects of these applications and can make new features practical.
