My Hands-On Trial Run with Jetson AGX Orin

What you’ll learn

- What’s up with the Jetson AGX Orin Development Kit.

- What is JupyterLab and what does it have to do with Jetson AGX Orin?

- Why Bill Wong doesn’t follow directions.

OK, I’ll start with the last bulleted item above. I would have written this review earlier if I’d been able to get things running sooner. However, because I didn’t read the directions and do things in order, I had to go back and forth with tech support to figure out why I couldn’t run the demos. I might be forgiven, since the system comes up right out of the box running Ubuntu, as noted in my initial Kit Close-Up video.

Once I installed the JetPack SDK and DeepStream software, I was able to quickly run through all of the demos and benchmarks. I’ll skip the benchmark results since you can find system specs easily and they, of course, agree with what the hardware spits out. The benchmarks were one of the few things that actually made the fan run enough to be noticed. They pushed the hardware to its limits, which wasn’t the case with most of the demos.

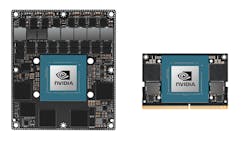

The development kit is a complete system that has the Jetson AGX Orin module at its core (Fig. 1). The extra memory and flash storage meant I didn’t worry about adding an NVMe M.2 card as I’ve done with past Jetson platforms. Adding one may be useful for more demanding applications. The figure also shows the smaller Jetson Orin module, which has less memory and performance than its big brother. It works for lower-cost, lower-weight applications that can get by with a little less compute power.

Assuming you don’t make my mistake and jump ahead, you could finish testing the system in an afternoon.

Software Support

To fully take advantage of the system, you will need to learn about JupyterLab. It’s possible to use all the libraries, etc., without it, but most of the demos and support are delivered using JupyterLab (Fig. 2). It’s a web-based, notebook-style interactive system that’s been adopted by a number of AI developers and platforms.

The system is very nice—it can run things like command line scripts and present the results in the same browser window as the commands. It interacts well with other open-source platforms like Docker and Kubernetes, which is important for NVIDIA, as these are used both in the cloud and on platforms like Jetson AGX Orin. It includes some of the demos. There’s also a multiuser version called JupyterHub.

I haven’t delved deeply into JupyterLab at this point, but you can run the scripts in a block of code by simply typing Control-Enter while the cursor is in the block. Likewise, a bar to the left can cause the block to be expanded or collapsed, which is handy as some results can be pages long.
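As a rough illustration of that workflow, here is a minimal sketch of what a Python notebook cell that runs a command-line tool might look like. The helper name `run_command` is my own and not part of any NVIDIA demo, and `uname -a` is just a stand-in for whatever script you want to run. Pressing Control-Enter in the cell should show the output directly beneath it.

```python
import subprocess


def run_command(args):
    """Run a command (given as a list of arguments) and return its stdout as text."""
    result = subprocess.run(args, capture_output=True, text=True, check=True)
    return result.stdout


# In a notebook cell, the printed text appears directly below the cell.
if __name__ == "__main__":
    print(run_command(["uname", "-a"]))
```

Jupyter’s Python kernel also accepts a `!` prefix for one-off shell commands (for example, `!uname -a`), which is shorter but less convenient if you want to capture and process the output in Python.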

TAO: Train, Adapt, and Optimize

Just for the record, my mistake in setup was not getting DeepStream installed properly. Once I did, I was able to check out the pretrained models as well as use the TAO (train, adapt, and optimize) support. This runs containers either in the cloud or on the system to train or utilize a trained model. The example showing off the trained model on the Jetson AGX Orin was able to identify multiple people moving in multiple video streams (Fig. 3).

Getting all of it up and running was just a matter of running through the JupyterLab notebook for the various demos. The platform seemed to have plenty of headroom; the Linux load and heat load (based on fan operation) were minor. I’m not sure how to check the load on the GPU or the AI accelerators, but I suspect that was low as well. This would mean that a single chip could handle half a dozen cameras on a car without much trouble, leaving room for more analysis of the video streams beyond basic people identification.
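One possible way to check GPU load is the `tegrastats` utility that ships with Jetson Linux, which prints a status line at a fixed interval. The sketch below is untested on this kit and rests on two assumptions: that the output includes a `GR3D_FREQ <n>%` field for GPU utilization, and that the `--interval` option takes milliseconds. The function names are my own, and the sketch does not cover the deep-learning accelerators.

```python
import re
import subprocess

# Assumption: tegrastats reports GPU utilization as "GR3D_FREQ <n>%".
GR3D_PATTERN = re.compile(r"GR3D_FREQ (\d+)%")


def parse_gpu_load(line):
    """Return the GPU load percentage from one tegrastats line, or None if absent."""
    match = GR3D_PATTERN.search(line)
    return int(match.group(1)) if match else None


def sample_gpu_load(samples=5, interval_ms=1000):
    """Read `samples` lines from tegrastats and return the GPU loads found.

    Must be run on a Jetson; tegrastats is not available elsewhere.
    """
    proc = subprocess.Popen(
        ["tegrastats", "--interval", str(interval_ms)],
        stdout=subprocess.PIPE,
        text=True,
    )
    readings = []
    try:
        # zip() stops after `samples` lines, so the loop always terminates.
        for _, line in zip(range(samples), proc.stdout):
            load = parse_gpu_load(line)
            if load is not None:
                readings.append(load)
    finally:
        proc.terminate()
        proc.wait()
    return readings
```

Calling `sample_gpu_load()` while a demo is running should give a handful of percentages to compare against the idle case. Running `tegrastats` directly in a terminal shows the same information without any parsing.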

Of course, it had no trouble picking me out (Fig. 4). I didn’t have a crowd handy or multiple cameras, but I don’t doubt that the system would work just as well in those cases since I was able to feed it different video files.

Riva Speech Analysis

No pretty pictures are available for the Riva demonstration since it’s audio in nature. Likewise, seeing the gibberish I tried to give it is just a matter of looking at the transcript of my utterings, which the Jetson AGX Orin and Riva software had no trouble analyzing and presenting back to me.

The Riva SDK is designed for building speech applications. Model improvements alone have boosted performance by more than a factor of 10. It matches or exceeds the automatic speech recognition (ASR) and text-to-speech (TTS) support available in the cloud. I only used English, but pretrained models for other languages are available.

The TAO support mentioned earlier was shown on video streams, but the same approach works for other platforms like Riva. Likewise, it uses NVIDIA TensorRT optimizations, and it can be served using the NVIDIA Triton Inference Server if cloud solutions are more in line with what you want. For me, the standalone support of Jetson AGX Orin is more interesting. It could handle multiple audio streams, as in a car with multiple people and multiple microphones.

Follow On

While the demos and benchmarks quickly rolled off the system, moving into using things like the Riva SDK takes a good deal more work, especially at the program level. The reason simply centers on the large number of interfaces and options, as well as dealing with TAO, etc. This doesn’t even include the underlying technology like TensorRT, cuDNN, or CUDA.

Still, this is the area where NVIDIA excels: the documentation and library support are available and quite good. Likewise, most of the software spans the platforms from the lower-end Jetson modules through the company’s high-end enterprise systems. These typically power the cloud that’s involved with cloud-based training.

Compared to the first Jetson platform I used, this one ranks so far above it in terms of quality and out-of-the-box support that I’m left amazed.