Embedded Devices and Software Offer End-to-End Solutions for Edge AI


Artificial intelligence (AI) dominates business news these days as companies across a wide range of industries seek to leverage the technology to boost functionality and control costs. While generative text and image AI tools tend to reside in cloud-based data centers, AI applications are also making their way into consumer, automotive, and industrial products, where AI-capable microcontroller units (MCUs) operate at the network edge.


Running AI Models Near the Data Source

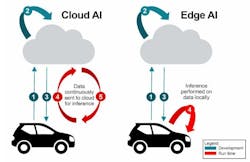

Edge AI—the ability to run AI models near the source of the data—enhances responsiveness and security by keeping sensitive data local, and it removes the telecommunications link to the cloud as a potential point of failure. Figure 1 illustrates key differences between cloud and edge AI.

Both are likely to use the cloud for development, with data moving from the edge to the cloud (step 1), processing occurring in the cloud (step 2), and results returning to the edge (step 3). But during run time, cloud AI (left) requires that data continue to be sent to the cloud (step 4), where the inference processing occurs, with results returned to the edge (step 5). For run-time operation with edge AI (right), though, inference is performed locally, with no need for a cloud connection.

Edge AI is enabled by new embedded processors with integrated components such as neural processing units (NPUs), including members of Texas Instruments’ C2000 family of real-time MCUs. Figure 2 shows a C2000 in an edge AI-enabled real-time control system. The C2000 provides power-factor-correction (PFC) control and motor-drive control while its NPU performs fault detection based on electrical and mechanical data. The depicted system, for example, could predict an imminent bearing failure by analyzing vibration, sound, and temperature information.

Edge AI Demos

TI offers a series of video demonstrations describing a variety of edge AI use cases, ranging from perception to real-time monitoring and control, that you can adapt to your needs.

Perception

In the perception category, the company highlights a defect-detection application. It identifies incorrectly manufactured parts on a conveyor belt and provides live statistics regarding total products produced, the production rate, and the defect percentage. It also produces a histogram indicating defect types.
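The statistics listed above can be tallied with very little code once a model has labeled each part. The following is a minimal Python sketch, not TI's implementation: the `DefectStats` class, its method names, and the defect labels are hypothetical, and the classifier output is assumed to arrive as either `None` (good part) or a defect-type string.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class DefectStats:
    """Running statistics for an inspection line (hypothetical helper)."""
    total: int = 0
    defects: Counter = field(default_factory=Counter)

    def record(self, defect_type=None):
        """Log one inspected part; defect_type is None for a good part."""
        self.total += 1
        if defect_type is not None:
            self.defects[defect_type] += 1

    def defect_percentage(self):
        """Share of inspected parts that were flagged, in percent."""
        if self.total == 0:
            return 0.0
        return 100.0 * sum(self.defects.values()) / self.total

    def production_rate(self, elapsed_seconds):
        """Parts per minute over the elapsed window."""
        if elapsed_seconds <= 0:
            return 0.0
        return 60.0 * self.total / elapsed_seconds

    def histogram(self):
        """Defect counts by type, most frequent first."""
        return self.defects.most_common()


# Example: eight parts inspected in two minutes, three of them defective.
stats = DefectStats()
for label in [None, "scratch", None, "dent", "scratch", None, None, None]:
    stats.record(label)
print(stats.total, stats.defect_percentage(), stats.production_rate(120))
print(stats.histogram())
```

With the sample labels shown, the sketch reports 8 parts, a 37.5% defect rate, and 4.0 parts per minute, with "scratch" as the most common defect type.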

Also in the perception category is a people-tracking application, which provides insights into the total number of visitors, current occupancy, and average visit duration. It generates a heat map indicating frequently visited areas.
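As an illustration of how such insights might be derived from tracker output, here is a short Python sketch. It assumes, hypothetically, that the tracker emits one `(enter_s, exit_s)` pair per visitor (with `exit_s` set to `None` while the person is still present) and a stream of `(x, y)` floor positions in meters; the function names and the grid cell size are illustrative only.

```python
from collections import Counter


def summarize_visits(visits):
    """Summarize (enter_s, exit_s) pairs; exit_s is None if still inside."""
    completed = [leave - enter for enter, leave in visits if leave is not None]
    return {
        "total_visitors": len(visits),
        "current_occupancy": sum(1 for _, leave in visits if leave is None),
        "average_visit_s": sum(completed) / len(completed) if completed else 0.0,
    }


def heat_map(positions, cell_size_m=1.0):
    """Count tracked (x, y) positions per square grid cell."""
    cells = Counter()
    for x, y in positions:
        cells[(int(x // cell_size_m), int(y // cell_size_m))] += 1
    return cells


# Example: two finished visits (60 s and 120 s) and one visitor still inside.
print(summarize_visits([(0, 60), (10, 130), (50, None)]))
print(heat_map([(0.2, 0.3), (0.8, 0.9), (2.5, 1.1)]))
```

Cells with the highest counts correspond to the frequently visited areas that a heat map would highlight.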

Still other perception applications include a smart retail scanner for codeless food scanning and a human-vs.-non-human detector that can minimize false detections for automatic door openers. To help you get started on such applications, the company offers a starter kit and software-development kit based on its Sitara family of low-power processors.

Monitoring and Control

In the monitoring and control category, TI addresses arc fault detection in solar-energy systems. The company offers a reference design for the reliable detection of arcs with better than 99% accuracy in accordance with UL 1699B, “Photovoltaic (PV) DC Arc-Fault Circuit Protection.”

The design (Fig. 3) describes an analog front-end (AFE) that feeds an AI model running on a C2000 device to detect arcing frequencies. The design also supports the collection and labeling of arcing data for AI model training.
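The reference design's actual signal chain and model inputs are not described here. As a rough sketch of one way digitized AFE samples could be turned into frequency-band features for a classifier, the Python below uses the Goertzel algorithm, a standard method for measuring signal power at a single frequency. The function names, sample rate, and probe frequencies are assumptions chosen for illustration, not values from the TI design.

```python
import math


def goertzel_power(samples, sample_rate_hz, target_hz):
    """Squared DFT magnitude at the bin nearest target_hz (Goertzel algorithm)."""
    n = len(samples)
    k = round(n * target_hz / sample_rate_hz)  # nearest DFT bin index
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def band_features(samples, sample_rate_hz, probe_frequencies_hz):
    """Feature vector of per-frequency powers that a model could consume."""
    return [goertzel_power(samples, sample_rate_hz, f) for f in probe_frequencies_hz]


# Example with hypothetical values: a 1-kHz test tone sampled at 8 kHz.
tone = [math.sin(2.0 * math.pi * 1000 * i / 8000) for i in range(64)]
features = band_features(tone, 8000, [1000, 2000])
print(features)  # large power at 1 kHz, near zero at 2 kHz
```

Goertzel is attractive on small MCUs because it needs only a few multiply-accumulate operations per sample for each frequency of interest, rather than a full FFT.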

Furthermore, TI offers a demonstration of a Bluetooth channel-sounding application. Bluetooth channel sounding improves distance measurement by combining phase-based ranging with round-trip time-of-flight measurement of modulated data packets, which are randomized to enhance security.

Bluetooth channel sounding finds use in automotive, personal electronics, and industrial smart-access and asset-tracking applications. For example, it can accurately measure the distance from a key fob to a vehicle, precisely track movement of products in shipping and receiving departments, and help consumers locate lost personal electronics. It operates in a one-to-one topology where one device is the initiator and the other a reflector.
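The physics behind both ranging methods reduces to two short formulas, sketched in Python below. This is a simplified textbook model, not TI's algorithm: it ignores multipath, clock offsets, and calibration, and the function and parameter names are illustrative. For round-trip timing, the initiator-to-reflector distance is half the flight time multiplied by the speed of light. For two-tone phase-based ranging, a round-trip phase difference Δφ between tones spaced Δf apart gives d = cΔφ / (4πΔf).

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def distance_from_rtt(round_trip_s, reply_delay_s=0.0):
    """One-way distance from round-trip time, minus the reflector's turnaround delay."""
    return SPEED_OF_LIGHT_M_S * (round_trip_s - reply_delay_s) / 2.0


def distance_from_phase(phase_diff_rad, freq_step_hz):
    """Two-tone phase-based ranging: d = c * dphi / (4 * pi * df)."""
    return SPEED_OF_LIGHT_M_S * phase_diff_rad / (4.0 * math.pi * freq_step_hz)


def max_unambiguous_distance(freq_step_hz):
    """Range at which the round-trip phase difference wraps past 2*pi."""
    return SPEED_OF_LIGHT_M_S / (2.0 * freq_step_hz)


# Example: 100 ns of round-trip flight time, and a pi-radian phase
# difference between tones 1 MHz apart.
print(distance_from_rtt(100e-9))            # about 15 m
print(distance_from_phase(math.pi, 1e6))    # about 75 m
print(max_unambiguous_distance(1e6))        # about 150 m
```

Under this model, phase measurements offer fine resolution but wrap around at a distance set by the tone spacing, while round-trip timing does not wrap, which is one reason the two measurements complement each other.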

TI provides out-of-the-box algorithms to enable the quick testing and customization of Bluetooth channel-sounding designs based on its CC27xx 2.4-GHz wireless MCUs. They integrate an algorithm processing unit that leverages machine learning to efficiently enhance line-of-sight and non-line-of-sight performance.

TI also addresses audio with edge AI, having implemented a wake and command application on its CC2755 wireless MCU. The application makes use of partner Sensory Inc.’s TrulyHandsfree library and VoiceHub online portal for the development of wake-word models and voice-control command sets.

To run the models, the CC2755 includes a custom data-path extension (CDE) for machine-learning acceleration and an algorithm processing unit (APU) for math and vector operations. TI reports that the application uses only 40% of the device’s processing bandwidth for wake-word recognition and only 27% for command recognition.

Software-Development Tools

You can choose from a variety of software-development options to train, compile, and evaluate models for deployment at the edge. TI’s Edge AI Studio offers a collection of graphical and command-line tools. The Model Selector tool, for instance, supports vision applications and helps you find a model from TI’s Model Zoo that meets your performance and accuracy goals.

There’s also Model Analyzer, a free online service that allows you to evaluate edge AI vision applications on remotely accessed development boards. Model Composer is a fully integrated tool for collecting and annotating data as well as training and compiling models for deployment on a development platform. Finally, Model Maker is an end-to-end command-line model-development tool that lets you work with your own models.

Conclusion

To effectively deploy AI at the edge, you can use AI-capable MCUs running models ranging from prewritten ones to those you have developed and trained yourself. TI offers a full lineup of processors with AI-capable features such as NPUs and APUs, and it provides evaluation modules and flexible software support to help meet your performance requirements.
