NXP Eyes AI at the Edge with New MCU Families

This article is part of our embedded world 2022 coverage.

NXP hopes to bring artificial intelligence (AI) to low-power embedded and edge devices with a new family of 32-bit Arm Cortex-M MCUs that for the first time contain a custom neural processing unit (NPU).

The flagship MCU in the company’s new MCX portfolio uses the NPU to run machine-learning workloads 30X faster than a CPU alone, bringing AI out of the cloud and into consumer and industrial IoT devices.

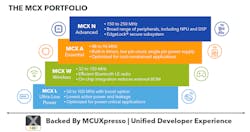

NXP said the Cortex-M33 MCUs span several families, each covering a different base within the world of real-time embedded systems, giving engineers more options to select the component that works for them. These include the high-performance, highly secure MCX "N" family, the low-cost MCX "A" lineup, the low-power wireless connectivity MCX W, and the ultra-low-power MCX L series for battery-powered devices.

The MCX series is supported by NXP's popular MCUXpresso suite of development tools and software. The company said the unified software suite helps maximize software reuse to speed up development.

NXP stressed that the MCX portfolio is not a replacement for the more than 1,000 SKUs across its LPC and Kinetis 32-bit MCU families, and it plans to continue to supply and support them going forward.

"With the MCX portfolio, we're selecting the best LPC and Kinetis peripherals and architectures, bringing them together in next-generation MCUs," said CK Phua, product manager of microcontrollers at NXP.

Flexibility in Focus

At the hardware level, all edge-computing devices are alike. But every device is unique in its own way.

Today, engineers must navigate a complex landscape of performance requirements, as well as wired and wireless connectivity options, while balancing security, power efficiency, and costs of the system.

“This is not a one-size-fits-all situation,” said Phua. Ideally, engineers would be able to overhaul the underlying hardware as market needs change without having to overhaul the software that runs on top of it.

To bring more flexibility to the table, NXP is not simply adding more SKUs to its MCU portfolio. "We are entering a new era of edge computing, requiring us to fundamentally rethink how to best architect a flexible MCU portfolio that is scalable, optimized, and can be the foundation for energy-efficient industrial and IoT edge applications today and in the decades to come," said Ron Martino, VP and GM of edge processing.

With each 40-nm MCU in the family sharing the same CPU architecture, based on Arm’s Cortex-M33, NXP is trying to make it as easy as possible to scale up or down to different devices and maximize software reuse.

The MCX MCUs feature up to 4 MB of on-chip flash memory, energy-efficient cache, and advanced memory-management controllers, plus up to 1 MB of on-chip SRAM with error-correction code (ECC) to improve real-time performance.

The MCX range is based on NXP’s security-by-design approach, offering a secure boot with an immutable root-of-trust, hardware-accelerated cryptography, and, on some products, its EdgeLock secure subsystem. Flexible interfaces and intelligent peripherals give developers more design flexibility.

Dial "N" For NPU

The flagship MCX “N” family features the same Cortex-M33 CPU as the other MCUs, giving it what NXP said is a best-in-class combination of performance and power efficiency to run real-time intelligent edge devices.

The MCX N has many of the same building blocks that helped put the company’s 32-bit MCUs, which have shipped in the billions of units to date, on the map. But it also contains the first NXP-designed NPU. The NPU runs machine learning on the device itself instead of in the cloud, avoiding the round-trip latency that real-time edge devices cannot tolerate.

The MCX N features the same Cortex-M33 CPU as other family members, clocked at 150 to 250 MHz. It is supplemented with a range of other peripherals, including a digital-signal-processing (DSP) subsystem.

NXP is placing a bigger bet on the NPU in the MCX N family because it believes more embedded and edge devices powered by 32-bit MCUs will take advantage of machine learning in the coming years.

This is reflected by its decision to keep development of the machine-learning engine in-house instead of buying the blueprints for Arm's Ethos NPUs. NXP first signaled its ambitions around on-device AI in 2018.

Bringing chip development in-house also gives it more control over the future roadmap for the NPU and the flexibility to fine-tune it for customers. NXP said the NPU is scalable from the 32 operations per cycle in the NPU at the heart of the MCX N family to over 2,000 operations a cycle. Future expansion is possible. NXP plans to plug the NPU into other parts of its embedded portfolio, including its i.MX RT and i.MX lineups.

“The NPU's architecture will be scalable,” Phua said. “We will cover every CPU architecture within NXP, but all with a single architecture for the NPU."

The NPU inside the MCX N can pump out up to 8 billion operations per second at 250 MHz, a fraction of the performance of the AI engines designed by the likes of Apple, MediaTek, and Qualcomm for mobile phones.
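The 8-billion figure is consistent with the 32 operations per cycle that NXP quoted for the MCX N's NPU. As a rough sketch, assuming peak throughput is simply operations per cycle multiplied by clock frequency (the function name below is hypothetical, and the 150-MHz result is an illustrative extrapolation rather than a number NXP has published):

```python
def npu_peak_ops_per_second(ops_per_cycle: int, clock_hz: float) -> float:
    """Theoretical peak throughput: operations per cycle times clock rate.

    Assumption: the NPU sustains its full per-cycle rate at any clock,
    which ignores memory bandwidth and utilization limits.
    """
    return ops_per_cycle * clock_hz


OPS_PER_CYCLE = 32  # figure NXP quoted for the NPU in the MCX N

peak_250 = npu_peak_ops_per_second(OPS_PER_CYCLE, 250e6)  # top clock speed
peak_150 = npu_peak_ops_per_second(OPS_PER_CYCLE, 150e6)  # low end of the range

print(f"{peak_250 / 1e9:.1f} billion ops/s at 250 MHz")
print(f"{peak_150 / 1e9:.1f} billion ops/s at 150 MHz")
```

Real workloads will generally land below these peaks, since they depend on how well a model keeps the NPU fed with data.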

NXP said the NPU in the MCX portfolio will bring a performance boost to space- and power-constrained IoT devices that run on 32-bit MCUs. This opens the door to functions such as classifying objects in video and images and identifying keywords in audio. Predictive maintenance in factories is also possible, said NXP.

Machine learning will be supported by NXP’s eIQ ML software development tool kit. Developers can rely on the easy-to-use tools to train models and deploy them on the NPU or CPU, according to the company.

From Point "A" to Point "N"

On the other side of the portfolio is the MCX A family for embedded devices where cost and time to market are the priority. Designed to be easy to use, the MCX A series runs the same Cortex-M33 as the rest of the family but with more limited functionality to keep costs down, including clock speeds of 48 to 96 MHz. It comes with a single-pin power supply, built-in timers, and reduced pin count for "cost-constrained" devices.

The MCX A fits inside the same package as the MCX N, allowing customers that require more performance, additional memory, or stronger security measures to use the MCX N as a drop-in replacement.

The MCX N also stands out by implementing a secure, immutable subsystem called EdgeLock, said NXP. The so-called secure enclave supports the "root of trust" of the device to carry out a secure boot, advanced key management, device attestation, and trust provisioning to stymie all sorts of attacks on IoT devices.

As noted, the MCX portfolio will be supported by the MCUXpresso tool and software suite—the same used by its Kinetis and other 32-bit MCUs. This allows developers to reuse large swathes of software from one device to the next, instead of starting over from scratch when designing a new product or upgrading the underlying hardware.

The breadth of the MCX portfolio allows engineers to choose MCUs that best fit the needs of their design. NXP said maximizing software reuse frees them up to invest in differentiating aspects of their application.

"L" for Low-Power, "W" for Wireless

The MCX L, based on a Cortex-M33 MCU clocked at 50 to 100 MHz with an optional 50% boost mode, is intended to minimize power consumption as much as possible in edge IoT devices.

The MCX L operates on subthreshold voltages and uses technologies such as dynamic voltage adjustment and body biasing to cut both dynamic (active) and standby (leakage) power consumption.

NXP said the MCX L series will help prolong battery life in industrial and consumer IoT systems and other edge devices where battery power is a valuable commodity.

The MCX “W,” with clock speeds of 32 to 150 MHz, promises to add wireless connectivity to IoT devices with support for Bluetooth LE 5.2 as well as a high degree of on-chip integration that reduces BOM costs.

The first MCUs in the MCX portfolio will start sampling by the end of this year, with volume production slated for 2023.

The company declined to discuss pricing.


About the Author

James Morra

Senior Editor

James Morra is the senior editor for Electronic Design, covering the semiconductor industry and new technology trends, with a focus on power electronics and power management. He also reports on the business behind electrical engineering, including the electronics supply chain. He joined Electronic Design in 2015 and is based in Chicago, Illinois.