Liquid Cooling Dials Down AI Supercomputer Heat

Two things stand out about the Supermicro computers used to build the xAI Colossus for X: fiber optics and liquid cooling. Fiber optics has been around for a long time and is already used regularly in data centers to move data outside of the rack or wherever very high bandwidth is needed.

Liquid cooling isn't new. What is novel, though, is using it in large-scale systems, which is where xAI stands out. The system incorporates thousands of very powerful GPUs to handle massive machine-learning (ML) workloads.

Each liquid-cooled rack has eight 4U servers. Each server contains eight NVIDIA H100 boards. The H100 was introduced in 2022 and incorporates HBM3 memory. The xAI rack cools the system using the Supermicro Coolant Distribution Unit (CDU).

Different Types of Liquid Cooling

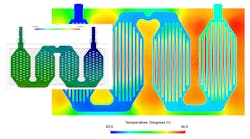

The CDU uses direct-to-chip (D2C) liquid cooling, which keeps the liquid contained within a system of pipes and tubes. Heat is exchanged at the source through a cold plate. Immersion cooling, by contrast, keeps the electronics in a bath of cooling fluid, which requires either specially designed boards or a fluid that won't react with the electronics (see figure).
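To get a feel for what a D2C loop has to carry, the required coolant flow can be estimated from the steady-state energy balance Q = m_dot * cp * dT. The sketch below applies it to the GPUs in one rack (eight servers of eight GPUs each, per the article). Everything else is an assumption for illustration and not taken from the article: 700 W per GPU (the H100 SXM's maximum rated power), water-like coolant properties, and a 10 K coolant temperature rise. It ignores CPUs, memory, networking, and power-conversion losses.

```python
def coolant_flow_lpm(heat_w, delta_t_k, cp_j_per_kg_k=4186.0, density_kg_per_l=1.0):
    """Coolant flow (liters/minute) needed to absorb heat_w watts with a
    delta_t_k temperature rise, from Q = m_dot * cp * dT at steady state.

    Defaults approximate plain water; a water-glycol mix would differ.
    """
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_s = heat_w / (cp_j_per_kg_k * delta_t_k)
    return mass_flow_kg_s / density_kg_per_l * 60.0


# From the article: 8 servers per rack, 8 GPUs per server.
GPUS_PER_RACK = 8 * 8

# Assumptions (not from the article).
ASSUMED_GPU_WATTS = 700.0   # H100 SXM maximum rated power
ASSUMED_DELTA_T_K = 10.0    # coolant temperature rise across the rack

rack_gpu_heat_w = GPUS_PER_RACK * ASSUMED_GPU_WATTS
flow = coolant_flow_lpm(rack_gpu_heat_w, ASSUMED_DELTA_T_K)

print(f"GPU heat per rack: {rack_gpu_heat_w / 1000:.1f} kW")
print(f"Estimated coolant flow: {flow:.1f} L/min")
```

Under these assumptions, the GPUs alone account for roughly 45 kW per rack, which works out to a flow on the order of 64 L/min. Halving the allowed temperature rise doubles the required flow.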

Liquid Cooling and Superclusters

The xAI Colossus supercluster has racks arranged in groups of eight, which translates to 512 GPGPUs per group (8 racks × 8 servers × 8 GPGPUs). All of the GPGPUs are connected into one logical system using NVIDIA's NVLink interface on each GPGPU plus a set of NVSwitches like those introduced in the NVIDIA DGX-2.
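The arithmetic behind these figures is easy to check. The short sketch below uses only numbers stated in the article (eight GPUs per server, eight servers per rack, eight racks per group, and the 100,000-GPU total reported for the build). The rounded-up rack and group counts are a back-of-the-envelope estimate, not a published inventory.

```python
import math

# Figures stated in the article.
GPUS_PER_SERVER = 8
SERVERS_PER_RACK = 8
RACKS_PER_GROUP = 8
TOTAL_GPUS = 100_000

gpus_per_rack = GPUS_PER_SERVER * SERVERS_PER_RACK
gpus_per_group = gpus_per_rack * RACKS_PER_GROUP

# Round up: a partially filled rack or group still has to exist.
racks_needed = math.ceil(TOTAL_GPUS / gpus_per_rack)
groups_needed = math.ceil(TOTAL_GPUS / gpus_per_group)

print(f"GPUs per rack:  {gpus_per_rack}")
print(f"GPUs per group: {gpus_per_group}")
print(f"Racks needed for {TOTAL_GPUS:,} GPUs:  {racks_needed}")
print(f"Groups needed for {TOTAL_GPUS:,} GPUs: {groups_needed}")
```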

As noted, it's the scale of the implementation that makes the xAI Colossus so impressive. It runs the fiber-optic cabling above and the cooling system below. The individual liquid tubing is connected via a heat exchanger to a massive pipe system. This is used to move the liquid outside, where the heat from the system can be dissipated. The video below provides a tour of the system.

Inside the World's Largest AI Supercluster xAI Colossus

The individual systems within the supercluster are designed for hot swapping. This allows for upgrades as well as replacement of broken systems. Quick-connect tubing lets a system be detached from the cooling infrastructure, while its electrical contacts make it possible to extract the system from the compute environment.

As with its power supplies, the system employs redundant CDUs, including the integrated pumps. This allows cooling components to be swapped out in a modular fashion, just like the systems and power supplies.

Another impressive item of note is how quickly the system was built: It took 122 days to put together 100,000 NVIDIA H100 GPGPUs. That may seem like a lot of time, but most supercomputer installations have taken much longer to put together, in part because of the debugging challenges that come with such a complex system.
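To put that pace in perspective, the average installation rate implied by these numbers can be worked out directly. The sketch below uses the article's 100,000 GPUs, 122 days, and 64 GPUs per rack. Spreading the work evenly across all 122 days is a simplifying assumption, since a real build would ramp up and include testing time.

```python
# Figures stated in the article.
TOTAL_GPUS = 100_000
BUILD_DAYS = 122
GPUS_PER_RACK = 8 * 8  # eight servers per rack, eight GPUs per server

# Simplifying assumption: work is spread evenly over every day of the build.
gpus_per_day = TOTAL_GPUS / BUILD_DAYS
racks_per_day = gpus_per_day / GPUS_PER_RACK

print(f"Average GPUs brought up per day:  {gpus_per_day:.0f}")
print(f"Average racks brought up per day: {racks_per_day:.1f}")
```

That averages out to roughly 820 GPUs, or close to 13 fully populated racks, every day of the build.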

About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF and I work with a great team of editors to provide engineers, programmers, developers and technical managers with interesting and useful articles and videos on a regular basis. Check out our free newsletters to see the latest content.

You can send press releases for new products for possible coverage on the website. I am also interested in receiving contributed articles for publishing on our website. Use our template and send to me along with a signed release form.

Check out my blog, AltEmbedded on Electronic Design, as well as my latest articles on this site.

I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a Master's in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK. I do a bit of PHP programming for Drupal websites. I have posted a few Drupal modules.

I still get hands-on with software and electronic hardware. Some of this can be found in our Kit Close-Up video series. You can also see me in many of our TechXchange Talk videos. I am interested in a range of projects from robotics to artificial intelligence.