Programs mixed or aired live today are generally produced in a real-time, or “linear,” workflow, just as they have been for decades. Microphone signals, recorded music or replays, and other audio sources feed an audio console. There, an operator controls each source’s level, adds equalization or compression, and monitors the overall program to ensure it maintains a specified loudness level. The operator (an Audio Mixer, or “A1” in TV productions) fades microphones in or out of the overall mix to match the on-screen images from cameras switched by the Technical Director (TD). Both listen to the main Director, who instructs the camera operators, TD, and A1 on the visual and audio elements needed to tell the program’s “story.”
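The console operations described above (fader gain, fading a microphone in or out, and watching the program level) can be illustrated with a small sketch. It assumes floating-point samples normalized to ±1.0 full scale, and it measures a plain ungated RMS level in dBFS as a stand-in for loudness; a real loudness meter following ITU-R BS.1770 adds K-weighting filters and gating, which this sketch deliberately omits. All function names and the test signals are hypothetical.

```python
import math


def db_to_gain(db: float) -> float:
    """Convert a fader setting in dB to a linear amplitude factor."""
    return 10.0 ** (db / 20.0)


def fade(samples: list[float], start_gain: float, end_gain: float) -> list[float]:
    """Apply a linear gain ramp, as when an operator fades a microphone in or out."""
    n = len(samples)
    if n < 2:
        return [s * end_gain for s in samples]
    step = (end_gain - start_gain) / (n - 1)
    return [s * (start_gain + step * i) for i, s in enumerate(samples)]


def mix(channels: list[list[float]], fader_db: list[float]) -> list[float]:
    """Sum equal-length channels after applying each channel's fader gain."""
    gains = [db_to_gain(db) for db in fader_db]
    # zip(*channels) yields one tuple per sample instant, one value per channel.
    return [sum(g * x for g, x in zip(gains, frame)) for frame in zip(*channels)]


def rms_dbfs(samples: list[float]) -> float:
    """Ungated RMS level in dB relative to digital full scale (1.0)."""
    mean_square = sum(s * s for s in samples) / len(samples)
    return 10.0 * math.log10(mean_square) if mean_square > 0 else float("-inf")


if __name__ == "__main__":
    rate = 48_000
    # Hypothetical sources: a 1 kHz tone standing in for a host mic, a 220 Hz "music bed".
    mic = [0.5 * math.sin(2 * math.pi * 1000 * n / rate) for n in range(rate)]
    music = [0.5 * math.sin(2 * math.pi * 220 * n / rate) for n in range(rate)]
    # Fade the mic in over one second while the music sits 12 dB down.
    program = mix([fade(mic, 0.0, 1.0), music], fader_db=[0.0, -12.0])
    print(f"program level: {rms_dbfs(program):.1f} dBFS")
```

In practice the operator adjusts faders continuously while watching a meter; the sketch simply freezes one moment of that loop.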

In a classic studio production, this setup might involve three cameras: one for a long shot and two for close-ups of the actors. Sports broadcasts may use 10 or more cameras to switch quickly from one viewpoint to another, and the Producer also communicates with the Director, A1, and replay operator to coordinate the overall flow of the program.

The combined audio and video program for a remote broadcast is then encoded (perhaps with MPEG-2 video and MPEG-1 Layer II or Dolby E audio) for transmission to a network’s operations center or master control room. For a recorded program, intra-frame video codecs such as Apple ProRes 422 or Avid DNxHD are typically used to reduce the recorded video bit rate, while the 8 or 16 channels of audio are recorded uncompressed. Within a facility, real-time signals are carried as uncompressed video, with 16 channels of embedded audio, on a single cable using the 1.5-Gb/s HD-SDI standard. As the industry transitions to 4K video, the HD-SDI infrastructure will likely be replaced gradually by IP transmission over 20- or 40-Gb/s Ethernet.
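A quick bit-rate calculation shows why 16 uncompressed audio channels fit comfortably on an HD-SDI link while 4K video does not. The sketch below assumes 48-kHz, 24-bit PCM audio and 10-bit 4:2:2 video (20 bits per pixel on average), counts only the active picture, and uses 30 frames/s for HD and an assumed 60 frames/s for 4K. The 1.485-Gb/s figure is the nominal HD-SDI serial rate, which also includes blanking intervals.

```python
HD_SDI_LINE_RATE_BPS = 1.485e9  # nominal HD-SDI serial rate, including blanking


def pcm_audio_bps(channels: int, sample_rate_hz: int = 48_000, bits_per_sample: int = 24) -> int:
    """Bit rate of uncompressed PCM audio."""
    return channels * sample_rate_hz * bits_per_sample


def active_video_bps(width: int, height: int, frames_per_s: int, bits_per_pixel: int = 20) -> int:
    """Active-picture bit rate of uncompressed video.

    The default of 20 bits/pixel assumes 10-bit 4:2:2 sampling: one luma sample
    plus, on average, one chroma sample (Cb or Cr) per pixel.
    """
    return width * height * frames_per_s * bits_per_pixel


if __name__ == "__main__":
    signals = {
        "16 ch PCM audio": pcm_audio_bps(16),
        "1080-line HD video, 30 frames/s": active_video_bps(1920, 1080, 30),
        "4K UHD video, 60 frames/s (assumed)": active_video_bps(3840, 2160, 60),
    }
    for name, bps in signals.items():
        share = bps / HD_SDI_LINE_RATE_BPS
        print(f"{name}: {bps / 1e9:.3f} Gb/s ({share:.1%} of HD-SDI)")
```

Under these assumptions the 16 audio channels need about 18.4 Mb/s, roughly 1% of the link, whereas 4K video at 60 frames/s needs about 10 Gb/s, several times what a single HD-SDI cable carries.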

In contrast, film-style programming (e.g., feature films and TV dramas) is typically shot with a single camera. Close-ups and changes in viewpoint are usually captured by repeating the action with the camera repositioned. Sound is recorded with radio-pack (wireless) microphones or an overhead boom microphone.

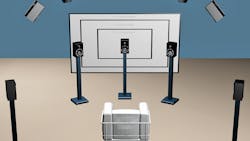

The camera takes are then assembled in a video editor, such as Avid Media Composer or Adobe Premiere Pro, to make an initial cut, and the recorded audio is edited to match this cut in an audio workstation such as Pro Tools. Sound effects and music are added at this stage, and poorly recorded dialogue may be replaced. Editing may go through several iterations, each requiring the audio to be conformed to the new picture (video) edits. Audio and video are then combined into a Digital Cinema Package (DCP), following the Digital Cinema Initiatives (DCI) specification, for theatrical distribution on hard drives. For Blu-ray release or broadcast, the audio is usually remixed into a “near-field” mix suited to reproduction in a viewer’s home, with less dynamic range and less reverberation than the cinema mix.
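Conforming audio to a revised picture edit comes down to timecode arithmetic: every frame removed from or added to the picture shifts the audio that follows it. The sketch below handles only the simplest case, a ripple delete of a picture span, and assumes 24 frames/s non-drop-frame timecode with 48-kHz audio (exactly 2,000 samples per frame). The `Region` type and function names are hypothetical; in practice, conforming is driven by edit decision lists or AAF exchange between the video and audio workstations rather than hand-written code.

```python
from dataclasses import dataclass

SAMPLE_RATE_HZ = 48_000
FPS = 24  # assumption: 24 frame/s non-drop-frame picture


def timecode_to_frames(tc: str, fps: int = FPS) -> int:
    """Convert HH:MM:SS:FF non-drop-frame timecode to a frame count."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


def frames_to_samples(frames: int, fps: int = FPS, rate: int = SAMPLE_RATE_HZ) -> int:
    """Audio sample offset of a frame boundary (exact when rate is a multiple of fps)."""
    return frames * rate // fps


@dataclass
class Region:
    name: str
    start: int  # timeline position in samples (inclusive)
    end: int    # timeline position in samples (exclusive)


def conform_removal(regions: list[Region], cut_start: int, cut_end: int) -> list[Region]:
    """Ripple-delete the picture span [cut_start, cut_end) from an audio timeline.

    Material after the cut moves earlier by the cut length; material inside the
    cut is trimmed away, and regions left with zero length are dropped.
    """
    removed = cut_end - cut_start

    def remap(pos: int) -> int:
        if pos <= cut_start:
            return pos
        if pos >= cut_end:
            return pos - removed
        return cut_start  # positions inside the deleted span collapse to the cut point

    conformed = []
    for r in regions:
        start, end = remap(r.start), remap(r.end)
        if end > start:
            conformed.append(Region(r.name, start, end))
    return conformed


if __name__ == "__main__":
    # Hypothetical picture edit: the editor removes 12 frames starting at 00:00:10:00.
    cut_in = frames_to_samples(timecode_to_frames("00:00:10:00"))
    cut_out = cut_in + frames_to_samples(12)
    tracks = [Region("dialogue", 0, 600_000), Region("door slam", 900_000, 950_000)]
    for region in conform_removal(tracks, cut_in, cut_out):
        print(region)
```

Inserted frames, slipped shots, and reordered scenes each need their own remapping rule, which is why each editing iteration can mean substantial conform work.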

For TV broadcast, live and pre-recorded programming is combined in the network’s operations center and encoded for transmission to local affiliate stations, which insert local commercials. The affiliate’s signal is then sent to a cable or satellite operator’s headend, where additional commercials may be inserted or replaced, and on to the consumer’s set-top box. Free-to-air signals from local stations may, of course, also be received directly by the TV. Emerging over-the-top (OTT) distributors such as Netflix and Apple use Dynamic Adaptive Streaming over HTTP (DASH) or HTTP Live Streaming (HLS) over IP networks to deliver programs directly to digital media adapters, game consoles, or smart TVs.
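In both DASH and HLS, the program is encoded at several bit rates and split into short segments listed in a manifest (an MPD for DASH, an M3U8 playlist for HLS), and the client decides segment by segment which rendition to request. The sketch below shows one common throughput-based heuristic, not the algorithm of any particular player or distributor: estimate throughput from recent downloads, then request the highest rendition that fits under a safety margin. The bit-rate ladder, the 0.8 safety factor, and the function names are hypothetical.

```python
from statistics import harmonic_mean

# Hypothetical bit-rate ladder (kb/s), as a manifest might advertise it.
LADDER_KBPS = (400, 1_200, 2_500, 5_000, 8_000)


def estimate_throughput_kbps(downloads: list[tuple[int, float]]) -> float:
    """Estimate throughput from recent segment downloads given as (bits, seconds).

    The harmonic mean is dominated by the slowest downloads, so a single fast
    segment does not push the estimate up too far.
    """
    return harmonic_mean([bits / seconds / 1000 for bits, seconds in downloads])


def choose_rendition(
    throughput_kbps: float,
    ladder: tuple[int, ...] = LADDER_KBPS,
    safety: float = 0.8,
) -> int:
    """Pick the highest-bit-rate rendition that fits within a safety margin of the estimate."""
    budget = throughput_kbps * safety
    fitting = [rate for rate in sorted(ladder) if rate <= budget]
    # If nothing fits, fall back to the lowest rendition rather than stalling.
    return fitting[-1] if fitting else min(ladder)


if __name__ == "__main__":
    recent = [(8_000_000, 2.0), (8_000_000, 4.0), (10_000_000, 2.0)]
    estimate = estimate_throughput_kbps(recent)
    print(f"estimated {estimate:.0f} kb/s -> request {choose_rendition(estimate)} kb/s rendition")
```

Production players typically also weigh buffer occupancy and limit how often they switch, trading peak quality for fewer visible quality changes.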

About the Author

Robert Bleidt

Division General Manager

Robert Bleidt, Division General Manager of Fraunhofer USA Digital Media Technologies, is the inventor of the award-winning Sonnox/Fraunhofer codec plug-in, widely used in music mastering. He led the extension of Fraunhofer’s codec business to an open-source model with its inclusion in Android, and developed Fraunhofer’s Symphoria automotive audio business.

Before joining Fraunhofer, he was president of Streamcrest Associates, a product and business strategy consulting firm in new media technologies. Previously, he was Director of Product Management and Business Strategy for the MPEG-4 business of Philips Digital Networks and managed the development of Philips’ Emmy-winning asset management system for television broadcasting.

Prior to joining Philips, Mr. Bleidt served as Director of Marketing and New Business Development for Sarnoff Real Time Corp., a video-on-demand venture of Sarnoff Labs. Before that, he was Director of Mass Storage Technology and inventor of SRTC's Carousel algorithm.