While PCI Express (PCIe) and Ethernet have coexisted for years—PCIe as a chip-to-chip interconnect and Ethernet as a system-to-system technology—the two technologies generally have assumed distinct roles in data centers and cloud computing. At the same time, however, PCIe has methodically encroached on the types of designs that were once the sole domain of Ethernet.

This trend poses both a challenge and an opportunity for designers choosing between the two for a specific design, in no small part because the technologies differ greatly in their components, supporting software, and power requirements. This “What’s the Difference” article compares the hardware costs, software costs, and power consumption that PCIe and Ethernet each exact.

This file type includes high resolution graphics and schematics when applicable.

Current Architecture

Let’s look at the current architecture to find out how differently Ethernet and PCIe are being used.

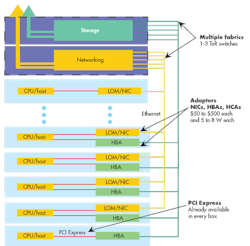

Traditional systems currently deployed in volume have several interconnect technologies that need support, such as Fibre Channel and Ethernet (Fig. 1). What’s evident is that PCIe is native to almost all of the CPUs (represented by the red lines). Native PCIe from the CPU is converted into Ethernet (represented by the yellow lines) by the network interface card (NIC) and LAN-on-motherboard (LOM) adapter cards. PCIe is already present on the servers in the rack, while Ethernet can be found in the server-to-server connection within a rack, in addition to rack-to-rack connections at the top of rack (ToR).

Using adapters to convert PCIe into Ethernet has multiple shortcomings:

- The existence of multiple I/O interconnect technologies

- Low utilization rates of I/O endpoints

- High power and cost of the system, due to the need for multiple I/O endpoints

- Fixed I/O at the time of architecture and build, thus no flexibility to change later

- Management software handling multiple I/O protocols with overhead

Because this architecture employs multiple I/O interconnect technologies, it increases latency, cost, board space, design complexity, and power demands. Furthermore, system developers and users end up paying for endpoints that sit under-utilized. The increased latency stems from the need to convert the PCIe interface native to the processors into multiple other protocols. That conversion is unnecessary: designers can reduce system latency by using the PCIe already native on the processors and converging all endpoints on PCIe.

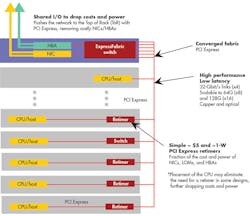

New Architecture

Sharing I/O endpoints is the most obvious solution to the shortcomings of the older architecture (Fig. 2). System users could replace the adapter cards and use PCIe within the rack for server-to-server connections. Ethernet stays in the ToR for rack-to-rack connections, while PCIe (already native in the CPU) takes over the server-to-server links. This helps designers lower cost and power, improve performance and endpoint utilization, and simplify the design.

Several advantages emerge with a shared I/O architecture:

- As I/O speeds increase, the only additional investment needed is to change the I/O adapter cards. In earlier deployments, when multiple I/O technologies existed on the same card, designers would have to re-design the entire system. With the shared-I/O model, upgrading to a particular I/O technology simply involves replacing an existing card with a new one.

- Since multiple I/O endpoints needn’t exist on the same cards, designers can either manufacture smaller cards to further reduce cost and power, or choose to retain the existing form factor and differentiate their products by adding multiple CPUs, memory, and/or other endpoints in the space saved by eliminating multiple I/O endpoints from the card.

- Designers can reduce the number of cables that crisscross a system. Multiple interconnect technologies require different (and multiple) cables to carry each protocol’s bandwidth and overhead. Simplifying the design and narrowing the range of I/O interconnect technologies reduces the number of cables the system needs to function properly, significantly diminishing design complexity and saving cost.

In Figure 2, the key enabler is the implementation of shared I/O in a PCIe switch. Single-Root I/O Virtualization (SR-IOV) technology adds I/O virtualization to the hardware to improve performance, and makes use of hardware-based security and quality-of-service features in a single physical server. SR-IOV also allows multiple guest operating systems (OSs) running on the same server to share an I/O device. Most often, an embedded system has complete control over the OS, which makes this sharing easier to implement.
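As a rough illustration of what sharing one device among several guests looks like from the host side, the sketch below enables SR-IOV virtual functions through the `sriov_totalvfs` and `sriov_numvfs` sysfs attributes that Linux exposes for SR-IOV-capable PCIe devices. It assumes a Linux host, which the article does not specify; the function name and the device address in the comments are hypothetical, and this is not a description of any particular vendor's switch software.

```python
from pathlib import Path


def enable_virtual_functions(device_dir: Path, requested: int) -> int:
    """Enable up to `requested` SR-IOV virtual functions on one PCIe device.

    `device_dir` is the device's sysfs directory, for example
    /sys/bus/pci/devices/0000:03:00.0 (a placeholder address).
    Returns the virtual-function count written to the kernel.
    """
    total_path = device_dir / "sriov_totalvfs"
    num_path = device_dir / "sriov_numvfs"

    if not total_path.exists():
        raise RuntimeError(f"{device_dir} does not expose SR-IOV attributes")

    # Never ask for more virtual functions than the hardware advertises.
    supported = int(total_path.read_text().strip())
    count = max(0, min(requested, supported))

    # The kernel refuses to change a nonzero VF count directly,
    # so reset to 0 before writing the new value.
    if int(num_path.read_text().strip()) != 0:
        num_path.write_text("0")
    num_path.write_text(str(count))
    return count
```

On a real host this needs root privileges, and each virtual function then appears as its own PCIe function that can be assigned to a guest OS.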

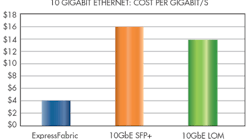

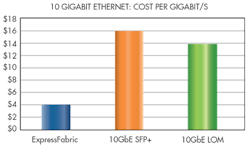

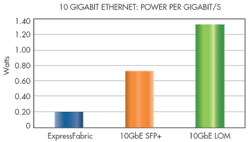

The cost and power-draw comparisons between PCIe and 10 Gigabit Ethernet present stark contrasts (Figs. 3 and 4). Price estimates are based on a broad industry survey, and assume pricing will vary according to volume, availability, and vendor relationships with regard to ToR switches and the adapters. These tables provide a framework for understanding the cost and power savings gained by using PCIe for I/O sharing, principally through the elimination of adapters.
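The comparison reduces to simple per-rack arithmetic: one switch plus one adapter per server, with the adapter term shrinking or disappearing in the shared-I/O case. The sketch below captures that structure only. The class, the function, and every number in the usage comment are hypothetical placeholders, not values from the article's figures; substitute real quotes and datasheet power ratings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RackOption:
    """Per-rack bill of materials for one interconnect choice."""
    name: str
    switch_cost_usd: float
    switch_power_w: float
    adapter_cost_usd: float  # per-server adapter; 0 if none is needed
    adapter_power_w: float   # per-server adapter power; 0 if none

    def total_cost(self, servers: int) -> float:
        # One switch per rack plus one adapter per server.
        return self.switch_cost_usd + servers * self.adapter_cost_usd

    def total_power(self, servers: int) -> float:
        return self.switch_power_w + servers * self.adapter_power_w


def savings(baseline: RackOption, candidate: RackOption, servers: int):
    """Return (cost saved in USD, power saved in W) of candidate vs. baseline."""
    return (
        baseline.total_cost(servers) - candidate.total_cost(servers),
        baseline.total_power(servers) - candidate.total_power(servers),
    )


# Usage with PLACEHOLDER inputs (illustrative only, not survey data):
# ethernet = RackOption("10GbE", 5000.0, 150.0, 300.0, 8.0)
# pcie = RackOption("PCIe", 4000.0, 100.0, 0.0, 0.0)
# savings(ethernet, pcie, servers=20)
```

Because the adapter terms scale with the server count, the gap between the two options widens as the rack fills, which is the effect the article attributes to eliminating adapters.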

Summary

A number of factors emerge when viewing the commonalities of and distinctions between PCIe and Ethernet. PCIe is native on an increasing number of processors from major vendors. Embedded designers can benefit from lower latency by eliminating the need for components between a CPU and a PCIe switch. With this new generation of CPUs, those designers can place a PCIe switch directly off the CPU, thereby reducing latency and component cost.


To satisfy the requirements in the shared-I/O and clustering market segments, vendors such as Avago Technologies offer high-performance, flexible, and power- and space-efficient PCIe solutions. ExpressFabric, for example, helps designers realize the full potential of PCIe by applying the interconnect in a cost- and power-efficient system fabric. Looking forward, PCIe Gen4, with signaling rates reaching 16 GT/s per lane, will help to further expand the adoption of PCIe technology while making it easier and more economical to design and use.
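To put the Gen4 figure in perspective, the sketch below converts a per-lane signaling rate into usable bandwidth. It relies on two facts from the PCIe specification rather than from this article: Gen4 signals at 16 GT/s per lane, and Gen3 and Gen4 both use 128b/130b line encoding. The function name is hypothetical, and the result ignores packet and protocol overhead, so treat it as an upper bound.

```python
def pcie_bandwidth_gbytes(gt_per_s: float, lanes: int,
                          payload_bits: int = 128,
                          encoded_bits: int = 130) -> float:
    """Upper-bound bandwidth in GB/s, one direction, after line encoding.

    Each transfer carries one bit per lane; 128b/130b encoding means
    128 of every 130 bits on the wire are payload.
    """
    usable_gbits = gt_per_s * lanes * payload_bits / encoded_bits
    return usable_gbits / 8  # bits to bytes


# A Gen4 x1 link: roughly 1.97 GB/s in each direction.
gen4_x1 = pcie_bandwidth_gbytes(16, lanes=1)

# A Gen4 x16 link: roughly 31.5 GB/s in each direction.
gen4_x16 = pcie_bandwidth_gbytes(16, lanes=16)
```

For comparison, a 10 Gigabit Ethernet port carries at most 1.25 GB/s in each direction, so a single Gen4 lane already exceeds it on raw bandwidth.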

PCIe-based sharing of I/O endpoints is expected to make a huge difference in designs that predominantly used Ethernet for interconnection. However, Ethernet and PCIe will continue to coexist, with Ethernet connecting systems to one another, and PCIe blossoming into its role within the rack.


Krishna Mallampati, senior product line manager for PCIe switches at Avago Technologies (www.avagotech.com), holds an MS from Florida International University and an MBA from Lehigh University. He can be reached at [email protected].