Propelled by the exploding demand for mobile products, the competition to provide the centerpiece of mobile devices—the system-on-chip (SoC) driving the entire mobile platform—is placing design teams under enormous pressure to implement ever-increasing functionality in ever-shorter periods of time. To fulfill this daunting task and accelerate time-to-market, more and more functionality is implemented in the embedded software rather than in the inherently less flexible and harder to change underlying SoC hardware.

The net result of this state of affairs is that system complexity, not only in hardware but even more so in software, is escalating. Hardware-software integration is now the fundamental challenge facing the development team.

Traditionally, the integration of hardware and software started late in the design cycle and was performed on the first engineering samples (ES) of devices delivered by the foundry. The later integration and the resolution of problems occur, the larger the impact on profits, as several research studies have shown.¹ This makes a strong case for integrating hardware and software as early as possible in the development cycle.
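The size of that profit impact can be sanity-checked with the triangular market-window model often used in embedded-systems textbooks. This is an assumption: the reference does not say which model Logic Automation used. In this model, revenue ramps linearly up to a peak at the midpoint W of a product life of 2W and back down to zero. A late entrant with delay D loses the fraction D(3W - D)/(2W^2) of the on-time revenue. The sketch below evaluates it for the footnote's scenario.

```python
def revenue_loss_fraction(delay_months: float, product_life_months: float) -> float:
    """Fraction of on-time revenue lost to a late market entry.

    Assumes the triangular market-window model: revenue ramps linearly up to a
    peak at the midpoint W of the product life (2W) and back down to zero, and
    the market still closes at 2W for a late entrant.
    """
    w = product_life_months / 2.0
    d = delay_months
    if not 0 <= d <= w:
        raise ValueError("model only holds for 0 <= delay <= half the product life")
    # On-time revenue is the full triangle, W^2 (up-slope normalized to 1).
    # Late revenue is the smaller triangle, (2W - D)(W - D) / 2.
    # Their difference divided by W^2 simplifies to D(3W - D) / (2W^2).
    return d * (3 * w - d) / (2 * w * w)


# One-quarter delay on a two-year product life, as in the footnote.
loss = revenue_loss_fraction(delay_months=3, product_life_months=24)
print(f"{loss:.1%}")  # 34.4%
```

Under this model, a three-month slip costs about 34% of the revenue, consistent with the "more than one-third" figure cited.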

The software team and the hardware team normally debug their respective portions of the SoC separately. Software is typically validated against high-level models of the design, while hardware is simulated at the register transfer level (RTL) with dedicated testbenches that check it against the hardware specification. Most problems are uncovered and fixed during these activities. However, validating that the full SoC conforms to the intent of its specification requires testing hardware and software together in a real-world environment. When this level of testing is done, new bugs invariably emerge.

Uncovering these bugs often takes days or weeks of real-time operation. For example, data may be written to an incorrect address because an interrupt is not being issued, causing the system to hang or a peripheral to skip an operation. Such a bug may not manifest itself until several billion cycles after the faulty event occurred. Consequently, the project time spent in full SoC validation to uncover these problems can exceed the upfront RTL and software development period, especially if the SoC must be re-spun in new silicon, which can take several months of layout, mask making, and fabrication.

Finding critical bugs in the interaction between the embedded software and the underlying hardware is like finding the proverbial needle in a haystack. Finding them quickly over billions or trillions of cycles of operation requires dedicated hardware debug tools and a rigorous tracing methodology.

Multi-Level SoC Debug

More and more project teams are realizing that the best way to identify and fix system-level problems is by using SoC emulation systems before first silicon is fabricated. Emulation systems provide a virtual transaction-based test environment to validate the SoC with real-world test scenarios with full visibility into the design for both hardware and software debug. From a project schedule perspective, emulation systems offer the flexibility to "virtually spin" the silicon and test fixes in hardware or software in hours, saving months of schedule for each major problem encountered.

The first challenge in debugging system-level problems is locating them, which can mean sorting through millions of lines of code and test data from billions or trillions of execution cycles. To handle this monumental task, the SoC must be verified at high speed, ideally above 1 MHz.

At emulation speeds of 2 to 3 MHz, 1 billion cycles take roughly 5 to 9 minutes to process. That is a long stretch compared with real silicon, which covers a billion cycles in about a second at 1 GHz, but it still allows a debug session to be completed within a day. Running real-world tests for a few days can yield hundreds of billions to trillions of cycles of operation. By contrast, RTL simulation, where most hardware bugs are found, commonly runs at tens of hertz or less.
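The throughput figures above are simple cycles-over-frequency arithmetic. The sketch below reproduces them; the 10-Hz simulator speed is an assumed value picked from the "tens of hertz or less" range in the text, and the function names are illustrative only.

```python
SECONDS_PER_DAY = 86_400


def wall_clock_seconds(cycles: float, clock_hz: float) -> float:
    """Wall-clock time needed to execute `cycles` design clock cycles at `clock_hz`."""
    return cycles / clock_hz


def cycles_per_day(clock_hz: float) -> float:
    """Design cycles executed in 24 hours of continuous running."""
    return clock_hz * SECONDS_PER_DAY


# One billion cycles on an emulator running at 2 MHz and at 3 MHz.
for hz in (2e6, 3e6):
    print(f"{hz / 1e6:.0f} MHz: {wall_clock_seconds(1e9, hz) / 60:.1f} min")

# The same billion cycles in an RTL simulator assumed to run at 10 Hz.
years = wall_clock_seconds(1e9, 10) / (SECONDS_PER_DAY * 365)
print(f"10 Hz: {years:.1f} years")

# Three days of continuous emulation at 3 MHz.
print(f"3 days @ 3 MHz: {3 * cycles_per_day(3e6):.2e} cycles")
```

The loop prints 8.3 and 5.6 minutes, inside the range quoted above, while the assumed 10-Hz simulator would need about 3.2 years for the same billion cycles.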

Without a system-level debug methodology, a common mistake is to jump straight to low-level debug as if the emulation system were a simulator: capture the entire design state for every test cycle and debug by reviewing the resulting waveforms. Processing billions or trillions of cycles on designs of hundreds of millions of gates running millions of lines of embedded code would generate terabytes of such low-level data.
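A back-of-the-envelope estimate shows why full-state capture explodes. The sketch assumes one sample per signal per cycle and no compression; the 1-million-signal count is a hypothetical figure, and a design of hundreds of millions of gates can hold far more state, so this is an order-of-magnitude illustration only.

```python
def raw_trace_bytes(signals: int, cycles: int, bits_per_signal: int = 1) -> int:
    """Uncompressed size of a full-visibility trace: one sample per signal per cycle."""
    total_bits = signals * cycles * bits_per_signal
    return total_bits // 8


# Hypothetical capture: 1 million single-bit signals over 1 billion cycles.
size = raw_trace_bytes(signals=1_000_000, cycles=1_000_000_000)
print(f"{size / 1e12:.0f} TB")  # 125 TB before any compression
```

Even this modest, hypothetical signal count yields 125 TB for a single billion-cycle run, before considering the trillions of cycles a multi-day test produces.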

Sorting through such data as waveforms at the lowest level of abstraction would consume days or even weeks of many engineers' precious time, and deciphering and tracing the problem may still prove impossible. Instead, debug should start at the highest possible level of abstraction and work its way down as the problem is isolated. This typically means starting with the embedded software tools and then working down into system-level hardware mechanisms. What the software developer perceives as a single step through driver source code may correspond to millions of cycles of hardware activity.

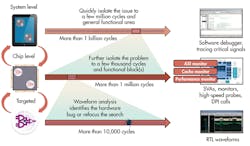

Starting from a database comprising billions of clock cycles, a software debugger can localize a problem to within a few million clock cycles. At this level, either the software team quickly identifies the source of the trouble in the software code, or it works with the hardware team using a software-aware hardware debug approach to zoom in to a lower level of abstraction.

Locating the problem at the software level circumscribes the neighborhood of the bug, but its hardware cause could still lie anywhere within a range of a few million cycles. Typical questions addressed at this level include:

- Has the operating system booted yet?

- Is the processor stuck in an interrupt handler?

- Why is the device driver not handling the data properly?
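The second question in the list can often be answered from a sampled program-counter trace plus the firmware's symbol table, without opening a single waveform. The sketch below is hypothetical: it assumes a trace of (cycle, pc) samples and a symbol table of (start, end, name) address ranges, such as could be extracted from the firmware image, and reports the longest contiguous stretch spent inside one function.

```python
from bisect import bisect_right
from typing import Iterable, Optional

# Hypothetical symbol-table entry: (start_address, end_address_exclusive, name).
Symbol = tuple[int, int, str]


def resolve(pc: int, symbols: list[Symbol], starts: list[int]) -> Optional[str]:
    """Map a program-counter value to the function whose range contains it.

    `symbols` must be sorted by start address; `starts` holds those start addresses.
    """
    i = bisect_right(starts, pc) - 1
    if i >= 0 and symbols[i][0] <= pc < symbols[i][1]:
        return symbols[i][2]
    return None  # PC outside every known function


def longest_stay(trace: Iterable[tuple[int, int]],
                 symbols: list[Symbol]) -> tuple[Optional[str], int, int]:
    """Return (function, first_cycle, last_cycle) for the longest run of
    consecutive (cycle, pc) samples that resolve to the same function."""
    starts = [s[0] for s in symbols]
    best: tuple[Optional[str], int, int] = (None, 0, -1)
    current: Optional[tuple[Optional[str], int, int]] = None
    for cycle, pc in trace:
        fn = resolve(pc, symbols, starts)
        if current is not None and current[0] == fn:
            current = (fn, current[1], cycle)  # extend the current run
        else:
            current = (fn, cycle, cycle)       # start a new run
        if current[2] - current[1] > best[2] - best[1]:
            best = current
    return best


# Hypothetical firmware layout and a sparse PC trace from a long run.
symbols = [(0x1000, 0x1100, "main_loop"), (0x2000, 0x2040, "irq_handler")]
trace = [(0, 0x1004), (1_000, 0x1010), (2_000, 0x2000),
         (3_000, 0x2010), (4_000_000, 0x2020)]
print(longest_stay(trace, symbols))  # ('irq_handler', 2000, 4000000)
```

The result points at a suspiciously long stay in the interrupt handler between cycles 2,000 and 4,000,000. With sparse samples this is only a lead, but it already shrinks the search from the whole run to a few million cycles.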

Even a few million cycles pose a challenge if hardware debug relies purely on waveform analysis, and a window of billions of cycles makes waveform analysis virtually useless. Instead, monitors, checkers, and assertions such as SystemVerilog Assertions (SVAs) can dramatically accelerate zooming in on the problem.
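A checker of this kind can be as simple as a bounded-latency rule. In SVA it might be written along the lines of `assert property (@(posedge clk) irq_req |-> ##[1:MAX] irq_ack);`. The Python sketch below applies the same rule to hypothetical lists of request and acknowledge event cycles and reports the first request that goes unanswered. The signal names, event format, and latency bound are all assumptions for illustration.

```python
from bisect import bisect_left
from typing import Optional, Sequence


def first_unacked_request(req_cycles: Sequence[int], ack_cycles: Sequence[int],
                          max_latency: int) -> Optional[tuple[int, int]]:
    """Check that every request event is followed by an acknowledge within
    1..max_latency cycles (the rule `req |-> ##[1:max_latency] ack`).

    Returns the (start, end) cycle window of the first failing request,
    or None if every request is acknowledged in time.
    """
    acks = sorted(ack_cycles)
    for req in sorted(req_cycles):
        # Earliest acknowledge strictly after the request cycle.
        i = bisect_left(acks, req + 1)
        if i == len(acks) or acks[i] > req + max_latency:
            return (req, req + max_latency)
    return None


# Hypothetical event lists extracted from a long emulation run.
requests = [100, 5_000, 2_000_000_000]
acks = [103, 5_010]
print(first_unacked_request(requests, acks, max_latency=1_000))
# (2000000000, 2000001000)
```

The checker scans billions of cycles' worth of events cheaply and returns a window only 1,000 cycles wide, which is the kind of narrowing the text describes.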

This debug technology confines the context that triggers the bug to a few thousand cycles. At that point, the engineer should know where and when to look, and can avoid analyzing an overwhelming amount of data. Once there is a strong suspicion of where the bug lies, waveform analysis is appropriate for the final proof (see the figure). Multi-level debug gives the engineering team a way to move systematically between levels of abstraction and quickly home in on a bug.
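Once a checker has bracketed the failure, the final waveform step needs full visibility only over that small window. The sketch below assumes a hypothetical setup in which the run saved state checkpoints at regular intervals and the emulator can restart deterministically from any of them. It picks the latest checkpoint at or before the window and reports how many cycles must be replayed to regenerate waveforms for it.

```python
from bisect import bisect_right
from typing import Sequence


def replay_plan(checkpoints: Sequence[int], window_start: int,
                window_end: int) -> tuple[int, int]:
    """Choose where to restart a deterministic replay.

    The window of interest is the half-open cycle range [window_start, window_end).
    Returns (restart_cycle, cycles_to_replay): the latest checkpoint at or
    before window_start, and the number of cycles to re-execute from it
    to reach the end of the window.
    """
    if window_end <= window_start:
        raise ValueError("window_end must be greater than window_start")
    cps = sorted(checkpoints)
    i = bisect_right(cps, window_start) - 1
    if i < 0:
        raise ValueError("no checkpoint precedes the window; replay from reset")
    restart = cps[i]
    return restart, window_end - restart


# Hypothetical run: a checkpoint every 100 million cycles over 3 billion cycles,
# and a checker that bracketed the failure to a 1,500-cycle window.
checkpoints = range(0, 3_000_000_001, 100_000_000)
print(replay_plan(checkpoints, 1_999_999_500, 2_000_001_000))
# (1900000000, 100001000)
```

At 3 MHz, replaying about 100 million cycles takes roughly half a minute, and full waveform capture is needed only for the final 1,500 cycles.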

Emulation To The Rescue

Project schedules on complex SoCs are so tight that these system-level problems must be identified before first silicon is fabricated. Emulation has proven to be invaluable for the type of multi-level SoC debug that is required.

While hardware designers use hardware description language (HDL) simulators to verify the SoC hardware, software developers use either engineering samples or FPGA prototypes to test their embedded software: two rather different design environments. Emulation platforms can serve both parts of the engineering team across the board, letting them share the same system and design representations. With combined software and hardware views of the SoC design, the two teams can work together to debug hardware/software interactions.

Most importantly, best-in-class emulation platforms support a multi-level debug methodology via the following capabilities.

• Fast runtimes: Typically running at several megahertz, they can execute the billions of verification cycles that embedded designs require in a short period of time, without constraints on design size.

• Transaction-based verification: They support transaction-level, system-level verification at the accelerated speeds needed for high-level debug via monitors, checkers, and assertions.

• 100% visibility: Total visibility into the design is available in the specific time window you need, anywhere within billions of cycles of operation, providing just the data needed to trace the problem quickly.

• Deterministic debug: They enable exact replication of test scenarios starting at one or more user-defined points in time anywhere within the test run. During system-level tests, many of the scenarios tested involve asynchronous activity that may be extremely hard to duplicate. By initiating tests at different times in the test sequence, one can also trace multiple problems detected from a single test run.

• Easy navigation: The systems facilitate navigation between the software and hardware levels. Automatically converting software symbols and function names into bus values is an extremely powerful way to ease the switch between the different views. Once you know where in the software you want to look, it's easier to just type the function name into a hardware breakpoint than to examine cycles and buses to determine when the embedded processor reaches that point.
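The name-to-address translation described in the last item can be pictured with a small sketch. It is hypothetical and not any emulator's actual interface: it assumes the symbol table has already been read from the firmware image into a dictionary, and that the trace exposes the processor's instruction-fetch address per cycle. The breakpoint fires on the first cycle where the fetch address equals the function's entry address.

```python
from typing import Iterable, Mapping, Optional


def break_on_function(name: str, symbols: Mapping[str, int],
                      fetch_trace: Iterable[tuple[int, int]]) -> Optional[int]:
    """Return the first cycle at which the instruction-fetch address bus
    carries the entry address of `name`, or None if it never does."""
    target = symbols[name]  # software symbol -> bus value to match
    for cycle, fetch_addr in fetch_trace:
        if fetch_addr == target:
            return cycle
    return None


# Hypothetical symbols and per-cycle fetch-address samples.
symbols = {"uart_rx_isr": 0x0800_1A40, "dma_start": 0x0800_2C00}
fetches = [(10, 0x0800_0000), (11, 0x0800_2C00), (12, 0x0800_2C04),
           (9_000, 0x0800_1A40)]
print(break_on_function("uart_rx_isr", symbols, fetches))  # 9000
```

The engineer supplies only the function name, and the lookup turns it into the bus value that the hardware breakpoint watches for.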

Conclusion

In an era of process nodes in the low double-digit nanometers, accelerating time-to-market and improving the product quality of embedded SoC designs are more essential than ever. Verification of hardware and embedded software continues to consume more than 50% of the development cycle, calling for a departure from traditional approaches that rely on separate verification tools and suffer from poor verification methodologies, if not an outright lack of them.

Advanced emulation platforms finally break the status quo and bring together the disparate parts of the engineering team, allowing them to plan more strategically and implement a debug approach based on multiple abstraction levels rather than simply delving into enormous quantities of data via waveform analysis.

By providing a common platform shared between hardware and software teams, and supporting transaction-based verification at speeds unmatched by any other verification engine, today's emulators accelerate SoC hardware/software integration well ahead of first silicon, shaving several months off a tight development schedule and improving product quality.

Reference

1. According to a mathematical model devised by Logic Automation (Logic Automation merged with Logic Modeling in 1992, and in 1994 Synopsys acquired Logic Modeling), in a highly competitive market, a one-quarter (three-month) delay in bringing to market a chip with a two-year product life wipes out more than one-third of the revenue it could have collected had it been on time.

Lauro Rizzatti is the senior director of marketing for emulation at Synopsys. He joined Synopsys via the acquisition of EVE. Prior to the acquisition, he was general manager of EVE-USA and marketing VP. In the past, he held positions in management, product marketing, technical marketing, and engineering.

About the Author

Lauro Rizzatti

Business Advisor to VSORA

Lauro Rizzatti is a business advisor to VSORA, an innovative startup offering silicon IP solutions and silicon chips, and a noted verification consultant and industry expert on hardware emulation. Previously, he held positions in management, product marketing, technical marketing and engineering.