This file type includes high-resolution graphics and schematics when applicable.

This article collects together 11 myths surrounding embedded benchmarking, as viewed from the perspective of the EEMBC industry consortium and its members. Since EEMBC is represented by a wide range of processor, system, and tool vendors, we thought it would be most interesting to ask some of the participating members to present their unique perspectives on embedded benchmarking.

But to start it off, let’s get to the question “What is embedded benchmarking?” At the highest level, embedded benchmarking can be any form of measurement to quantify the behavior of an embedded system. The most obvious examples are measurement of performance, energy, and memory consumption. However, benchmarking can also include reliability, level of interoperability, and temperature stability.

Historically, embedded benchmarking has focused on processors or microcontrollers. Through evolution, these processors and microcontrollers have become systems-on-a-chip (SoCs) and in turn the embedded benchmark focus has shifted to the system level. Furthermore, the concept of “embedded” has now grown to include many vertical applications ranging from ultra-low-power Internet of Things (IoT) devices to super-high-performance scale-out servers used in data centers.

Like all forms of benchmarking, embedded benchmarking is a combination of science and art. It’s science because the methodologies must be developed to yield consistent and repeatable data. It’s an art because benchmark developers must imagine all of the ways that users might try to thwart the good intentions of the benchmark. As an industry association, EEMBC has 20 years of benchmarking experience, but it’s a myth to assume that EEMBC benchmarks (or any benchmark) can always yield the perfect combination of science and art. Building good benchmarks is hard and requires an enormous collaborative effort.

1. A benchmark is always a benchmark.

Confusing myth? Well, it’s supposed to be confusing to reflect the unlimited number of embedded benchmarks to choose from. But most of what people call a benchmark is really just a workload. The majority of the “benchmarks” used in research or competitive analysis are workloads, not benchmarks.

A benchmark is a tool that allows the user to make fair and equitable comparisons. This means it must be portable across platforms, it must be repeatable, and it requires consistent build and runtime parameters. In other words, a benchmark is really the combination of a workload(s), plus parameters, plus run rules. Often times, in benchmark development, it’s more challenging to select the optimal set of parameters than it is to build the workload itself. (Myth contributed by Markus Levy, president at EEMBC)

2. Benchmarks are not representative of real-world applications.

This myth could also be entitled “the best benchmark is the user’s real application code.” Truly, a good benchmark must be representative of the target applications. There’s a paradox in embedded benchmarking. A benchmark defines a reference framework whereby devices that may be very different can be compared under identical conditions. Typically, this requires a synthetic application, which often doesn’t match any real-world application. If the benchmark result isn’t extendable to other applications, then it’s of little value.

An ideal benchmark would provide a different figure of merit for each target application. However, this is impractical given the almost unlimited variety of applications, notably in the IoT domain. The key to a useful benchmark is, as always, compromise. First, reduce the scope of the benchmark to a manageable subset of applications. Then for each subset, identify common tasks that dominate the targeted performance measurement.

For example, wearable devices are a subset of IoT edge nodes characterized by battery operation, wireless connectivity, data encryption, and analog sensing. Battery operation implies that the key performance indicator is energy efficiency. Therefore, a suitable benchmark for wearable devices measures energy used while performing analog-to-digital conversion, encryption, and wireless transfer (of course, separate profiles or subsets are needed for each wireless protocol). The result is not a real application’s energy consumption, but a good benchmark score implies good performance in any application sharing these characteristics. (Myth contributed by Mark Wallis, system architect at STMicro)

Actually, in some instances, this is not a myth. For example, major portions of the outdated Dhrystone MIPS are susceptible to a compiler’s ability to optimize the work away. Furthermore, library calls are made within the timed portion of Dhrystone. Typically, those library calls consume the majority of the time consumed by the benchmark. With other benchmarks (such as CoreMark), I have personally witnessed how compiler optimizations directly reflect real-world conditions.

The approach taken by your compiler vendor can also have a major impact. To get the best value from benchmarks (which, after all, are designed to be representative of real applications), they should be treated like real applications! If your compiler vendor builds in benchmark-special “defeat devices” to get the best benchmark score (remember the Volkswagen emissions scandal?), you likely won’t see such benefits in your application.

Another aspect by which benchmarks should be treated like real applications is to make sure they’re judged using three axes—performance and size and energy—not just performance! Many compiler vendors obtain high CoreMark (and other benchmark) scores using special knowledge of the benchmark’s control flow, apply extreme inlining/unrolling/function specialization and other techniques that cause extreme codebloat, and consume more energy by requiring more memory accesses. These techniques aren’t going to win in a real application, where your microcontroller has limited memory and energy resources.

Choose your compiler vendor wisely and you will see real value in using benchmarks as nature intended—to give you a representative indication of how your real-world application might benefit. (Myth contributed by Dave Edwards, founder and CEO of Somnium Technologies)

4. Embedded benchmarks are only about MIPS.

Any key performance indicator, such as energy efficiency, temperature stability, and mechanical reliability, may be compared. Benchmarks have been used to compare CPU performance since the early days of computing, with the Whetstone and the subsequent Dhrystone among the most well-known. More recently, the EEMBC consortium has developed benchmarks adapted to the embedded processing industry, such as CoreMark, FPMark (for floating point), MultiBench (for multicore scalability), and system benchmarks such as ANDEBench-Pro (for Android) and BxBench (for the browsing experience).

All of the benchmarks mentioned above essentially compare processing speed (time of execution) or cycle efficiency (number of clock cycles). This is understandable, since for decades the computing industry has been driven by increasing complexity and reduced execution times. However, a recent shift in emphasis has come about with the development of battery-powered mobile devices and the IoT, together with a growing awareness of the need to economize energy and resources. Pure processing speed is no longer the only performance indicator for consumers and developers.

Energy efficiency has become equally important, leading to a demand for benchmarks that compare the amount of energy used to execute tasks. The ULPBench and upcoming IoT-Connect benchmarks from EEMBC attempt to address this growing need. Moreover, the benchmark concept can be extended to any performance indicator of interest, such as RF transmission strength, and mechanical and electromagnetic reliability, already of great importance in industrial and automotive applications. (Myth contributed by Mark Wallis, system architect at STMicro)

5. All CPU instruction clocks are created equal.

Most are aware that CPU instruction cycles vary with the instruction. Some execute in one system clock cycle, while others take two or more. Knowing this, shouldn’t one be able to calculate the MIPS and convert to an accurate μA/MHz number? Wrong! When comparing a BRANCH instruction to a MOVE instruction, the branch consumes significantly more energy due to the amount of logic being switched inside the CPU.

To go a step further, most embedded systems utilize peripherals (e.g., ADC, UART, SPI). Depending on the system architecture, instructions to read/write peripherals can be very power-consuming. Embedded benchmarking helps tie boundaries around these variants and provides a more level playing field for comparing system-level implementations. (Myth contributed by Troy Vackar, manager, applications engineering at Ambiq Micro)

6. System performance is proportional to a CPU’s clock frequency.

In simple processor systems, doubling a processor’s clock frequency can possibly double the maximum performance supported by the system. However, in many modern microcontrollers, the embedded flash memories often have access speed limitations, e.g., within a 30-MHz range. As a result, when the processor’s clock is set to a higher frequency than the flash-memory access speed, wait states must be inserted to the bus system. Consequently, benchmark results such as the CoreMark/MHz figure could be lower when running at higher clock speeds.

A number of microcontrollers have integrated flash access accelerators or cache controllers to avoid such effects. Similar effects can also be found in peripheral systems, with some peripheral subsystems having limited clock speeds. Thus, the performance of I/O intensive tasks is restricted by such constraints rather than the processor’s clock speed. (Myth contributed by Joseph Yiu, senior embedded technology manager at ARM)

7. Performance of a core remains unchanged when the core is used to create an SoC.

Wrong. When architects define instruction set architectures (ISAs) and subsequently design cores utilizing those ISAs, performance measurements are carried out using pre-silicon, validation platforms such as cycle-accurate simulators, RTL simulators, and FPGA-based simulators. On these platforms, the core and the tightly coupled memory are accurately modeled. However, that’s not the case for much of the second- and third-level memory and the bus/interconnect hierarchy.

These platforms frequently offer low throughput, limiting the ability to benchmark complex application scenarios. Hence, IP core vendors provide benchmark scores for rather simple benchmarks like Dhrystone, CoreMark, or a few application kernels such as FIR and IIR filters. The problem arises when marketing materials reference these numbers to size up an SoC for customer applications.

The first step in this exercise is to estimate the application in terms of DMIPS/MHz or CoreMarks/MHz. The next step is to partition the application and allocate the performance to various cores in the SoC. This doesn’t take into account the fact that memory requirements in applications aren’t likely to be met by the tightly coupled memory associated with each core. Memory hierarchy and caching mechanisms must contend with this. In a realistic application, there will also be multiple masters apart from the cores simultaneously trying to access memory and system buses. All of this leads to performance drops as the benchmarking encompasses more and more of the SoC. (Myth contributed by Rajiv Adhikary, senior software engineer at Analog Devices)

8. For benchmarking energy efficiency, sleep power is the most important thing.

Earlier myths were couched as sleep power or active power being the most important thing. “Sleep” was previously used to describe the system’s state. More recently, “sleep’ refers specifically to a CPU state, not the state of the entire device. In modern, low-energy SoCs, many aspects of the device are fully functional while the CPU is asleep. Therefore, sleep functionality is important.

Operations such as automated sensing, peripheral-to-peripheral data delivery, and in the case of wireless subsystems, automated packet encryption and formation as well as decryption, decoding, and address resolution, are high-functionality tasks that can be performed either while the CPU is asleep or performed in parallel while the CPU is working on other tasks. This results in a much lower energy burn rate per operation when compared to systems whose tasks are performed by the CPU. (Myth contributed by Brent Wilson, director of applications engineering at Silicon Labs)

IoT is indeed a different game because of its scale, spanning across almost all aspects of our lives—agriculture, industrial control, healthcare, smart living, etc. Many big companies have made big investments in IoT, but the market is ramping slowly due to the general immaturity of the ecosystem and the discontinuity of smart device and cloud.

However, a revolution will dramatically change our ways of living and working. Since IoT is very much a segmented market, small companies, including startups, shall have opportunities to create disruptive business models and agile solutions in the domains of their expertise. Cloud computing is an essential part of the IoT, and thus the benchmarking could be quite different from ones used for traditional embedded CPUs.

Small companies can contribute to the benchmarking based on their product usage scenarios together with the cloud capabilities. “To be disruptive or to be disrupted”—it is a game for everybody. Embedded CPU benchmarking for IoT is essential even for small companies in the era of IoT. (Myth contributed by Xiaoning Qi, CEO of C-Sky)

10. Benchmarking security for IoT applications is impossible.

Security is IoT’s modern Hydra—the many-headed monster from Greek mythology. IoT applications face many threats, and defeating these requires an end-to-end, systems approach. Security involves hardware and software, algorithm and protocol, life-cycle and process challenges. And IoT applications have an attack surface that includes the entire internet.

So, then this must be impossible to benchmark? Incorrect. Though a single benchmark that covers all security aspects doesn’t exist, many aspects can be benchmarked. Like for safety, there are standards that define how to evaluate security, and there are security labs that perform independent evaluations. An example is the Common Criteria standard.

But it’s not just the level of security that should be benchmarked. The cost of security should also be benchmarked, and security features aren’t free. For cost-sensitive IoT markets, the price today often tips the scale against security. The many cybersecurity breaches in the news, however, are turning around public and government opinion. And efficient security solutions are becoming available for low-cost embedded devices, e.g., SecureShield, TrustZone, and OmniShield.

So how does one balance security and cost, and how do you select between different security solutions? In one example, EEMBC’s upcoming IoT Security benchmark will fill that gap. It’s the industry’s first independent benchmark for quantifying the performance and energy overhead of security for IoT end-nodes. EEMBC won’t be the Hercules who defeats the IoT security monster, but it can act as Hercules’ indispensable nephew Iolaus who cauterized the cut-off heads so they did not grow back. (Myth contributed by Ruud Derwig, senior architect at Synopsys)

11. It's okay to only benchmark the endpoint, without the network or server side.

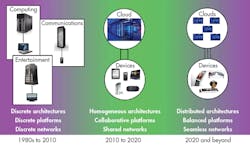

This is just wrong. The embedded market has evolved from standalone systems to machine-to-machine (M2M) networks and now to cloud-enabled IoT. The new IoT model means that endpoint behavior and performance are tightly bound to network and cloud services performance (see figure).

IoT customers don’t want to pay for stranded endpoint performance –endpoint performance that the overall system cannot use. Overpaying on capital expense (CAPEX) for an endpoint, with the hope that the stranded performance might be utilized someday, is proving to be a tough sell. We believe that analytics will move closer to the edge of the network. But, in general, moving analytics closer to the edge is likely to result in smarter gateways, not smarter and more expensive endpoints. The connections between endpoints, aggregation points, and cloud services will have a substantial impact on a system’s operational expenses (OPEX) and performance, as will the performance and scalability of cloud services. That means balancing CAPEX between endpoints, network, and services to reduce the system’s overall OPEX.

Efforts like EEMBC’s IoT benchmark suite are taking the first steps to address this embedded system-of-systems complexity, because endpoints no longer exist in a vacuum (except NASA’s deep space network, but that’s a different definition of “vacuum”). (Myth contributed by Paul Teich, principal analyst at TIRIAS Research)

About the Author

Markus Levy

Director of Machine Learning Technologies, NXP Semiconductors

Markus Levy joined NXP in 2017 as the Director of AI and Machine Learning Technologies. In this position, he is focused primarily on the technical strategy, roadmap, and marketing of AI and machine learning capabilities for NXP's microcontroller and i.MX applications processor product lines. Previously, Markus was chairman of the board of EEMBC, which he founded and ran as the President since April 1997. Mr. Levy was also president of the Multicore Association, which he co-founded in 2005.

Before that, he was senior analyst at Microprocessor Report and an editor at EDN magazine. Markus began his career at Intel Corp., as both a senior applications engineer and customer training specialist for Intel's microprocessor and flash-memory products. Markus volunteered for 13 years as a first responder—fighting fires and saving lives.