
The telecom industry is undergoing a rapid transformation from the traditional central office to virtualized data centers. This transformation is driven by demand for real-time service delivery and associated CapEx and OpEx savings. The resulting migration from purpose-built systems to disaggregated applications, control, and infrastructure is driving a broader ecosystem that’s increasingly using open solutions—including both open-source software and hardware.

The Open Compute Project (OCP) has a mission to deliver open-source hardware that meets the demands of multiple industries and provides greater choice, customization options, and cost savings. One working group within the OCP is focused on addressing the open-source hardware needs of the telecom industry, leveraging the Open Compute Project base model with applications and optimizations for central offices and service-provider-oriented data centers.

The CG-OpenRack-19 specification, a contribution from Radisys, recently gained OCP-ACCEPTED status. CG-OpenRack-19 is a scalable, carrier-grade, rack-level system that integrates high-performance compute, storage, and networking in a standard rack.

Radisys worked with communications service-provider and manufacturer partners to contribute the specification, thereby bringing open-rack concepts and benefits into service provider networks. While adapted for the telco environment, this open-rack contribution aligns with the principles of OCP, namely efficiency, scale, openness, and impact:

- Efficiency: A key OCP tenet is efficient design. The design must account for power delivery and conversion, thermal efficiency, and platform performance, and it must reduce overall infrastructure costs, code weight, latencies, and more.

- Scale: OCP contributions must be scale-out ready. In essence, the technology must be designed for maintenance in large-scale deployments, including physical maintenance, remote management, upgradability, error reporting, and appropriate documentation.

- Openness: Open-source contributions are encouraged, but they aren’t always possible. At the very least, every contribution should comply with an existing open interface or provide one.

- Impact: Any new contribution must create a meaningful positive impact within the OCP ecosystem. In the case of CG-OpenRack-19, the OCP-ACCEPTED specification provides a very high level of modularity and simplicity, thereby reducing system setup time and operator costs, and providing high performance per watt and per rack.

Addressing Telco Requirements

The Open Compute Project grew out of Facebook’s initiative to create an energy- and cost-efficient data center that could scale to meet explosive demand for data. The majority of these Facebook data centers are greenfield operations, meaning they’re built from the ground up.

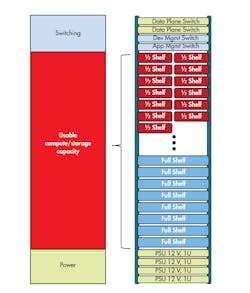

1. This block diagram showcases the components of the CG-OpenRack-19.

When adapting the OCP base model to the telecom environment, though, new requirements needed to be taken into account to accommodate existing central offices. These include the following:

- Physical: The solution has to suit both central-office retrofits and new telco data centers, with standard, readily available dimensions and environmental operating conditions. The rack must be able to support a wide range of power-consumption models to operate in either a large greenfield data center with a state-of-the-art cooling system, or in an environment that could be constrained to a small area with limited power.

- Content/Workload: The hardware should provide heterogeneous compute and storage elements, as seen in current options, plus support a range of brawny and light processing elements and storage technologies.

- Management: While an application-centric data center can be “lightly” managed at the infrastructure level, a service provider network has management needs across different levels of service offerings. A number of viable commercial and open-source solutions in the industry can provide this management, including open-source software from the CORD (Central Office Re-architected as a Datacenter) project. CORD provides open-source control and management software that’s a good fit for OCP hardware, especially the CG-OpenRack-19 variation.

- Networking/Interconnect: The specification must address connectivity options that meet requirements for the traditional central office as well as new virtualized data centers, including support for multiple top-of-rack (TOR) network switches at different interface speeds.

CG-OpenRack-19 Specification: A Closer Look

Building on the OCP Open Rack platform originally designed for emerging web-scale data-center environments, the CG-OpenRack-19 specification adds capabilities required for telecom applications. It’s delivered in the form of a rack and sled interoperability specification. The CG-OpenRack-19 specification enables the communications service provider to collaborate with Radisys or other vendors to rapidly design and develop sleds that meet their unique needs. The hardware can be developed within an OCP-ACCEPTED CG-OpenRack-19-based ecosystem.

Let’s look at how the specification was developed.

First, when considering the physical aspects or geometry of the solution, it was paramount that it align with standard practices for the telecom industry. The existing OCP Open Rack is wider than standard rack-unit (RU) spacing, and is deeper than some central-office environments will allow, making it problematic for retrofitting.

The new CG-OpenRack-19 specification utilizes a narrower 19-in. rack width with a 450-mm equipment aperture and fits standard RU spacing with a 1000- to 1200-mm cabinet depth, supporting typical GR-3160 floor-spacing dimensions. By creating standard dimensions and normalizing them, the specification provides an easy-to-deploy and retrofit solution. Figure 1 depicts a CG-OpenRack-19 block diagram.
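The dimensions quoted above can be turned into a simple retrofit check. The following Python sketch is illustrative only: the `Cabinet` class, the `dimension_issues` function, and the 1-mm tolerance are assumptions made for this example and aren’t part of the specification. Only the 450-mm aperture and the 1000- to 1200-mm depth range come from the text.

```python
from dataclasses import dataclass
from typing import List

# Figures quoted in the article.
EQUIPMENT_APERTURE_MM = 450.0
MIN_CABINET_DEPTH_MM = 1000.0
MAX_CABINET_DEPTH_MM = 1200.0


@dataclass(frozen=True)
class Cabinet:
    """Hypothetical description of a cabinet being evaluated."""
    name: str
    aperture_mm: float
    depth_mm: float


def dimension_issues(cabinet: Cabinet, tolerance_mm: float = 1.0) -> List[str]:
    """Return the mismatches against the quoted figures (empty if none).

    tolerance_mm is an assumed allowance for this sketch, not a spec value.
    """
    issues = []
    if abs(cabinet.aperture_mm - EQUIPMENT_APERTURE_MM) > tolerance_mm:
        issues.append(
            f"{cabinet.name}: aperture {cabinet.aperture_mm} mm "
            f"differs from {EQUIPMENT_APERTURE_MM} mm"
        )
    if not MIN_CABINET_DEPTH_MM <= cabinet.depth_mm <= MAX_CABINET_DEPTH_MM:
        issues.append(
            f"{cabinet.name}: depth {cabinet.depth_mm} mm is outside "
            f"{MIN_CABINET_DEPTH_MM}-{MAX_CABINET_DEPTH_MM} mm"
        )
    return issues


if __name__ == "__main__":
    # Example cabinet with made-up measurements.
    for issue in dimension_issues(Cabinet("row-3-bay-7", 450.0, 1250.0)):
        print(issue)
```

An empty result means the cabinet matches the quoted aperture and depth range. A real site survey would also need to cover floor spacing and environmental conditions, which this sketch doesn’t model.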

As we tackled content and workload management scenarios, we recognized the need to preserve heterogeneous compute and storage servers, so we’ve ensured that this remains in place. The design also supports a range of brawny and light processing elements. The newly accepted specification enables this processor variety by subdividing and/or aggregating the full- and half-width building blocks, as seen in Fig. 1.
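To see how full- and half-width building blocks combine, consider a rough shelf count. This Python sketch assumes that one shelf position holds either one full-width sled or two half-width sleds of the same height. That pairing rule and the `shelves_needed` function are assumptions for illustration, not wording taken from the specification.

```python
import math


def shelves_needed(full_width_sleds: int, half_width_sleds: int) -> int:
    """Count shelf positions for a mix of sleds.

    Assumes one full-width sled, or up to two half-width sleds,
    per shelf position (an illustrative simplification).
    """
    if full_width_sleds < 0 or half_width_sleds < 0:
        raise ValueError("sled counts must be non-negative")
    # An odd half-width sled still occupies a whole shelf position.
    return full_width_sleds + math.ceil(half_width_sleds / 2)


if __name__ == "__main__":
    # Three full-width storage sleds plus five half-width compute sleds.
    print(shelves_needed(full_width_sleds=3, half_width_sleds=5))
```

The sketch ignores sled height, so it’s only a first-pass count rather than a full rack layout.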

Management of the rack can be accomplished via an Ethernet-based out-of-band (OOB) management network connecting all nodes via a TOR management switch. Rack-level management layers such as Intel RSD or CORD can optionally be added. The management options were purposely kept open to allow for greater innovation.

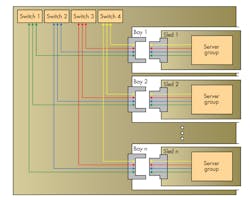

2. In this image, you can see the network connection to the sleds.

The networking and interconnect areas, with so many points of consideration, required quite a bit of focus. Along with the Ethernet TOR networking switches for I/O aggregation to nodes, we developed an innovative rear-cabled, blind-mate fiber cable system with flexible interconnect mapping (Fig. 2).

Although front-cabled solutions are also possible and acceptable, this new rear blind-mate mapping creates a much cleaner interface that delivers a plug-and-play solution, significantly reducing OpEx. This blind-mate optical connection isn’t telecom-specific, and can be used in other OCP-based rack solutions as well.

The system remains NEBS optional (L1/L3), but the new design is more suitable for deployment in a telecom environment.

We’ve also incorporated options in the architecture for ac (120-250 V) and dc (‒48 V) power to the rack to meet central-office demands as needed. And, recognizing that telcos have a range of environments, some greenfield, others not, we incorporated a wide range of power consumption per rack, scalable from 5 to 35 kW (typical deployments are at 7 to 15 kW), which makes the solution viable for both small and large spaces. The fans have also been right-sized to hit the sweet spot between quiet acoustics and high airflow.
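The per-rack power range lends itself to a quick budgeting calculation. The Python sketch below estimates how many sleds fit within a chosen rack power budget. The `max_sleds` function, the per-sled wattage, and the overhead figure for switches and fans are hypothetical values chosen for illustration. Only the 5- to 35-kW range comes from the text.

```python
# Per-rack power range quoted in the article.
RACK_POWER_MIN_KW = 5.0
RACK_POWER_MAX_KW = 35.0


def max_sleds(rack_budget_kw: float, sled_power_w: float,
              overhead_w: float = 0.0) -> int:
    """Estimate how many sleds fit within a rack power budget.

    overhead_w is an assumed allowance for switches, fans, and
    power-conversion losses; it is not a figure from the specification.
    """
    if not RACK_POWER_MIN_KW <= rack_budget_kw <= RACK_POWER_MAX_KW:
        raise ValueError("rack budget is outside the 5 to 35 kW range")
    if sled_power_w <= 0:
        raise ValueError("sled power must be positive")
    available_w = rack_budget_kw * 1000.0 - overhead_w
    # Round down: a partially powered sled doesn't count.
    return max(0, int(available_w // sled_power_w))


if __name__ == "__main__":
    # Made-up example: 400-W sleds with 600 W of shared overhead,
    # at the two ends of the typical 7 to 15 kW deployment range.
    for budget_kw in (7.0, 15.0):
        print(budget_kw, "kW ->", max_sleds(budget_kw, 400.0, 600.0), "sleds")
```

In practice the physical shelf count also caps the number of sleds, so the lower of the two limits applies.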

Summary

The CG-OpenRack-19 specification combines ideas and concepts that are currently and successfully applied by the Open Compute Project and adds optimization for the telecom industry. One example of an open modular rack and sled architecture based on the specification is Radisys’ DCEngine hyperscale data-center solution. DCEngine is engineered to meet the environment, power, and seismic requirements of the telco industry, and it includes electrical shielding elements to avoid the emissions experienced by current racks.

By leveraging CG-OpenRack-19 for an open hardware solution, communications service providers can rapidly gain the advantages of the data-center infrastructure, while meeting the real-time service requirements of their subscribers.