Energy Efficiency of Computing: What’s Next?


Any conversation among semiconductor experts will inevitably drift to the end of Moore’s Law. We all like to speculate about it, but the truth is that no one can put a precise date on when it will end. We do know, however, that we’ve seen a big slowdown in the post-2000 period in the performance and efficiency gains that we’ve been able to achieve year after year. Fortunately, for at least one aspect of computing efficiency, we’ve seen more rapid improvements than expected, and we believe these will continue for at least a few more years.

There are various ways to measure computing efficiency. One of the most telling and easily calculated, though, is the efficiency of computing at peak computational output [1], typically measured in computations per kilowatt-hour.

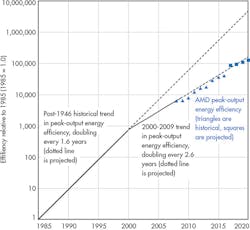

For a long time, processor performance and peak output energy efficiency moved forward together at a rapid clip. The gains started well before 1965, the year Gordon Moore published his first projection [2]. Peak output efficiency doubled about every 1.6 years from the dawn of the computer age [3]. As a result, the energy efficiency of computation at peak computing output improved by ten orders of magnitude from the mid-1940s to the year 2000—a factor of more than ten billion over more than five decades.

By the turn of the millennium, weighed down by the physics of CMOS circuit scaling, the growth in peak output energy efficiency started to slow, doubling only about every 2.6 years [4] (Fig. 1). Chipmakers turned to architectural changes, such as multiple cores for CPUs, to compensate for the slowdown, but they weren’t able to maintain historical growth rates of performance and efficiency.

The difference between these two growth rates is substantial. A doubling every year and a half results in a 100-fold increase in efficiency every decade. A doubling every two and a half years yields just a 16-fold increase.
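The arithmetic behind these two figures can be checked with a short sketch. The function name `growth_factor` is ours, introduced only for illustration; it assumes steady exponential growth with a fixed doubling time.

```python
def growth_factor(doubling_time_years: float, span_years: float = 10.0) -> float:
    """Multiplicative gain over span_years for a fixed doubling time."""
    return 2.0 ** (span_years / doubling_time_years)


fast = growth_factor(1.5)  # 2^(10/1.5), roughly a 100-fold gain per decade
slow = growth_factor(2.5)  # 2^(10/2.5) = 2^4, a 16-fold gain per decade

print(f"Doubling every 1.5 years: {fast:.1f}x per decade")
print(f"Doubling every 2.5 years: {slow:.1f}x per decade")
```

Because the doubling time sits in the exponent, a seemingly modest stretch from 1.5 to 2.5 years cuts the per-decade gain by about a factor of six.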

Efficiency Trends in Typical Use

Fortunately, the efficiency gains we’ve already obtained over the last half-century or so were more than sufficient to launch us into an entirely new design space [5]. To a large extent, the frontiers of engineering have shifted from creating the fastest CPUs to building ultra-low-power processors. The latter can be used not only inside battery-powered smartphones, tablets, and wearable devices, but also in large-scale computing settings such as data centers.

As a result, maximizing peak output efficiency is no longer the central goal for many chip designers. Instead, the engineering focus has shifted more to minimizing average electricity use, often to extend battery life.

Few computing devices run at full output all of the time (with some exceptions, such as servers in specialized scientific computing applications [6]). Instead, most computers are used intermittently, typically operating at their computational peak for 1-2% of the time (see footnote 1). Fully optimized systems reduce power use for the 98%+ of the time a device is idle or off. The best power-management designs make a computer “energy proportional,” in that electricity use and computational output go up proportionally with utilization, and electricity use goes to zero (or nearly so) when the device is idle [7].

Peak output efficiency, the metric for many earlier studies, is an indicator of how efficiently computers run when at full speed. Koomey et al. [3] calculate that metric using Equation 1:

The key inputs are measurements or estimates of computations per hour and electricity consumption per hour at maximum computing output.
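Equation 1 itself is not reproduced in this copy of the article. Based on the inputs just described, it presumably takes the following form. This is a reconstruction rather than the authors' original typesetting, and the wording of each term is our assumption:

```latex
% Reconstructed from the surrounding text; requires amsmath for \text and \tag
\[
\text{Peak output efficiency}
  = \frac{\text{computations per hour at maximum computing output}}
         {\text{electricity consumption per hour at maximum computing output (kWh)}}
\tag{1}
\]
```

The result is expressed in computations per kilowatt-hour, matching the unit used for this metric earlier in the article.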

As discussed above, a metric that’s focused on peak performance says little about how most current-day computers perform in normal use, so the more relevant consideration has become what we might call “typical-use energy efficiency,” or TUEE [1], which would capture the benefits of low-power modes for typical usage cycles for computing devices.

To create such a metric, one could define a standard usage profile for computing devices in a particular application (for example, laptop computers in business use), estimate the number of computations performed over the year, and divide by the number of kilowatt-hours per year of electricity use, as in Equation 2. Such a calculation would account not only for the fact that computers rarely operate at their maximum output, but also for how well and how fast the computer shifts to idle or sleep modes.
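Equation 2 is likewise missing from this copy, but the description above amounts to annual computations divided by annual kilowatt-hours. A minimal sketch of that calculation follows. The `Mode` class, the three-mode profile, and every number in it are hypothetical values chosen for illustration, not measurements or a standard usage profile.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 8760


@dataclass
class Mode:
    """One operating mode in a hypothetical usage profile."""
    name: str
    share_of_year: float          # fraction of the year spent in this mode
    power_watts: float            # average power draw while in this mode
    computations_per_hour: float  # computational output while in this mode


def typical_use_efficiency(profile: list[Mode]) -> float:
    """Annual computations divided by annual electricity use (per kWh)."""
    total_share = sum(m.share_of_year for m in profile)
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError("mode shares must sum to 1")
    computations = sum(
        m.share_of_year * HOURS_PER_YEAR * m.computations_per_hour
        for m in profile
    )
    kwh = sum(
        m.share_of_year * HOURS_PER_YEAR * m.power_watts / 1000.0
        for m in profile
    )
    return computations / kwh


# Illustrative numbers only: a device at peak 2% of the time.
baseline = [
    Mode("peak", 0.02, 30.0, 1.0e12),
    Mode("idle", 0.28, 5.0, 0.0),
    Mode("sleep", 0.70, 0.5, 0.0),
]
# Same device with idle power halved and nothing else changed.
improved = [
    Mode("peak", 0.02, 30.0, 1.0e12),
    Mode("idle", 0.28, 2.5, 0.0),
    Mode("sleep", 0.70, 0.5, 0.0),
]

tuee_baseline = typical_use_efficiency(baseline)
tuee_improved = typical_use_efficiency(improved)
print(f"Baseline: {tuee_baseline:.3e} computations/kWh")
print(f"Improved: {tuee_improved:.3e} computations/kWh")
```

In this sketch, halving idle power raises the metric even though peak performance and peak power are unchanged, which is exactly the kind of gain a peak-only metric cannot see.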

The units of this metric would also be computations per kilowatt-hour, but the result couldn’t be compared in absolute terms with peak output energy efficiency (because both annual computations and energy use are measured differently). The trend over time, however, could be compared.

Unfortunately, no one has yet created a universally accepted usage profile capturing computational output in the field over the course of a year, and great controversy still swirls about the feasibility of creating such metrics. Many researchers have wrestled with this problem in the past, including Knight [8, 9, 10], Moravec [11], McCallum [12], and Nordhaus [13]. There are some cases where this calculation is possible, though (see trends derived from the SPECpower data presented below).

To avoid these issues and derive a meaningful trend in TUEE, one could divide an index of the maximum computing output of a particular computer (normalized to some base year) by an index of the average electric power consumption needed to operate such a computing device over the course of a year (normalized to the same base year). This metric would allow us to account for improvements in the active power, active task time, standby power, transition times, and task frequency of computing devices, while also correctly accounting for the increase in computational output for newer devices. It assumes that processor utilization remains constant over time and that computational output increases at the same rate as maximum processor speed, but doesn’t require measurement of exactly how many computations a computer does over a year (see Equation 3).
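Equation 3 is not reproduced in this copy. From the description above, it is presumably a ratio of two indices normalized to the same base year. The symbols below are our own notation, introduced for illustration: $C^{\max}_t$ is the maximum computing output of a device from year $t$, $\bar{P}_t$ is its average electric power over a year of typical use, and $t_0$ is the base year.

```latex
% Reconstructed from the surrounding text; requires amsmath for \text and \tag
\[
\text{TUEE index}_t
  = \frac{C^{\max}_t \,/\, C^{\max}_{t_0}}
         {\bar{P}_t \,/\, \bar{P}_{t_0}}
\tag{3}
\]
```

By construction the index equals 1 in the base year, so only its trend over time carries meaning, not its absolute value.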

The encouraging trend is that in recent years, the rate of improvement in typical-use efficiency has exceeded the improvement rate in peak output efficiency. With the advent of mobile computing and global energy standards that emphasize average power in the last couple of decades (see footnote 2), improvements in typical-use efficiency have been rapid.

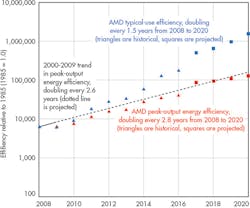

Figure 2 (supported by Appendix Table A-3, which can be downloaded at http://www.analyticspress.com/computingefficiency.html) indicates that typical-use efficiency of Advanced Micro Devices (AMD) notebook products doubled every 1.7 years or so from 2008 to 2016. This trend, obtained by dividing an index of general-purpose compute capability by an index of typical-use energy consumption (as in Equation 3), is very responsive to improvements in power management, low-power modes, accelerator integration, and other approaches that aggressively reduce average power.
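The article does not spell out how the doubling time is extracted from the index series. One common approach, sketched here as an assumption rather than the authors' documented method, is a least-squares fit of the base-2 logarithm of the index against the year. The series below is synthetic, generated from a 1.7-year doubling time to show that the fit recovers it; it is not the Table A-3 data.

```python
import math


def fit_doubling_time(years, index):
    """Fit log2(index) against year by least squares; return years per doubling."""
    logs = [math.log2(v) for v in index]
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(logs) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, logs))
    slope = sxy / sxx  # doublings per year
    return 1.0 / slope


# Synthetic index, normalized to 1.0 in the 2008 base year.
years = list(range(2008, 2017))
synthetic_index = [2.0 ** ((y - 2008) / 1.7) for y in years]

estimate = fit_doubling_time(years, synthetic_index)
print(f"Estimated doubling time: {estimate:.2f} years")
```

With real data the points would scatter around the fitted line, and the estimate would carry some uncertainty that this sketch does not quantify.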

Trends in typical-use efficiency are currently much more important than those in peak output efficiency for mobile devices and desktop computers. For servers, the blend of peak output and idle/low-power states that constitute typical use is more weighted toward peak than for mobile devices, but the low server utilization typical in enterprise data centers means that idle mode power can be important for these devices as well.

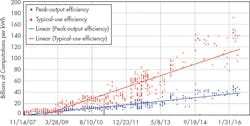

Figure 3 shows the recent trend in server peak-output and typical-use efficiency using the SPECpower benchmark, calculated using Equation 2 (see footnote 3). The improvement rates from 2009 to 2016 are consistent with mobile device trends. The data show doubling in peak output efficiency every 2.9 years, but doubling of typical-use efficiency (see footnote 4) every 1.7 years.

What’s the Potential for Further Improvements?

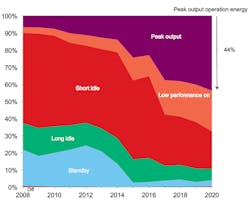

These approaches still appear to have ample opportunity for further progress, which leads us to project an efficiency doubling time of 1.5 years for typical-use efficiency over the whole period of 2008 to 2020. As with all exponential trends, this one also will moderate as the idle power approaches zero and energy use is once again dominated by the small active period power. Fig. 4 shows that with aggressive use of idle states and power reductions thereof, the small peak computation period of operation in laptop computers should again begin to dominate total energy in the next decade.
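The moderating effect described above can be illustrated with a two-state energy model. This is a simplified sketch, not the model behind Fig. 4: the 2% active fraction follows the 1-2% figure cited earlier, while the 15-W active power and the sequence of idle power levels are hypothetical.

```python
def active_energy_share(active_fraction, active_watts, idle_watts):
    """Fraction of total energy consumed during the active (peak) period."""
    active = active_fraction * active_watts
    idle = (1.0 - active_fraction) * idle_watts
    return active / (active + idle)


ACTIVE_FRACTION = 0.02  # device at computational peak 2% of the time
ACTIVE_WATTS = 15.0     # hypothetical power at peak output

# Hypothetical idle power, halved with each successive design generation.
idle_levels = [4.0, 2.0, 1.0, 0.5, 0.25, 0.125]
shares = [
    active_energy_share(ACTIVE_FRACTION, ACTIVE_WATTS, watts)
    for watts in idle_levels
]

for watts, share in zip(idle_levels, shares):
    print(f"idle = {watts:5.3f} W -> active period uses {share:5.1%} of energy")
```

Under these assumptions the active period accounts for less than a tenth of total energy at 4 W idle but for most of it once idle power falls well below 1 W, at which point further idle savings yield little.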

It’s a hopeful sign that the typical-use efficiency is less dependent on the (decelerating) improvements in peak output efficiency, and more amenable to architectural and power-management-driven gains (fine-grained low-power modes, fast entry/exit of such modes, and use-case-driven optimizations). Key to these gains is reducing or eliminating power that isn’t directly involved in immediate computation. In legacy systems, many elements are powered up, but idle, waiting for something to do. For instance, additional CPU cores in a multicore system often sit idling, waiting for a task. Disk drives may remain powered up waiting for an access request.

Improving efficiency involves aggressively putting these unused elements into a low-power state when possible, and then reducing the latency of exiting that state when required so that there’s no performance impact from waiting for a response. The power used when in the low-power state is, of course, an important area for innovation. Designers often use several graduated states that provide lower power, coupled with higher latency for lower power levels [14, 15]. Power management then has the goal of choosing the best state for each system element based on workload and system-usage heuristics.
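A simple version of such a heuristic can be sketched as follows. This is an illustration of the general idea only, not any vendor's algorithm: the state names, power levels, latencies, and the `min_residency_us` break-even parameter are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IdleState:
    """One graduated low-power state (all values hypothetical)."""
    name: str
    power_mw: float          # power drawn while resident in the state
    exit_latency_us: float   # time needed to resume work
    min_residency_us: float  # idle time needed for entry to pay off


# Deeper states draw less power but take longer to exit.
STATES = [
    IdleState("active-idle", 800.0, 0.0, 0.0),
    IdleState("clock-gated", 300.0, 10.0, 50.0),
    IdleState("power-gated", 40.0, 200.0, 1000.0),
    IdleState("suspend", 5.0, 5000.0, 50000.0),
]


def choose_state(predicted_idle_us, latency_limit_us, states=STATES):
    """Pick the lowest-power state that meets both constraints.

    A state is eligible if it can wake within the latency limit and the
    predicted idle period is long enough to justify entering it.
    """
    eligible = [
        s for s in states
        if s.exit_latency_us <= latency_limit_us
        and s.min_residency_us <= predicted_idle_us
    ]
    return min(eligible, key=lambda s: s.power_mw)


print(choose_state(30, 100).name)       # short idle period
print(choose_state(5000, 1000).name)    # longer idle, relaxed latency limit
print(choose_state(100000, 100).name)   # long idle, strict latency limit
```

The third call shows the trade-off described above: even with a very long predicted idle period, a strict wake-up latency limit rules out the deepest states.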

As shown in Fig. 4, the innovations that enable dramatic efficiency gains in typical use are likely to encounter the same limits that slowed the gains in peak output efficiency. Typical energy usage asymptotically becomes dominated by peak output, which is still hostage to the Moore’s Law slowdown. For this reason, we’ll have to fundamentally rethink how we design computers in the next few decades, because of real physical limits to our current architectures and transistors [5]. Radically new technologies will mean different constraints and thus different rates of efficiency growth. However, in the meantime, we can enjoy the energy-efficiency gains of typical-use optimizations that have put us back on the Moore’s Law curve for at least a few more years.

Conclusions

For the great majority of today’s computing systems, peak output efficiency is not the most important metric when considering how most computing devices are used. Through smart power management, these devices are designed to dynamically self-optimize their operation to improve their efficiency in normal use.

In a sense, they’re reallocating a tiny fraction (0.05% in the case of a 32-bit microcontroller in AMD’s recent “Kaveri” notebook design) of their compute capabilities to optimize efficiency and thereby achieve more rapid efficiency improvements than those of their constituent silicon components. It’s a foretaste of how intelligent devices in the future will break through what we now consider to be the limits to efficiency and performance.

Footnotes:

1. Based on the widely used commercial battery life benchmark MobileMark 2014 as a proxy for real usage.

2. For an example, see http://www.energystar.gov/products/certified-products/detail/computers.

4. In this case, we estimate typical-use efficiency with peak performance of each server (in computations per second) divided by the power use (in watts, or joules/second) at 10% utilization (which is typical for servers in enterprise companies, and thus represents average power and energy consumption for servers operated at that utilization level). The peak output efficiency for sophisticated data center operators like Google, Microsoft, and Amazon, whose servers typically operate at much higher utilization levels than do enterprise servers, is growing at rates comparable to the peak output efficiency trends calculated from the SPECpower data.

References:

[1] Koomey, Jonathan. 2015. "A primer on the energy efficiency of computing." In Physics of Sustainable Energy III: Using Energy Efficiently and Producing it Renewably (Proceedings from a Conference Held March 8-9, 2014 in Berkeley, CA). Edited by R.H. Knapp Jr., B.G. Levi, and D.M. Kammen. Melville, NY: American Institute of Physics (AIP Proceedings). pp. 82-89.

[2] Moore, Gordon E. 1965. "Cramming more components onto integrated circuits." In Electronics. April 19.

[3] Koomey, Jonathan G., Stephen Berard, Marla Sanchez, and Henry Wong. 2011. "Implications of Historical Trends in The Electrical Efficiency of Computing." IEEE Annals of the History of Computing. vol. 33, no. 3. July-September. pp. 46-54.

[4] Koomey, Jonathan, and Samuel Naffziger. 2015. "Efficiency's brief reprieve: Moore's Law slowdown hits performance more than energy efficiency." In IEEE Spectrum. April.

[5] Koomey, Jonathan G., H. Scott Matthews, and Eric Williams. 2013. "Smart Everything: Will Intelligent Systems Reduce Resource Use?" The Annual Review of Environment and Resources. vol. 38, October. pp. 311-343.

[6] Barroso, Luiz André, Jimmy Clidaras, and Urs Hölzle. 2013. The Datacenter as a Computer: An Introduction to the Design of Warehouse-Scale Machines. 2nd ed. San Rafael, CA: Morgan & Claypool.

[7] Barroso, Luiz André, and Urs Hölzle. 2007. "The Case for Energy-Proportional Computing." IEEE Computer. vol. 40, no. 12. December. pp. 33-37. [http://www.barroso.org/]

[8] Knight, Kenneth E. 1963. A Study of Technological Innovation-The Evolution of Digital Computers. Thesis, Carnegie Institute of Technology.

[9] Knight, Kenneth E. 1966. "Changes in Computer Performance." Datamation. September. pp. 40-54.

[10] Knight, Kenneth E. 1968. "Evolving Computer Performance 1963-67." Datamation. January. pp. 31-35.

[11] Moravec, Hans. 1998. "When will computer hardware match the human brain?" Journal of Evolution and Technology. vol. 1,

[12] McCallum, John C. 2002. "Price-Performance of Computer Technology." In The Computer Engineering Handbook. Edited by V.G. Oklobdzija. Boca Raton, FL: CRC Press. pp. 4-1 to 4-18. [http://www.jcmit.com/]

[13] Nordhaus, William D. 2007. "Two Centuries of Productivity Growth in Computing." The Journal of Economic History. vol. 67, no. 1. March. pp. 128-159.

[14] Wang, Alice, and Samuel Naffziger, ed. 2008. Adaptive Techniques for Dynamic Processor Optimization: Theory and Practice (Integrated Circuits and Systems). New York, NY: Springer Science + Business Media.

[15] Gough, Corey, Ian Steiner, and Winston A. Saunders. 2015. Energy Efficient Servers: Blueprints for Data Center Optimization. New York, NY: Apress Media.

About the Author

Sam Naffziger

Senior Vice President and Corporate Fellow, AMD

Samuel Naffziger is a Senior Vice President and Corporate Fellow at AMD responsible for technical strategy with a focus on power technology development. He has been the lead innovator behind many of AMD’s low-power features and chiplet architecture. He has over 30 years of industry experience with a background in microprocessors and circuit design, starting at Hewlett Packard, moving to Intel, and then at AMD since 2006.

He received the BSEE from CalTech in 1988 and MSEE from Stanford in 1993 and holds over 120 U.S. patents in the field. He has authored dozens of publications and presentations on processors, architecture, and power management and is a Fellow of the IEEE.

Jonathan Koomey

Lecturer

Jonathan Koomey, Ph.D. is a lecturer at Stanford University and is one of the world’s foremost experts on energy and environmental aspects of information technology. His latest book is Cold Cash, Cool Climate: Science-based Advice for Ecological Entrepreneurs. His website is www.koomey.com.