Software And System Solutions Drive Datacenter Energy Efficiency

Datacenters in the U.S. consume anywhere from 1.5% to 3.0% of the nation’s energy production. IT infrastructure and cloud computing are growing in response to the overwhelming growth in computed, stored, and transferred data. Studies show that the core elements of datacenters (servers, storage, and networking) typically run in low-utilization conditions where they operate at an inefficient ratio of computing performance to energy consumption.

System techniques such as virtualization can increase utilization and allow system operation in the efficient region of the power-performance space. Improved utilization is not always feasible on a sustained basis, however. Protocol improvements can help close the gap by allowing key interface intellectual property (IP) such as PCI Express (PCIe) to operate in an energy-proportional manner, bringing significant power savings in the idle states.

Datacenter Traffic And Workload Growth

From Google searches to Amazon shopping to the more mundane corporate IT infrastructure, datacenters are a vital part of the everyday technology landscape. Their ubiquity makes how we utilize and optimize them critical.

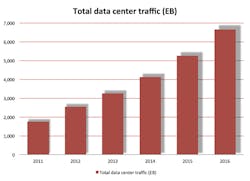

Cisco Systems forecasts that annual datacenter traffic will reach 6.6 zettabytes (one zettabyte is 10^21 bytes) by the end of 2016, with a compound annual growth rate (CAGR) of 31% from 2011 to 2016 (Fig. 1).[1] In 1992, daily Internet traffic was on the order of 100 Gbytes. In 2012, more than 12,000 Gbytes of data crossed the Internet every second. This number is projected to triple by 2017.

New applications continue to drive the need for increased performance. The need for faster delivery of greater quantities of data is a key driver of datacenter growth. According to Josh James of Domo.com, nearly 700,000 Facebook updates occur each minute.[2] Google receives more than 2 million queries in the same period of time. Cisco Systems says that mobile and video applications are among the biggest drivers of Internet data.[3]

In parallel, a transformation in the datacenter landscape is causing a rapid shift from traditional datacenters to cloud datacenters. Cloud datacenters offer application and data access on multiple platforms to an increasingly mobile population of business users and consumers. Declining storage costs, coupled with “unlimited” e-mail services and a plethora of cloud-based solutions, have driven up the amount of digital material stored.

Two-thirds of traffic in 2016 is forecast to be cloud-based, displacing traditional datacenter traffic. While compute workloads on traditional servers will grow by approximately 33%, virtualized workloads on cloud servers are projected to increase by 200%, Cisco Systems says.[4] The company’s study also finds that nearly three-fourths of datacenter traffic is internal to the datacenter, either between servers or between servers and storage.

Impact On Energy Consumption

Online datacenters and cloud computing facilities are designed for handling unpredictable peak usage. While breaking news can bring servers down, e-tailers build out capacity to handle the peak loads of infrequent events such as Cyber Monday. Further, to protect against downtime in the event of power failure, many datacenters use diesel backup generators that are significant polluters.

The 2010 Report on Toxic Air Contaminants from the Bay Area Air Quality Management District lists the Bay Area’s refineries as top polluters, followed by utilities and dry cleaners.[5] Unbeknownst to most denizens of Silicon Valley, many of the area’s tech titans can be found sandwiched between the refineries, utilities, and dry cleaners on this list.

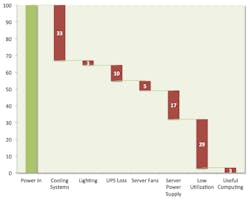

Published research estimates that active servers use less than 20% of datacenter energy.[6,7] A significant amount of energy is spent on cooling needs as well as power consumption in idle states (Fig. 2).

The waterfall chart is instructive in providing insight into areas where energy savings might be found.[8] Power consumption can be reduced in low-utilization states, either through higher utilization (more computing for the same power consumption) or through better design. These savings can have a ripple effect in system cooling costs.

Server energy consumption varies widely. If the average server draws 850 W, then average daily consumption is approximately 20 kWh, or nearly 7.5 MWh per year.[9] At an average energy price of 9.83 cents/kWh, this server costs about $731 per year in energy. The cooling and infrastructure energy costs for this server make this number significantly higher.
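
The arithmetic behind these figures can be checked with a short sketch. It assumes a constant average draw over the whole year and excludes cooling and infrastructure overhead; the function name and constants are illustrative, not from the cited toolkit.

```python
HOURS_PER_DAY = 24
DAYS_PER_YEAR = 365


def annual_energy_cost(avg_power_w, price_cents_per_kwh):
    """Return (daily kWh, annual MWh, annual cost in dollars) for a constant draw."""
    daily_kwh = avg_power_w * HOURS_PER_DAY / 1000.0
    annual_kwh = daily_kwh * DAYS_PER_YEAR
    annual_cost = annual_kwh * price_cents_per_kwh / 100.0
    return daily_kwh, annual_kwh / 1000.0, annual_cost


# Figures quoted in the text: an 850 W average draw at 9.83 cents per kWh.
daily_kwh, annual_mwh, cost = annual_energy_cost(850, 9.83)
print(f"{daily_kwh:.1f} kWh/day, {annual_mwh:.2f} MWh/year, ${cost:.2f}/year")
```

The unrounded results are about 20.4 kWh per day and 7.45 MWh per year, which matches the quoted annual cost to within rounding.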

Server Utilization And Energy Consumption

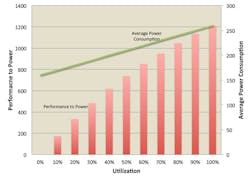

A key study on the topic by Barroso and Hölzle at Google used SPECpower_ssj2008 as a benchmark.[10] It found that average power declines nearly linearly with utilization, but the performance-to-power ratio (an indicator of efficiency) degrades much faster (Fig. 3). The reason is that system power consumption falls more slowly than performance as utilization drops.

At utilizations of less than 50%, a significant gap exists due to idle power consumption in servers. For optimal use, you should operate in the region on the right side of the chart.
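
A minimal sketch of this relationship follows. It assumes a simple linear power model in which an idle server draws half of its peak power and performance scales directly with utilization. The 50% idle fraction and the function names are illustrative assumptions, not measurements from the study.

```python
def relative_power(utilization, idle_fraction=0.5):
    """Power as a fraction of peak under a linear model: idle + (1 - idle) * u."""
    return idle_fraction + (1.0 - idle_fraction) * utilization


def relative_efficiency(utilization, idle_fraction=0.5):
    """Performance per watt, relative to its value at 100% utilization."""
    return utilization / relative_power(utilization, idle_fraction)


for u in (0.1, 0.3, 0.5, 0.7, 1.0):
    print(f"utilization={u:.0%}  power={relative_power(u):.0%}  "
          f"efficiency={relative_efficiency(u):.0%}")
```

Under these assumptions, efficiency at 30% utilization is less than half of its peak value, whereas a perfectly proportional server (`idle_fraction=0`) would hold full efficiency across the whole range.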

Barroso and Hölzle further measured server utilization patterns over a period of time and found an interesting distribution. Their measurements showed that servers were never really idle, and most of the time was spent in the low-utilization regions in the 10% to 50% range. These studies aim to find ways to achieve “energy proportionality,” i.e., energy consumption that scales in proportion to utilization, so that a lightly loaded server draws correspondingly little power.

The study states: “This activity profile turns out to be a perfect mismatch with the energy-efficiency profile of modern servers in that they spend most of their time in the load region where they are most inefficient.”

The results of the studies cited so far provide some options for designers to consider to reduce energy wastage in servers. Software and operating-system scheduling can be used to improve utilization through virtualization and batching of compute loads. Improving system energy consumption under low utilization is a task for systems designers. This improvement can be aided significantly through improved ASIC designs that enter much lower levels of energy consumption under low utilization conditions.

Raising Utilization

Batch processing is one of the old workhorses of computer technology. For applications that do not need to run interactively or in real time, improved scheduling can create better utilization. However, many applications in today’s connected world require instant gratification. These applications do not lend themselves well to batch processing.

Virtualization is one of the key drivers of cloud computing services. Amazon Web Services, Rackspace, and Force.com are major names in this arena. Their services have achieved widespread adoption. For example, the online movie service Netflix runs on Amazon’s Web servers.

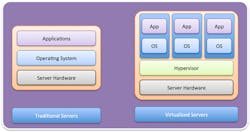

Virtualization targets underutilized servers to reduce energy wastage by moving the operating point to the right along the x-axis in Figure 3. Virtualization allows a single computer to run multiple “guest” operating systems (OSs) by abstracting the hardware resources (CPU, memory, I/O) through a hypervisor.

In a virtualized system, a guest virtual machine (VM) can be migrated from one hardware system to another (Fig. 4). A VM that runs out of resources such as memory during peak usage can be migrated to another server that is currently underutilized. This relocation requires the ability to manage hardware and other resources so the migration is transparent to the VM. Network addresses are a good example of a resource that needs special handling to work correctly when a VM is migrated to a server on a different subnet in a datacenter.
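
The target-selection step can be sketched as follows. This is a simplified illustration that considers memory only and picks the least-utilized host with enough free capacity; the `Host` class and `pick_migration_target` function are hypothetical names, and a real hypervisor would also weigh CPU, I/O, and network placement.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    mem_total_gb: float
    mem_used_gb: float

    @property
    def mem_free_gb(self):
        return self.mem_total_gb - self.mem_used_gb

    @property
    def utilization(self):
        return self.mem_used_gb / self.mem_total_gb


def pick_migration_target(hosts, source, vm_mem_gb):
    """Return the least-utilized host other than source that can hold the VM, or None."""
    candidates = [h for h in hosts
                  if h is not source and h.mem_free_gb >= vm_mem_gb]
    return min(candidates, key=lambda h: h.utilization, default=None)


hosts = [Host("a", 64, 60), Host("b", 64, 20), Host("c", 128, 100)]
target = pick_migration_target(hosts, hosts[0], vm_mem_gb=16)
print(target.name if target else "no host has room")
```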

In practice, virtualization increases utilization, but still runs the risk of underutilization at non-peak loads. Further, strict service-level agreements (SLAs) require providers to guarantee performance levels. To meet them, cloud providers must maintain spare capacity for peak usage, which shows up as unused capacity (and low utilization) in the average case, although at a lower level than if systems were completely dedicated to single OSs or applications.

Further, datacenter operators must keep redundant equipment on tap to allow for failures. This set of idle machines adds to the inefficient energy consumption. Even with virtualization, systems are not power efficient unless utilization can be maintained at the 70% level or higher (Fig. 3). So, are there solutions that we can apply for low-utilization states?

System Solutions

Performance and bandwidth needs drive up the complexity of hardware used in datacenters. Multicore machines increase efficiency of threads/watt and reduce the cost per unit of performance. These designs demand very-high-bandwidth coherency links between sockets. Coherency is required to maintain cache values and consistent data between processors. These designs also require suitable bandwidth in the I/O subsystem to feed the inputs and outputs of the system (Ethernet, storage, etc.).

The main sources of power consumption within a server are the processor cores, memories, disk drives, and I/O and networking. Various power-reduction techniques have been developed over the years, some of which hold more promise than others.[11]

Lowering power while the CPU is active through techniques like dynamic voltage and frequency scaling (DVFS) reduces power consumption at some performance cost. As process technology evolves and the gap between circuit nominal voltages and threshold voltages shrinks, the advantages of DVFS will shrink as well. Deep scaling impacts performance significantly.

CPU clock gating works well within the core, but shared caches and on-chip memory controllers typically remain active as long as any core in the system is active for coherency reasons. Given the nature of workloads in the datacenter, full system idle rarely occurs, so optimizing for complete system idle state offers little practical benefit from a power savings perspective (Fig. 4).

Barroso and Hölzle document power efficiency over the activity range for various subsystems in a server. CPUs have a dynamic range greater than 3x. In other words, CPU power varies by more than a factor of three over the activity range, making it fairly proportional to usage. By comparison, the dynamic range for memory is on the order of 2x and is even lower for storage and networking (1.2x to 1.3x).
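
To see what these ranges imply, the sketch below converts a dynamic range into the share of peak power a subsystem still draws when idle. It assumes that dynamic range means the ratio of peak power to idle power, which is an interpretation of the figures above rather than a definition taken from the study.

```python
def idle_power_share(dynamic_range):
    """Idle power as a fraction of peak, taking dynamic range = peak / idle."""
    return 1.0 / dynamic_range


# Dynamic ranges quoted in the text; the CPU figure is a lower bound.
ranges = {
    "CPU": 3.0,
    "memory": 2.0,
    "storage/networking (high end)": 1.3,
    "storage/networking (low end)": 1.2,
}
for name, r in ranges.items():
    print(f"{name}: idle draw is about {idle_power_share(r):.0%} of peak")
```

Read this way, storage and networking subsystems draw roughly three-quarters or more of their peak power even when idle, which is why they are attractive targets for low-power idle states.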

Opportunities exist in the memory and I/O subsystem to leverage active low-power techniques to reduce power consumption. Memory techniques like self-refresh assist in improving memory energy efficiency as well. These techniques allow DRAM refreshes to happen while the memory bus clock, phase-locked loop (PLL), and DRAM interface circuitry are disabled, reducing power consumption by an order of magnitude.

Power consumption from interface links such as those described above is becoming a major contributor to system power in both idle and peak usage modes. Interface IP consists of complex analog circuits and has very restrictive protocol requirements. Interface protocols are evolving to improve power efficiency and take advantage of low-usage or no-usage cycles.

Among the interface protocols, PCIe is a ubiquitous technology used for storage, graphics, networking, and other connectivity applications. The PCI-SIG has actively focused on platform power-reduction enhancements to the specification. These techniques line up with the energy-proportionality concept: to reduce power consumption in response to lower utilization levels.

PCIe ECNs Save Power

The PCI-SIG has updated the PCIe spec with engineering change notices (ECNs) that help reduce system power consumption. These advances help increase the dynamic range for power consumption on PCIe devices based on activity and utilization, improving system energy proportionality.

Latency tolerance reporting (LTR) allows a host to manage interrupt service by scheduling tasks intelligently, maximizing the time it stays in a low-power mode while still servicing each device within that device’s tolerance for service latency.

Platform power management (PM) policies guesstimate when devices are idle, with approximations from inactivity timers. Incorrect estimation of idle windows can cause performance issues or, in extreme cases, hardware failures. As a result, PM settings often result in suboptimal power savings. In the worst case, PM is sometimes completely disabled to guarantee correct functionality.

With LTR, the endpoint sends a message to the root complex indicating its required service latency. The message encompasses values for both snooped and non-snooped transactions. Multi-function devices and switches coalesce LTR messages and send them on to the root port.

The ECN also allows endpoints to change their latency tolerance when service requirements change, for example, when sustained burst transfers need to be maintained. In an LTR-enabled system, the endpoints provide actual service intervals to the root complex. The platform PM software can use the reported activity level to gate entry into low-power modes. LTR enables dynamic power versus performance tradeoffs with the low overhead cost of an LTR message.
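
The way platform PM software might use these reports can be sketched as follows. The state table, its latency and power numbers, and the function names are hypothetical and chosen only for illustration; they are not taken from the PCIe specification or any real platform. The sketch assumes the platform honors the strictest tolerance reported by any device.

```python
# Hypothetical platform states: (name, exit latency in microseconds, relative power).
PLATFORM_STATES = [
    ("active", 0, 1.00),
    ("shallow-idle", 10, 0.60),
    ("deep-idle", 100, 0.25),
    ("deepest-idle", 1000, 0.05),
]


def effective_tolerance_us(reported_us):
    """The platform must honor the smallest tolerance any device reports."""
    return min(reported_us)


def deepest_allowed_state(reported_us):
    """Pick the lowest-power state whose exit latency fits the reported tolerance."""
    budget = effective_tolerance_us(reported_us)
    allowed = [s for s in PLATFORM_STATES if s[1] <= budget]
    return min(allowed, key=lambda s: s[2])


# Three devices report tolerances; the strictest one allows 250 us.
print(deepest_allowed_state([5000, 250, 800])[0])
# One device starts a burst transfer and tightens its tolerance to 50 us.
print(deepest_allowed_state([5000, 50])[0])
```

When a device tightens its tolerance, the platform falls back to a shallower state instead of relying on an inactivity timer to guess the idle window.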

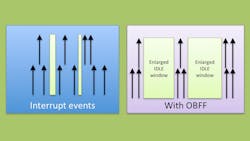

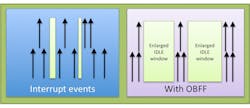

Optimized buffer flush/fill (OBFF) allows the host to share system-state information with devices so that they can schedule their activity to maximize the time the system spends in low-power states. In a typical platform, PCIe devices in the system do not know the power state of central resources. CPU, root complex, and memory components cannot be optimally managed because device interrupts are asynchronous, fragmenting the idle window.

The premise for OBFF is that devices could optimize request patterns if they knew when they were allowed to interrupt the central system (Fig. 5). This would enable the system to stay in a lower-power state longer by expanding the idle window. The OBFF mechanism broadcasts power management hints to devices. OBFF can be implemented with expanded meanings of the WAKE# signal or with a message.
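
The effect of aligning device activity can be illustrated with a toy model. It assumes the platform opens a service window at a fixed period and that each device can buffer its requests until the next window; both are simplifications, since real OBFF hints are driven by platform state, not a fixed timer. The function name and request times are made up for the example.

```python
def count_wakeups(request_times, window_period=None):
    """Count distinct platform wake-ups needed to service the requests.

    Without a window_period, every request interrupts the platform on arrival.
    With one, requests are buffered and flushed at the next window boundary,
    so requests that fall in the same window share a single wake-up.
    """
    if window_period is None:
        return len(set(request_times))
    # Ceiling division maps each request to the window in which it is flushed.
    windows = {-(-t // window_period) for t in request_times}
    return len(windows)


requests_ms = [1, 3, 4, 9, 12, 13, 18, 27, 28, 29]
print(count_wakeups(requests_ms))                    # 10
print(count_wakeups(requests_ms, window_period=10))  # 3
```

Fewer wake-ups mean longer uninterrupted idle periods, which is what lets the central resources stay in a lower-power state.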

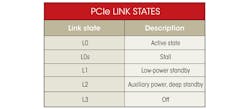

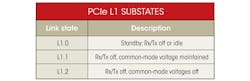

The PCIe protocol defines a number of link power states (Table 1). Driven by market and regulatory needs, the PCI-SIG proposed an ECN to lower power consumption in the L1 state. L1, the low-power state used most often today, consumes power on the order of milliwatts. The new ECN, “L1 Power Mode Substates with CLKREQ” (PCI-SIG, 2012), redefines the L1 state as L1.0 and includes two substates defined as L1.1 and L1.2 (Table 2).

The addition of these states allows the standby state to reduce power consumption. IP providers have announced implementation of these states in PCIe controllers and physical layers (PHYs), allowing the standby power consumption to drop by two orders of magnitude to low microwatts while providing compelling transition times between active and idle states.

The two new substates define lower-power states that realize power savings through disabling circuitry not required by the protocol in these substates. L1.1 and L1.2 do not require detection of electrical idle, and CLKREQ# controls them. L1.2 reduces power further by turning off link common-mode voltages.

Exit latencies from these new low-power modes are critical because system performance can suffer from long latencies. Further, there may be functionality issues if the timers of the link training and status state machine (LTSSM) are not honored correctly. PHY designs are being pushed to the limit to reduce exit latency while providing low current consumption simultaneously.

Designing IP For Low Power

Applying techniques that provide coverage for process, voltage, and temperature variation can reduce active-mode PCIe PHY power. Without these techniques, PHYs must be designed with greater overhead that increases power consumption significantly. Through the use of clock gating and power islands, leakage current also is significantly reduced, optimizing static power consumption.

Entering deep low-power states has a deleterious impact on exit times from these states. Using superior PLL design techniques for fast lock times can reduce exit latency significantly, improving the resumption time and user experience.

Cadence has optimized its PCIe controller and PHY to support these new L1 power-saving states. The Cadence implementation of these low-power states is available in x1 to x16 configurations, allowing for all applications that use these devices to benefit from power savings. The 28HPM implementation of the Cadence controller is first to market with a wire-bond PHY option. It also is available in flip-chip designs.

Conclusion

Datacenter growth will continue in the foreseeable future with negative impacts for energy consumption unless we find solutions that allow us to operate systems more efficiently. In absolute terms, engineering design continues to find low-power methods across the spectrum for semiconductor and system implementations. In relative terms, energy proportionality design goals require designs to optimize their usage so idle and low-utilization states are more efficient.

Virtualization enables systems to increase utilization and operate at more efficient operating points. However, virtualization is not a panacea as most systems continue to operate in the sub-optimal low-utilization region of the power-performance spectrum. Protocol enhancements such as the PCI-SIG’s L1 Power Mode Substates with CLKREQ ECN are an example of making low-utilization and idle states more energy efficient.

Finally, such protocol advances can be effective only when chip designs apply innovative techniques to optimize area and power while improving performance. Commercial manifestations of the PCIe PHY, such as Cadence’s low-power IP, make this vision a reality.

References

1. Cisco Systems, “The Zettabyte Era—Trends and Analysis,” May 29, 2013.

2. James, J., “How much data is created every minute?” June 22, 2012.

3. Cisco Systems, “The Zettabyte Era—Trends and Analysis,” May 29, 2013.

4. Cisco Systems, “Cisco Global Cloud Index: Forecast and Methodology, 2011–2016,” October 2012.

5. Bay Area Air Quality Management District, “Toxic Air Contaminant Control Program Annual Report,” July 31, 2012.

6. Glanz, J., “The Cloud Factories: Power, Pollution, and the Internet,” The New York Times, September 22, 2012.

7. Kay, R., “Taming Datacenter Power Usage,” Endpoint Technologies Associates Inc., Forbes, October 18, 2012.

8. Rocky Mountain Institute of Technology, “Designing Radically Efficient and Profitable Datacenters,” August 7, 2008.

9. Hammond, T., “Toolkit: Calculate datacenter server power usage,” ZDNet, April 8, 2013.

10. Barroso, L.A., & Hölzle, U., The Datacenter as a Computer: An Introduction to the Design of Warehouse-Scale Machines, M.D. Hill, Ed., Madison, Wis., Morgan & Claypool, 2009.

11. Meisner, D., Sadler, C.M., Barroso, L.A., Weber, W.D., & Wenisch, T.F., “PowerNap: Eliminating Server Idle Power,” ISCA 2011, San Jose, ACM.

Arif Khan is the product marketing director at Cadence Design Systems. He has more than 15 years of design, management, and marketing experience in the semiconductor industry. He has a bachelor’s degree in computer engineering from the University of Bombay, a master’s degree in computer science from the State University of New York, and an MBA from the Wharton School of the University of Pennsylvania.

Osman Javed is a senior manager for product marketing SoC realization at Cadence Design Systems. He received his master’s degree in electrical engineering from the University of Southern California.
