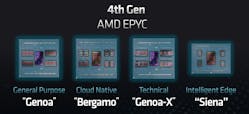

AMD has been busy expanding its fourth generation of EPYC server processors, built on the Zen 4 architecture, to cover virtually every workload in data centers, ranging from general-purpose and high-performance computing to the cloud.

The Santa Clara, Calif.-based company is breaking new ground with a series of power-efficient server CPUs specifically designed for telco, networking, and other systems that operate at the intelligent edge—on factory floors or under cellular base stations, where they’re exposed to the elements and other harsh conditions. These environments are a world apart from cloud data centers and other vast warehouses of servers, where power is widely available and thermal management is less challenging.

The new series of server processors, previously code-named Siena, trades off the higher performance of AMD’s general-purpose Genoa CPU in favor of optimizing power efficiency, die area, and overall cost for use outside the data center. AMD said the EPYC 8004 series, the fourth and final member of its Zen 4 family of server processors, comes with eight to 64 CPU cores based on a physically more compact and energy-efficient variant of its Zen 4 core called Zen 4c, which is also at the heart of its Bergamo processor.

The tradeoffs pay dividends in power efficiency and heat dissipation. According to the company, Siena consumes a fraction of the power of its other server chips, with a power envelope that tops out at 225 W.

As a result, the U.S. semiconductor giant said Siena meets the needs of telcos and other industries that are buying into the concept of edge computing. Here, the preference is to process data in servers and IoT gateways closer to where it’s created instead of sending it all directly to the cloud to be processed by traditional server processors, explained Lynn Comp, VP of server product and technology marketing at AMD.

Engineered for the “Intelligent Edge”

Edge computing is the concept of processing data as close as possible to the device that recorded it. Processors or other hardware are placed physically close to the spot where the data is produced.

Because edge computing avoids the need to move massive amounts of data across a network, it’s critical in any situation that requires real-time processing, whether running the control system on a factory floor or processing vast amounts of video used to manage inventory in a warehouse or store. In these cases, relaying the data to distant cloud data centers can be impractical due to the latency and cost penalties.

Unlike traditional embedded systems, the intelligent edge runs on small groups of servers, typically ranging from one to 10 boxes, rather than the full racks found in cloud data centers. In general, these servers also require more computing power than even high-end embedded processors from the likes of Infineon Technologies, NVIDIA, NXP Semiconductors, Qualcomm, and AMD itself can bring to the table.

However, much like embedded systems, these servers are placed in thermally challenging locations where the availability of power and space is limited or where they have to handle harsh environmental conditions.

For its part, telecom equipment leader Ericsson plans to use Siena to support its cloud RAN deployments, in which the software that runs the radio access network (RAN) is split out from its macro base stations.

Dan McNamara, senior vice president of AMD’s server business, insists that the EPYC 8004 is set to “extend AMD leadership in single-socket platforms by offering excellent CPU energy efficiency in a package tuned to meet the needs of space- and power-constrained infrastructure.”

Inside Siena: AMD’s EPYC for the Edge

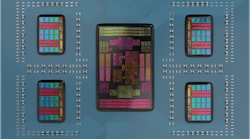

Today, many of the most advanced processors on the market have morphed from being single slabs of silicon to a collection of smaller chiplets that are packaged together to mimic a single monolithic CPU.

One of the advantages of adopting chiplets is that it enables the building blocks of the CPU itself to be manufactured using the process technology that suits each piece best. For AMD, which uses chiplets throughout its Zen 4 family of server chips, the approach allows the mixing and matching of the number of chiplets per CPU to address multiple facets of the data center and different workloads within them.

Siena uses the same chiplet-based architecture as the other server processors in the Zen 4 generation, with the compute chiplets surrounding a central IO die that takes care of the connectivity in the CPU.

The 5-nm server processor leverages AMD's Zen 4c core, which also acts as the backbone of the recently released Bergamo CPU custom-designed for cloud data centers and other huge installations of servers. The Zen 4c cores are based on the same microarchitecture as the base Zen 4 cores crammed in Genoa, or more formally, the EPYC 9004 series. As a result, they integrate all of the same features, including the latest AVX-512 extensions.

But they’re designed to be more physically compact, occupying a 35% smaller area, and more power-efficient than the base Zen 4, where the company was focused on increasing the performance per core. The improvements to the physical implementation of the core and cache made it possible to integrate twice as many CPU cores, up to 16, per compute tile, also called a core complex die (CCD). Bergamo uses up to eight of these more tightly bundled compute dies for a total of 128 Zen 4c cores.

Siena is more or less a miniature Bergamo. The new server processor can accommodate half as many of these compute tiles in the smaller form factor of AMD’s new SP6 socket that measures 58.5 × 75.4 mm.

When all of the chiplets in the package are fully stocked, the Siena server processor can be configured with up to 64 cores and 128 threads, compared with the 96 Zen 4 cores in AMD’s top-of-the-line Genoa CPU.

Each core has 1 MB of L2 cache, and the 16 cores in each compute chiplet are arranged into a pair of eight-core clusters, each sharing 16 MB of L3 cache, for a total of 32 MB of L3 per chiplet.
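To make the arithmetic behind these figures explicit, here is a minimal Python sketch that derives core, thread, and cache totals from the per-chiplet numbers quoted above. The `ZenPackage` class and its field names are hypothetical, introduced only for illustration. The count of four chiplets for Siena is inferred from the statement that it holds half as many as Bergamo's eight, and two threads per core is inferred from the 64-core, 128-thread figure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ZenPackage:
    """Hypothetical model of a Zen 4c package built from identical chiplets."""
    name: str
    ccds: int                    # compute chiplets in the package
    cores_per_ccd: int = 16      # two eight-core clusters per chiplet
    clusters_per_ccd: int = 2
    l3_per_cluster_mb: int = 16  # L3 shared within each cluster
    l2_per_core_mb: int = 1
    threads_per_core: int = 2    # inferred from 64 cores / 128 threads

    @property
    def cores(self) -> int:
        return self.ccds * self.cores_per_ccd

    @property
    def threads(self) -> int:
        return self.cores * self.threads_per_core

    @property
    def l2_total_mb(self) -> int:
        return self.cores * self.l2_per_core_mb

    @property
    def l3_total_mb(self) -> int:
        return self.ccds * self.clusters_per_ccd * self.l3_per_cluster_mb


siena = ZenPackage("Siena", ccds=4)      # half of Bergamo's chiplet count
bergamo = ZenPackage("Bergamo", ccds=8)

for pkg in (siena, bergamo):
    print(f"{pkg.name}: {pkg.cores} cores, {pkg.threads} threads, "
          f"{pkg.l2_total_mb} MB L2, {pkg.l3_total_mb} MB L3")
```

Under these assumptions, a fully populated Siena package works out to 64 cores with 64 MB of L2 and 128 MB of L3, exactly half of the corresponding Bergamo totals.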

Elevating the Efficiency

Power efficiency is where Siena excels, said Mark Bode, head of server product management at AMD.

Ultimately, the power requirements of the CPUs, which are rated to operate over a wide temperature range from −5 to 85°C, are more akin to those of AMD’s EPYC-class embedded processors than its other server silicon.

The power envelope of the Siena CPU family scales depending on the core count of the server processor, with the thermal design power (TDP) ranging from a minimum of 75 W up to a maximum of 225 W under full load for the 64-core CPU in the family. That delivers significant power savings when compared to the company’s Genoa and Bergamo server chips, which can consume up to 400 W at full power. The smaller power envelope is why Siena works well in situations when power and space are at a premium.

Power efficiency directly influences the total cost of ownership (TCO) of a system. AMD insists that the higher power efficiency of the EPYC 8004 series can save customers “thousands of dollars in energy costs in a five-year period,” while offering better core density and more throughput than Intel’s Xeon. Depending on the part, pricing for the new Siena series ranges from $490 to $5,450 per chip.
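As a rough illustration of how a smaller power envelope turns into energy cost, the sketch below estimates the five-year electricity bill for the gap between a 400-W and a 225-W TDP ceiling. It is not AMD’s methodology and does not attempt to reproduce its savings claim. The electricity price of $0.12 per kWh and the assumption that each CPU runs continuously at its TDP are placeholders, and cooling overhead, platform power, and fleet size are left out entirely.

```python
HOURS_PER_YEAR = 24 * 365  # ignores leap days


def energy_cost_usd(power_w: float, years: float, usd_per_kwh: float,
                    utilization: float = 1.0) -> float:
    """Cost of drawing power_w watts for the given period at a flat tariff."""
    kwh = power_w / 1000 * HOURS_PER_YEAR * years * utilization
    return kwh * usd_per_kwh


SIENA_TDP_W = 225       # top of the Siena range, from the article
GENOA_TDP_W = 400       # upper bound quoted for Genoa and Bergamo
ASSUMED_TARIFF = 0.12   # USD per kWh: an assumption, not an AMD figure

saving = energy_cost_usd(GENOA_TDP_W - SIENA_TDP_W, years=5,
                         usd_per_kwh=ASSUMED_TARIFF)
print(f"Per-CPU saving over five years: ${saving:,.2f}")
```

With these placeholder inputs the difference comes to roughly $920 per CPU. The result scales linearly with the tariff, the utilization, and the number of servers deployed, so the inputs matter far more than the formula.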

The improved power efficiency, heat dissipation, and die area of the Siena CPU come at the expense of clock speeds. The base frequency of the intelligent edge EPYC ranges from 2 to 2.65 GHz, while the boost frequency of the processor spans from 3 to 3.1 GHz. Even though the emphasis is on power efficiency, AMD claims Siena gets the better of Intel’s latest Xeon processors in terms of performance.

Based on the SPECpower benchmark, the company said the 64-core Siena server CPU can deliver up to double the performance per watt at the system level compared to Intel’s leading 52-core Xeon CPU for networking workloads.

DDR5 and PCIe Gen 5 Connectivity Details

Despite the differences with the other chips in the Zen 4 family, Siena features the same 6-nm IO tile that powers the connectivity features, including AMD’s Infinity Fabric, of all its server processors.

The CPU supports up to six channels of DDR5 memory at speeds of up to 4800 MT/s, along with a memory capacity of up to 1.15 TB, compared to the 12 memory channels in the Genoa CPU. While limited to single-socket configurations, Siena also offers 96 lanes of PCIe Gen 5 connectivity with speeds of up to 32 GT/s per lane, compared to 128 lanes in the Genoa and Bergamo chips.
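The channel and lane counts translate into theoretical peak bandwidth as sketched below. The calculation assumes the standard figures for these interfaces rather than anything AMD has published for Siena specifically: a 64-bit (8-byte) data path per DDR5 channel at 4800 million transfers per second, and PCIe 5.0 signaling at 32 GT/s per lane with 128b/130b encoding. The function names are illustrative, and real-world throughput will be lower than these ceilings.

```python
def ddr5_peak_gb_s(channels: int, mt_per_s: int = 4800,
                   bus_bytes: int = 8) -> float:
    """Theoretical peak memory bandwidth in GB/s across all channels."""
    return channels * mt_per_s * 1e6 * bus_bytes / 1e9


def pcie5_peak_gb_s(lanes: int, gt_per_s: float = 32.0,
                    encoding: float = 128 / 130) -> float:
    """Theoretical peak PCIe bandwidth in GB/s, per direction."""
    return lanes * gt_per_s * encoding / 8  # 8 bits per byte


print(f"Siena DDR5 (6 channels):  {ddr5_peak_gb_s(6):.1f} GB/s")    # 230.4
print(f"Genoa DDR5 (12 channels): {ddr5_peak_gb_s(12):.1f} GB/s")   # 460.8
print(f"Siena PCIe 5 (96 lanes):  {pcie5_peak_gb_s(96):.1f} GB/s")  # 378.1
print(f"Genoa PCIe 5 (128 lanes): {pcie5_peak_gb_s(128):.1f} GB/s") # 504.1
```

Halving the memory channels halves the peak memory bandwidth, while the PCIe ceiling drops by only a quarter.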

For more flexibility, up to 48 lanes can be configured for Compute Express Link (CXL) 1.1, an open cache-coherent interconnect for accelerators, processors, and memory that runs on top of the PCIe interface.

While CXL is set to become the dominant CPU interconnect for the data center, it is currently used most widely for memory expansion over PCIe. That enables you to expand the memory footprint of a system as needed.

A dedicated security subsystem with a hardware root-of-trust is also housed on the central IO die.