Open Robotics and AI Software Deliver Novel Apps Faster than Ever

What you’ll learn:

- What models are available to engineers for prototype development using AI? (There are more than you think!)

- In situations where voice control, keyboard input, or touchscreen commands aren’t practical, are hand-signal commands viable if AI can interpret them and deliver them to a robotic motion system?

- Can the modules needed to assemble a working robotic system be easily integrated, or is it a time-consuming, laborious job?

Artificial intelligence (AI) is moving rapidly into many parts of life, from home to industry, thanks to the huge amount of research and development into deep learning that’s been conducted around the world over the past decade.

A less obvious, but equally tangible, benefit of this intense research into AI is the ready availability of models that engineers can use to build and deploy prototypes and early production systems quickly and easily. Coupled with high-performance hardware optimized for rapid prototyping and early production, these models give engineers a way to conceptualize, implement, and test AI and robotics systems faster than ever.

Some of the AI technology comes from areas one might not expect. Diffusion models, for example, were driven by applications in photorealistic rendering. But thanks to the flexibility of many of these models, it’s easy to find novel uses for them, such as providing more computationally efficient but accurate approximations of complex fluid flows. This flexibility makes systems accessible to a much wider range of users and potentially drives systems to be more responsive in certain environments.

Robotic Vision Tackles American Sign Language

An example with direct relevance to embedded control and robotics comes from a competition launched by Google on Kaggle in 2023. The company wanted to find AI models that could reliably translate hand signals captured by cameras into text. To help contestants build their solutions, Google uploaded a dataset of three million characters in the fingerspelling dictionary of American Sign Language (ASL) (see figure).

One of the intentions behind the Kaggle ASL project was to ease interaction with devices such as smartphones, many of which have built-in accelerators that support widely used AI frameworks like TensorFlow Lite and PyTorch. Fingerspelling is just one part of ASL. It uses hand shapes that represent individual letters, and is often utilized to communicate names, addresses, phone numbers, and similar information. Experiments with fingerspelling in ASL have found that it’s often faster than typing keystrokes on a phone or tablet interface.

The same techniques can be employed to signal robotic vehicles in a noisy shop-floor environment. There, voice control often isn’t practical, and operators can’t interact with a keyboard or touchscreen interface because of dirt, dust, and grease contamination.

Hand signals provide a mechanism for controlling these vehicles in a simple and efficient way. A combination of open software and hardware can bring the AI support needed to interpret hand signals made in front of a camera and deliver commands to a robot’s motion systems.

Thanks to projects that include Google’s dataset for ASL, the source material is often readily available. Users have the option to take models derived from these challenges or use the datasets to train models with architectures appropriate to their use cases as long as the materials are provided in the form of free or open-source software.

Tools such as the AMD Vitis Unified Software Platform make it easy to access both the processing performance and software environments that can host this open-source code, which is often written in TensorFlow Lite, PyTorch, or other open AI development environments.

The Vitis platform lets engineers develop C/C++ application code, as well as IP blocks that target AMD’s multiprocessor system-on-chip (MPSoC). The solution can then be deployed using off-the-shelf or custom single-board computers (SBCs), such as the Tria ZUBoard 1CG, which is based on the Zynq UltraScale+ MPSoC.

These high-performance devices combine multicore processors based on the Arm Cortex-A architecture with an array of programmable-logic cells. The resulting hardware not only delivers the performance needed to run robotics applications that need AI support, but it also incorporates programmable logic that’s highly suited to implementing sophisticated motor-control algorithms.

Open-Source Robots via the Robot Operating System (ROS)

Support for robot control is just as accessible now thanks to the creation of the Robot Operating System (ROS). Pioneered by a group at Stanford University, it’s now managed by the Open Source Robotics Foundation (OSRF).

The release of ROS2 added features for real-time motion processing and security, which makes the software a viable choice for industrial control and commercial drone operation. To ease integration for customers, AMD ported the ROS2 code to the PetaLinux operating system that runs on the MPSoC hardware.

With ROS2, developers build robotics applications using easy-to-understand graphs arranged in a publisher-subscriber flow, a design pattern that’s commonly applied to industrial and automotive control systems. In these graphs, providers of data and inputs are treated as publisher nodes that pass information on specific topics to subscriber nodes acting on them.
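The publisher-subscriber flow can be illustrated without any robotics libraries. The following is a minimal sketch in plain Python, not the ROS2 client API: the `TopicBus` class and the topic names are hypothetical, chosen only to show how a publisher hands messages to whichever subscribers registered for a topic.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List


class TopicBus:
    """Toy in-process stand-in for a publish-subscribe graph (hypothetical, not ROS2)."""

    def __init__(self) -> None:
        # Maps each topic name to the callbacks subscribed to it.
        self._subscribers: DefaultDict[str, List[Callable[[str], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[str], None]) -> None:
        """Register a callback that runs for every message on the topic."""
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: str) -> None:
        """Deliver the message to every subscriber of the topic."""
        for callback in self._subscribers[topic]:
            callback(message)


bus = TopicBus()
received: List[str] = []

# A subscriber node that only cares about classified letters.
bus.subscribe("asl/letter", received.append)

bus.publish("asl/letter", "A")            # delivered to the subscriber
bus.publish("camera/frames", "frame-0")   # no subscriber on this topic, so dropped

print(received)
```

The publisher never needs to know who consumes its data, which is what lets modules be swapped in and out of the graph independently.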

The structure allows for the easy integration of the modules needed to assemble a working robotic system. Cameras attached to standard MIPI interfaces deliver frames to nodes running image-processing software such as OpenCV, which can adjust brightness and contrast and perform other enhancement functions. These nodes pass a succession of high-quality frames to an image-classification model. The model then publishes textual outputs that are consumed by the motion-control software employed by the robot.
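A brightness and contrast adjustment of the kind mentioned above is typically a linear transform on each pixel, with the result clamped to the valid range. The sketch below shows the idea in plain Python on a small grayscale frame; the function name and the sample values are illustrative assumptions. In OpenCV, `cv2.convertScaleAbs(frame, alpha=..., beta=...)` performs a comparable scale-and-offset operation.

```python
from typing import List

Frame = List[List[int]]  # grayscale frame as rows of 8-bit pixel values


def adjust_brightness_contrast(frame: Frame, alpha: float = 1.0, beta: float = 0.0) -> Frame:
    """Apply pixel' = alpha * pixel + beta, clamped to 0..255.

    alpha scales contrast and beta shifts brightness (hypothetical helper).
    """

    def clamp(value: float) -> int:
        return max(0, min(255, int(round(value))))

    return [[clamp(alpha * pixel + beta) for pixel in row] for row in frame]


dim_frame = [[0, 100, 200], [50, 60, 70]]
brighter = adjust_brightness_contrast(dim_frame, alpha=1.5, beta=10)
print(brighter)  # [[10, 160, 255], [85, 100, 115]]
```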

In the case of this ASL-based application, fingerspelled letters tell the robot to turn, advance, and stop. Once assembled, compiled, and downloaded to the robot, the application need not be regarded as static.
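As a rough sketch of that last step, the classifier's textual output can be mapped to motion commands with a simple lookup. The article does not say which letters the demonstration uses, so the letter assignments, the command names, and the confidence threshold below are all hypothetical.

```python
from typing import Optional

# Hypothetical letter-to-command table; the actual letters used are not specified.
COMMANDS = {
    "L": "turn_left",
    "R": "turn_right",
    "A": "advance",
    "S": "stop",
}


def letter_to_command(letter: str, confidence: float, threshold: float = 0.8) -> Optional[str]:
    """Return a motion command for a classified letter, or None to ignore it.

    Low-confidence classifications and unmapped letters produce no command,
    so the robot does not act on an uncertain reading.
    """
    if confidence < threshold:
        return None
    return COMMANDS.get(letter.upper())


print(letter_to_command("a", 0.95))  # advance
print(letter_to_command("S", 0.50))  # None (below the confidence threshold)
print(letter_to_command("Z", 0.99))  # None (no command mapped to this letter)
```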

The rapid pace of development in open-source AI and robot-control software provides opportunities for developers to optimize their systems. Tria’s original demonstration of an ASL-controlled robot used a hand-signal classification model based on the VGG-16 architecture and trained on Google’s dataset.

A decade ago, researchers in the Visual Geometry Group at the University of Oxford developed this popular deep convolutional neural network (CNN) to provide good accuracy on a wide range of image-recognition tasks. A key advantage of VGG-16 lies in its stacked blocks of convolutional layers, each followed by a max-pooling layer, so that the feature maps shrink in spatial dimensions while growing in depth. This helps the model learn hierarchical representations of visual features. Such an attribute is suitable for hand-sign recognition, where the model needs to first detect a valid hand shape in the camera frame and from that determine the arrangement of the fingers representing an ASL character.
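The shrinking-but-deepening pattern is easy to trace with a few lines of arithmetic. The sketch below assumes the standard published VGG-16 configuration (a 224 × 224 input, five blocks of size-preserving 3 × 3 convolutions, and a 2 × 2 max-pool that halves the width and height after each block). The model Tria trained may use a different input size.

```python
from typing import List, Tuple

# (number of 3x3 conv layers, output channels) for each of the five VGG-16 blocks.
VGG16_BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]


def vgg16_feature_shapes(input_size: int = 224) -> List[Tuple[int, int, int]]:
    """Return (height, width, channels) after the max-pool that ends each block."""
    shapes = []
    size = input_size
    for _num_convs, channels in VGG16_BLOCKS:
        # Padded 3x3 convolutions keep the spatial size; the 2x2 pool halves it.
        size //= 2
        shapes.append((size, size, channels))
    return shapes


for shape in vgg16_feature_shapes():
    print(shape)
# (112, 112, 64), (56, 56, 128), (28, 28, 256), (14, 14, 512), (7, 7, 512)
```

The 13 convolutional layers in these blocks, plus three fully connected layers at the end, give the network the 16 weight layers in its name.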

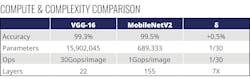

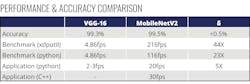

Though VGG-16 offers good performance and demonstrates the viability of the prototype, any embedded platform benefits from higher efficiency. Tria’s developers obtained this by swapping VGG-16 for the more recent MobileNet V2 classifier. It’s an architecturally more complex stack of over 100 convolutional, pooling, and so-called bottleneck layers (Table 1).

Yet, MobileNet V2 achieves higher accuracy on the ASL classification task than VGG-16 with far fewer computational operations per image (Table 2). This allows the model to run on the Tria ZUBoard 1CG at more than 20 frames per second compared to just two or three with VGG-16.
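One reason for the lower operation count is that MobileNet V2's bottleneck layers rely on depthwise-separable convolutions, which split a standard convolution into a per-channel spatial filter and a 1 × 1 channel-mixing step. The sketch below compares multiply-accumulate (MAC) counts for a single layer. The layer dimensions are illustrative assumptions, not figures taken from the article's tables.

```python
def standard_conv_macs(h: int, w: int, c_in: int, c_out: int, k: int = 3) -> int:
    """MACs for a standard k x k convolution producing an h x w x c_out output."""
    return h * w * c_in * c_out * k * k


def separable_conv_macs(h: int, w: int, c_in: int, c_out: int, k: int = 3) -> int:
    """MACs for a depthwise k x k convolution followed by a 1 x 1 pointwise one."""
    depthwise = h * w * c_in * k * k   # one k x k filter per input channel
    pointwise = h * w * c_in * c_out   # 1 x 1 convolution mixes the channels
    return depthwise + pointwise


# Illustrative layer: 56 x 56 feature map, 128 channels in and out.
standard = standard_conv_macs(56, 56, 128, 128)
separable = separable_conv_macs(56, 56, 128, 128)

print(f"standard:  {standard:,} MACs")
print(f"separable: {separable:,} MACs")
print(f"reduction: {standard / separable:.1f}x")
```

For this one layer the separable form needs roughly an eighth of the arithmetic. Savings of that kind across a whole network help make higher frame rates feasible on an embedded board.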

The example shows how powerful the combination of open hardware, software, and AI can be when implemented in novel system concepts. By choosing hardware that has a high degree of compatibility with the development environments now available for robotic and AI applications, developers can gain a significant advantage in time-to-market for groundbreaking ideas.

About the Author

Jim Beneke

Vice President, Tria Technologies

Jim Beneke is a vice president for Tria Technologies, the new name for Avnet’s business dealing in embedded compute boards, systems, and associated design and manufacturing services. He has close to 40 years of experience in technology management, business and strategy development, technical marketing, research and development, and design engineering.

During his 30 years at Avnet, he has held executive positions as vice president of products and emerging technology and vice president of global technical marketing, where he successfully led efforts to increase demand for Avnet’s offerings. Beneke earned a BSEE from Bucknell University and an MSEE from Villanova University. He also serves on the board of directors and as a technical advisor for Otava.