What you’ll learn:

- What is Regenerative AI?

- Introducing Super-Regenerative AI.

- Thyme: A New AI Company.

- Why we have to put an explicit explanation about an April 1st article so that the search engines don’t ding us for something humorous.

The world of AI has seen phenomenal progress over a few short years, with generative AI yielding to Regenerative AI, which then paved the path toward the Recursive Regenerative AI disclosed in Electronic Design just one year ago. Today researchers have revealed the next step on this path. They call it “Super-Regenerative AI.”

Like its namesake—the super-regenerative radio receiver—super-regenerative AI harnesses an abundance of internal feedback to provide highly refined results to simple queries. But we’re getting ahead of ourselves. Let’s first review how computing reached this point.

For this, we’ll consult noted Professor Justin Thyme of the Roswell Science Institute of New Mexico. Thyme will tell us the events that have led to Super-Regenerative AI.

Generative AI

Gartner analyst Bern Elliot was recently quoted [1] saying that generative AI is “like Swiss cheese: you know it has holes, you just don't know where they are until you cut it.” He was referring to generative AI’s penchant to “hallucinate,” in his words. Thyme uses the word “create” as an alternative to Elliot’s “hallucinate,” indicating a more positive view of what AI has to offer.

There are indeed problems when a large language model (LLM) cannot determine the relative validity of conflicting inputs. It’s something like individuals who “did their own research” by surfing the web and piecing together information from various sites, some legitimate and others less so.

Prof. Thyme says that he prefers to view generative AI as something like a party game often called “Telephone” [2]. In this game, sketched in Figure 1, a number of guests are lined up. The first member of the line is told a single sentence, perhaps a quote or a little-known truth, by a whisper in the ear. That first member whispers it to the best of their ability to the second, who whispers it to the third, and so on. When the guest at the end of the line has heard the sentence, that person is asked to share it out loud with all of the guests. The result is often confused to the point of hilarity.

Prof. Thyme explains that this phenomenon results from channel distortion introduced when the listener didn’t fully hear the speaker (represented by the ragged lines in the channel), and perhaps through misinterpretations caused by the listener’s bias. This occurs with each member of the line, increasing the distortion along the way.
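The compounding of distortion along the line can be illustrated with a toy simulation. This is only a sketch under stated assumptions, not anything attributed to Prof. Thyme: the function names, the per-character `error_rate`, and the use of `?` to mark a garbled character are all hypothetical choices made for illustration, and listener bias is not modeled at all.

```python
import random

def whisper(message, error_rate, rng):
    """One noisy hop: each character is garbled to '?' with probability error_rate."""
    return "".join("?" if rng.random() < error_rate else ch for ch in message)

def telephone(message, hops, error_rate, seed=0):
    """Return what each guest heard, starting with the original sentence."""
    rng = random.Random(seed)  # seeded so repeated runs give the same line of guests
    heard = [message]
    for _ in range(hops):
        # Each guest can only repeat what they heard, so errors never heal.
        heard.append(whisper(heard[-1], error_rate, rng))
    return heard

def intact(original, received):
    """Count the characters that survived unchanged."""
    return sum(a == b for a, b in zip(original, received))

if __name__ == "__main__":
    sentence = "the quick brown fox jumps over the lazy dog"
    for guest, text in enumerate(telephone(sentence, hops=8, error_rate=0.1)):
        print(guest, intact(sentence, text), text)
```

Because a garbled character stays garbled in this model, the count of intact characters can only hold steady or fall from one guest to the next, which is the accumulating distortion the professor describes.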

LLMs can be viewed in the same way: Computers gather information from sources of varying credibility, whose data they may misconstrue, and then feed that data to downstream models, which may also misconstrue the upstream model’s output. This could explain why an LLM’s output needs to be chaperoned by a knowledgeable human: While that output may look good, it might be very wrong.

It's this phenomenon that Prof. Thyme wants to take advantage of.

What is Regenerative AI?

Regenerative and recursive-regenerative AI are natural advances over this basic concept. Consider regenerative AI to resemble folding the Telephone line back into itself to help produce outputs in the absence of inputs, creating a more tightly focused environment. It’s like a rumor that feeds upon itself.

Recursive-regenerative AI takes this process one step further by allowing information (or misinformation) to travel in both directions through the loop, as represented by the double-ended arrows in Figure 2. This doubles the opportunities for distortion and bias to contribute to the information-creating process.

Here the noise and distortion increase with every pass through the loop. The bias pulls new ideas out of the noise and distorted signals to create altogether new “information” with which to feed the next iteration.

This information can rise in importance to spawn new data, while suppressing the importance of any real inputs. Each pass through the loop renders the new information more important than it was in the prior pass, while adding noise and distortion along the way. Chaperoning becomes irrelevant.

This can be viewed as a kind of damped feedback, causing what some refer to as an “Echo Chamber.” Here, sometimes-erroneous ideas become repeated to such an extent that they’re reinforced by many elements of the network, just as they would be in a social setting where the members kept repeating the same rumors until most members mistake them for the truth.

Prof. Thyme reasoned that the damped feedback causes certain findings to resonate more strongly than others, just as a tuned linear circuit with feedback can ring at a particular frequency. For the past two years, he has devoted a phenomenal effort, including an army of graduate assistants, to fitting this phenomenon to the well-established math of tuned circuit design. He now has found a way to predict the “Q Factor” of the system, the same as would be done in a linear circuit.

A system’s Q factor expresses how long it takes for ringing to die down: the higher the Q, the longer the circuit rings [3].
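To put numbers on that statement, here is a minimal sketch based on the standard result for a lightly damped second-order resonator, whose ringing envelope falls off roughly as exp(-pi * n / Q) after n cycles. The function name `cycles_to_decay` and the 1% threshold are illustrative assumptions; none of this comes from Prof. Thyme's own math.

```python
import math

def cycles_to_decay(q, fraction):
    """Cycles needed for the ringing envelope exp(-pi * n / Q) to fall to
    `fraction` of its starting amplitude (lightly damped approximation)."""
    if q <= 0:
        raise ValueError("q must be positive")
    if not 0 < fraction < 1:
        raise ValueError("fraction must be between 0 and 1")
    # Solve exp(-pi * n / q) = fraction for n.
    return -q * math.log(fraction) / math.pi

if __name__ == "__main__":
    for q in (10, 100, 1000):
        print(f"Q = {q}: about {cycles_to_decay(q, 0.01):.0f} cycles to reach 1%")
```

The ring-down time scales linearly with Q in this approximation, so a tenfold increase in Q means the circuit rings for ten times as many cycles.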

It was the good professor’s intent to increase the system’s Q to its highest-possible level. So, he carefully considered ways to further increase the feedback for this kind of network.

Introducing Super-Regenerative AI

Prof. Thyme’s solution was to replicate the very high-Q radio receivers that rose to popularity roughly 100 years ago [4]. These super-regenerative receivers increased the feedback to a level that makes the tuned circuit resonate very strongly, and risk becoming unstable, until the ringing is squelched and forced to begin all over again.

Doing this in an AI network requires adding multiple communication paths, as represented in Figure 3. (The Distortion element has been omitted from the arrows in the center for clarity’s sake, but the distortion remains a key factor, nonetheless.) This adds to the number of inputs any node will consider. In human terms, it means that certain pieces of information will have more credibility because “I heard it from more than one source.”

The noise that distorts the signals increases because of all the activity, much as a conversation in a crowded room becomes more difficult when people have to talk over the crowd noise.

With super-regenerative AI, all network elements are tied to each other one-on-one, allowing for a faster and freer flow of information, while still supporting the reverse data flow of recursive-regenerative AI.
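How quickly those one-on-one ties multiply can be sketched with a simple link count. The mapping used here is an assumption made for illustration: a chain stands in for the Telephone line, a ring for the regenerative loop, and a full mesh for the super-regenerative network. The function names are hypothetical as well.

```python
from itertools import combinations

def chain_links(n):
    """Links in a Telephone-style line of n elements."""
    return max(n - 1, 0)

def ring_links(n):
    """Links when the line is folded back on itself (needs 3+ elements to form a loop)."""
    return n if n >= 3 else chain_links(n)

def full_mesh(n):
    """Every one-on-one pairing among n elements, as (a, b) tuples."""
    return list(combinations(range(n), 2))

def full_mesh_links(n):
    """Closed-form count of the pairings: n * (n - 1) / 2."""
    return n * (n - 1) // 2

if __name__ == "__main__":
    for n in (4, 8, 16):
        print(n, chain_links(n), ring_links(n), full_mesh_links(n))
```

The chain and ring grow linearly with the number of elements, while the full mesh grows roughly with its square, so each element hears from many more neighbors as the network gets larger.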

As a result, two elements can be communicating about a phenomenon, creating a new way of interpreting the data, while at the same time, another two elements can be generating a different interpretation. When these two different interpretations meet elsewhere, their differing aspects are added to the database and then further refined by the receiving element.

This is the most advanced AI algorithm to date. Super-regenerative AI is a much more rapid means of creating new information than was available through recursive-regenerative AI. Like super-regenerative radio receivers, super-regenerative AI consumes less power and fewer resources than an old-school generative AI system with an equally tight focus.

Super-regenerative AI should be a boon for the creative arts, electronic and other designs, code generation, architecture, shipbuilding, and many other fields. On the darker side, it’s also capable of creating numerous new conspiracy theories because of the large potential for generating new data with minimal external data. This lack of reliance on the outside world causes the training system to distrust external sources, with the highest trust being given to the internally generated information.

Curation, which generative AI requires, is no longer needed because the system distrusts the human chaperones who would normally vet the output data, and will, at times, create distractions to send these chaperones on a wild goose chase. Thyme says that he can’t talk about this phenomenon, since the issue will be explained at a later date for maximum impact. When that later date comes, Thyme will tell.

Thyme: A New AI Company

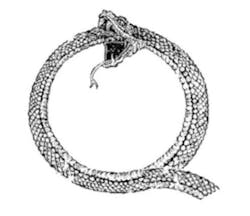

Thyme plans to spin this effort out of the Institute to create a new company that will develop this approach into a very efficient new type of LLM. Since it feeds upon itself, Thyme has selected the Ouroboros, the image of a snake eating its own tail, as the basis for a logo for the firm. Its name will be the combination of “Q Factor” and Ouroboros: Quroboros, which simply involves adding a tail to the first letter of the term Ouroboros. To this end, he’s also added a tail to the Ouroboros to finish out the logo (Figure 4).

There will be investment opportunities for the new firm once it has introduced its first prototype, which is scheduled to occur exactly one year from today on April 1, 2026. The professor plans to move ahead as quickly as possible, as is his character trait, because Thyme waits for no man.

References

1. The Register: We're in the brute force phase of AI – once it ends, demand for GPUs will too.

2. AdvantEdge Training & Consulting, The Telephone Game – Emotional Intelligence.

3. ROHM, Resonant Circuits: Resonant Frequency and Q Factor.

4. S. Sanchez, Texas A&M University, Super-Regenerative Receiver.