Could Sagence AI’s Analog Inferencing for GenAI be NVIDIA’s Downfall?

What you’ll learn:

- NVIDIA’s (NASDAQ:NVDA) days may be numbered because Sagence AI’s analog-computer-based GenAI inferencing is superior in cost and power consumption.

- Nuclear power may see a resurgence as utilities refuse to provide grid power to data centers.

- A small 5-MWt (1.5-MWe) nuclear power station in a shipping container will be available within the next few years from NANO Nuclear Energy (NASDAQ:NNE).

Poor NVIDIA...

Analog Computing rules—

Less power, size, cost

About a month ago, I wrote about how Silicon Valley venture capital thrives on the hype cycle: how the rush to get in early on new technologies is readily fueled by hubris and the irrational exuberance of investors, and how Generative AI is one of those technologies that seems to promise significantly more than it can deliver.

AI data centers now require hundreds of megawatts of power apiece, and demand is growing so fast that most power companies are refusing to supply that much capacity from the public grid. As a result, the likes of Amazon, Google, Microsoft, and others are scrambling to secure unconventional “green” power sources privately.

Tech Giants Go Nuclear

Microsoft, for example, has announced plans to site a data center in Indiana, where it was welcomed by a utility that derives 59% of its generating capacity from coal, despite Microsoft’s well-publicized clean-energy goals. The company has also secured 750 MW of nuclear generating capacity from one of the mothballed reactors at the infamous Three Mile Island site, shown while it was still active in Figure 1. Utility-scale reactors don’t produce CO2, but their highly radioactive fuel still becomes someone else’s problem when corporations treat the environment like an open and free sewer.

Google has agreed to purchase energy produced by small modular reactors (“SMRs”) under a deal that will support the first commercial deployment of Kairos Power's reactor by 2030 and a fleet totaling 500 MW of capacity by 2035.

Amazon, enlisting a consortium of state public utilities, will enable the development of four advanced SMRs. The reactors will be constructed, owned, and operated by Energy Northwest. They’re expected to generate roughly 320 MW of capacity for the first phase of the project, with the option to increase it to 960 MW total.

Amazon is also investing in X-energy, a leading developer of next-generation SMRs and fuel; X-energy’s advanced nuclear reactor design will be used in the Energy Northwest project, too. The investment includes manufacturing capacity for SMR equipment to support more than 5 GW (!!!) of new nuclear energy projects using X-energy’s technology. There’s always the promise that these crazy power numbers will somehow benefit the public (when, in reality, the tech promises the exec fantasy of taking your job from you).

At the heart of these data centers are thousands of processors, sourced from NVIDIA or, in some cases, designed in-house by operators like Google. NVIDIA’s next-generation Blackwell GPU, the B200, recently posted double the performance of today’s workhorse NVIDIA chip, the Hopper H100, on some tests, but it draws up to 1,200 W, 500 W more than the H100. Training GenAI on datasets is where the atrocious amounts of power are consumed (and dissipated as heat).
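A quick back-of-the-envelope check helps put those figures together. The sketch below assumes both chips run at their maximum rated power, takes the H100’s 700 W as implied by the 1,200 W and 500 W figures above, and applies the 2X speedup that was reported only on some tests. It’s arithmetic on quoted numbers, not a benchmark.

```python
# Back-of-the-envelope performance-per-watt check (assumptions, not measurements).
B200_MAX_W = 1200.0              # quoted maximum power for the B200
H100_MAX_W = B200_MAX_W - 500.0  # "500 W more" than the H100, so 700 W
SPEEDUP = 2.0                    # doubling reported on *some* tests only

# Performance per watt of the B200 relative to the H100.
perf_per_watt_gain = SPEEDUP * H100_MAX_W / B200_MAX_W

# Energy per unit of work scales as the inverse of performance per watt.
energy_per_task_ratio = 1.0 / perf_per_watt_gain

print(f"H100 power: {H100_MAX_W:.0f} W")
print(f"B200 perf/W vs. H100: {perf_per_watt_gain:.2f}x")
print(f"B200 energy per task vs. H100: {energy_per_task_ratio:.2f}x")
```

Under those assumptions, doubling performance buys only about 17% more work per watt, so a faster chip by itself does little to shrink a data center’s power appetite.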

There are tricks, however, for inferencing once training has completed: floating-point precision can be traded for integer math without severely degrading the result. Smaller integer values pack more weights into the same memory and reduce power consumption. Despite these desperate measures by the 1s-and-0s “bitbangers,” some new kids in town seem likely to topple Wall Street’s AI darling from its “to infinity and beyond” stock valuation, and they’re doing it with the lost art of the ancients.
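To make the floating-point-to-integer trade concrete, here is a minimal sketch of symmetric 8-bit quantization, one common scheme (assumed here; the text doesn’t name a specific one). Each float vector gets a single scale factor, values are rounded to int8, the dot product runs in pure integer math, and the scales are applied once at the end. An int8 weight takes one byte versus four for float32, which is where the memory-density gain comes from. The weights and inputs are made-up numbers.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # float value of one integer step
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def int8_dot(q_a, scale_a, q_b, scale_b):
    """Integer multiply-accumulate, rescaled to a float only at the end."""
    acc = sum(a * b for a, b in zip(q_a, q_b))  # hardware would use an int32 accumulator
    return acc * scale_a * scale_b


# Hypothetical trained weights and new inputs (illustrative values only).
weights = [0.82, -1.37, 0.05, 2.10, -0.44]
inputs = [1.0, 0.5, -2.0, 0.25, 3.0]

exact = sum(w * x for w, x in zip(weights, inputs))
q_w, s_w = quantize_int8(weights)
q_x, s_x = quantize_int8(inputs)
approx = int8_dot(q_w, s_w, q_x, s_x)

print(f"float result: {exact:.4f}")
print(f"int8 result:  {approx:.4f}")
```

With these particular numbers, the integer result lands within about 1% of the floating-point one; how much error a real model tolerates depends on the model and the quantization scheme.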

Analog Computing Reawakens

There was a method to my madness, a grand scheme that I had in mind, in (re)introducing everyone to analog computing here on Electronic Design a few months ago. The full series ran the gamut from the K2-W vacuum-tube op amp that formed the basic building block of early analog computers, through to a modern-day “laptop” analog computer that was child’s play to program.

We saw that we could use variable resistors (potentiometers) to “multiply” the applied voltage by a static coefficient, entered as the pot’s wiper position, yielding the product in zero time. We also had adders (“summers”), made of several resistors converging on a single inverting op-amp input, that sum their inputs in zero time (OK, admittedly the op amp does have delay, but if you think about it, the summing node at its input does its job in almost zero time).

We could take several coefficients (weights), multiply each by its applied voltage, and sum the results in very, very simple, low-power circuits. The only limitations were the linearity and noise floor of the devices in the analog circuit.
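Here is an idealized model of those two building blocks, a sketch assuming perfect linearity, no noise, and no op-amp delay (exactly the limitations just mentioned). The pot scales its input by a coefficient between 0 and 1, and the inverting summer adds the input currents Vi/Ri at its virtual-ground node and converts them back to a voltage through the feedback resistor, so Vout = -Rf × Σ(Vi/Ri). The component values and function names are hypothetical.

```python
def pot_multiply(v_in, k):
    """Ideal potentiometer as a coefficient: wiper position k (0..1) scales v_in."""
    if not 0.0 <= k <= 1.0:
        raise ValueError("a passive pot can only scale by 0..1")
    return k * v_in


def inverting_summer(v_inputs, r_inputs, r_feedback):
    """Ideal inverting op-amp summer: Vout = -Rf * sum(Vi / Ri).

    The inverting input sits at virtual ground, so each input current Vi/Ri is
    independent of the others; the currents add at that node and all flow
    through the feedback resistor.
    """
    i_sum = sum(v / r for v, r in zip(v_inputs, r_inputs))
    return -r_feedback * i_sum


# Hypothetical example: three input voltages, each scaled by its own pot setting.
voltages = [2.0, -1.0, 0.5]
coefficients = [0.5, 0.25, 0.8]
weighted = [pot_multiply(v, k) for v, k in zip(voltages, coefficients)]

# Equal 10-kohm input and feedback resistors give unity gain per input.
v_out = inverting_summer(weighted, [10e3, 10e3, 10e3], 10e3)
print(f"summer output: {v_out:.3f} V")
```

Note the sign inversion that comes with the inverting topology. Making the input resistors unequal turns each Rf/Ri ratio into a per-input gain as well.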

Let’s take THAT little laptop analog computer that my 8-year-old minion and I played with this past summer, thanks to Anabrid, and apply good old ASIC tricks to its key components. Reliable rumor has it that Anabrid is actually doing THAT, creating a hybrid (digitally controlled) analog computer on a chip. What pieces do we have in ASIC design that enable those analog-computing functions?

AI: Analog Inferencing

Generative AI works by “training” a network on a massive dataset, in which weighted input information is summed to arrive at an output. This training, which determines those weights, is performed infrequently, but it requires the massive amount of compute power described earlier. Once we have the weights, though, we can apply them to any arbitrary inputs by multiplying each input by its trained weight and then summing all of the weighted values.
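Stripped to its arithmetic, that inference step is a set of weighted sums, i.e., a matrix-vector multiply. The minimal sketch below uses made-up weights for a tiny 3-input, 2-output layer; real networks are vastly larger and also apply nonlinear activation functions between layers, which are omitted here.

```python
def dense_layer(weights, inputs):
    """Each output is the sum of every input multiplied by its trained weight."""
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]


# Hypothetical "trained" weights: one row per output, one column per input.
W = [
    [0.5, -1.0, 2.0],
    [1.5, 0.0, -0.5],
]
x = [1.0, 2.0, 3.0]  # new inputs presented at inference time

print(dense_layer(W, x))  # [4.5, 0.0]
```

Each output needs one multiply per input plus the additions; doing all of those at once, in the physics rather than one clock cycle at a time, is the goal of analog inferencing.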

Recall that in the analog computer, we enter coefficients (“weights”) with a variable resistor. A resistor is simply a constant ratio of applied voltage to observed current. Changing that ratio (the slope of the V-I curve) changes the resistance. Ideally, the ratio stays constant, or “linear,” over a wide range of applied voltages.

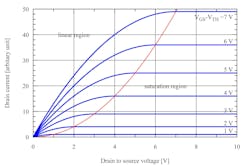

Looking at a MOSFET’s ID vs. VDS characteristic curves (Fig. 2), we can see a series of slopes that result from varying the gate voltage (VGS). Near the origin, where VDS is small (the linear, or triode, region), each curve’s ID/VDS slope changes in proportion to the gate overdrive, VGS minus the threshold voltage...we have a variable resistor.
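A textbook long-channel (square-law) MOSFET model shows why this works. In the triode region, ID = k[(VGS − Vth)VDS − VDS²/2], so for small VDS the ratio ID/VDS is close to k(VGS − Vth), and the channel resistance is roughly 1/[k(VGS − Vth)]. The threshold voltage and k below are hypothetical values, and the model ignores subthreshold conduction, which follows a different (exponential) law and matters for the deep-subthreshold operation discussed later.

```python
def triode_drain_current(v_gs, v_ds, v_th=0.5, k=2e-3):
    """Square-law MOSFET drain current (A) in the triode region.

    v_th (V) and k (A/V^2, i.e. mobility * Cox * W/L) are hypothetical values.
    Subthreshold conduction is ignored: below threshold the model returns 0.
    """
    v_ov = v_gs - v_th  # gate overdrive
    if v_ov <= 0:
        return 0.0
    if v_ds >= v_ov:
        raise ValueError("v_ds too large: device is no longer in the triode region")
    return k * (v_ov * v_ds - v_ds ** 2 / 2)


def channel_resistance(v_gs, v_ds=1e-3, **params):
    """Small-signal channel resistance VDS/ID for a small drain voltage."""
    i_d = triode_drain_current(v_gs, v_ds, **params)
    return float("inf") if i_d == 0.0 else v_ds / i_d  # channel off: open circuit


for v_gs in (0.75, 1.0, 1.5):
    r = channel_resistance(v_gs)
    print(f"VGS = {v_gs:.2f} V -> R ~ {r:,.0f} ohms")
```

Doubling the overdrive (from 0.5 V to 1.0 V here) roughly halves the resistance, so the gate voltage plays the role of the pot’s wiper.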

Just as we set and forget the pot positions in the analog computer, we can do the same with a floating-gate MOS device. A VGS is applied and the connection is then severed, as in a “floating-gate” NVM (non-volatile memory) cell, so the gate holds a “setpoint” that represents a coefficient, or weight, as an analog value, i.e., as a set resistance. Because that VGS is held on a disconnected gate, no power is needed to maintain the weight, which plays exactly the role of the set position of the analog computer’s coefficient potentiometer.

Cool. Now, if we set up an array of these floating-gate “multipliers” and apply the “inputs” to the drain of each floating-gate FET, we can sum all of their weighted values at a summing node: the junction of all device sources in a memory row.

This is the “compute in memory” functionality: each input is multiplied by a weight, this happens for many inputs and weights at once, and the products are summed at the common input node of an op amp (Fig. 3). The op amp’s output, which represents that sum, is then converted by an analog-to-digital converter (ADC) into an integer result for all of the multiply-accumulate math that’s been happening concurrently in the memory core.
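An idealized, single-row simulation ties those pieces together. It assumes each programmed cell behaves as a fixed conductance G (the weight), that the inputs are drain voltages with the sources held at the op amp’s virtual ground, and that the ADC is an ideal N-bit bipolar converter. Ohm’s law (I = G × V) performs each multiply, Kirchhoff’s current law performs the sum, and a transimpedance op amp turns the total current into a voltage. All values, and the function name `cim_row`, are hypothetical, and only positive weights are modeled.

```python
def cim_row(conductances_s, input_voltages, r_feedback, v_ref, n_bits):
    """Idealized compute-in-memory row: returns (op-amp voltage, ADC code)."""
    # Multiply: each cell's current is I = G * V (Ohm's law).
    # Sum: all cell currents meet at the shared source node (Kirchhoff's current law).
    i_sum = sum(g * v for g, v in zip(conductances_s, input_voltages))

    # Transimpedance amp holds the node at virtual ground: Vout = -Rf * I.
    v_out = -r_feedback * i_sum

    # Ideal bipolar ADC spanning -v_ref..+v_ref, clipped to its code range.
    levels = 2 ** n_bits
    lsb = 2 * v_ref / levels
    code = round(v_out / lsb)
    code = max(-levels // 2, min(levels // 2 - 1, code))
    return v_out, code


# Hypothetical weights stored as conductances (siemens) and input voltages (volts).
weights_s = [1e-6, 2e-6, 0.5e-6, 4e-6]
inputs_v = [0.1, 0.05, 0.2, 0.0]

v_out, code = cim_row(weights_s, inputs_v, r_feedback=1e6, v_ref=1.0, n_bits=8)
print(f"summed current -> op-amp output {v_out:.3f} V, ADC code {code}")
```

Every cell in the row contributes its product simultaneously; the ADC conversion at the end is the only step that happens one result at a time per row.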

Realize that with dozens or hundreds of cells being summed at one node, each “multiplier” must be scaled to a very tiny output so the total stays within range. Thus, each device has to operate in the very deep subthreshold region, just above the noise floor.
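Some rough arithmetic shows how small each cell’s share gets. Assuming, hypothetically, that the summing amplifier and ADC handle 10 µA full scale, and that every cell in a row might be at its maximum at once, each cell’s maximum current is simply the full-scale current divided by the number of cells summed. These are illustrative numbers, not Sagence AI’s or anyone else’s actual design values.

```python
FULL_SCALE_A = 10e-6  # hypothetical summing-node full-scale current: 10 uA


def per_cell_budget(full_scale_a, n_cells):
    """Largest current each cell may contribute if all n_cells can be at maximum."""
    return full_scale_a / n_cells


for n_cells in (16, 64, 256, 1024):
    budget_na = per_cell_budget(FULL_SCALE_A, n_cells) * 1e9
    print(f"{n_cells:5d} cells -> {budget_na:8.2f} nA per cell")
```

At hundreds of cells, the budget drops into the tens of nanoamps or less, the kind of current level at which small MOSFETs typically operate in weak inversion (subthreshold).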

We now have implemented the “inferencing” phase of GenAI—using the training-derived weights to compute outputs based on new inputs.

Summing It Up

This “COMPUTE IN MEMORY” architecture is something we’ve already seen from Mythic, though it appears to be primarily focused on enabling GenAI in devices like drones, robots, AR/VR, and surveillance cameras. However, another stealth startup, seed-funded by the likes of Vinod Khosla, Atiq Raza, and Andy Bechtolsheim, is having its coming-out party today, November 19, 2024: “Sagence AI.”

Though Sagence AI, too, plans to AI-enable devices, much like Mythic’s product strategy, it appears to be developing a complete ecosystem of silicon, packaging, coprocessors, and software to take on the likes of NVIDIA in data-center inferencing applications. Its benchmarks, which run Llama2-70B with the NVIDIA H100 as the reference, are impressive, particularly in price and power (Fig. 4).

I was briefed on their technology a couple of weeks ago, under embargo. But now that they’ve come out of the (server) closet, I’m happy to share that presentation by Sagence AI with our readers:

I also asked some candid questions, which they didn’t see beforehand; that exchange is captured in this second video:

We had some technical problems at my end of the Zoom call, so I had to re-record my questions, which is why I now sound like a robot reading from the transcript of what I originally asked. Tom Cruise’s job as a fine actor is clearly safe from me.

One Ping Only, Please...

Even though Sagence AI poses a serious threat to NVIDIA’s GenAI dominance, in my personal opinion, data-center power levels, even at 10X lower, are still awfully high. While we’re no longer talking about needing utility-scale, Three Mile Island levels of electrical power (and cooling for both the reactors and the data centers), we’re still in the range of tens of megawatts.

I think wind and solar, combined with battery storage, are serious contenders for a zero-CO2 generating solution for analog-inferencing data centers, though their installation and use will face constraints, whatever those turn out to be. This is where SMRs shine, in my opinion. Modest levels of power can be obtained from a fuel-once reactor architecture, and the power levels would be modest enough that fueling with spent utility fuel rods may be a very real possibility.

I was curious about SMRs, in particular micro-reactors, so I caught up with James Walker, CEO of NANO Nuclear Energy (NASDAQ:NNE) in a recorded Zoom call a few weeks ago. Again, we had a casual conversation, though I did surf the company’s website to get an idea of what they were up to before the call.

I also had technical difficulties with the recording (hey, I’m still learning this stuff). I kept my mic open (how else do you have a natural conversation with someone, vs. walkie-talkie button pushing with the mute button like a nine-year-old under the bed covers?), so our Media Editor may have had to cut some of the content; I apologize for that in advance. The echo from the computer speakers back into the mic was more than the one ping that the Zoom developers seemed to care about canceling out. I’m not going to spoil that ping reference beyond reminiscing about learning about MHD pumps in our engineering class (oops, a second spoiler).

The recording is a fascinating listen about NANO Nuclear’s plans to produce a fully self-contained electric power station rated at 5 MWt (1.5 MWe), powered by a factory-fueled nuke that’s good for 25 to 30 years of deployment in the field (Fig. 5). We chat about all kinds of cool stuff, so hopefully the good stuff didn’t wind up on the cutting-room floor.

Such a station would be great for remote mine sites, villages with six months of darkness, and possibly the energy-efficient, analog-computer-based data centers to be deployed with Sagence AI’s technology.
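Tying the power numbers together gives a rough sizing sketch: the 5-MWt and 1.5-MWe ratings imply about 30% thermal-to-electric efficiency, and dividing a data-center load by 1.5 MWe gives a station count. The 300-MW GPU data center is a hypothetical stand-in for “hundreds of megawatts,” the 10X reduction is the one discussed above, and the sketch ignores redundancy, refueling, and any site constraints.

```python
import math

UNIT_MWT = 5.0  # quoted thermal rating of the micro-reactor station
UNIT_MWE = 1.5  # quoted electrical output of the same station

efficiency = UNIT_MWE / UNIT_MWT  # thermal-to-electric conversion


def units_needed(load_mw, unit_mwe=UNIT_MWE):
    """Whole stations required to cover an electrical load (no redundancy)."""
    return math.ceil(load_mw / unit_mwe)


gpu_dc_mw = 300.0  # hypothetical GPU data center ("hundreds of megawatts")
analog_dc_mw = gpu_dc_mw / 10  # with the ~10X power reduction

print(f"conversion efficiency: {efficiency:.0%}")
print(f"GPU data center ({gpu_dc_mw:.0f} MW): {units_needed(gpu_dc_mw)} stations")
print(f"analog data center ({analog_dc_mw:.0f} MW): {units_needed(analog_dc_mw)} stations")
```

Under these assumptions, the 10X reduction takes the count from 200 container-sized stations down to 20.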

The Inside Electronics podcast (it should play on your laptop or phone without any special app) is available here. Please have a listen on your commute or as you’re chasing down that egg salad sandwich with a Yoo-hoo.

All for now,

AndyT

Andy's Nonlinearities blog arrives the first and third Monday of every month. To make sure you don't miss the latest edition, new articles, or breaking news coverage, please subscribe to our Electronic Design Today newsletter.

About the Author

Andy Turudic

Technology Editor, Electronic Design

Andy Turudic is a Technology Editor for Electronic Design Magazine, primarily covering Analog and Mixed-Signal circuits and devices. He holds a Bachelor's in EE from the University of Windsor (Ontario, Canada) and has been involved in electronics, semiconductors, and gearhead stuff for a bit over a half century.

"AndyT" brings his multidisciplinary engineering experience from companies that include National Semiconductor (now Texas Instruments), Altera (Intel), Agere, Zarlink, TriQuint,(now Qorvo), SW Bell (managing a research team at Bellcore, Bell Labs and Rockwell Science Center), Bell-Northern Research, and Northern Telecom and brings publisher employment experience as a paperboy for The Oshawa Times.

After hours, when he's not working on the latest invention to add to his portfolio of 16 issued US patents, he's lending advice and experience to the electric vehicle conversion community from his mountain lair in the Pacific Northwet[sic].

AndyT's engineering blog, "Nonlinearities," publishes on the 1st and 3rd Monday of each month. Andy's OpEds may appear at other times, with fair warning given by the VU meter pic.