This article is part of TechXchange: AI on the Edge

Machine learning (ML) is just one of the tasks that the Dynamically Reconfigurable Processor (DRP) from Renesas can take on. The DRP is found in Renesas platforms such as the RZ/A2M microprocessor, which pairs an Arm Cortex-A9 host with a DRP that does the heavy lifting for ML applications on IoT edge devices.

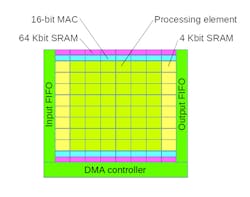

The DRP consists of an array of processing elements (PEs) designed to efficiently handle the 8- and 16-bit data common in ML neural-network inference chores (Fig. 1). It also includes multiple multiply-accumulate (MAC) units. A set of FIFOs manages the flow of data through the system, while the DMA controller moves data and configuration information to and from system memory, which is shared with the host processor.
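Why 8-bit inference still needs wide accumulators can be seen in a small sketch of a multiply-accumulate over signed 8-bit operands. This is a generic illustration, not a model of the DRP's actual PEs or MAC units; the function `mac_int8`, its saturating behavior, and the `acc_bits` parameter are all hypothetical choices made for the example.

```python
def mac_int8(weights, activations, acc_bits=32):
    """Dot product of signed 8-bit operands using a saturating accumulator.

    acc_bits is an assumed accumulator width, not a documented DRP parameter.
    """
    if len(weights) != len(activations):
        raise ValueError("weights and activations must have the same length")
    lo = -(1 << (acc_bits - 1))
    hi = (1 << (acc_bits - 1)) - 1
    acc = 0
    for w, a in zip(weights, activations):
        if not (-128 <= w <= 127 and -128 <= a <= 127):
            raise ValueError("operands must fit in signed 8 bits")
        # Each int8 x int8 product fits in 16 bits; the running sum needs
        # extra headroom, so clamp it to the accumulator's range.
        acc = max(lo, min(hi, acc + w * a))
    return acc


print(mac_int8([127] * 3, [127] * 3, acc_bits=16))  # saturates at 32767
print(mac_int8([127] * 3, [127] * 3, acc_bits=32))  # 48387
```

Three maximal products already exceed a 16-bit accumulator, which is why hardware that handles 8-bit operands typically accumulates into a wider register.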

1. Renesas’ Dynamically Reconfigurable Processor includes an array of processing elements, MACs, and memory blocks; streams of data are moved to and from main memory by the DMA controller.

Renesas tools compile C code into DRP configurations. The challenge for most ML applications is that the models are too large to be handled in a single configuration pass. The trick is that the DRP is designed to switch quickly from one configuration to another.

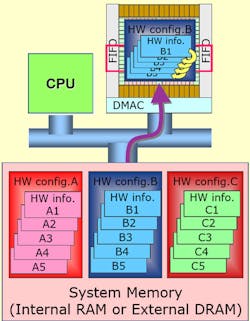

Reconfiguration actually works at two levels. The first switches configurations within 1 ns (Fig. 2) using data held in the DRP's local memory, which itself has a couple of levels. Such a switch typically modifies only a fraction of the array, so multiple configurations can be held in local memory at once. The second type of switch moves configuration data in from system memory. It typically takes less than 1 ms, but it can load multiple configurations at once.
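The cost difference between the two levels can be explored with a toy cache model. This is a minimal sketch under stated assumptions: `ConfigStore` and `run_schedule` are hypothetical names, not a Renesas API; local memory is assumed to hold a fixed number of configurations; and a miss is assumed to reload local memory wholesale with the requested configuration plus upcoming ones. The 1-ns and 1-ms figures are the article's numbers, with 1 ms used as a worst case.

```python
FAST_SWITCH_S = 1e-9  # switch between configurations already in local memory
FULL_LOAD_S = 1e-3    # worst case for a load from system memory ("less than 1 ms")


class ConfigStore:
    """Toy model of a two-level configuration scheme (hypothetical).

    capacity is an assumed number of configurations local memory can hold.
    On a miss, one system-memory load replaces local memory with the requested
    configuration plus any prefetched ones that fit.
    """

    def __init__(self, capacity):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self.resident = set()
        self.fast_switches = 0
        self.full_loads = 0
        self.elapsed_s = 0.0

    def switch_to(self, name, prefetch=()):
        if name in self.resident:
            # Configuration is already local: fast switch.
            self.fast_switches += 1
            self.elapsed_s += FAST_SWITCH_S
        else:
            # A single transfer brings in several configurations at once.
            self.full_loads += 1
            self.elapsed_s += FULL_LOAD_S
            self.resident = set([name, *prefetch][: self.capacity])


def run_schedule(schedule, capacity):
    """Walk a fixed configuration schedule, prefetching the upcoming entries."""
    store = ConfigStore(capacity)
    for i, name in enumerate(schedule):
        store.switch_to(name, prefetch=schedule[i + 1 : i + capacity])
    return store


store = run_schedule(["conv1_a", "conv1_b", "conv2_a", "conv2_b"], capacity=2)
print(store.full_loads, store.fast_switches)  # 2 2
```

With room for two configurations, four steps cost two slow loads and two fast switches; doubling the assumed capacity to four cuts it to one load and three fast switches, which is the payoff of keeping several configurations resident.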

2. The DRP can switch its configuration in 1 ns when the information is contained in local memory. A full firmware switch, in which the configuration is brought in from system memory, takes less than 1 ms.

The fast type of context switch allows a neural-network layer to be processed one section at a time, which is necessary because the processing for many layers won't fit in some DRP implementations. The 1-ns switch occurs between sections.
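Splitting a layer into sections can be illustrated by partitioning its output channels. This sketch assumes, purely for illustration, that one configuration can compute a fixed number of output channels; `split_layer`, `switch_overhead_s`, and `channels_per_config` are hypothetical, and a real DRP toolchain would decide the partitioning itself.

```python
FAST_SWITCH_S = 1e-9  # on-chip switch time cited in the article


def split_layer(out_channels, channels_per_config):
    """Split a layer's output channels into sections that each fit one configuration.

    channels_per_config is a hypothetical capacity figure, not a DRP spec.
    Returns half-open (start, end) channel ranges.
    """
    if out_channels <= 0 or channels_per_config <= 0:
        raise ValueError("channel counts must be positive")
    return [
        (start, min(start + channels_per_config, out_channels))
        for start in range(0, out_channels, channels_per_config)
    ]


def switch_overhead_s(sections):
    # One fast switch between each pair of consecutive sections.
    return max(len(sections) - 1, 0) * FAST_SWITCH_S


sections = split_layer(100, 32)
print(sections)  # [(0, 32), (32, 64), (64, 96), (96, 100)]
```

Four sections need three fast switches, about 3 ns of overhead, which is negligible next to the compute time of a typical layer.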

Handling ML models is only part of what the DRP can be programmed to do. It can manage many stream-processing chores and will typically be needed for pre- and post-processing as well. Keeping these configurations in system memory allows them to be swapped in as processing proceeds.

Developers will have to take timing into account when using the DRP, but its flexibility enables the system to handle a wider range of applications. Timing and capacity limits mean it can't handle every application, but the ability to switch configurations quickly lets it address many that are beyond the scope of less flexible systems.
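Taking timing into account can be as simple as a worst-case frame budget. In this sketch, every stage (pre-processing, inference, post-processing) is charged either a full load from system memory or a fast switch before it runs, and the total is compared with the frame period. The `Stage` type, the stage names, and every compute time are hypothetical placeholders; only the 1-ns and 1-ms switch figures come from the article.

```python
from dataclasses import dataclass

FAST_SWITCH_S = 1e-9  # configuration already in local memory
FULL_LOAD_S = 1e-3    # worst case for a load from system memory


@dataclass
class Stage:
    name: str
    compute_s: float       # assumed (measured or estimated) compute time
    needs_full_load: bool  # True if its configuration comes from system memory


def frame_time_s(stages):
    """Worst-case time for one frame: switch cost plus compute for each stage."""
    total = 0.0
    for stage in stages:
        total += FULL_LOAD_S if stage.needs_full_load else FAST_SWITCH_S
        total += stage.compute_s
    return total


def fits_frame_rate(stages, fps):
    return frame_time_s(stages) <= 1.0 / fps


pipeline = [
    Stage("preprocess", 2e-3, True),
    Stage("inference", 20e-3, True),
    Stage("postprocess", 1e-3, True),
]
print(fits_frame_rate(pipeline, 30))  # True: about 26 ms vs. a 33.3-ms budget
print(fits_frame_rate(pipeline, 60))  # False: the budget is only 16.7 ms
```

Charging the full 1 ms per load is deliberately pessimistic; if a pipeline fits under that assumption, it has margin to spare.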

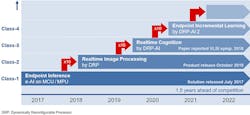

3. Renesas’ AI roadmap shows DRP giving edge nodes a 10X performance boost, with future versions providing similar improvements.

The RZ/A2M is just the first of a host of DRP-based solutions from Renesas. The Cortex-A9 configures and supports the DRP, while the DRP provides high-speed stream processing. This puts DRP technology at the forefront of Renesas' AI support, with the current iteration providing a tenfold improvement over the company's software-based e-AI support (Fig. 3).

The ability to reconfigure the DRP should allow developers using Renesas parts to handle current and most future neural-network models. The RZ/A2M series will be able to support many ML applications that would bring most other Cortex-A-based solutions to a standstill.
