As a species, we are obsessed with time. Our technological exploits rely on an increasingly exact knowledge of time. For example, some communications systems require frequency uncertainty <10⁻¹¹ throughout the network. Even social drinking is constrained by the time it takes and the time it must end.

In a remote rural community, for example, the bar clock might be set manually to agree with time announcements provided by the local radio station. Of course, if the clock has a quartz movement, it simply may be left to run until the battery dies, all the while serving as the undisputed arbiter of opening and closing times.

A bartender in a university town has more problems. In addition to rowdy students, he caters to a few science, humanities, and engineering professors. The 0.01% accuracy of a cheap quartz-movement wall clock is positively Neolithic to this segment of customers.

For example, one particularly intense engineering faculty member pointed out that his $50 clock radio, synchronized to the NIST WWVB signal, provided a time check every second accurate to better than 100 µs. The 60-kHz carrier itself is accurate to 1 part in 10¹², and the time is directly related to coordinated universal time (UTC).

Then there is the high-tech-science-park bartender offering unibrew, a drink claimed to be computer-blended at the molecular level to match the taste and consistency of any beer in the world. Reservations at the bar are placed by e-mail, and the Bluetooth-enabled entryway registers all visitors, their charge-card numbers, and beverage preferences as they walk in. There’s really no point in considering anything less than a full-blown, eight-channel global positioning system (GPS) timing receiver when dealing with this clientele.

Making High-Accuracy Time Measurements

Generally, you have two choices when making time and frequency measurements. Either you can use an instrument that inherently provides high accuracy or you can attach a highly accurate reference source to an instrument with lower accuracy.

Digital storage oscilloscopes (DSOs) are useful for making moderately accurate measurements for two reasons: they use crystal oscillator time bases, and they can count. For example, by counting the exact number of reference markers on one channel, you can perform a very accurate measurement of an unknown oscillator frequency on another channel, perhaps to 1 ppm or better.
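The counting approach described above can be sketched in a few lines. This is only an illustration: the function names, the 10-MHz reference, and the counts are assumptions made for the example, not values taken from any particular scope.

```python
def frequency_from_counts(ref_hz: float, ref_count: int, unknown_count: int) -> float:
    """Estimate an unknown frequency from cycle counts taken over the same capture window.

    ref_hz        -- frequency of the reference markers (assumed known exactly)
    ref_count     -- reference cycles counted in the window
    unknown_count -- cycles of the unknown oscillator counted in the same window
    """
    if ref_count <= 0:
        raise ValueError("ref_count must be positive")
    return ref_hz * unknown_count / ref_count


def count_resolution_ppm(unknown_count: int) -> float:
    """Size of a one-count ambiguity, expressed in ppm of the reading."""
    if unknown_count <= 0:
        raise ValueError("unknown_count must be positive")
    return 1e6 / unknown_count


# Hypothetical capture: 1,000,000 cycles of a 10-MHz reference (0.1 s)
# during which 1,234,567 cycles of the unknown oscillator were counted.
estimate_hz = frequency_from_counts(10e6, 1_000_000, 1_234_567)
print(f"estimated frequency: {estimate_hz / 1e6:.5f} MHz")
print(f"one-count resolution: {count_resolution_ppm(1_234_567):.2f} ppm")
```

The result does not depend on the scope's own time base, only on the reference and on how many cycles are counted, which is why a longer record gives finer resolution.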

Direct timing measurements are limited by the typical time-base accuracy of only 100 ppm or so, although accuracy varies with instrument price. Some of the Tektronix Series 7000 scopes specify time-base accuracy as a range-dependent offset plus 10 to 15 ppm of the reading. Similarly, the horizontal (timing) accuracy of LeCroy’s WavePro Series is specified as <10 ppm. So, about the best timing accuracy you can buy in a DSO is one part in 10⁵.

Some DSOs have external clock inputs, and a much more accurate external clock could be used to give a more accurate time base. But even if the external source were perfect, internal scope time-base divider and range selection circuitry would limit performance. The scope circuitry jitter will introduce uncertainty about the position of any one sample. On average, the time base will be nearly as accurate as the external source, assuming the scope contributes random rather than systematic errors.

If the scope resynchronizes the external clock to its asynchronous internal clock, as some do, no improvement in accuracy will result from using an external clock. For this type of scope, the external clock only provides synchronization to a changing or odd-value clock source, such as a stream of pulses from a shaft encoder.
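To put the DSO time-base figures quoted above in perspective, the following sketch converts a ppm specification into the error on a measured interval. It assumes the ppm term is the only error source, ignoring the range-dependent offset and sample jitter mentioned in the text. The function name and the 1-ms interval are chosen purely for illustration.

```python
def timing_error_s(interval_s: float, accuracy_ppm: float) -> float:
    """Error on a measured interval caused by a time base that is off by accuracy_ppm."""
    return interval_s * accuracy_ppm * 1e-6


# Compare a typical 100-ppm time base with a 10-ppm one on a 1-ms interval.
for ppm in (100, 10):
    err_ns = timing_error_s(1e-3, ppm) * 1e9
    print(f"{ppm:>3} ppm time base, 1 ms interval: about {err_ns:.0f} ns of error")
```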

Timer/counters are another class of instrument that can be used to measure time and frequency accurately. Agilent Technologies offers a range of general-purpose 531xx models that measure frequency up to 12.4 GHz. They are available with several different oven-stabilized time bases. The best has less than 1 part in 10¹⁰ drift in a day. This compares to the standard time-base stability of about 3 parts in 10⁶.

A related instrument, a time interval analyzer (TIA), operates in a slightly different manner, giving it significant advantages. Both the TIA and a timer/counter can measure the interval between start and stop events. A timer/counter provides successive, independent estimates of a source’s frequency or pulse width, but it stops between measurements. The TIA can measure start/stop periods with no dead time between periods; in other words, it times from event to event with no gaps.

The TIA also time-tags each measurement referred to the first event, and it can do this across several channels simultaneously. This means that, for example, you can determine period length by subtracting successive measurements from each other. Plotting the results of further subtracting the successive periods shows deviation vs. time. If you have been using the TIA to measure the period of an oscillator, then this plot shows timing jitter. Typical TIA accuracy is 1 ppm, although short-term stability can be much higher.
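The subtraction steps described above are easy to express in code. The sketch below uses made-up time tags in nanoseconds for a nominal 1-µs period; the function names are illustrative and do not correspond to any instrument's API.

```python
def periods_from_tags(tags):
    """Period of each cycle: the difference between successive time tags."""
    return [later - earlier for earlier, later in zip(tags, tags[1:])]


def period_to_period_deviation(periods):
    """Change from one period to the next: the quantity plotted against time."""
    return [later - earlier for earlier, later in zip(periods, periods[1:])]


# Hypothetical time tags in ns, all referred to the first event (tag 0).
tags_ns = [0, 1000, 2001, 2999, 4000]

periods_ns = periods_from_tags(tags_ns)
deviation_ns = period_to_period_deviation(periods_ns)

print("periods (ns):  ", periods_ns)
print("deviation (ns):", deviation_ns)
```

Because the tags come from one continuous record with no dead time, every period in the record is accounted for, and no cycle falls between measurements.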

For calibrating oscillators or delays, a counter/timer could be the answer unless you require even better accuracy. What do you do, for example, if you’re manufacturing counter/timers and require a reference at least 10× or 100× better than 1×10⁻¹⁰ accuracy? The answer is to use a time base with better accuracy and stability than an oven-stabilized quartz crystal oscillator, such as rubidium or cesium oscillators, the so-called atomic clocks.

There are major differences between the operation of quartz crystal oscillators and atomic clocks. Quartz is cut along particular crystallographic axes to provide thin slabs with good temperature characteristics. The slab actually vibrates mechanically at the oscillator frequency. For frequencies above about 30 MHz (AT cut) or 50 MHz (BT cut), overtone crystals are used because the slab becomes too thin and fragile to use for high-frequency fundamental oscillators.

A tuned tank circuit causes the circuit to oscillate at typically three, five, or seven times the fundamental crystal frequency. The most stable quartz crystal oscillators hold the temperature of the crystal constant by placing it in a miniature oven.

The transition of electrons from one energy state to another is the physical process upon which rubidium and cesium oscillators are based. A rubidium time base relies on a small change in the optical characteristics of gaseous rubidium when excited at a frequency of 6.834682612 GHz. When subjected to microwaves at this frequency, rubidium atoms absorb energy, and light transmission through the gas drops by about 0.1%. A feedback loop tunes the microwave oscillator to produce the maximum dip in light output.

In cesium time bases, higher energy atoms are separated from lower energy ones. A count of the higher energy ones serves as the feedback signal, in this case driving an oscillator to 9.192631770 GHz. The standard second is defined as 9,192,631,770 cycles of the natural frequency of cesium atoms.

Unparalleled Accuracy Anywhere

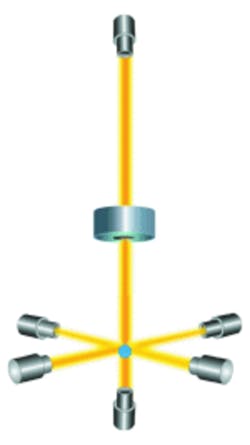

The GPS comprises at least 24 satellites, each with four rubidium or cesium atomic clocks on board. A GPS receiver processes position information broadcast by the satellites. All satellites transmit signals on the same two frequencies, L1 = 1.57542 GHz and L2 = 1.2276 GHz, but each satellite is identified by its own pseudorandom code.

The time differences among signals are used to determine the receiver’s position to within 100 m. Actually, now that the U.S. Department of Defense, which controls and operates GPS, has turned off the selective availability feature, position can be determined to within 10 m.

The U.S. Naval Observatory (USNO) uses many cesium clocks, in its own facilities and at related organizations such as NIST, to derive an average time signal toward which a master UTC clock is steered. UTC(USNO) and UTC(NIST) are within 100 ns of each other. The frequency difference is <10⁻¹³.

When working with the small uncertainties exhibited by atomic clocks, long-term averaging is required as well as more specialized statistical data processing. As an example, Agilent Technologies specifies the time-domain stability of the Model 5071A Cesium Primary Frequency Standard as varying from 1 part in 10⁹ when averaged for 1 ms to 1 part in 10¹⁴ over 10⁶ s (11.5 days).
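The article does not name the statistic behind these figures. One measure commonly used for stability versus averaging time is the Allan deviation, and the sketch below assumes that choice. It implements the simple non-overlapping form on a list of fractional-frequency readings; the sample data is artificial and chosen only to show how averaging changes the result.

```python
import math


def allan_deviation(y, m=1):
    """Non-overlapping Allan deviation of fractional-frequency samples.

    y -- fractional-frequency readings taken at a fixed base interval
    m -- averaging factor: the averaging time is m times the base interval
    """
    n_groups = len(y) // m
    if m < 1 or n_groups < 2:
        raise ValueError("need at least two complete averaging groups")
    # Average the readings in consecutive, non-overlapping groups of m.
    means = [sum(y[k * m:(k + 1) * m]) / m for k in range(n_groups)]
    # Half the mean squared difference between adjacent group averages.
    diffs = [b - a for a, b in zip(means, means[1:])]
    return math.sqrt(sum(d * d for d in diffs) / (2 * len(diffs)))


# Artificial readings that alternate around zero (in units of 1e-12).
readings = [1.0, -1.0] * 4

print("averaging factor 1:", allan_deviation(readings, m=1))
print("averaging factor 2:", allan_deviation(readings, m=2))
```

In this contrived case the alternating error disappears completely once two readings are averaged together. Real clock noise falls off more gradually, which is why a specification quotes stability at several averaging times.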

Control signals are sent to the satellites to minimize the differences between the clock frequencies and that of the master UTC clock. So, when you receive a satellite signal, there is direct traceability to UTC. A receiver intended to provide time and frequency information can be compensated for antenna, antenna-cable, and receiver delays to produce a time signal synchronized to within 100 ns of UTC.

Statistical data associated with the differences between UTC and each satellite’s broadcast time is logged daily and made available on gpsmonitor.timefreq.bldrdoc.gov/scripts/gpsview.exe. For example, on June 5, 2001, satellite 13 was viewed for 340 minutes, had a mean-time offset from UTC of -15.75 ns, exhibited a range of 10.15 ns peak-to-peak around the mean, and showed a time deviation of 1.4 ns (deviation among many 10-min averages) and a frequency offset of <1×10⁻¹³ from UTC.

As important as the GPS system is, it doesn’t solve every timekeeping problem. For example, Errol Shanklin, a senior marketing specialist with the precise time and frequency products group at Agilent Technologies, mentioned several weaknesses: the airlines’ need for better vertical position accuracy, GPS’ vulnerability to jamming, and the inherent dependence on a military distribution system.

There also are requirements for GPS-independent, stand-alone timekeeping. Smaller size, lower power, and faster acquisition than GPS are needed for mobile tracking and communications applications.

A recent development based on the GPS timing receiver is the GPS-disciplined oscillator (GPSDO). As its name suggests, this instrument contains a separate, very stable oscillator that can be steered to closely track GPS-derived frequency. The advantage of a GPSDO is high accuracy at relatively low cost.

A GPS timing receiver may track several satellites simultaneously, providing the average of the time and frequency signals. Over a period of a day or more, the accuracy of a GPSDO can be 1 part in 10¹⁴ or better. The downside of a GPSDO is lower short-term accuracy, similar to that of the internal oven-stabilized quartz or rubidium oscillator.

The Fluke 910/910R GPS Controlled Frequency Standard has added traceability to the benefits of a GPSDO. According to Dave Postetter, a product marketing manager at Fluke Precision Measurement, “Traceability is achieved by integrating a timer/counter into the circuitry of the frequency reference. This continuously measures the errors and maintains an internal database of frequency offset over a period of up to two years.”

The so-called common-view technique improves accuracy by eliminating most of the errors associated with a single-receiver GPS measurement system. Two receivers, separated by no more than a couple of thousand miles, track one or more of the same satellites, determine time and frequency, and then compare their results. Because they experience similar ionosphere- and troposphere-induced errors and because they use the same satellite data, the remaining differences between their time and frequency results are small.
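The cancellation at the heart of common view can be shown with a small sketch. Each site is assumed to log its local clock minus the received GPS time for each satellite and epoch. The data layout, the function name, and all numbers below are hypothetical and chosen only to make the arithmetic visible.

```python
def common_view_difference(site_a, site_b):
    """Clock A minus clock B for every (satellite, epoch) observed at both sites.

    Each argument maps (satellite_id, epoch_s) -> local clock minus received GPS time, in ns.
    Errors common to both paths drop out of the difference.
    """
    shared = sorted(site_a.keys() & site_b.keys())
    return {key: site_a[key] - site_b[key] for key in shared}


# Hypothetical true offsets of the two local clocks from GPS time (ns).
clock_a_ns, clock_b_ns = 40, 15

# Hypothetical errors shared by both sites for a given satellite and epoch (ns).
shared_error_ns = {(13, 0): -16, (7, 0): 8, (13, 600): -14}

site_a = {key: clock_a_ns + err for key, err in shared_error_ns.items()}
site_b = {key: clock_b_ns + err for key, err in shared_error_ns.items()}
site_b[(21, 600)] = clock_b_ns + 3  # seen only at site B, so it is ignored

for key, diff in common_view_difference(site_a, site_b).items():
    print(f"satellite {key[0]}, epoch {key[1]} s: A - B = {diff} ns")
```

In practice the two sites do not see exactly the same path errors, so the cancellation is only partial. That is the reason for the limit on receiver separation.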

As an example of the frequency accuracy that soon will be available commercially, consider a prototype system recently built at NIST. A multichannel common-view receiver has been integrated with a PC and coupled to the Internet. A similar system could be sent to a distant location and connected to a GPS frequency standard there.

Data from the remote receiver and the NIST-based reference receiver is uploaded to a central web server. Software can automatically process the sets of data to provide the current uncertainty of the remote-site frequency relative to UTC. On-line traceability to UTC(NIST) with data no more than 10 minutes old is about as good as it gets.

How About an IRIG Clock?

A related term often encountered in instrumentation, particularly data acquisition and recording systems, is Inter-Range Instrumentation Group (IRIG). Serial time-code formats are described in IRIG Standard 200-89, published by the Range Commanders Council of the U.S. Army White Sands Missile Range. IEEE 1344-1995 references this standard and describes the IRIG time-code format B in some detail (Figure 1, see the September 2001 issue of Evaluation Engineering).

This is a 74-bit time code that includes 30 bits of binary-coded decimal (BCD) time-of-year information (7 bits for seconds, 7 for minutes, 6 for hours, and 10 for days), 17 bits of straight binary seconds of day, and 27 bits for control functions (8 bits for the last two digits of the year in BCD format, 2 for daylight saving time, 2 for leap second adjustment, 6 for local time offset from UTC, 4 for time quality, 1 for parity, and 4 not used).
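The field widths listed above can be tabulated and checked against the 74-bit total, as in the sketch below. It also shows why 17 bits suffice for the seconds-of-day count. The dictionary keys and function name are illustrative labels, and the sketch deliberately says nothing about where each field sits within the transmitted frame.

```python
# Field widths in bits, as listed in the text.
BCD_TIME_OF_YEAR = {"seconds": 7, "minutes": 7, "hours": 6, "days": 10}
STRAIGHT_BINARY_SECONDS_BITS = 17
CONTROL_FUNCTIONS = {
    "year_bcd": 8,
    "daylight_saving": 2,
    "leap_second": 2,
    "local_offset": 6,
    "time_quality": 4,
    "parity": 1,
    "unused": 4,
}

total_bits = (
    sum(BCD_TIME_OF_YEAR.values())
    + STRAIGHT_BINARY_SECONDS_BITS
    + sum(CONTROL_FUNCTIONS.values())
)
print("total bits:", total_bits)


def straight_binary_seconds(hours: int, minutes: int, seconds: int) -> str:
    """Seconds elapsed since midnight as a 17-bit binary string."""
    count = hours * 3600 + minutes * 60 + seconds
    if not 0 <= count < 86_400:
        raise ValueError("time of day out of range")
    return format(count, f"0{STRAIGHT_BINARY_SECONDS_BITS}b")


# The largest value, 86,399 at 23:59:59, is below 2**17 = 131,072.
print("23:59:59 ->", straight_binary_seconds(23, 59, 59))
```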

IRIG time coding is applicable to any source, and the 4-bit time-quality field occupying control bits 20 to 23 indicates time accuracy or synchronization relative to UTC. Codes increment in decades of uncertainty from 1 ns to 10 s of deviation from UTC, including the extremes of 1111 (time not reliable) and 0000 (time locked to GPS/UTC). IRIG is not a time signal, but rather the coding format used to present timing information from a clock source, and used to time-tag data.
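A decoder for the 4-bit time-quality field might look like the following sketch. The text gives only the two special codes and the 1-ns to 10-s span. The mapping assumed here, with codes 0001 through 1011 stepping up one decade each, is an inference from that description and should be checked against the standard before use. The function name is illustrative.

```python
def describe_time_quality(code: int) -> str:
    """Describe a 4-bit time-quality code (assumed mapping, see lead-in)."""
    if not 0 <= code <= 0b1111:
        raise ValueError("time quality is a 4-bit field")
    if code == 0b0000:
        return "time locked to GPS/UTC"
    if code == 0b1111:
        return "time not reliable"
    if code <= 0b1011:
        # Assumed: code 1 means within 1 ns (1e-9 s), code 11 means within 10 s (1e1 s).
        return f"within 1e{code - 10} s of UTC"
    return "code not assigned in this sketch"


for code in (0b0000, 0b0001, 0b0100, 0b1011, 0b1111):
    print(f"{code:04b}: {describe_time_quality(code)}")
```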

Subdividing the Picosecond

Before the NIST F-1 cesium fountain clock became operational last year, cesium clocks used hot atoms. Cesium gas was excited by microwaves at approximately 9.192 GHz, and the atoms with electrons in a higher energy state were detected by a spectrometer. Tuning the microwave oscillator to achieve the maximum number of excited atoms locked the oscillator’s frequency to cesium’s natural frequency.

In a radical departure from previous designs, the cesium fountain clock uses lasers to cool the cesium atoms to a few millionths of a degree above absolute zero. At this temperature, they move much more slowly and can be observed for a longer time. As a result of cooling and slowing the atoms, a more distinct feedback signal can be generated, leading to a more accurate estimation of the natural frequency.

In Figure 2c, all the lasers have been turned off, and gravity causes the atoms to fall back through the cavity. On both passes through the cavity, some atoms will change state. Finally, in Figure 2d, another laser triggers higher energy atoms to fluoresce, producing the detected output signal.

References

- Lombardi, M. et al, Time and Frequency Measurements Using the Global Positioning System (GPS), NIST, Time and Frequency Division, Measurement Science Conference, January 2001.

- IRIG Serial Time Code Formats, www.eestech.com/eestech/irig.html

- IEEE Std 1344-1995 Excerpts Applicable to Control Bits for Date Encoding, www.accuratetime.com/data_files/ieee1344.pdf, W. Clark & Associates, Ltd., masterclock.com


Published by EE-Evaluation Engineering

All contents © 2001 Nelson Publishing Inc.

No reprint, distribution, or reuse in any medium is permitted without the express written consent of the publisher.

September 2001