Hardware vendors have accelerated data collection rates so quickly, and enabled engineers and scientists to break through data-rate and data-resolution barriers so rapidly, that they have triggered a new wave of software consequences: engineers now face massive volumes of data to sift through to isolate the information they seek. While technology has made it possible to capture data faster and in richer detail, managing and making good use of this data have become the real challenges. It’s not about who can collect the most data; it’s about who can quickly make sense of the data they collect.

Today’s complex products require data acquisition throughout the complete design, development, and verification process. Engineers continue to face the challenge of testing increasingly complicated designs with ever-shrinking timelines and budgets to meet consumer demand for higher quality products at lower prices. From the cost of simulation systems, data acquisition hardware, and automation systems to the associated personnel required to perform and analyze tests, companies make a significant investment in the data they collect.

Display of Data Acquired Using NI CompactDAQ

Depending on the application, data volume can become a problem in two scenarios: collecting a little data over a long period of time or collecting a lot of data in a short time. Many engineers won’t label themselves a part of either group, but should.

Consider the fact that 32-bit Windows operating systems allocate a maximum of 2 GB of memory to an application. This means that engineers using IEPE microphones collecting just four channels of 24-bit audio data at CD-quality sampling rates (44.1 kS/s) won’t be able to open all of their data at one time after little more than 30 minutes of acquisition.

The volume of data acquired is a simple equation multiplying rate, resolution, and duration by the number of acquisition channels. Many applications dictate several of these parameters, which means that engineers may not have control over the volume of data they’re collecting and will wind up drowning in their data whether they like it or not.
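The volume equation above can be sketched in a few lines of Python. The function names are illustrative, and the bytes-per-sample figure is an assumption: the text gives the acquisition resolution (24 bits) but not how wide each sample becomes once loaded into memory, so the sketch assumes samples are expanded to 64-bit floating-point values, a common in-memory representation.

```python
def data_volume_bytes(sample_rate_hz, bytes_per_sample, duration_s, channels):
    """Volume = rate x resolution x duration x channel count."""
    return sample_rate_hz * bytes_per_sample * duration_s * channels


def seconds_until_limit(limit_bytes, sample_rate_hz, bytes_per_sample, channels):
    """How long an acquisition can run before its data exceeds limit_bytes."""
    return limit_bytes / (sample_rate_hz * bytes_per_sample * channels)


LIMIT = 2 * 1024**3      # the 2 GB per-application limit, taken here as 2 GiB
BYTES_IN_MEMORY = 8      # assumption: each sample held as a 64-bit float

# Four channels at 44.1 kS/s for 30 minutes.
volume = data_volume_bytes(44_100, BYTES_IN_MEMORY, 30 * 60, 4)
minutes = seconds_until_limit(LIMIT, 44_100, BYTES_IN_MEMORY, 4) / 60

print(f"30 minutes of data: {volume / 1024**3:.2f} GiB")
print(f"Time to reach the limit: {minutes:.1f} minutes")
```

Changing `BYTES_IN_MEMORY` shows how sensitive the result is to sample width, which is one of the parameters an application often dictates.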

By following a few simple guidelines, the data problem can be simplified on three fronts: data storage, data mining, and data processing.

Storing Data for Efficiency

When building a data acquisition system, the last thing an engineer wants to think about is how to appropriately save that data. Engineers often end up selecting a storage technique that most easily meets the needs of the moment. Yet data storage can have a sizeable effect on the overall efficiency of the acquisition system, the postprocessing options for the data, and the ultimate scalability of an operation. Storage considerations must include format, size, organization, and context.

Traditionally, measurement data has been stored in either files or databases. Each yields its own advantages and disadvantages. For most engineers, the easiest and fastest way to store data for later sharing or reuse is to save data to files.

Files offer the convenience and flexibility to tailor a format specific to an application’s requirements. Engineering system design software typically has a variety of choices of integrated support for file I/O, usually including options that also allow for the creation of both custom ASCII and binary file formats. Learning to read or write data files requires no extra effort beyond the initial investment of learning the design software’s programming paradigm. This means that engineers can quickly, easily, and cheaply write applications that collect data and store it to a file for later reference.

Additionally, files typically are easy to exchange with other engineers as long as any customized file formats in use do not demand a specialized reader application. ASCII files, for example, can be sent to colleagues and opened in common software, such as Notepad, that is available on most computers.

Unfortunately, this luxury comes at a cost. Choosing an ASCII file format is often the first mistake engineers make: because every value is written out as text, these files carry significant storage overhead, which makes them extremely inefficient for storing time-based measurement data. Engineers should opt for a binary file format instead.
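As a rough illustration of that overhead, the following Python sketch writes the same samples once as text and once as packed binary and compares the sizes. The data is randomly generated for illustration only, and the layout (one value per line for ASCII, 8-byte doubles for binary) is an assumption rather than any particular product's format.

```python
import random
import struct

random.seed(0)  # reproducible illustrative data
samples = [random.uniform(-10.0, 10.0) for _ in range(10_000)]

# ASCII: one value per line; repr() keeps enough digits to round-trip a double.
ascii_bytes = "\n".join(repr(x) for x in samples).encode("ascii")

# Binary: each value packed as an 8-byte little-endian IEEE 754 double.
binary_bytes = struct.pack(f"<{len(samples)}d", *samples)

print(f"ASCII : {len(ascii_bytes):,} bytes")
print(f"binary: {len(binary_bytes):,} bytes")
print(f"ratio : {len(ascii_bytes) / len(binary_bytes):.2f}x")

# The binary form is read back without any text parsing and without loss.
restored = list(struct.unpack(f"<{len(samples)}d", binary_bytes))
```

Writing fewer digits would shrink the ASCII file, but only by discarding measurement precision, which is a trade-off the binary form avoids.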

As advantageous as files seem, what engineers gain in flexibility they usually lose in organizational efficiency. Because files are flexible in storage format (the *.XLSX format, for example, can be organized in thousands of different ways), data often gets stored differently from one engineer to another and from one project to another. In many cases, engineers simply pick the first file format they come across, not knowing which is best for a particular type of measurement application.

Each file format introduced over time adds layers of complexity to an organization, especially when teams need to share information. Instead of each engineer reinventing the wheel by storing data differently, teams should opt for a file format that has both implicit and scalable structure.

Selecting a binary file format with implicit structure is not a silver bullet. The constraints of an application may still force engineers to acquire more data than can comfortably be processed. Further storage decisions must be made that balance the size of data files with the number of files saved. For example, engineers continuously collecting data for a month could store all of that data to one single file or segment data into 43,200 files representing one-minute chunks. Which is best?

There is no one correct answer. A single file representing one month of data may be fine if the application collects one channel of data sampled at 1 Hz. Conversely, data segmented into one-minute chunks may still prove unwieldy if data is collected over 10 channels sampled at 1 MS/s. Again, data storage is a delicate balance of rate, resolution, duration, and channel count.
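The two cases above can be put into numbers with a short Python sketch. The text does not state a sample width for these examples, so the 8 bytes per sample used here is an assumption, as is the 30-day month.

```python
BYTES_PER_SAMPLE = 8  # assumption: samples stored as 64-bit values


def file_size_bytes(channels, sample_rate_hz, duration_s):
    """Size of one file holding duration_s seconds of data."""
    return channels * sample_rate_hz * duration_s * BYTES_PER_SAMPLE


MONTH_S = 30 * 24 * 60 * 60  # assumption: a 30-day month

# One channel at 1 Hz, a whole month in a single file.
one_month_slow = file_size_bytes(channels=1, sample_rate_hz=1, duration_s=MONTH_S)

# Ten channels at 1 MS/s, a single one-minute file.
one_minute_fast = file_size_bytes(channels=10, sample_rate_hz=1_000_000, duration_s=60)

print(f"one month, 1 ch @ 1 Hz     : {one_month_slow / 1e6:.1f} MB")
print(f"one minute, 10 ch @ 1 MS/s : {one_minute_fast / 1e9:.1f} GB")
```

Under these assumptions, the month-long file is far smaller than the one-minute file, which is why segment length alone cannot be chosen without considering rate and channel count.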

Engineers facing a cumbersome data volume should begin by segmenting data storage based on the lowest time resolution at which they will want high-level insight into their data. For example, in the application that stores data continuously for one month, engineers may want to visualize the entire month’s data set but really only care about seeing data points graphed at each hour. In that case, engineers should segment files so each file corresponds to one-hour increments of time.

For each file, descriptive statistics such as minimum and maximum (to account for extrema) and mean (to trend) then should be calculated and saved to the file as metadata to provide context for the file’s time segment as a whole. This makes it much easier to view the measurement at a high level but then dig into any one-hour increments that are cause for concern or further exploration.
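A minimal Python sketch of this idea follows. It groups samples into one-hour segments and computes the minimum, maximum, and mean of each; the function name, the dictionary layout, and the sample data are all hypothetical, and a real system would write each summary into the corresponding file's metadata rather than keep it in memory.

```python
from statistics import fmean


def summarize_segments(timestamps_s, values, segment_s=3600):
    """Group samples into fixed-length time segments and summarize each one.

    Returns {segment_index: {"min", "max", "mean", "count"}}.
    """
    buckets = {}
    for t, v in zip(timestamps_s, values):
        buckets.setdefault(int(t // segment_s), []).append(v)
    return {
        idx: {"min": min(vs), "max": max(vs), "mean": fmean(vs), "count": len(vs)}
        for idx, vs in sorted(buckets.items())
    }


# Hypothetical data: one sample per minute for three hours, with one spike.
timestamps = [60 * i for i in range(180)]
values = [20.0] * 180
values[75] = 95.0  # 75 minutes in, so the spike falls inside hour 1

summary = summarize_segments(timestamps, values)

# High-level view first: which hours deserve a closer look?
suspect_hours = [hour for hour, stats in summary.items() if stats["max"] > 50.0]
print(suspect_hours)
```

Only the hours flagged by the summary need to be opened in full, which keeps the month-long overview cheap to produce.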

Data With Context

Engineers often have to go to great lengths to acquire and save measurement data. Unfortunately, even after all of this effort, raw measurement data still winds up as just a series of numbers in a file. What happens to the context surrounding the measurement that can provide valuable additional insight?

Storing raw data alongside its original context makes that data easier to accumulate, locate, and later manipulate and understand. Consider a series of seemingly random digits: 5125550100. At first glance, it is impossible to make sense of this raw information. However, when given context, as in (512) 555-0100, the data is much easier to recognize and interpret as a phone number.

Descriptive information about measurement data context provides the same benefits and can detail anything from sensor type, manufacturer, or calibration date for a given measurement channel to revision, designer, or model number for an overall component under test. In fact, the more context that is stored with raw data, the more effectively that data can be traced throughout the design lifecycle, searched for or located, and correlated with other measurements at a future date by dedicated data postprocessing software.
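One way to picture context stored with raw data is a structure that carries descriptive properties at both the component level and the channel level. The Python sketch below is an illustration only: the class names, property keys, and every value in it are hypothetical and do not represent any specific file format.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Channel:
    name: str
    samples: list
    properties: dict = field(default_factory=dict)  # per-channel context


@dataclass
class MeasurementFile:
    properties: dict = field(default_factory=dict)  # context for the whole test
    channels: list = field(default_factory=list)


# All names and values below are made up for illustration.
run = MeasurementFile(
    properties={"model_number": "X-100", "revision": "B", "designer": "J. Doe"},
    channels=[
        Channel(
            name="mic_front",
            samples=[0.01, 0.03, -0.02],
            properties={
                "sensor_type": "IEPE microphone",
                "manufacturer": "ExampleCo",
                "calibration_date": "2012-03-01",
                "unit": "Pa",
            },
        )
    ],
)

# Context and raw data travel together when the structure is serialized.
serialized = json.dumps(asdict(run), indent=2)
print(serialized)
```

JSON is used here only to keep the sketch readable; a production format would store the samples in binary while keeping the properties searchable.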

One example of a flexible data storage format designed to store time-based measurement data is the industry standard Technical Data Management Streaming (TDMS) file format. The TDMS format is binary (low disk footprint, fast saving and loading) but portable and can be opened in software such as Microsoft Excel or NI DIAdem. Even more important, TDMS has inherent storage constructs for saving unlimited descriptive information together with measurement data, and these properties can be searched using data-mining techniques to quickly locate pertinent data sets or examine data in different ways.

Data Mining

Once data has been stored on disk together with descriptive contextual information, it becomes significantly easier to locate. Instead of relying on complex file-and-folder naming conventions, engineers can use metadata to quickly locate all data sets saved during a particular time frame, all data sets for devices tested by a specific operator, or even all measurements that exceeded a given threshold. The key to data mining is simple: the better data is documented, the more flexibility engineers have in leveraging that metadata for efficient mining of data sets.
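The three kinds of query mentioned above (by time frame, by operator, by threshold) can be sketched against a small in-memory index of metadata records. Everything here is hypothetical: the field names, the file names, and the `find` helper are illustrative and not part of any product's API.

```python
from datetime import date

# Hypothetical index: one metadata record per stored data set.
index = [
    {"file": "run_001.bin", "operator": "alice", "recorded_on": date(2012, 5, 1), "max_value": 4.2},
    {"file": "run_002.bin", "operator": "bob", "recorded_on": date(2012, 5, 14), "max_value": 6.8},
    {"file": "run_003.bin", "operator": "alice", "recorded_on": date(2012, 6, 2), "max_value": 7.1},
]


def find(records, **conditions):
    """Return the records for which every condition holds.

    Each keyword names a metadata field; its value is a predicate on that field.
    """
    return [
        r for r in records
        if all(name in r and test(r[name]) for name, test in conditions.items())
    ]


def files(records):
    return [r["file"] for r in records]


in_may = files(find(index, recorded_on=lambda d: date(2012, 5, 1) <= d <= date(2012, 5, 31)))
by_alice = files(find(index, operator=lambda v: v == "alice"))
over_limit = files(find(index, max_value=lambda v: v > 5.0))

# Conditions combine, so richer metadata allows narrower searches.
alice_over_limit = files(find(index, operator=lambda v: v == "alice", max_value=lambda v: v > 5.0))
```

No file or folder naming convention is involved; the search depends only on how well each data set was documented when it was saved.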

Initially, custom databases seem like an attractive data storage and management option because they are searchable by nature. Scientists and engineers can query data and isolate only the data sets that match specified criteria. However, databases are extremely expensive and complex and can take months or years of planning and programming to implement correctly. Traditional databases also require IT interaction for installation and maintenance and need modification to the database structure when application specs change.

Figure 1. NI DIAdem With DataFinder Technology

Since most engineers store data in files, file indexing solutions such as the NI DataFinder technology from National Instruments unite the convenience and flexibility of file-based data storage with the searchability of a database. If data is already stored in files with descriptive context, these solutions can extract this metadata from any file format and build a self-scaling, self-adapting, and self-configuring database that is abstracted from the user and the IT department.

As shown in Figure 1, this information then can be queried to isolate only pertinent data from within larger data sets. This technique applies not only to metadata at the file level, but also at the individual measurement channel level. Engineers can use search conditions to home in on the individual channels that match their criteria and load only those relevant measurement channels into postprocessing software instead of being burdened by the rest of the data set.
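The channel-level case can be sketched the same way: search the metadata first, then read samples only for the channels that match. This Python sketch is not how DataFinder works internally; the index contents are made up, and `load_channel` is a stand-in for a real file reader.

```python
# Hypothetical channel-level index: metadata only, no samples loaded yet.
channel_index = [
    {"file": "test_a.bin", "channel": "temp_inlet", "unit": "degC", "max_value": 81.0},
    {"file": "test_a.bin", "channel": "pressure", "unit": "bar", "max_value": 2.1},
    {"file": "test_b.bin", "channel": "temp_inlet", "unit": "degC", "max_value": 104.5},
]

loaded = []  # records which channels were actually read


def load_channel(file, channel):
    """Stand-in for a real reader, which would fetch the samples from disk."""
    loaded.append((file, channel))
    return []  # placeholder for the channel's samples


def load_matching(index, predicate):
    """Load only the channels whose metadata satisfies the predicate."""
    return {
        (r["file"], r["channel"]): load_channel(r["file"], r["channel"])
        for r in index
        if predicate(r)
    }


# Only temperature channels that exceeded 100 degC are read.
hot = load_matching(
    channel_index,
    lambda r: r["unit"] == "degC" and r["max_value"] > 100.0,
)
```

The other two channels are never touched, which is the point: the cost of loading scales with the matches, not with the size of the archive.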

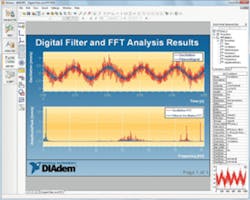

Figure 2. NI DIAdem With Digital Filter Technology

Processing Large Data Sets

Choosing the right postprocessing software can drastically simplify interaction with large data sets. One of the biggest mistakes engineers make is assuming that their current software tools, such as Microsoft Excel, will be sufficient when dealing with large volumes of data. Time-based measurement data poses unique challenges that traditional tools weren’t designed to overcome, so engineers should seek out software specifically designed to process it. Just because software can load a large data set doesn’t mean that it is optimized to process that data once it is loaded.

Selecting a software tool designed for the challenge will yield additional processing efficiencies. For example, several engineering postprocessing solutions feature data-reduction mechanisms, which allow engineers to reduce data sets to make postprocessing more effective. These tools also should be able to easily concatenate data sets when loading to stitch together files that previously had been segmented into smaller time divisions for storage efficiency.
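Both mechanisms can be illustrated with a short Python sketch: stitching segmented data back together, then reducing it. The reduction shown, keeping the minimum and maximum of each block, is one common approach chosen here as an assumption; the text does not say which reduction methods any given tool uses, and the function names and data are hypothetical.

```python
from itertools import chain


def concatenate(segments):
    """Stitch time-ordered segments back into one continuous sequence."""
    return list(chain.from_iterable(segments))


def reduce_min_max(values, block_size):
    """Keep each block's minimum and maximum, in the order they occur.

    Unlike plain averaging, this keeps peaks visible after the reduction.
    """
    reduced = []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        i_lo = block.index(min(block))
        i_hi = block.index(max(block))
        for i in sorted({i_lo, i_hi}):  # a set, so a flat block yields one point
            reduced.append(block[i])
    return reduced


# Hypothetical data: three segments that were stored as separate files.
segments = [[0, 1, 2, 3], [4, 9, 4, 3], [2, 1, 0, -5]]

signal = concatenate(segments)
overview = reduce_min_max(signal, block_size=4)
print(len(signal), "->", len(overview), "points")
```

The reduced series is suited to plotting and quick inspection; the full-resolution segments remain on disk for any interval that needs closer analysis.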

As a further return on your data investment, today’s dedicated engineering data-processing software should integrate directly with your data management and storage solution. For example, using a template-based reporting technique like that found in DIAdem and demonstrated in Figure 2, you can use variable content placeholders that populate reports automatically using descriptive contextual information from your data file (for instance, to populate graphs, labels, and descriptions automatically).
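The placeholder idea can be sketched with Python's standard `string.Template`. This is a generic illustration, not DIAdem's reporting mechanism; the template text, metadata keys, and values are all hypothetical.

```python
from string import Template

# Placeholders ($name) are filled from metadata stored with the data file.
report_template = Template(
    "Test report: $model_number (rev $revision)\n"
    "Operator: $operator\n"
    "Channel $channel peaked at $max_value $unit"
)

# Hypothetical metadata, as it might be read from a data file's properties.
metadata = {
    "model_number": "X-100",
    "revision": "B",
    "operator": "alice",
    "channel": "temp_inlet",
    "max_value": 104.5,
    "unit": "degC",
}

# substitute() raises KeyError if a placeholder has no matching metadata,
# so an incompletely documented data set is caught rather than misreported.
report = report_template.substitute(metadata)
print(report)
```

The same template can be reused for every data set, so the report layout is defined once and the content follows from the stored context.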

In a world overwhelmed with data, investment in the right data management processes makes all the difference.

About the Author

Derrick Snyder is a product manager for NI DIAdem Software at National Instruments. He holds a bachelor’s degree in computer engineering from Vanderbilt University.