Putting an FPGA on a PCI Express card isn’t new, but when the likes of Xilinx and Intel do it, developers take notice. These heavyweights don’t get involved in small projects. What’s significant is that FPGA boards will likely become as common as GPGPUs in the cloud.

All of this activity is being driven by machine-learning (ML) applications and the desire to run deep neural networks (DNNs) faster while using less power. These FPGA boards compete with hardware that specifically targets ML applications. However, such dedicated hardware tends to be less flexible when it comes to other applications or to new ML techniques that are implemented differently.

Alveo (Fig. 1) is Xilinx’s latest platform for enterprise ML applications. The board comes in a number of flavors, including the high-end U250, which has a 16-nm Xilinx UltraScale+ FPGA with 1,341K lookup tables (LUTs) and 54 MB of internal SRAM. The lower-end U200 has 892K LUTs and 35 MB of SRAM. Both versions have 64 GB of off-chip DDR4 memory. SRAM bandwidth is 38 TB/s, while DRAM bandwidth stands at 77 GB/s. The boards have an x16 PCIe Gen3 interface plus a pair of QSFP28 sockets for networking applications.

1. Alveo U250 can process 4,100 images/s using the GoogLeNet v1 benchmark.
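The gap between on-chip and off-chip bandwidth is why FPGA ML designs try to keep weights and activations in SRAM. The back-of-the-envelope sketch below uses the peak figures quoted above, assumes decimal units (1 TB/s = 10^12 bytes/s), and ignores latency, contention, and access patterns; the 54-MB working set is simply an example sized to the U250’s SRAM.

```python
# Peak bandwidth figures quoted for the Alveo boards (decimal units assumed).
SRAM_BW = 38e12  # bytes/s, internal SRAM
DRAM_BW = 77e9   # bytes/s, off-chip DDR4


def transfer_time_us(num_bytes: float, bandwidth: float) -> float:
    """Idealized time, in microseconds, to stream num_bytes at a peak bandwidth."""
    return num_bytes / bandwidth * 1e6


# Example working set: 54 MB, the U250's on-chip SRAM capacity.
working_set = 54e6
print(f"SRAM pass: {transfer_time_us(working_set, SRAM_BW):.2f} us")
print(f"DRAM pass: {transfer_time_us(working_set, DRAM_BW):.1f} us")
print(f"Bandwidth ratio: {SRAM_BW / DRAM_BW:.0f}x")
```

At these idealized rates, one pass over the data is roughly 500 times faster from SRAM than from DDR4, so repeatedly fetching weights from DRAM can quickly become the bottleneck.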

The Alveo U250 can deliver 33.3 INT8 teraoperations/s (TOPS), and the U200 delivers 18.6 INT8 TOPS. The boards, which draw up to 225 W, come in active- and passive-cooled versions. The latter is designed for rack-mount systems that have flow-through air cooling, while actively cooled boards will typically be used in workstations. Pricing for the U200 starts at $8,995.
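Dividing peak throughput by board power gives a crude efficiency figure for comparing accelerators. This sketch assumes both boards are charged the full 225 W quoted above and uses peak INT8 TOPS, so it is only a rough figure of merit.

```python
# Peak INT8 throughput quoted for each board, in tera-operations per second.
PEAK_INT8_TOPS = {"U250": 33.3, "U200": 18.6}
BOARD_POWER_W = 225.0  # quoted board power, applied to both boards here


def tops_per_watt(tops: float, watts: float) -> float:
    """Peak throughput divided by power: a crude efficiency figure of merit."""
    return tops / watts


for board, tops in PEAK_INT8_TOPS.items():
    print(f"{board}: {tops_per_watt(tops, BOARD_POWER_W):.3f} peak INT8 TOPS/W")
```

If a board draws less than 225 W the ratio would be higher, and if a workload cannot sustain peak TOPS it would be lower.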

While ML is a prime application, the FPGA boards target a range of applications, such as database search and analytics, genomics, storage compression, financial computing, and video processing and transcoding. FPGA acceleration can deliver substantial performance gains over CPUs and, for some workloads, even GPUs. In addition to flexibility, FPGAs bring low latency and tight integration to the table.

Intel’s open-source Open Programmable Acceleration Engine (OPAE) runs on top of the FPGA Interface Manager (FIM) for use with its Programmable Acceleration Cards (PACs). Xilinx has a similar stack and interface between the operating system and the Alveo boards, but has yet to give it a name that clearly identifies the layers of the framework. The company provides the framework to developers for free, and some are customizing the upper layers for their environments.

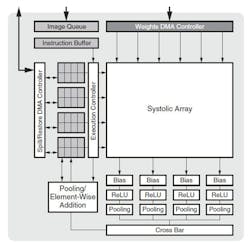

Many features within the framework were already available from Xilinx, including its xDNN hardware architecture (Fig. 2). xDNN targets ML applications and supports major ML frameworks such as TensorFlow and Caffe. The xDNN engine, which can be optimized for throughput or latency, supports command-level parallel execution. It also has built-in, hardware-assisted image tiling to handle applications with large images or networks with large activation sizes.

2. Xilinx’s xDNN hardware architecture delivers a custom machine-learning system in an FPGA.
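Image tiling lets an engine process an input larger than its on-chip buffers one piece at a time. The pure-Python sketch below illustrates the general halo-tiling idea for a single convolution: each output tile is computed from an input window extended by k - 1 rows and columns, so the stitched result matches the untiled one. The function names and tile size are hypothetical, and this is not a description of how xDNN implements tiling in hardware.

```python
from typing import List

Image = List[List[int]]


def conv2d_valid(img: Image, kernel: Image) -> Image:
    """'Valid' 2-D cross-correlation (no padding), as a CNN conv layer computes it."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(img) - kh + 1, len(img[0]) - kw + 1
    return [[sum(img[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def tiled_conv2d(img: Image, kernel: Image, tile: int) -> Image:
    """Same result as conv2d_valid, computed one tile x tile output block at a time."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(img) - kh + 1, len(img[0]) - kw + 1
    out = [[0] * out_w for _ in range(out_h)]
    for r0 in range(0, out_h, tile):
        for c0 in range(0, out_w, tile):
            r1, c1 = min(r0 + tile, out_h), min(c0 + tile, out_w)
            # Input window for this output tile, including a (k - 1)-wide halo.
            patch = [row[c0:c1 + kw - 1] for row in img[r0:r1 + kh - 1]]
            for i, row in enumerate(conv2d_valid(patch, kernel)):
                out[r0 + i][c0:c1] = row
    return out


# Usage: a 9 x 10 test image and a 3 x 3 Sobel-style kernel.
img = [[(3 * r + c) % 7 for c in range(10)] for r in range(9)]
kernel = [[1, 0, -1], [2, 0, -2], [1, 0, -1]]
assert tiled_conv2d(img, kernel, tile=4) == conv2d_valid(img, kernel)
```

The halo means neighboring input tiles overlap slightly, trading a little redundant data movement for tiles that fit in on-chip memory.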

The tools provided with the xDNN framework can split parts of the processing across FPGAs and CPUs. xDNN’s systolic array is built using Xilinx’s SuperTile DSP macros. The xfDNN compiler and framework are part of the Alveo software support.
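A systolic array is a grid of processing elements (PEs) that pass operands to their neighbors every clock cycle, so each value fetched from memory is reused many times. The sketch below simulates a generic output-stationary array computing an INT8 matrix product cycle by cycle: rows of A enter from the left and columns of B from the top, each skewed by one cycle per row or column, and each PE accumulates one output element. It illustrates the technique under those assumptions and is not a model of how xDNN’s SuperTile-based array is actually organized.

```python
from typing import List

Matrix = List[List[int]]


def systolic_matmul(A: Matrix, B: Matrix) -> Matrix:
    """Cycle-level simulation of an n x m output-stationary systolic array."""
    n, k, m = len(A), len(B), len(B[0])
    assert all(len(row) == k for row in A), "inner dimensions must match"
    # Operands are INT8; Python ints stand in for wide (e.g. 32-bit) accumulators.
    assert all(-128 <= v <= 127 for M in (A, B) for row in M for v in row)
    acc = [[0] * m for _ in range(n)]    # one accumulator per PE
    a_reg = [[0] * m for _ in range(n)]  # A operand held by each PE (flows right)
    b_reg = [[0] * m for _ in range(n)]  # B operand held by each PE (flows down)
    for t in range(n + m + k - 2):       # cycles until the last PE finishes
        new_a = [[0] * m for _ in range(n)]
        new_b = [[0] * m for _ in range(n)]
        for i in range(n):
            for j in range(m):
                # Edge PEs read skewed inputs; inner PEs take last cycle's neighbor value.
                if j == 0:
                    a_in = A[i][t - i] if 0 <= t - i < k else 0
                else:
                    a_in = a_reg[i][j - 1]
                if i == 0:
                    b_in = B[t - j][j] if 0 <= t - j < k else 0
                else:
                    b_in = b_reg[i - 1][j]
                acc[i][j] += a_in * b_in  # multiply-accumulate, the DSP's job
                new_a[i][j], new_b[i][j] = a_in, b_in
        a_reg, b_reg = new_a, new_b  # all PEs latch new values at the clock edge
    return acc


# Usage: compare against a straightforward matrix multiply.
A = [[1, -2, 3], [4, 5, -6]]
B = [[7, 8], [-9, 10], [11, -12]]
expected = [[sum(A[i][s] * B[s][j] for s in range(3)) for j in range(2)] for i in range(2)]
assert systolic_matmul(A, B) == expected
```

Because PE(i, j) sees A[i][s] and B[s][j] in the same cycle (t = i + j + s), every output is a plain dot product, but each operand is loaded from memory only once per row or column of the array.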

Alveo will be available in the cloud on platforms such as AWS and Alibaba. For on-premises deployment, Xilinx is working with the likes of IBM, Dell EMC, Fujitsu, and Hewlett Packard Enterprise.

The company also worked with AMD to pack eight Alveo boards into a system with AMD’s latest EPYC server CPUs (Fig. 3). The system is built on a dual-socket Supermicro H11DSi-NT motherboard with 32-core EPYC 7551 CPUs. It delivered 30,000 images/s on the GoogLeNet convolutional-neural-network benchmark.

3. AMD and Xilinx combine resources to pack eight Alveo boards into an EPYC server.
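Comparing the eight-board result with the single-board figure in Fig. 1 gives a rough sense of how well throughput scales. This sketch assumes the eight boards are U250s running a comparable GoogLeNet v1 configuration (batch size, precision), which the article does not state.

```python
SINGLE_BOARD_IPS = 4100  # images/s, one Alveo U250 on GoogLeNet v1 (Fig. 1)
NUM_BOARDS = 8
SYSTEM_IPS = 30000       # images/s, eight-board EPYC system


def scaling_efficiency(system_ips: float, per_board_ips: float, boards: int) -> float:
    """Measured throughput as a fraction of perfect linear scaling."""
    return system_ips / (per_board_ips * boards)


ideal = SINGLE_BOARD_IPS * NUM_BOARDS
eff = scaling_efficiency(SYSTEM_IPS, SINGLE_BOARD_IPS, NUM_BOARDS)
print(f"Ideal: {ideal} images/s, measured: {SYSTEM_IPS} images/s, efficiency: {eff:.1%}")
```

Under those assumptions, the system reaches a little over 90% of perfect linear scaling. The shortfall could come from host-side work such as preprocessing or PCIe transfers, though no breakdown is given.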

Alveo doesn’t yet support the emerging CCIX (Cache Coherent Interconnect for Accelerators) interface, although that’s in the works. Taking advantage of it would also require matching CCIX support from the other processors in the system. CCIX is promoted by the CCIX Consortium.

The key difference between earlier PCIe FPGA boards and Alveo and Intel’s PACs is software, specifically operating-system integration. Future upgrades to the Alveo line will likely include the Versal ACAP (adaptive compute acceleration platform) technology announced at the same time as Alveo. Versal isn’t available yet, but it would be a good match for the applications Alveo currently targets.

Many developers will use these boards with third-party IP that’s downloaded by the operating system, which also handles data flow to and from the FPGA. This more or less mirrors how GPGPUs are used in the cloud and in servers today. Custom FPGA application development is still possible, but it requires FPGA design expertise that deployment does not. Tools that convert applications written in C/C++ and OpenCL into FPGA designs are also available.