Machine Learning: Building the Bigger Picture by Leveraging Data

What you’ll learn:

- Different types of machine learning.

- Learn about types of supervised and unsupervised machine-learning approaches.

Machine learning (ML) is a method of data analysis that automates analytical model building. It’s a branch of artificial intelligence (AI) based on the idea that systems can learn from data, identify patterns, and make decisions with minimal human intervention. ML algorithms build a model based on sample data, or training data, to make predictions or decisions without being programmed to accomplish any given task.

Such algorithms are used in myriad applications, including medicine, autonomous vehicles, speech recognition, and machine vision, where it’s difficult or unfeasible to utilize traditional algorithms to perform the required tasks. It’s also behind chatbots and predictive text, language-translation apps, and even the shows and movies recommended by Netflix.

When companies employ artificial-intelligence programs, chances are they’re using machine learning. So much so that the terms are often used interchangeably and sometimes ambiguously as an all-encompassing form of AI. This sub-field aims to create computer models that exhibit intelligent behaviors similar to humans, meaning they can recognize a visual scene, understand a text written in natural language, or perform an action in the real world.

Forms of Machine Learning

ML is related to computational statistics, which focuses on making predictions using computers, but not all ML is statistical learning. Some implementations of ML use data and neural networks in a way that mimics the working of a biological brain.

The study of mathematical optimization provides methods, theory, and application domains to ML. Data mining is another related field of study, focusing on exploratory data analysis through unsupervised learning.

To that end, learning algorithms function on the basis that strategies, algorithms, and interpretations worked well in the past, so they’re likely to continue working well in the future. These inferences can be obvious, such as “since the sky is blue today, it will most likely be blue tomorrow.”

They also can be nuanced, meaning that, although the platform may be the same, there can be subtle differences within the subset. For example, if X number of families have geographically separate species with different color variants, there’s a good chance that several Y variants exist.

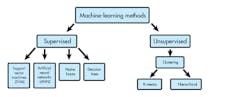

ML Methods

Machine learning utilizes a decision-making process that produces results based on the input data, which can be labeled or unlabeled. Most are equipped with an error function that evaluates the prediction of the model.

If there are known examples, an error function can make a comparison to assess the accuracy of the model. If the model can fit better to the data points in the training set, then weights are adjusted to reduce the differences between the known example and the model estimate. The algorithm will repeat the evaluation and optimization process, updating weights autonomously until a certain level of accuracy has been met.

The methods (see figure above) used to attain that accurate outcome fall into four major categories:

Supervised learning

Supervised learning is defined by its use of labeled datasets to train algorithms that classify data or accurately predict outcomes. The learning algorithm receives a set of inputs and the corresponding correct outputs, and the algorithm learns by comparing its actual output with correct outputs to find errors. It then modifies the model accordingly. A cross-validation process is then used to ensure that the model avoids overfitting or underfitting.

Supervised learning helps organizations solve various real-world problems at scale, such as classifying spam in a separate folder from your inbox. Some methods used in supervised learning include neural networks, naive bayes, linear regression, logistic regression, random forest, support vector machines (SVM), and more.

Unsupervised learning

Unsupervised learning is used against data with no historical labels, meaning the system isn’t told the correct answer and the algorithm must figure out what’s being shown. The goal is to explore the data and find some structure or pattern hidden within. This method works well on transactional data.

For example, it can identify segments of customers with similar attributes who can then be treated similarly in marketing campaigns. Or it can find the main attributes that separate customer segments from each other.

Popular techniques include self-organizing maps, nearest-neighbor mapping, k-means clustering, and singular value decomposition. These algorithms also are used to segment text topics, recommend items, and identify data outliers. On top of that, they’re used to reduce the number of features in a model through the process of dimensionality reduction, principal component analysis (PCA), and singular value decomposition (SVD). Other algorithms applied in unsupervised learning include neural networks, probabilistic clustering methods, and more.

Semi-supervised learning

This approach to ML offers a happy medium between the supervised and unsupervised methods. During training, it uses a smaller labeled dataset to guide classification and feature extraction from a larger, unlabeled dataset.

This type of learning can be used with methods such as classification, regression, and prediction, and can solve the problem of not having enough labeled data (or not being able to afford to label enough data) to train a supervised-learning algorithm. It’s also helpful when the cost associated with labeling is too high to allow for a fully labeled training process. Examples of semi-supervised learning include facial and object recognition.

Reinforcement learning

Reinforcement learning is often associated with robotics, autonomous vehicles, gaming, and navigation. This method enables the algorithm to discover, via trial and error, which actions produce the most significant rewards.

Three primary components are associated with this type of learning: the agent (the learner or decision-maker), the environment (everything the agent interacts with), and actions (what the agent can do). The objective is for the agent to choose actions that maximize the expected reward over a given amount of time. The agent can reach the goal quickly by following a good policy. Thus, the goal in reinforcement learning is to learn the best policy.

Dimensionality reduction

Dimensionality reduction is the task of reducing the number of features in a dataset. Often, there are too many variables to process in ML tasks, such as regression or classification. These variables also are called features—the higher the number of features, the more difficult it is to model them. Moreover, some of these features can be redundant, adding unnecessary noise to the dataset.

Dimensionality reduction lowers the number of random variables under consideration by garnering a set of principal variables, which can then be divided into feature selection and feature extraction.

Applications

Many real-world applications take advantage of machine learning, including artificial neural networks (ANN), which are modeled after their biological counterparts. These consist of thousands or millions of processing nodes that are densely interconnected to handle many tasks, including speech recognition/translation, gaming, social networking, medical diagnoses, and more.

With Facebook, for example, ML personalizes how a member’s feed is delivered. If the member regularly stops to read posts from certain groups, it will prioritize those activities earlier in the feed.

Moreover, ML is used in speech applications, including speech-to-text, which uses natural language processing (NLP) to convert human language into text. It also can be found with digital assistants such as Siri and Alexa, which use voice recognition for application interaction. Automated customer service, recommendation engines, computer vision, climate science, and even agriculture are among the many other applications.