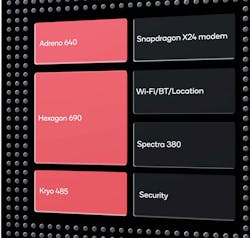

System-on-chip (SoC) solutions continue to get more complex as more specialized hardware is added to optimize the SoC for new applications. Qualcomm’s latest Snapdragon 855 (Fig. 1) highlights this change. The 855 combines a number of blocks: the Snapdragon X24 cellular modem; Wi-Fi, Bluetooth, and GPS support; the Adreno 640 GPU; the Hexagon 690 DSP; the Kryo 485 processor cluster; and the Spectra 380 image signal processor (ISP).

1. Qualcomm’s Snapdragon 855 SoC includes the Adreno 640 GPU, the Hexagon 690 DSP, the octa-core Kryo 485 processor cluster, and the Spectra 380 image signal processor, along with wireless communication and security support.

The 64-bit Kryo 485 includes one high-performance “Gold Prime” 2.84-GHz core with a 512-kB L2 cache, three 2.42-GHz cores with 256-kB L2 caches, and four 1.8-GHz cores with 128-kB L2 caches designed for low-power operation. The Gold Prime core targets single-threaded, user-interface applications like web browsers.

The Hexagon 690 DSP is where the most change has occurred. It includes a Tensor accelerator and new vector extensions with support for INT8 and INT16 datatypes. The enhancements highlight the machine-learning (ML) support of the 855. The Kryo 485 also includes new dot-product instructions for INT8 and single-precision floating-point data that can be useful in ML applications. Many chip designers are enhancing the DSP with ML support since DSP architectures already lend themselves to ML applications.
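To see why INT8 dot products matter for ML, consider a minimal Python sketch of the arithmetic such instructions speed up. It is not Qualcomm's instruction set or any vendor API: the symmetric quantization scheme, the scale factors, and the function names `quantize_int8` and `int8_dot` are assumptions chosen only for illustration.

```python
def quantize_int8(values, scale):
    """Map floats to signed 8-bit integers with a symmetric scale (assumed scheme)."""
    return [max(-128, min(127, round(v / scale))) for v in values]


def int8_dot(a, b):
    """Dot product of two INT8 vectors, accumulated in a wider integer."""
    acc = 0
    for x, y in zip(a, b):
        acc += x * y  # each product needs up to 16 bits, so the sum needs more than 8
    return acc


# Hypothetical weights and activations, with hypothetical scales.
weights = [0.5, -0.25, 0.75, 1.0]
inputs = [1.0, 2.0, -1.0, 0.5]
w_scale = 1.0 / 127  # weights assumed to lie in [-1, 1]
x_scale = 2.0 / 127  # activations assumed to lie in [-2, 2]

qw = quantize_int8(weights, w_scale)
qx = quantize_int8(inputs, x_scale)

# Rescale the integer result back to real units.
approx = int8_dot(qw, qx) * w_scale * x_scale
exact = sum(w * x for w, x in zip(weights, inputs))
print(f"float result: {exact:.4f}, INT8 approximation: {approx:.4f}")
```

The integer result tracks the floating-point one closely while each operand takes a quarter of the storage of a 32-bit float, which is the tradeoff that makes narrow datatypes attractive for inference.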

The 855 targets premium smartphones and other high-end applications while its sibling, the Snapdragon 8cx, targets laptops with a higher-performance GPU. ML is playing a part in applications like image processing and computer vision for facial-recognition support. The ISP is designed to provide data to ML image applications.

Seth DeLand, Product Marketing Manager of Data Analytics at MathWorks, notes that “Companies will increasingly use machine learning algorithms to enable products and services to ‘learn’ from data and improve performance. Machine learning is already present in some areas: image processing and computer vision for facial recognition, price and load forecasting for energy production, predicting failures in industrial equipment, and more. In the coming year, it can be expected that machine learning will be increasingly present as more companies are inspired to integrate machine-learning algorithms into their products and services by using scalable software tools, including MATLAB.”

More Machine Learning

ML support is showing up at all levels. MediaTek’s Helio P70 is built around an octa-core big.LITTLE configuration with four 2.1-GHz Arm Cortex-A73s and four power-efficient, 2-GHz Cortex-A53s. There’s also a 900-MHz Arm Mali-G72 GPU and a dual-core AI processing unit (APU). The APU is designed to handle chores like human pose recognition in real time as well as augmentation of still images and video. It can deliver 280 GMAC/s.
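A throughput figure like this one translates into a rough ceiling on inference rate. The sketch below treats the 280-GMAC figure as a peak rate per second, which is an assumption, and the model size is hypothetical rather than taken from any real network. Real workloads rarely reach peak utilization, so the results are best-case bounds.

```python
PEAK_MACS_PER_SECOND = 280e9  # APU figure from the article, read as a per-second peak


def min_inference_time_ms(macs_per_inference, peak=PEAK_MACS_PER_SECOND):
    """Best-case time for one inference, in milliseconds, at full utilization."""
    return macs_per_inference / peak * 1e3


def max_inferences_per_second(macs_per_inference, peak=PEAK_MACS_PER_SECOND):
    """Best-case inference rate at full utilization."""
    return peak / macs_per_inference


# Hypothetical model needing 560 million multiply-accumulates per inference.
hypothetical_model_macs = 560e6

print(f"lower bound on latency: {min_inference_time_ms(hypothetical_model_macs):.2f} ms")
print(f"upper bound on rate: {max_inferences_per_second(hypothetical_model_macs):.0f} inferences/s")
```

Memory bandwidth, data movement, and layer shapes usually keep real throughput well below such a bound, so it is more useful for ruling designs out than for predicting frame rates.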

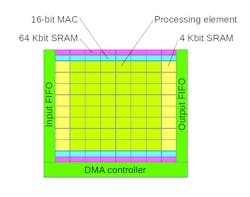

Moving further down the scale is Renesas’ RZ/A2M with DRP. It’s designed to support human-machine interfaces (HMIs), including systems with cameras. It has an Arm Cortex-A9 along with Renesas’ Dynamically Reconfigurable Processor (DRP) that provides ML support. The DRP is programmable in C and has optimized DMA support to minimize data movement (Fig. 2). The DRP is designed to be reconfigured every clock cycle, allowing for implementation of innovative algorithms.

2. Renesas’ Dynamically Reconfigurable Processor includes an array of processing elements, MACs, and memory blocks. Streams of data are moved to and from main memory by the DMA controller.

ML hardware acceleration is becoming the norm. However, it’s possible to run more functionally constrained ML applications on microcontrollers like the Cortex-M7 that have no ML hardware acceleration. It helps to have DSP-style support.
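DSP-style support typically means fast fixed-point multiply-accumulate (MAC) operations with saturation. The following Python sketch models one neuron computed in Q15 fixed point, the kind of arithmetic a microcontroller without ML acceleration could use. The Q15 format, the ReLU activation, and the names `to_q15`, `saturate_q15`, and `q15_neuron` are illustrative assumptions, not any vendor's library.

```python
Q15_SHIFT = 15
Q15_MIN, Q15_MAX = -32768, 32767


def saturate_q15(value):
    """Clamp an integer to the signed 16-bit range instead of letting it wrap."""
    return max(Q15_MIN, min(Q15_MAX, value))


def to_q15(x):
    """Convert a float in roughly [-1, 1) to Q15 (1 sign bit, 15 fraction bits)."""
    return saturate_q15(round(x * (1 << Q15_SHIFT)))


def q15_neuron(weights, inputs, bias):
    """One neuron: Q15 multiply-accumulate, then ReLU, returning a Q15 value."""
    acc = bias << Q15_SHIFT  # align the Q15 bias with the Q30 products
    for w, x in zip(weights, inputs):
        acc += w * x  # Q15 * Q15 yields a Q30 product
    out = saturate_q15(acc >> Q15_SHIFT)  # scale back to Q15 and saturate
    return max(0, out)  # ReLU


# Hypothetical weights, inputs, and bias.
w = [to_q15(0.5), to_q15(-0.25)]
x = [to_q15(0.5), to_q15(0.5)]
b = to_q15(0.125)

result = q15_neuron(w, x, b)
print(result, result / (1 << Q15_SHIFT))
```

On a real part, the loop body maps onto the core's MAC instructions, and keeping the accumulator wider than the operands is what prevents intermediate overflow.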

Many platforms, like Renesas’ RZ family, include incarnations without ML acceleration. More often than not, though, ML will be an option available to developers. Much of this support targets image and video processing, but the hardware tends to be applicable to almost any ML inference application.