Evolving Data Centers Face Cabling Installation Challenges

Greater investment in data-center cabling installations, the use of artificial intelligence (AI) and convergence technologies, and edge computing are major trends in the ongoing transformation of the data center. Together, they are fueling the growth of advanced hyper-scale cloud-computing environments.

According to optical researchers, the global hyper-scale data-center market is expected to grow from $25.08 billion in 2017 to $80.65 billion by 2022. Established businesses competing with web-scale businesses can’t afford to be constrained by legacy technologies. To remain competitive, they must build new platforms and invest in next-generation Internet Protocol (IP) infrastructure.
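The forecast doesn’t state the annual growth rate those two figures imply. As a quick check, the compound annual growth rate (CAGR) can be derived from them. The short Python sketch below does this, assuming the forecast spans five full years (2017 to 2022); the `cagr` helper is illustrative, not part of the cited research.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Market-size endpoints quoted above, in billions of US dollars.
# Assumption: 2017 -> 2022 is treated as five compounding periods.
rate = cagr(25.08, 80.65, 2022 - 2017)
print(f"Implied CAGR: {rate:.1%}")
```

Under that assumption, the forecast works out to roughly 26% growth per year.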

Major industry giants like Google, Microsoft, Facebook, Apple, Alibaba, and Tencent are getting that message and preparing for this trend. To get a sense of this shift, one need only look at the rapid growth in shipments of 25- to 100-Gigabit Ethernet (GbE) switch ports.

At this year’s Optical Networking and Communication Conference and Exhibition (OFC), experimental links running 100G multimode optical transceivers over dispersion-compensated multimode fiber were shown. The work demonstrates that OM4 multimode fiber with dispersion compensation can achieve reaches similar to or longer than those of OM5 multimode fiber.

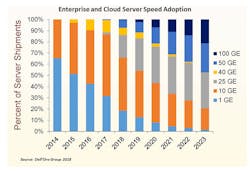

A review and forecast of server shipments over the last several years by the Dell’Oro Group shows that 1-GbE connections are gradually being replaced by 10-GbE connections in small and medium enterprises (SMEs), while the high-end enterprise and cloud markets are adopting 25-GbE connections. Even faster Ethernet connections, such as 50 and 100 GbE, are expected to be deployed by the top four cloud service providers (Google, Amazon, Microsoft, and Facebook) over the next two to three years as these companies upgrade their networks.

Edge Computing Gains Traction

The edge-computing paradigm brings computation and data storage closer to the location where they’re needed. Computation is largely or completely performed on distributed device nodes. The aim is to place applications and general functionality as close as possible to the source of the action, where the distributed system interacts with the physical world. Although edge computing doesn’t need to contact a centralized cloud, it may interact with one.

Edge computing promotes efficient bandwidth utilization by minimizing the number of connections and the physical reach (distance), both of which introduce latency into the infrastructure. Together with other data-growth areas, edge-computing applications are expected to generate petabytes of data daily by 2020. Systems that intelligently process this data to create a business advantage will be essential to future prosperity.
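To see why physical reach matters, consider propagation delay alone. Light in silica fiber travels at roughly c divided by the fiber’s group index, which works out to about 5 µs per kilometer. The Python sketch below assumes a group index of 1.468 (a typical value for standard single-mode fiber) and compares two hypothetical distances: a nearby edge site and a distant cloud region. It deliberately ignores switching, queuing, and processing delays, which add to the total.

```python
C_VACUUM_KM_PER_S = 299_792.458  # speed of light in vacuum, km/s
GROUP_INDEX = 1.468  # assumed typical group index for single-mode fiber


def round_trip_ms(distance_km: float, group_index: float = GROUP_INDEX) -> float:
    """Round-trip propagation delay over fiber, in milliseconds.

    Only the time of flight is counted; switching, queuing, and
    processing delays are ignored.
    """
    one_way_s = distance_km * group_index / C_VACUUM_KM_PER_S
    return 2 * one_way_s * 1e3


# Hypothetical distances: a local edge site vs. a distant centralized cloud region.
for label, km in [("edge site", 10), ("regional cloud", 1_000)]:
    print(f"{label:>14}: {round_trip_ms(km):.3f} ms round trip")
```

Every 1,000 km of fiber adds close to 10 ms of round-trip delay before any equipment touches the data, which is the delay that processing at the edge avoids.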

The evolution of more sophisticated and advanced data centers is presenting data-center managers with greater challenges in selecting and maintaining the correct cabling setup. That’s the opinion of Ron Gruen, senior enterprise specialist, and Russell Kirkland, senior systems engineer, at Corning Inc.

They note that as data-center technology changes, choosing the right cable and cabling system becomes tougher, and that fiber-optic cable is becoming the choice for interconnecting data centers. Molex and other cable and connector firms are working closely with optical-fiber manufacturers to support this shift.

Ingenious Testing

Molex was asked by a leading wireless telecommunications company to help develop a method for testing and managing high-density MPO optical cable assemblies. The resulting solution supports testing, cable identification, and management of high-density interconnects, and it meets the customer’s request to illuminate a specific cable along its entire run.

The LumaLink Optical Trace Cable Assembly combines optical cables with an electroluminescent wire that lights up the cable. One challenge with this approach was delivering power to the cable to illuminate it. Molex solved it with a magnetic power connection that illuminates the cable from origin to endpoint, so specific cables can be identified quickly and easily.

Another challenge was to keep the cable’s diameter unchanged, so that it fits into its existing location and technicians can trace it faster. The result simplifies cable identification and management, especially for high-density interconnects (Fig. 1).

1. Molex’s LumaLink optical trace cable assembly, which illuminates the cable, combines optical cables and an electroluminescent wire.

There’s a financial angle to this, and lost revenue is one large factor. Nearly all companies have websites, yet delays in responding to web users translate into an 11% loss of page views and 7% fewer conversions, adding urgency to the move to faster, emerging 25-GbE server connections (Fig. 2).

2. Global server systems are growing in speed with 100 GE on the horizon. (Source: Dell’Oro Group)

As can be seen, 1G connections are quickly becoming relics, and soon 10G will all but disappear. 25G transceivers currently have a foothold in the market, but they should be eclipsed by 50G over the next few years. In addition, many hyper-scale and cloud data centers are expected to be early adopters of 100G server speeds. These higher server speeds can be supported either by two-fiber transceivers running at the same data rate as the server or by 40G, 100G, 200G, and 400G parallel-optic transceivers at the switch, whose lanes can be broken out to individual servers.
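The breakout arithmetic can be sketched in a few lines of Python. This assumes each switch port uses four-lane parallel optics with one transmit and one receive fiber per lane, which is one common arrangement but not the only one (some parallel-optic ports use more lanes). Under that assumption, each switch-port speed splits into four server links at one-quarter of the port rate, always over eight fibers.

```python
LANES = 4            # assumption: four-lane parallel-optic switch port
FIBERS_PER_LANE = 2  # one transmit and one receive fiber per lane


def breakout(port_gbps: int, lanes: int = LANES) -> tuple[int, int]:
    """Return (per-server link speed in Gb/s, fibers used at the switch port)."""
    return port_gbps // lanes, lanes * FIBERS_PER_LANE


for port in (40, 100, 200, 400):
    server_speed, fibers = breakout(port)
    print(f"{port}G port -> {LANES} x {server_speed}G server links over {fibers} fibers")
```

The resulting server speeds (10G, 25G, 50G, and 100G) line up with the server-connection generations described above.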

Most major data-center owners and managers will agree that power, including the power needed to cool the data-center electronics, is the single largest expense in a hyper-scale data center. Using denser cabling links also helps drive down the total operating cost, which includes maintenance. Consolidating everything in a hyper-scale facility instead of the cloud goes a long way toward simplifying operations. But that doesn’t mean cloud computing is dead. It will have a role in converged and hyper-converged infrastructure as the data center of the future takes shape.
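One common way to separate the cost of running the electronics from the cost of cooling them is power usage effectiveness (PUE), the ratio of total facility energy to IT-equipment energy. The article doesn’t cite PUE figures, so the Python sketch below uses purely hypothetical inputs (a 10-MW IT load, a PUE of 1.2, and $0.07 per kWh) to show how the yearly bill splits. The overhead share is treated as mostly cooling, which is a simplification.

```python
HOURS_PER_YEAR = 8_760


def annual_power_cost(it_load_kw: float, pue: float, price_per_kwh: float) -> tuple[float, float]:
    """Split yearly electricity cost into (IT equipment, facility overhead).

    PUE = total facility energy / IT energy, so everything above 1.0
    is overhead such as cooling and power conversion.
    """
    it_kwh = it_load_kw * HOURS_PER_YEAR
    total_kwh = it_kwh * pue
    overhead_kwh = total_kwh - it_kwh
    return it_kwh * price_per_kwh, overhead_kwh * price_per_kwh


# Hypothetical hyper-scale hall: 10 MW of IT load, PUE of 1.2, $0.07 per kWh.
it_cost, overhead_cost = annual_power_cost(10_000, 1.2, 0.07)
print(f"IT equipment: ${it_cost:,.0f} per year")
print(f"Overhead (mostly cooling): ${overhead_cost:,.0f} per year")
```

With these inputs, about one-sixth of the electricity bill goes to overhead rather than computing, which is why lower cooling power is a competitive lever.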

One factor to consider seriously is the rapid growth of solid-state storage devices, which are replacing hard-disk drives. Also called flash-memory devices, they dissipate considerably less power than hard-disk drives and operate much faster, making them highly desirable in hyper-scale data centers. This translates into lower heating and cooling costs and fewer data bottlenecks.

To be sure, there are broad initiatives to deliver cable-based 10-Gbit/s internet connectivity, spearheaded by NCTA (The Internet & Television Association), CableLabs, and Cable Europe. Participants include many U.S. cable operators that together account for 85% of all activity, which has increased over the last several years and is expected to keep growing (Fig. 3).

3. Advances in cable-based 10-Gbit broadband communications, an effort spearheaded by the NCTA, will enable a vast number of IoT applications. (Source: NCTA)

Recently, Intel showed off its Ethernet 800 series network interface cards (NICs), which accompany the firm’s Xeon Scalable CPUs. The NICs have multiple programmable features for customization: new protocols can be added to the ASIC, and key application traffic can be filtered into dedicated queues to improve throughput and latency (Fig. 4).

4. Intel’s Xeon Scalable CPU board has multiple programmable features for customization. New protocols can be added to its ASIC, and dedicated queues can filter key application traffic to improve throughput and latency. (Source: Intel)
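The article doesn’t describe how the NIC’s queue steering is configured. To make the idea concrete, here is a conceptual Python model of steering key application traffic into dedicated queues by destination port. The port-to-queue rules and the `steer` function are hypothetical; this is not Intel’s driver, firmware, or configuration interface, and a real NIC performs this classification in hardware.

```python
from collections import deque

# Hypothetical steering rules: destination TCP port -> dedicated queue name.
# Conceptual model only; not Intel's driver or configuration API.
DEDICATED_QUEUES = {6379: "kv-store", 443: "web"}
DEFAULT_QUEUE = "default"


def steer(packets: list[dict]) -> dict[str, deque]:
    """Place each packet in its application's dedicated queue, else the default queue."""
    queues = {name: deque() for name in [*DEDICATED_QUEUES.values(), DEFAULT_QUEUE]}
    for pkt in packets:
        queue_name = DEDICATED_QUEUES.get(pkt["dst_port"], DEFAULT_QUEUE)
        queues[queue_name].append(pkt)
    return queues


traffic = [
    {"id": 1, "dst_port": 443},
    {"id": 2, "dst_port": 22},
    {"id": 3, "dst_port": 6379},
    {"id": 4, "dst_port": 443},
]
for name, queue in steer(traffic).items():
    print(name, [pkt["id"] for pkt in queue])
```

Keeping each key application’s packets in its own queue means they don’t wait behind unrelated traffic, which is the source of the throughput and latency gains.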

Getting Higher Data Rates

Transceiver manufacturers use several different technologies to meet the need for ever-increasing data rates. Whether transceivers are connected over multimode or single-mode fiber, these technologies rely on the same basic tools. The simplest method is to increase the baud rate; in other words, how fast the laser can be turned on and off. This method works well for lower data rates, like 10G, but becomes problematic at higher data rates, where the signal-to-noise ratio starts to become an issue.

Or you can use more fibers. A single pair of fibers can carry a 10G or 25G connection, while eight fibers (four pairs) in a parallel-transmission scenario can carry a 40G or 100G connection. Another method is to increase the number of wavelengths. Whether the fiber is single-mode or multimode, and whether the architecture is duplex or parallel, all paths lead to either a two- or eight-fiber solution.
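The trade-off between more fibers and more wavelengths comes down to simple multiplication. The Python sketch below assumes every lane runs at 25 Gb/s and each fiber pair carries one lane per wavelength; the `link_capacity` helper and the three example configurations are illustrative, not specific products.

```python
def link_capacity(fiber_pairs: int, wavelengths: int, lane_gbps: float) -> tuple[float, int]:
    """Aggregate capacity in Gb/s and total fiber count.

    Assumes each fiber pair carries `wavelengths` independent lanes,
    each running at `lane_gbps`.
    """
    return fiber_pairs * wavelengths * lane_gbps, fiber_pairs * 2


# Illustrative configurations, all with 25-Gb/s lanes.
options = {
    "duplex, single wavelength": (1, 1, 25),
    "parallel, four fiber pairs": (4, 1, 25),
    "duplex, four wavelengths": (1, 4, 25),
}
for name, args in options.items():
    gbps, fibers = link_capacity(*args)
    print(f"{name}: {gbps:.0f}G over {fibers} fibers")
```

Both ways of reaching 100G appear here: parallel optics spends fibers (eight), while wavelength multiplexing keeps the link at two fibers at the cost of a more complex transceiver.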

Another method for achieving higher data rates is to change the modulation format. Instead of simple non-return-to-zero (NRZ) signaling, which carries one bit per symbol, the transceiver can use four-level pulse amplitude modulation (PAM4), which carries two bits per symbol and therefore twice the data in the same time slot. Either way, the result is a two- or eight-fiber setup.
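The gain and the cost of PAM4 can both be computed directly. Bit rate equals symbol rate times bits per symbol (log2 of the number of levels). Because PAM4 squeezes three eyes into the same peak-to-peak swing that NRZ uses for one, each eye is one-third the height, an SNR penalty of about 9.5 dB. The Python sketch below assumes a 25-GBd symbol rate for illustration and ignores FEC and line-coding overhead.

```python
import math


def line_rate_gbps(baud_gbd: float, levels: int) -> float:
    """Bit rate = symbol rate x bits per symbol (log2 of the amplitude levels)."""
    return baud_gbd * math.log2(levels)


def eye_penalty_db(levels: int) -> float:
    """SNR penalty vs. NRZ when the same swing is split into (levels - 1) eyes."""
    return 20 * math.log10(levels - 1)


# Assumed 25-GBd symbol rate; coding and FEC overhead are ignored.
for name, levels in (("NRZ", 2), ("PAM4", 4)):
    print(f"{name}: {line_rate_gbps(25, levels):.0f} Gb/s at 25 GBd, "
          f"eye penalty {eye_penalty_db(levels):.2f} dB")
```

That roughly 9.5-dB penalty is the reason noise weighs so much more heavily on PAM4 links.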

Higher data rates, whether they use NRZ or PAM4, require some sort of forward-error-correction (FEC) algorithm. Because noise has a much higher impact on PAM4, it requires a more-complex FEC algorithm.
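The difference in FEC strength can be illustrated with two Reed-Solomon codes from IEEE 802.3 over 10-bit symbols: RS(528,514), often called KR4 FEC and used with 25G NRZ lanes, and RS(544,514), often called KP4 FEC and used with PAM4 lanes. A Reed-Solomon code with n codeword symbols and k message symbols corrects up to (n − k)/2 symbol errors. The Python sketch below computes that capability and the bandwidth overhead for each; the `ReedSolomon` class is an illustrative helper, not an encoder.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReedSolomon:
    n: int  # codeword length, in 10-bit symbols
    k: int  # message (payload) symbols per codeword

    @property
    def correctable_symbols(self) -> int:
        # An RS code corrects up to (n - k) / 2 symbol errors per codeword.
        return (self.n - self.k) // 2

    @property
    def overhead(self) -> float:
        # Extra line rate needed to carry the parity symbols.
        return self.n / self.k - 1


codes = {
    "RS(528,514), 25G NRZ lanes": ReedSolomon(528, 514),
    "RS(544,514), PAM4 lanes": ReedSolomon(544, 514),
}
for name, code in codes.items():
    print(f"{name}: corrects {code.correctable_symbols} symbols, "
          f"{code.overhead:.2%} overhead")
```

The PAM4 code corrects more than twice as many symbol errors per codeword, at roughly double the overhead.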

Summary

Let’s not forget that increased automation is fast approaching, and AI has a role to play here. What’s needed is the construction of more efficient and agile data networks using simplified designs and flexible platforms. And lower-cost cabling and less cooling power are key objectives in staying competitive in the hyper-scale data-center and cloud-computing worlds. Experts agree that we must keep our options open for wider opportunities.
