I was talking with a friend recently about artificial intelligence (AI) and machine learning (ML), and they noted that if you replaced AI or ML with the word magic, many of those discussions would be just as useful and informative as before. This is due to a number of factors, including a misunderstanding of the current state of AI, ML, and, more specifically, deep neural networks (DNNs): what ML models actually do and how they are used together.

I hope those who have been working with ML take kindly to my explanations, because they’re targeted at engineers who want to understand and use ML but haven’t gotten past the hype that even ML companies are spouting. More than half of you are looking into ML, but only a fraction are actually incorporating it into products. That number is growing rapidly, though.

ML is only one part of the AI field, and many ML tools and models are available now, already in use, or in development (Fig. 1). DNNs are just one part of ML; other neural-network approaches enter into the mix, but more on that later.

1. Neural networks are just a part of the machine-learning portion of artificial-intelligence research.

Developers should look at ML models more like fast Fourier transforms (FFTs) or Kalman filters. They’re building blocks that perform a particular function well and can be combined with similar tools, modules, or models to solve a problem. The idea of stringing black boxes together is appropriate. The difference between an FFT and a DNN model lies in the configuration: the former is set up with a few parameters, while a DNN model must be trained.
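The building-block view can be sketched in a few lines. This is a minimal illustration, assuming hypothetical names (`moving_average`, `train_threshold`, `chain`): one block is a conventional filter whose behavior is fixed by a single parameter, the other is a crude "trained" block whose parameter is derived from labeled samples, and the two are strung together as black boxes.

```python
from statistics import mean
from typing import Callable, List

# A "block" maps a list of samples to a list of samples.
Block = Callable[[List[float]], List[float]]


def moving_average(window: int) -> Block:
    """Conventional block: its behavior is fixed by one parameter (window)."""
    def run(xs: List[float]) -> List[float]:
        return [mean(xs[max(0, i - window + 1): i + 1]) for i in range(len(xs))]
    return run


def train_threshold(normal: List[float], anomalous: List[float]) -> float:
    """'Trained' parameter: derived from labeled samples, not set by hand."""
    return (mean(normal) + mean(anomalous)) / 2


def threshold_block(t: float) -> Block:
    """Emit 1.0 where the input exceeds t, else 0.0."""
    return lambda xs: [1.0 if x > t else 0.0 for x in xs]


def chain(*blocks: Block) -> Block:
    """String black boxes together: each block's output feeds the next."""
    def run(xs: List[float]) -> List[float]:
        for block in blocks:
            xs = block(xs)
        return xs
    return run


t = train_threshold(normal=[0.5, 1.0, 1.5], anomalous=[2.5, 3.0, 3.5])  # 2.0
pipeline = chain(moving_average(window=2), threshold_block(t))
print(pipeline([1.0, 1.0, 3.0, 3.0, 1.0]))  # [0.0, 0.0, 0.0, 1.0, 0.0]
```

Swapping either block for a different filter or a real trained model leaves `chain` unchanged, which is the point of treating each piece as a black box.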

Training some types of neural networks requires thousands of samples, such as photos. This is often done in the cloud, where large amounts of storage and computing power can be applied. Trained models can then be deployed in the field, since they normally require less storage and computing power than training does. AI accelerators can be used in both cases to improve performance and reduce power requirements.

Rolling a Machine-Learning Model

Most ML models can be trained to provide different results using a different set of training samples. For example, a collection of cat photos can be used with some models to help identify cats.
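To make the idea concrete, here is a minimal sketch using a nearest-centroid classifier, one of the simplest trainable models, rather than a neural network. The same code, given different training samples, gives different answers for the same input. The two-element feature vectors and labels are made up for illustration.

```python
from math import dist
from statistics import fmean
from typing import Dict, List, Sequence, Tuple

Model = Dict[str, Tuple[float, ...]]


def train_centroids(samples: List[Tuple[Sequence[float], str]]) -> Model:
    """Nearest-centroid 'training': average the feature vectors of each label."""
    by_label: Dict[str, List[Sequence[float]]] = {}
    for features, label in samples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(fmean(column) for column in zip(*vectors))
        for label, vectors in by_label.items()
    }


def classify(model: Model, features: Sequence[float]) -> str:
    """Return the label whose centroid is closest (Euclidean distance)."""
    return min(model, key=lambda label: dist(model[label], features))


query = [0.85, 0.25]  # hypothetical two-feature description of an image

pets = train_centroids([([0.9, 0.2], "cat"), ([0.8, 0.3], "cat"),
                        ([0.3, 0.9], "dog"), ([0.2, 0.8], "dog")])
wildlife = train_centroids([([0.9, 0.2], "fox"), ([0.3, 0.9], "rabbit")])

print(classify(pets, query))      # cat
print(classify(wildlife, query))  # fox
```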

Models can perform different functions, such as detection, classification, and segmentation. These are common chores for image-based tools. Other functions include path optimization, anomaly detection, and recommendations.

A single model will not typically deliver all of the processing needed in most applications, and input and output data may benefit from additional processing. For example, noise reduction may be useful for audio input to a model. The noise reduction may be provided by conventional analog or digital filters or there may be an ML model in the mix. The output could then be used to recognize phonemes, words, etc., as the data is massaged until a voice command is potentially recognized.
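A minimal sketch of the first stages of such a pipeline, assuming a 3-tap median filter for noise reduction (it suppresses isolated clicks) followed by an energy check that passes only "active" frames on to a recognizer. The function names, frame size, energy threshold, and signal are hypothetical, and the recognition stage itself is left out.

```python
from statistics import median
from typing import List


def median_filter(xs: List[float], k: int = 3) -> List[float]:
    """Noise reduction: replace each sample with the median of its k-sample neighborhood."""
    half = k // 2
    return [median(xs[max(0, i - half): i + half + 1]) for i in range(len(xs))]


def active_frames(xs: List[float], frame: int, min_energy: float) -> List[int]:
    """Return indices of fixed-size frames whose mean energy exceeds min_energy."""
    active = []
    for start in range(0, len(xs) - frame + 1, frame):
        chunk = xs[start:start + frame]
        energy = sum(x * x for x in chunk) / frame
        if energy > min_energy:
            active.append(start // frame)
    return active


# Frame 0 holds a single click (5.0); frames 2 and 3 hold a tone-like signal.
raw = [0.0, 0.0, 5.0, 0.0,  0.0, 0.0, 0.0, 0.0,
       2.0, 2.0, -2.0, -2.0,  2.0, 2.0, -2.0, -2.0]

print(active_frames(raw, frame=4, min_energy=1.0))                 # [0, 2, 3]
print(active_frames(median_filter(raw), frame=4, min_energy=1.0))  # [2, 3]
```

Without the filter, the click in frame 0 would be handed to the downstream recognizer as if it were speech.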

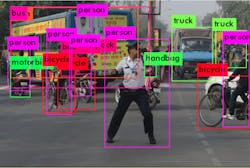

Likewise, a model or filter might be used to identify an area of interest in an image. This subset could then be presented to the ML-based identification subsystem and so on (Fig. 2). The level of detail will depend on the application. For example, a video-based door-opening system may need to differentiate between people and animals as well as the direction of movement so that the door only opens when a person is moving toward it.
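The door example's final decision can be sketched as plain logic on top of model outputs. This assumes hypothetical upstream stages that report a class label, a confidence, and a bounding-box area for each frame, and it infers direction from box growth with a fixed camera; a real system might estimate motion differently.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    label: str         # class from an upstream classifier, e.g. "person" or "animal"
    confidence: float  # classifier confidence, 0.0 to 1.0
    box_area: float    # area of the region of interest, in pixels


def should_open(track: List[Detection], min_confidence: float = 0.8,
                min_growth: float = 1.1) -> bool:
    """Open only for a confidently classified person who is approaching.

    Assumes a fixed camera, so an object moving toward the door appears
    larger over time (its bounding box grows).
    """
    if len(track) < 2:
        return False  # need at least two frames to estimate direction
    if not all(d.label == "person" and d.confidence >= min_confidence for d in track):
        return False
    return track[-1].box_area >= track[0].box_area * min_growth


approaching = [Detection("person", 0.93, 1000.0), Detection("person", 0.95, 1400.0)]
leaving = [Detection("person", 0.94, 1400.0), Detection("person", 0.92, 1000.0)]
dog = [Detection("animal", 0.97, 800.0), Detection("animal", 0.96, 1200.0)]
print(should_open(approaching), should_open(leaving), should_open(dog))  # True False False
```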

2. Different tools or ML models can be used to identify areas of interest that are then isolated and processed to distinguish between objects such as people and cars.

Models may be custom-built and pretrained, or created and trained by a developer. Much will depend on the requirements and goals of the application. For example, keeping a machine running may mean tracking the operation of the electric motor in the system. A number of factors can be recorded and analyzed, from the power provided to the motor to noise and vibration.
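Raw sensor streams are usually condensed into a handful of features before they reach a model. The sketch below computes three common vibration statistics (RMS, peak, and crest factor) for one window of samples; the function name, the choice of features, and the sample values are assumptions for illustration.

```python
from math import sqrt
from typing import Dict, List


def vibration_features(samples: List[float]) -> Dict[str, float]:
    """Summarize one window of raw sensor samples as a few scalar features."""
    rms = sqrt(sum(x * x for x in samples) / len(samples))  # overall energy
    peak = max(abs(x) for x in samples)                      # largest excursion
    # Crest factor (peak / RMS) rises when the signal contains short, sharp impacts.
    crest = peak / rms if rms > 0 else 0.0
    return {"rms": rms, "peak": peak, "crest_factor": crest}


smooth = [1.0, -1.0, 1.0, -1.0]
spiky = [0.0, 0.0, 0.0, 4.0]
print(vibration_features(smooth))  # {'rms': 1.0, 'peak': 1.0, 'crest_factor': 1.0}
print(vibration_features(spiky))   # {'rms': 2.0, 'peak': 4.0, 'crest_factor': 2.0}
```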

Companies such as H2O.ai and XNor are providing prebuilt or customized models and training for those who don’t want to start from scratch or use open-source models that may require integration and customization. H2O.ai has packages like Enterprise Steam and Enterprise Puddle that target specific platforms and services. XNor’s AI2Go uses a menu-style approach: developers start by choosing a target platform, like a Raspberry Pi, then an industry, like automotive, and then a use case, such as in-cabin object classification. The final step is to select a model based on latency and memory-footprint limitations (Fig. 3).

3. Shown is the tail end of the menu selection process for XNor’s AI2Go. Developers can narrow the search for the ideal model by specifying the memory footprint and latency time.

It’s Not All About DNNs

Developers need to keep a number of factors in mind when dealing with neural networks and similar technologies. Results are probabilistic, and an ML model’s output is typically expressed as a percentage. For example, a model trained to recognize cats and dogs may be able to report with a high level of confidence that an image contains a dog or a cat. The confidence may be lower when distinguishing a dog from a cat, and lower still when identifying a particular breed.
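Many classifiers produce these percentages by passing raw per-class scores through a softmax function. The sketch below assumes a three-class model with made-up scores; it illustrates the arithmetic, not any particular framework’s output.

```python
from math import exp
from typing import Dict


def softmax(scores: Dict[str, float]) -> Dict[str, float]:
    """Turn raw per-class scores into probabilities that sum to 1."""
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {label: exp(s - top) for label, s in scores.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


raw_scores = {"cat": 2.0, "dog": 1.0, "other": 0.1}  # hypothetical model outputs
percent = {label: round(100 * p, 1) for label, p in softmax(raw_scores).items()}
print(percent)  # {'cat': 65.9, 'dog': 24.2, 'other': 9.9}
```

A 65.9% "cat" is the model’s best guess, not a certainty, which is why the application still has to decide what confidence is good enough.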

The percentages can often improve with additional training, but the gains usually aren’t linear. Reaching the 50% mark may be easy, and 90% might make for a good model, but hitting 99% can require a lot more training time.

The big question is: “What are the application requirements and what alternatives are there in the decision-making process?” It’s one reason why multiple sensors are used when security and safety are important design factors.
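One common way to combine redundant sensors or models is k-of-n voting: act only when at least k independent sources agree. The function name, sensor names, threshold, and value of k below are hypothetical.

```python
from typing import Dict


def k_of_n(confidences: Dict[str, float], threshold: float, k: int) -> bool:
    """Declare a detection only if at least k sources meet the threshold."""
    agreeing = [name for name, c in confidences.items() if c >= threshold]
    return len(agreeing) >= k


readings = {"camera": 0.95, "radar": 0.40, "lidar": 0.90}
print(k_of_n(readings, threshold=0.8, k=2))  # True: camera and lidar agree
print(k_of_n({"camera": 0.95, "radar": 0.40, "lidar": 0.30}, threshold=0.8, k=2))  # False
```

Raising k trades missed detections for fewer false alarms; which way to lean depends on the application requirements.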

DNNs have been popular because of the availability of open-source solutions, including platforms like TensorFlow and Caffe. They have found extensive hardware and software support from the likes of Xilinx, NVIDIA, and Intel. Convolutional neural networks (CNNs) and recurrent neural networks (RNNs) are widely used deep architectures, but they’re not the only types of neural-network tools available; spiking neural networks (SNNs) are another option.

SNNs are used by BrainChip and Eta Compute. BrainChip’s Akida Development Environment (ADE) is designed to support SNN model creation. Eta Compute augments its ultra-low-power Cortex-M3 microcontroller with SNN hardware. SNNs are easier to train than DNNs and their ilk, although there are tradeoffs for all neural-network approaches.
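Unlike DNNs, SNNs pass information as discrete spikes over time. The sketch below is a generic, textbook-style leaky integrate-and-fire neuron in discrete time; the leak factor and threshold are illustrative assumptions, and it does not reflect BrainChip’s or Eta Compute’s implementations.

```python
from typing import List


def lif_spikes(inputs: List[float], leak: float = 0.9, threshold: float = 1.0) -> List[int]:
    """Leaky integrate-and-fire neuron: emit 1 when the membrane potential crosses threshold."""
    v = 0.0  # membrane potential
    spikes = []
    for x in inputs:
        v = leak * v + x  # leak some charge, then integrate the new input
        if v >= threshold:
            spikes.append(1)
            v = 0.0  # reset after firing
        else:
            spikes.append(0)
    return spikes


print(lif_spikes([0.5, 0.5, 0.5, 0.0, 0.0, 0.6, 0.6]))  # [0, 0, 1, 0, 0, 0, 1]
```

Because the neuron does work only when input arrives and produces sparse binary events, this style of computation maps well onto low-power hardware.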

Neurala’s Lifelong-DNN (LDNN) is another ML approach, one that offers DNN-like capabilities with training overhead closer to that of SNNs. LDNN is a proprietary system developed over many years. It supports continuous learning using a lightweight approximation of backpropagation that allows learning to continue without the need to retain the initial training data. LDNN also requires fewer samples than a conventional DNN to reach the same level of training.

There’s a tradeoff in precision and recognition levels compared to a DNN, but such differences are similar to those involving SNNs. It’s not possible to make direct comparisons between systems because so many factors are involved, including training time, samples, etc.
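LDNN’s internals are proprietary, so the following is only a generic illustration of the broader idea of learning incrementally without retaining earlier training data: a nearest-centroid classifier that keeps a running mean per class. It is not Neurala’s method, and the class name, labels, and features are hypothetical.

```python
from math import dist
from typing import Dict, List, Sequence


class OnlineCentroids:
    """Nearest-centroid classifier that learns one sample at a time.

    Only a running mean and a count are kept per class, so earlier
    training samples never need to be stored or replayed.
    """

    def __init__(self) -> None:
        self.means: Dict[str, List[float]] = {}
        self.counts: Dict[str, int] = {}

    def learn(self, features: Sequence[float], label: str) -> None:
        n = self.counts.get(label, 0) + 1
        self.counts[label] = n
        mean = self.means.setdefault(label, [0.0] * len(features))
        for i, x in enumerate(features):
            mean[i] += (x - mean[i]) / n  # incremental mean update

    def predict(self, features: Sequence[float]) -> str:
        return min(self.means, key=lambda label: dist(self.means[label], features))


model = OnlineCentroids()
model.learn([0.0, 0.0], "normal")
model.learn([2.0, 2.0], "normal")
model.learn([10.0, 10.0], "fault")
print(model.predict([1.5, 1.0]))  # normal
model.learn([0.0, 9.0], "new_fault")  # class added later, no retraining from scratch
print(model.predict([1.0, 8.0]))  # new_fault
```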

LDNN can benefit from AI acceleration provided by general-purpose GPUs (GPGPUs). SNNs are even more lightweight, making them easier to use on microcontrollers. Even so, DNNs can run on microcontrollers and low-end DSPs as long as the models aren’t too demanding. Image processing may not be practical, but tracking anomalies in a motor-control system could be feasible.
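Anomaly tracking of this kind can be cheap enough for a microcontroller. The sketch below, written in Python for readability, flags readings more than a chosen number of standard deviations from the running mean, using Welford’s online algorithm so only three numbers of state are kept. It is a simple statistical detector swapped in for illustration, not a neural network; the class name, z-limit, warm-up length, and motor-current values are assumptions.

```python
from math import sqrt


class StreamingAnomalyDetector:
    """Flag readings far from the running mean, using O(1) memory.

    Mean and variance are tracked with Welford's online algorithm, so no
    history buffer is needed.
    """

    def __init__(self, z_limit: float = 3.0, warmup: int = 10) -> None:
        self.z_limit = z_limit
        self.warmup = max(warmup, 2)  # need >= 2 samples for a sample variance
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # sum of squared deviations from the mean

    def update(self, x: float) -> bool:
        """Return True if x looks anomalous; learn from x only if it looks normal."""
        if self.n >= self.warmup:
            std = sqrt(self.m2 / (self.n - 1))
            if std > 0 and abs(x - self.mean) / std > self.z_limit:
                return True  # keep outliers out of the baseline statistics
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return False


detector = StreamingAnomalyDetector()
flags = [detector.update(1.0 if i % 2 == 0 else 1.2) for i in range(20)]
print(any(flags))            # False: hypothetical normal motor-current readings
print(detector.update(5.0))  # True: sudden spike
print(detector.update(1.1))  # False
```

Excluding flagged readings from the statistics keeps a burst of faults from quietly becoming the new baseline; whether that is desirable depends on the system.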

Overcoming ML Challenges

There are numerous challenges when dealing with ML. For example, overfitting is a problem for any training-based solution. It occurs when a model works well on the training data, or on data very similar to it, but poorly on new data. LDNN uses an automatic, threshold-based consolidation system that reduces redundant weight vectors and resets the weights while preserving new, valid outliers.
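The usual way to detect overfitting is to hold back data the model never trained on and compare accuracy on both sets. The sketch below exaggerates the problem with a lookup-table "model" that memorizes its training inputs, next to a simple cut-off model; both models, their names, and the data are hypothetical.

```python
from statistics import fmean
from typing import Callable, List, Tuple

Example = Tuple[float, str]
Predictor = Callable[[float], str]


def accuracy(predict: Predictor, data: List[Example]) -> float:
    return sum(predict(x) == y for x, y in data) / len(data)


def train_memorizer(train: List[Example]) -> Predictor:
    """Extreme overfitting: a lookup table that only knows the exact training inputs."""
    table = dict(train)
    return lambda x: table.get(x, "unknown")


def train_cutoff(train: List[Example]) -> Predictor:
    """Simple model: one cut point midway between the two class means."""
    low = fmean(x for x, y in train if y == "low")
    high = fmean(x for x, y in train if y == "high")
    cut = (low + high) / 2
    return lambda x: "low" if x < cut else "high"


train = [(0.1, "low"), (0.2, "low"), (0.8, "high"), (0.9, "high")]
held_out = [(0.15, "low"), (0.85, "high")]  # data the models never saw

for name, trainer in [("memorizer", train_memorizer), ("cutoff", train_cutoff)]:
    model = trainer(train)
    print(name, accuracy(model, train), accuracy(model, held_out))
# memorizer 1.0 0.0
# cutoff 1.0 1.0
```

Both models look perfect on the training set; only the held-out data exposes the one that memorized instead of generalizing.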

ML models can address many tasks successfully with high accuracy. However, that doesn’t mean every task can be accommodated, regardless of whether it’s a conventional classification or segmentation problem. Sometimes switching to a different model, or developing a new one, can help. This is where data engineers can come in handy, though they tend to be rare and expensive.

Debugging models can also be a challenge. Debugging an ML model is much different from debugging a conventional program, and debugging models that are working within an application is another issue. Keep in mind that models will often have an accuracy of less than 100%; therefore, applications need to be designed to handle incorrect results. This is less of an issue for noncritical applications. However, apps like self-driving cars will require redundant, overlapping systems.
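One simple way to design for imperfect accuracy is to act on a prediction only above a confidence floor and route everything else to a fallback path. The function name, the 0.9 floor, and the fallback label below are hypothetical; the right values depend on the application.

```python
from typing import Tuple


def act_on(prediction: Tuple[str, float], min_confidence: float = 0.9,
           fallback: str = "defer_to_operator") -> str:
    """Use the model's answer only when it is confident enough.

    Because accuracy is below 100%, low-confidence results are routed to a
    fallback path (a human, a second model, or a safe default) instead.
    """
    label, confidence = prediction
    return label if confidence >= min_confidence else fallback


print(act_on(("stop_sign", 0.97)))  # stop_sign
print(act_on(("stop_sign", 0.55)))  # defer_to_operator
```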

Avalanche of Advances

New systems continue to come out of academia and research facilities. For example, “Learning Sensorimotor Control with Neuromorphic Sensors: Toward Hyperdimensional Active Perception” is a paper out of the University of Maryland’s engineering department. Anton Mitrokhin, Peter Sutor Jr., Cornelia Fermüller, and Computer Science Professor Yiannis Aloimonos developed a hyperdimensional pipeline for integrating sensor data, ML analysis, and control. It uses its own hyperdimensional memory system.

ML has been progressing faster than any programming tool before it. Improvements have been significant even without turning to specialized hardware. Part of this is due to improved software support and to optimizations that increase accuracy or performance while reducing hardware requirements. The challenge for developers is determining what hardware to use, which ML tools to use, and how to combine them to address their application.

It’s usually worth building systems now rather than waiting for the next improvement. Some platforms will be upward-compatible; others may not. Choosing a hardware-accelerated solution will limit the ML models that can be supported, but it can deliver significant performance gains, often of multiple orders of magnitude.

Systems that employ ML aren’t magic and their application can use conventional design approaches. They do require new tools and debugging techniques, so incorporating ML for the first time shouldn’t be a task taken lightly. On the other hand, the payback can be significant and ML models may often provide support that’s unavailable with conventional programming techniques and frameworks.

As noted, a single ML model may not be what’s needed for a particular application. Combining models, filters, and other modules requires an understanding of each, so don’t assume it will simply be a matter of choosing an ML model and doing a limited amount of training. That may be adequate in some instances, especially if the application matches an existing model, but don’t count on it until you try it out.