Habana Labs has emerged onto the machine-learning (ML) stage with its Goya HL-1000 processor (Fig. 1). The x16 PCI Express Gen 4 board has a 200-W TDP and comes with 16 GB of DDR4 ECC DRAM. It’s aimed at ML inference chores; a forthcoming Gaudi processor will target ML training. In the meantime, Goya runs inference on already-trained deep-neural-network (DNN) models.

1. Habana Labs’ Goya HL-1000 is based on a VLIW SIMD vector core with Tensor addressing support.

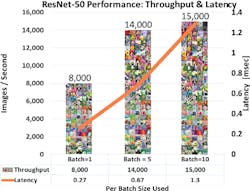

The HL-1000 is designed to handle high-throughput chores with low latency (Fig. 2). Its performance scales well, reaching thousands of images per second on standard ML test applications such as ResNet-50. It can process this model at over 15,000 images/s with a 1.3-ms latency while dissipating only 100 W of power. Typical latency in the industry at this point sits at around 7 ms. Keeping power requirements low is critical to minimizing overall system costs in an enterprise setting with many boards. Habana offers both passively and actively cooled models.

2. The Goya’s performance and low latency are impressive.

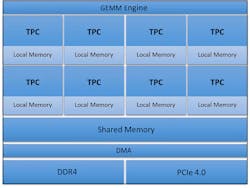
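
The ResNet-50 figures quoted above can be combined into a few derived numbers. The sketch below is plain arithmetic on the article's values, not a measurement. The function names are made up for illustration. The "images in flight" estimate applies Little's law and assumes the quoted throughput and latency hold at the same time in steady state. Because the throughput is quoted as "over 15,000 images/s," the results are lower bounds.

```python
# Figures quoted in the article for ResNet-50 on the Goya HL-1000.
THROUGHPUT_IMG_PER_S = 15_000   # "over 15,000 images/s", treated as a floor
LATENCY_S = 1.3e-3              # 1.3 ms
POWER_W = 100.0                 # power dissipated during the benchmark
TYPICAL_LATENCY_S = 7e-3        # "around 7 ms" industry figure


def images_per_joule(throughput_img_per_s, power_w):
    """Energy efficiency: images/s divided by watts (J/s) gives images/J."""
    return throughput_img_per_s / power_w


def latency_advantage(typical_latency_s, latency_s):
    """How many times lower the latency is than the typical figure."""
    return typical_latency_s / latency_s


def images_in_flight(throughput_img_per_s, latency_s):
    """Little's law (assumed steady state): items in system = rate * latency."""
    return throughput_img_per_s * latency_s


if __name__ == "__main__":
    print(f"images per joule:  {images_per_joule(THROUGHPUT_IMG_PER_S, POWER_W):.1f}")
    print(f"latency advantage: {latency_advantage(TYPICAL_LATENCY_S, LATENCY_S):.2f}x")
    print(f"images in flight:  {images_in_flight(THROUGHPUT_IMG_PER_S, LATENCY_S):.1f}")
```

The in-flight estimate suggests that roughly 20 images are being processed concurrently at the quoted operating point, which hints at how much batching or pipelining sits behind the throughput number.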

The architecture is based on a Tensor Processing Core (TPC) that’s fully programmable in C and C++ using an LLVM-based compiler. The HL-1000 processor is built on a cluster of eight TPCs (Fig. 3). As with most ML systems, the processor includes hardware general-matrix-multiply (GEMM) acceleration. Dedicated hardware implements special functions, and the cores also support Tensor addressing and latency hiding.

3. Multiple, fully programmable Habana Tensor Processing Cores populate the Goya HL-1000.
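
For readers unfamiliar with the operation that the GEMM hardware accelerates, here is a minimal reference sketch of the standard definition, C = alpha * A * B + beta * C. It is a plain Python illustration of the math only. It is not Habana's API and says nothing about how the Goya engine is implemented. The function name `gemm` and the list-of-lists layout are assumptions made for the example.

```python
def gemm(alpha, a, b, beta, c):
    """Return alpha * (a @ b) + beta * c.

    a is m x k, b is k x n, c is m x n, all as row-major lists of lists.
    This is a naive triple loop meant to show the definition, not to be fast.
    """
    m, k = len(a), len(a[0])
    n = len(b[0])
    if len(b) != k or len(c) != m or any(len(row) != n for row in c):
        raise ValueError("shape mismatch between a, b, and c")

    out = []
    for i in range(m):
        row = []
        for j in range(n):
            # Dot product of row i of a with column j of b.
            acc = 0.0
            for p in range(k):
                acc += a[i][p] * b[p][j]
            row.append(alpha * acc + beta * c[i][j])
        out.append(row)
    return out


if __name__ == "__main__":
    a = [[1, 2], [3, 4]]
    b = [[5, 6], [7, 8]]
    c = [[1, 1], [1, 1]]
    print(gemm(2.0, a, b, 1.0, c))
```

The layers that dominate inference time in most DNNs, fully connected and convolutional, are commonly lowered to this operation, which is why hardware GEMM support is so widespread in ML processors.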

The system exploits software-managed on-die memory along with centralized, programmable DMAs to deliver predictable, low-latency operation. Although targeted at TensorFlow applications, it works equally well with models from other frameworks. The TPC handles 8-, 16-, and 32-bit integers as well as 32-bit floating point.
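
To illustrate why 8-bit integer support matters for inference, the sketch below shows one common, generic scheme: symmetric per-tensor quantization of floating-point values to the int8 range, followed by a dot product accumulated in integers. The article does not describe how Goya or its tools quantize models, so the scheme and every name here (`quantize_int8`, `dequantize`, `int8_dot`) are assumptions chosen for illustration.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the quantized integers."""
    return [q * scale for q in quantized]


def int8_dot(qa, scale_a, qb, scale_b):
    """Dot product on quantized inputs.

    The multiply-accumulate runs entirely on integers; the two scale
    factors are applied once at the end to return to real-valued units.
    """
    acc = sum(x * y for x, y in zip(qa, qb))
    return acc * scale_a * scale_b


if __name__ == "__main__":
    weights = [1.27, -0.64, 0.0]
    q, s = quantize_int8(weights)
    print("quantized:", q, "scale:", s)
    print("restored: ", dequantize(q, s))
    print("dot:      ", int8_dot(q, s, q, s))
```

The trade-off is a small rounding error per value in exchange for narrower data, which reduces memory traffic and lets more operations fit in the same vector width.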

Habana’s SynapseAI software transforms standard AI models into applications that run on the HL-1000 (Fig. 4). The tools can import models from MXNet, Caffe2, Microsoft Cognitive Toolkit, PyTorch, and the Open Neural Network Exchange (ONNX) format. They can also utilize user-supplied libraries, and a Python-based front end helps automate system operation. The SynapseAI runtime manages resources on the processor. IDE support includes a debugger and simulator. The tools also provide real-time tracing and performance analysis, with results that can be presented graphically.

4. Habana’s SynapseAI software transforms standard AI models into applications that run on the HL-1000.

Habana will have lots of competition in this space. Its high performance, low power, and low latency will therefore be key factors in staying ahead of the pack.