Nvidia Pushes DPUs to Take Over More Tasks in Data Centers

Nvidia rolled out the first in a family of processors that it wants to bring to every server in the world to offload more of the networking, storage, security, and other infrastructure management chores in data centers. Thus, it enters another battleground with Intel and other rivals in the market for server chips.

The Santa Clara, Calif.-based company introduced its line of data processing units, or DPUs, that can move more of the infrastructure in data centers into a chip. The DPU combines programmable Arm CPU cores with a high-performance networking interface on a system-on-a-chip (SoC). The chip adds accelerators that can offload functions—from coordinating with storage to sweeping the network for malware—that have become a major drag on the performance of the CPU in the server.

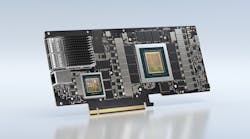

The BlueField DPUs are incorporated on a PCIe Gen 4-based server networking card called a SmartNIC that can be slapped on any server in cloud data centers and private computer networks. Nvidia said that it has started supplying the family's first generation of chips, the BlueField-2, to early customers and that the chips should be rolled out in servers from leading manufacturers in 2021.

The move—announced by CEO Jensen Huang at the company's annual GPU Technology Conference (GTC)—fits into Nvidia's strategy of expanding its footprint in the data-center market. The chips are based on networking chips from Nvidia's $7 billion deal for Mellanox Technologies and central processing units (CPUs) based on blueprints from Arm. Nvidia agreed to buy Arm for $40 billion last month.

Mellanox released its BlueField-2 DPU last year before it became part of Nvidia. The DPU is designed to compute, secure, and store data as it moves in and out of the server at the speed of the network.

Nvidia has long led the market for graphics processing units, or GPUs, used in high-end personal computers and consoles for gaming. But in the last decade, it has also started selling advanced server processors to run artificial intelligence in the largest cloud data centers, where its chips are the current gold standard. Top cloud service providers, including Amazon, Microsoft, and Alphabet's Google, use Nvidia chips to pack more AI performance into data centers they rent out to other firms.

Nvidia GPUs have thousands of tiny processors used to carry out computations in parallel. That gives them the brute force to run AI tasks far faster and more efficiently than Intel's chips. The chips are added to data centers—vast warehouses of servers, storage, networking switches, and other hardware—to offload the AI tasks that can overexert the CPU in servers.

Nvidia, which has outstripped Intel as the most valuable US semiconductor company, is trying to take over more of the computational chores in data centers. That could hurt Intel, which commands more than 90% of the market for server CPUs, chips that can cost thousands of dollars each. Intel has fallen behind in the race to roll out more advanced artificial intelligence chips.

"The data center has become the new unit of computing,” Huang said in a recent statement. “DPUs are an essential element of modern and secure accelerated data centers in which CPUs, GPUs and DPUs are able to combine into a single computing unit [that is] fully programmable, AI-enabled and can deliver levels of security and compute power not previously possible.”

The challenge the DPU is trying to address is that more of the infrastructure management chores in modern data centers are now handled by software that runs on the CPU in the server. Most of these chores once ran on standard network interface cards (NICs) and separate bundles of server hardware. The major disadvantage of the software approach is that it taxes the resources of the CPU. Offloading these functions to the DPU frees the CPU to focus on other workloads.

Nvidia estimates that data management drains up to 30% of the CPU cores in data centers, and Huang said that all of this software infrastructure is a huge drag on a server's performance. "A new type of processor is needed that's designed for data movement and security processing," he said at GTC. "The BlueField DPU is data-center infrastructure on a chip."

Nvidia said the first product in the family, the BlueField-2, delivers the same level of performance for networking, storage, security, and infrastructure tasks as 125 CPU cores. The resources saved in the process can be used for other services, lifting the maximum possible performance of the server. The DPU is supported by major operating systems used in data centers, including Linux and VMware.
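As a rough illustration of the arithmetic behind these claims, the sketch below estimates how many cores a server might get back if its infrastructure work moved to a DPU. The 30% figure is the upper bound Nvidia cites; the 64-core server and the function name `cores_reclaimed` are hypothetical, chosen only for illustration.

```python
def cores_reclaimed(total_cores: int, infra_fraction: float = 0.30) -> float:
    """Cores freed if all infrastructure work moves off the CPU.

    infra_fraction is the share of CPU cores consumed by data management;
    0.30 is the upper bound Nvidia cites, not a measured value.
    """
    if not 0.0 <= infra_fraction <= 1.0:
        raise ValueError("infra_fraction must be between 0 and 1")
    return total_cores * infra_fraction


# Hypothetical 64-core server (an assumption for illustration only).
server_cores = 64
freed = cores_reclaimed(server_cores)
print(f"Up to {freed:.1f} of {server_cores} cores returned to applications")
```

Actual savings would depend on the workload mix and on how much of the infrastructure software can really be moved onto the DPU.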

“Offloading processing to a DPU can result in overall cost savings and improved performance for data centers,” said Tom Coughlin, an independent technology analyst at Coughlin Associates, in a blog post.

The chip combines eight programmable cores based on the Arm Cortex-A72 architecture with its high-performance ConnectX-6 Dx network interface. The DPU, which can be added to any server in data centers, has a pair of VLIW accelerators for offloading storage, networking, and other management workloads with up to 0.7 trillion operations per second, or TOPS, of performance. The BlueField-2 also has 1 MB of L2 cache that can be shared by pairs of the CPU cores and 6 MB of L3 cache.

The BlueField-2 incorporates either a single 200-Gb/s port or dual 100-Gb/s ports for Ethernet or InfiniBand, so it can be used for networking as well as storage workloads, including Non-Volatile Memory Express (NVMe). The chip also includes isolation, root of trust, key management, and cryptography to prevent data breaches and other attacks. It features up to 16 lanes of PCIe Gen 4 to communicate with the CPU and GPU on the server and up to 16 GB of onboard DRAM.
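To put the link speeds in perspective, the sketch below converts the 200-Gb/s network rate to bytes per second and compares it with the approximate one-direction bandwidth of a PCIe Gen 4 connection. It assumes the 16 PCIe Gen 4 connections form a single x16 link, and it uses the standard Gen 4 signaling rate of 16 GT/s per lane with 128b/130b encoding. The function names are hypothetical, and protocol overhead beyond the line encoding is ignored.

```python
def gbps_to_gigabytes_per_s(gigabits_per_s: float) -> float:
    """Convert a line rate in Gb/s to GB/s (8 bits per byte)."""
    return gigabits_per_s / 8.0


def pcie_gen4_gigabytes_per_s(lanes: int) -> float:
    """Approximate one-direction PCIe Gen 4 bandwidth in GB/s.

    Each Gen 4 lane signals at 16 GT/s with 128b/130b encoding;
    packet and protocol overhead is not modeled here.
    """
    return lanes * 16.0 * (128.0 / 130.0) / 8.0


network = gbps_to_gigabytes_per_s(200)      # one 200-Gb/s port or two 100-Gb/s ports
host_link = pcie_gen4_gigabytes_per_s(16)   # assumes a full x16 connection
print(f"Network: {network:.1f} GB/s, PCIe Gen 4 x16: {host_link:.1f} GB/s")
```

Under these assumptions, the host link has headroom over the network ports, which is consistent with the goal of processing data at the speed of the network.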

The company is trying to stand out from rivals by adding more potent AI to its BlueField DPUs. In 2021, it is also rolling out the BlueField-2X, which adds a GPU based on its new Ampere architecture to the same hardware as the BlueField-2. The BlueField-2X supports up to 60 TOPS of AI performance that can be used to improve networking, storage, and other chores in the data center. Using AI, the DPU can detect abnormal behavior in the network and block it before data is stolen.

Huang said that many major server manufacturers, including Dell Technologies, Supermicro, Lenovo, Asus, Atos, Gigabyte, and Quanta, plan to integrate Nvidia DPUs in servers that they sell to corporate customers.

He also revealed the company's roadmap for future generations of the SmartNICs. Nvidia plans to roll out its BlueField-3 and BlueField-3X with more computing power and 400-Gb/s networking links in 2022. It also plans to introduce the BlueField-4 in 2023 with major gains in computing and AI, placing the Arm CPU and Nvidia GPU on the same die for the first time in the product lineup.

The Silicon Valley company is also rolling out a software stack and set of programming tools called DOCA to complement its DPU products. DOCA makes it easier for developers to build software-defined, hardware-accelerated apps that run on Nvidia DPUs, moving data from server to server in data centers as well as securing and storing it. Nvidia said the DOCA software is analogous to CUDA, the set of software tools it gives developers to get more performance out of its GPUs.

"DOCA is central to enabling the DPU to offload, accelerate, and isolate data-center services," Ariel Kit, general manager of product marketing in Nvidia's networking business, said in a blog post. "DOCA is designed to enable you to deliver a broad range of accelerated software-defined networking, storage, security, and management services running on current and future BlueField DPU family," he added.

The BlueField DPU family has also been backed by top players in the market for software deployed in data centers, including VMware, Red Hat, Canonical, and Check Point Software Technologies.