Associative Processing Unit Focuses on ID Tasks

What you’ll learn:

- What is an associative processing unit (APU)?

- In what ways are APUs applied?

Artificial intelligence and machine learning (AI/ML) are certainly hot topics these days, but the nuances and details are often lost in the hype and in high-level views of the solutions. Dropping down even one level reveals the different types of neural networks and identification methods used for disparate applications. Solutions such as autonomous robots and self-driving cars often require multiple AI/ML models that use different types of networks and identification methods.

One task common to these solutions is identifying a pattern. The trick for AI/ML applications is that the pattern is usually simple, but the amount of data that must be matched is large. This is where GSI Technology’s associative processing unit (APU) comes into play.

Those familiar with associative memories, or ternary content-addressable memory (TCAM), will appreciate the difference between these and the APU. Associative memories have been available for a long time, though they tend to be used in specialized applications due to size and operation limitations.

Essentially, an associative memory pairs comparators with memory. A key is presented to all of the comparators simultaneously, with each comparator's other input coming from the memory, so every comparison takes place in parallel. It was the original parallel processor. When associative memories first came out, they were hyped for their ability to perform many comparisons at once. That ability still makes them useful, if somewhat limited.
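A minimal software sketch of that lookup follows, assuming a toy memory of integer words. The `AssociativeMemory` class and its `search` method are hypothetical names chosen for illustration, and the loop only stands in for comparators that hardware would fire simultaneously.

```python
from typing import List


class AssociativeMemory:
    """Toy model of a content-addressable memory (hypothetical, for illustration)."""

    def __init__(self, words: List[int]) -> None:
        self.words = list(words)

    def search(self, key: int) -> List[int]:
        # In hardware, every row has its own comparator, so all rows see the
        # key at the same time. This comprehension stands in for that broadcast.
        match_lines = [word == key for word in self.words]
        # Report the row numbers whose match line is asserted.
        return [row for row, hit in enumerate(match_lines) if hit]


memory = AssociativeMemory([0x1A, 0x2B, 0x3C, 0x2B])
hits = memory.search(0x2B)
print(hits)  # [1, 3]
```

Note that the lookup is by content rather than by address: the caller supplies a value and gets back the rows that hold it.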

The APU uses a similar structure that combines computation with words in memory. It is more flexible, though, in that it can handle masking operations and works with variable-length words and comparisons. It’s more programmable, but not on par with systems that pair full CPU cores with a block of memory.
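As a rough illustration of masked, variable-length comparison, the sketch below matches only the bits selected by a mask, so one stored record can be searched by fields of different widths. The function names and the 16-bit record layout are assumptions made for this example; they are not taken from GSI's tools.

```python
from typing import List


def masked_search(words: List[int], key: int, mask: int) -> List[int]:
    """Return the rows where word and key agree on every bit set in mask."""
    # XOR marks the differing bits; AND with the mask keeps only the bits
    # that take part in this comparison.
    return [row for row, word in enumerate(words) if ((word ^ key) & mask) == 0]


def field_mask(offset: int, width: int) -> int:
    """Build a mask covering `width` bits starting at bit `offset`."""
    return ((1 << width) - 1) << offset


# Assumed layout: a 4-bit type in bits 12-15 and a 12-bit ID in bits 0-11.
records = [0x1ABC, 0x2ABC, 0x1DEF, 0x3ABC]

# Compare only the 12-bit ID field.
same_id = masked_search(records, key=0x0ABC, mask=field_mask(0, 12))
# Compare only the 4-bit type field.
same_type = masked_search(records, key=0x1000, mask=field_mask(12, 4))
```

Changing the mask changes the effective word length of the comparison without touching the stored data, which is the flexibility the paragraph above describes.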

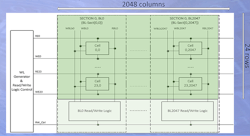

The basic building block is a bit-logic section organized as 2048 bits by 24 rows, or words (Fig. 1). The sections are stacked in turn, and all of them operate in parallel when a search is performed. In effect, a single chip contains two million bit engines.
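To picture how many one-bit engines working in parallel can still do word-level work, consider bit-serial addition. The sketch below is a generic textbook technique and not a description of GSI's actual microarchitecture: it assumes the data is stored as bit planes, with each Python integer standing in for one bit position across all words, so a single XOR, AND, or OR acts on every word at once.

```python
from typing import List


def to_bit_planes(values: List[int], width: int) -> List[int]:
    """Transpose words into bit planes: bit j of plane i is bit i of values[j]."""
    planes = []
    for i in range(width):
        plane = 0
        for j, value in enumerate(values):
            plane |= ((value >> i) & 1) << j
        planes.append(plane)
    return planes


def from_bit_planes(planes: List[int], count: int) -> List[int]:
    """Inverse of to_bit_planes: rebuild `count` words from the planes."""
    values = []
    for j in range(count):
        value = 0
        for i, plane in enumerate(planes):
            value |= ((plane >> j) & 1) << i
        values.append(value)
    return values


def bit_serial_add(a_planes: List[int], b_planes: List[int]) -> List[int]:
    """Add every pair of words at once using only XOR, AND, and OR."""
    carry = 0
    sum_planes = []
    for a, b in zip(a_planes, b_planes):      # least significant plane first
        partial = a ^ b
        sum_planes.append(partial ^ carry)    # sum bit of a full adder
        carry = (a & b) | (carry & partial)   # carry out of a full adder
    sum_planes.append(carry)                  # final carry becomes the top bit
    return sum_planes


WIDTH = 8
a = [3, 200, 17, 255]
b = [5, 100, 40, 1]
totals = from_bit_planes(
    bit_serial_add(to_bit_planes(a, WIDTH), to_bit_planes(b, WIDTH)), len(a)
)
```

The loop runs once per bit position, not once per word, so the number of steps depends on the word width while the number of words processed per step depends only on how many bit engines are available.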

Unlike TCAMs, which can only perform basic matching, the APU provides associative and Boolean logic. This allows for cosine distance computation, so the system can also handle approximate-nearest-neighbor (ANN) searches of large databases. Using only Boolean logic, the APU can perform math-heavy tasks such as SHA-1 processing. It can also handle variable-length data.
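One way to see how cosine similarity can reduce to Boolean operations is to restrict the vectors to 0/1 values packed into integers; that restriction is an assumption of this sketch, not something the article states about the APU. The dot product then becomes an AND followed by a bit count, and cosine distance is one minus the similarity. The function names are hypothetical, and the scan is an exact brute-force search for illustration.

```python
import math
from typing import List, Tuple


def popcount(bits: int) -> int:
    """Count the 1 bits in a non-negative integer."""
    return bin(bits).count("1")


def binary_cosine(a: int, b: int) -> float:
    """Cosine similarity of two 0/1 vectors packed into integers."""
    # For 0/1 vectors the dot product is popcount(a & b), and each squared
    # norm is simply the popcount of the vector.
    norm = math.sqrt(popcount(a) * popcount(b))
    return popcount(a & b) / norm if norm else 0.0


def top_k(query: int, database: List[int], k: int) -> List[Tuple[int, float]]:
    """Score every database row against the query and keep the k best."""
    scored = [(row, binary_cosine(query, vec)) for row, vec in enumerate(database)]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


database = [0b11110000, 0b11001100, 0b00001111, 0b11111100]
query = 0b11111000
best = top_k(query, database, k=2)
best_rows = [row for row, _ in best]
```

Each row's score is independent of every other row's, which is why this kind of search maps naturally onto hardware that evaluates all rows at once.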

The Leda-G board is the first to incorporate a 400-MHz Gemini APU (Fig. 2). It also has an onboard FPGA to handle host interfacing. The forthcoming Leda-E will raise the frequency, while the Gemini-II, still in development, will eliminate the FPGA, double the speed, and increase the memory by a factor of eight.

The Gemini APU is a specialized unit that handles a large subset of search and ANN applications. It’s not a general-purpose computing unit like a CPU or GPU, but it can augment these platforms when a solution includes problems the APU can handle. The Gemini is extremely power-efficient, especially given its orders-of-magnitude performance gains. The system can be scaled by adding chips in much the same way that memory can be made larger and wider.

GSI Technology provides libraries, and the APU integrates with existing applications and tools such as Biovia Pipeline Pilot and Hashcat. It can be used for database searches and even face recognition. The company also has a tool that parses Python code to extract the parts that can be accelerated by the Gemini APU. Developers should contact the company to see how their application might fit the Gemini APU and which libraries and tools may already be applicable.