Wrong Flash Storage: The 5 Most Common Trouble Spots

What you'll learn:

- The two main factors in SSD failure.

- The five most common sources of NAND flash storage problems.

Over the last decade, NAND flash storage has become the preferred medium for storing and accessing all kinds of data, from video recording and streaming, personal storage, and operating-system boot drives to data logging, application acceleration, and much more. Rapid innovation has multiplied both speed and storage capacity.

The only aspect that has decreased, at least generally speaking, is reliability. With new product introduction cycles of just a few months, there is no longer time to fully test and verify complex functionality. As a result, immature products reach the market and later depend on multiple firmware updates in the field to fix the issues that customers uncover in testing.

In most cases, such issues with NAND storage are not published and stay within the affected company, unless the damage affects the wider public. Tesla, for example, recently had to recall 134,000 cars due to early failure of an undersized embedded MultiMediaCard (eMMC).
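Why an undersized device can wear out early is easiest to see with a rough endurance estimate: the total data the flash can absorb is its capacity times its rated program/erase (P/E) cycles, and write amplification multiplies every host write. The sketch below is a simplification assuming perfect wear leveling; the capacities, P/E rating, write amplification, and daily write volume are hypothetical inputs, not figures from the recall.

```python
def estimated_lifetime_years(capacity_gb: float, pe_cycles: int,
                             write_amplification: float,
                             host_writes_gb_per_day: float) -> float:
    """Rough wear-out estimate assuming perfect wear leveling.

    All inputs are hypothetical; real devices also lose endurance margin to
    temperature, retention requirements, and uneven wear.
    """
    flash_write_budget_gb = capacity_gb * pe_cycles              # total GB the cells can absorb
    flash_writes_per_day = host_writes_gb_per_day * write_amplification
    return flash_write_budget_gb / flash_writes_per_day / 365.0


# Same hypothetical workload on two capacities.
small = estimated_lifetime_years(8, 3000, 2.0, 4.0)
large = estimated_lifetime_years(16, 3000, 2.0, 4.0)
print(f"8 GB: {small:.1f} years, 16 GB: {large:.1f} years")
```

Under this simple model, lifetime scales linearly with capacity for a fixed workload, so a device sized too small for a write-heavy task such as continuous logging reaches its endurance limit proportionally sooner.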

When it comes to solid-state drive (SSD) failure, two main aspects need to be considered: hardware and firmware.

The hardware defines the raw bit error rate (RBER, the fraction of bits read in error before error correction is applied), the data retention of the cells, and the supported temperature range. The firmware must spread wear evenly across the flash (wear leveling), perform bit-error correction, mitigate the effects of temperature on stored data, and handle power loss.
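Wear leveling, the first firmware duty named above, can be illustrated with a minimal dynamic wear-leveling sketch: erased blocks sit in a priority queue keyed by erase count, and the allocator always hands out the least-worn one. `FreeBlockPool` is a hypothetical name, not a real firmware API, and production firmware also needs static wear leveling to move rarely changed data off low-wear blocks.

```python
import heapq


class FreeBlockPool:
    """Hypothetical free-block allocator with dynamic wear leveling."""

    def __init__(self, num_blocks: int) -> None:
        self.erase_counts = [0] * num_blocks
        # Min-heap of (erase_count, block_id): the least-worn free block is on top.
        self.heap = [(0, block) for block in range(num_blocks)]
        heapq.heapify(self.heap)

    def allocate(self) -> int:
        _, block = heapq.heappop(self.heap)
        return block

    def release(self, block: int) -> None:
        # The block is erased before reuse, which costs one P/E cycle.
        self.erase_counts[block] += 1
        heapq.heappush(self.heap, (self.erase_counts[block], block))


pool = FreeBlockPool(8)
for _ in range(1000):
    pool.release(pool.allocate())   # fill a block, invalidate its data, erase it
print(pool.erase_counts)
```

Because the least-worn block is always reused first, erase counts across the pool never drift more than one cycle apart in this workload.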

What follows are the five most common causes of NAND flash storage issues.

1. Wrong NAND quality.

NAND flash is a commodity and needs to maintain a low cost per gigabyte. Many developments (3D NAND, QLC) are mainly driven by this goal. For use in cell phones and personal PCs/laptops, consumer-quality NAND is sufficient. That’s not true for more demanding applications like enterprise storage or industrial/networking and communication applications.

The standardization consortium JEDEC has defined two main use cases and their respective quality requirements:

- Client use case: PC-user workload, 8 hours/day, 40°C, uncorrectable bit error rate (UBER) < 10⁻¹⁵

- Enterprise use case: database workload, 24 hours/day, 55°C, uncorrectable bit error rate (UBER) < 10⁻¹⁶

Both 10⁻¹⁵ and 10⁻¹⁶ seem to be extremely low numbers, but the difference means that a client drive may return uncorrectable errors up to 10 times as often as an enterprise drive for the same amount of data read. Given the high throughput of modern SSDs, the probability of hitting an uncorrectable error is no longer negligible.
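A short calculation shows why the gap between 10⁻¹⁵ and 10⁻¹⁶ matters. Treating uncorrectable errors as independent events occurring at exactly the UBER limit (a simplifying assumption), the probability of at least one error after reading N bits is 1 − exp(−UBER · N). The 1 TB read volume and the 3 GB/s sustained read rate below are hypothetical examples.

```python
import math


def p_at_least_one_error(uber: float, bytes_read: float) -> float:
    """Poisson approximation: P(at least 1 uncorrectable error) after reading bytes_read."""
    expected_errors = uber * bytes_read * 8
    return -math.expm1(-expected_errors)   # 1 - exp(-x), accurate for small x


def hours_per_expected_error(uber: float, read_bytes_per_s: float) -> float:
    """Time to read 1/UBER bits (one expected error) at a sustained rate."""
    return (1.0 / uber) / (read_bytes_per_s * 8) / 3600


for uber in (1e-15, 1e-16):
    print(f"UBER {uber:.0e}: "
          f"P(error | 1 TB read) = {p_at_least_one_error(uber, 1e12):.4%}, "
          f"one expected error every {hours_per_expected_error(uber, 3e9):.1f} h at 3 GB/s")
```

At the client limit, one uncorrectable error is expected per 10¹⁵ bits, i.e., about every 125 TB read; a drive reading continuously at 3 GB/s gets there in roughly half a day.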

The raw bit error rate of today’s NAND flash is on the order of 10⁻² for lower-grade and 10⁻³ for higher-grade technology. Various levels of error correction bring this raw rate down to the required UBER. The flash quality grade and the strength of error handling directly affect the sales price. As a general rule: Don’t put a cheap commercial-grade SSD in an application that requires a low error rate.
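How error correction turns a raw error rate into a far lower post-correction error rate can be sketched with a binomial model: if bit errors are independent with probability equal to the RBER, an n-bit codeword fails only when more than t bits are wrong, where t is the code’s correction capability. The 9,216-bit codeword and t = 72 below are hypothetical and do not describe any particular controller; real flash errors are not fully independent, and many modern controllers use LDPC codes with soft-decision decoding rather than a fixed t.

```python
import math


def codeword_failure_prob(n_bits: int, t_correctable: int, rber: float) -> float:
    """P(more than t_correctable of n_bits in error), errors i.i.d. with probability rber."""
    log_p, log_q = math.log(rber), math.log1p(-rber)
    log_n_fact = math.lgamma(n_bits + 1)
    total = 0.0
    for k in range(t_correctable + 1, n_bits + 1):
        # log of the binomial term C(n, k) * p^k * q^(n-k), kept in log space to avoid underflow
        log_term = (log_n_fact - math.lgamma(k + 1) - math.lgamma(n_bits - k + 1)
                    + k * log_p + (n_bits - k) * log_q)
        term = math.exp(log_term)
        total += term
        if k > n_bits * rber and term < total * 1e-18:
            break  # past the peak, the remaining terms are negligible
    return total


# Hypothetical code: 9,216-bit codeword that corrects up to 72 bit errors.
for rber in (1e-3, 1e-2):
    print(f"RBER {rber:.0e}: codeword failure probability "
          f"{codeword_failure_prob(9216, 72, rber):.2e}")
```

Under these assumptions, the same code that leaves a 10⁻³ part with a vanishingly small failure probability fails on most codewords at 10⁻², which is why lower-grade flash needs much stronger, and costlier, error handling.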

2. Wrong NAND design.

3D NAND is a highly complex stack of many layers; some current devices have more than 140. Manufacturing requires etching very thin, yet very deep, holes into a sandwich of hundreds of deposited polysilicon and silicon-oxide layers. Due to the nature of the etching, the lower part of each hole is much narrower than the upper part, so the transistors along it have different electrical properties. This makes reading all cells reliably very challenging. A temperature difference between write and read adds yet another dimension of variation.

Not every NAND design returns sufficiently reliable data when the temperature changes between write and read. As long as the SSD resides in a thermally well-controlled system, such as a personal PC, laptop, server, or handheld, the temperature variation is too small to cause problems.

For industrial or NetCom applications, the requirements on the NAND increase significantly: both the NAND design and its supporting firmware must handle wide temperature fluctuations. Choosing the wrong flash product can cause multiple problems once the system has to operate under fluctuating temperatures.
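One way firmware can account for cross-temperature effects is to record the temperature when a block is programmed and compare it with the temperature at read time. The minimal sketch below uses that difference to pick a read-offset profile and to flag the block for a rewrite. The band edges, the refresh limit, and the names `BlockMeta` and `read_plan` are all hypothetical; a real device would use values characterized for its specific NAND and may treat the two directions of temperature change differently.

```python
from bisect import bisect_right
from dataclasses import dataclass

# Hypothetical cross-temperature bands (°C); real values come from NAND characterization.
BAND_EDGES_C = [10.0, 25.0, 40.0, 60.0]
REFRESH_DELTA_C = 40.0  # hypothetical limit beyond which data is rewritten


@dataclass
class BlockMeta:
    program_temp_c: float  # temperature recorded when the block was written


def read_plan(meta: BlockMeta, read_temp_c: float) -> tuple[int, bool]:
    """Pick a read-offset profile and decide whether to refresh the block."""
    delta = abs(read_temp_c - meta.program_temp_c)
    profile = bisect_right(BAND_EDGES_C, delta)   # 0 = default read levels
    refresh = delta > REFRESH_DELTA_C             # rewrite data at the current temperature
    return profile, refresh


print(read_plan(BlockMeta(program_temp_c=-20.0), read_temp_c=70.0))
```

Rewriting data after a successful read across a large temperature gap means the next read sees a smaller write-to-read difference.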

3. Wrong mechanical stability.

Ever heard of thermal-mechanical stress? It occurs when temperature fluctuations act on structures that combine materials with different coefficients of thermal expansion (CTE), i.e., some parts expand more than others for the same temperature change.

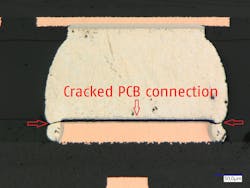

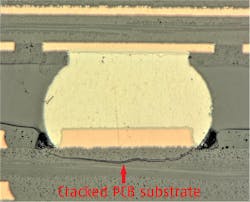

An SSD consists of a PCB with soldered-down flash packages, a controller, a connector, and small passive components. All of them expand differently as the temperature changes. Because the packages are soldered to the PCB, this differing expansion causes mechanical stress, which eventually leads to broken interconnects (Figs. 1 and 2).
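The size of this effect can be estimated with the first-order relation Δ = Δα · L · ΔT, where Δα is the CTE mismatch between package and PCB, L is the distance from the package’s neutral point (its center) to the outermost solder joint, and ΔT is the temperature swing. Dividing Δ by the solder joint height h gives an approximate shear strain. The material and geometry values below are illustrative assumptions, not measured data for any product.

```python
def solder_joint_shear(delta_alpha_ppm_per_k: float, dnp_mm: float,
                       delta_t_k: float, joint_height_mm: float) -> tuple[float, float]:
    """Return (relative displacement in µm, approximate shear strain)."""
    displacement_mm = delta_alpha_ppm_per_k * 1e-6 * dnp_mm * delta_t_k
    strain = displacement_mm / joint_height_mm
    return displacement_mm * 1000.0, strain


# Illustrative: 12 ppm/K mismatch, joint 10 mm from center, -40 °C to +85 °C swing, 0.1 mm joint.
disp_um, strain = solder_joint_shear(12.0, 10.0, 125.0, 0.1)
print(f"displacement ≈ {disp_um:.1f} µm, shear strain ≈ {strain:.2f}")
```

A 15 µm shift sounds tiny, but relative to a 0.1 mm joint it is a large strain that is repeated on every temperature cycle.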

This damage accumulates over hundreds to thousands of temperature cycles and may take years to appear. That is exactly why it matters so much for industrial systems that stay in the field for a long time.
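Accelerated thermal-cycling tests are commonly related to field conditions with a Coffin-Manson-type model, in which cycles to failure scale with ΔT raised to the power −n. The sketch below uses only this basic form; the exponent n = 2 and both temperature ranges are assumptions for illustration. Published exponents for solder joints vary, and refined models such as Norris-Landzberg add cycle-frequency and peak-temperature terms.

```python
def coffin_manson_af(delta_t_test_k: float, delta_t_field_k: float,
                     exponent: float = 2.0) -> float:
    """Acceleration factor of a thermal-cycling test versus field cycling (basic form)."""
    return (delta_t_test_k / delta_t_field_k) ** exponent


def field_years(test_cycles: int, af: float, field_cycles_per_day: float) -> float:
    """Field years equivalent to test_cycles survived, under the same model."""
    return test_cycles * af / field_cycles_per_day / 365.0


# Hypothetical: test -40 °C to +125 °C (ΔT = 165 K); field swing of 40 K once per day.
af = coffin_manson_af(165.0, 40.0)
print(f"AF = {af:.2f}; 1000 test cycles ≈ {field_years(1000, af, 1.0):.1f} field years")
```

The model also works in reverse: a larger daily swing in the field shrinks the equivalent lifetime quadratically under this exponent.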

4. Power fail robustness.

For a laptop that always shuts down gracefully, power fail robustness is not an issue. For a medical device that’s simply unplugged, or a NetCom router in an environment with an unstable power supply, sudden power loss must not lead to a broken system.

A sudden loss of power can occur at any time: during an external write to the SSD, during internal garbage collection, during firmware updates, or even during recovery from a previous power loss. How well the firmware handles power loss determines how much data is lost. In the best case, only the last written data (data in flight) is lost; in the worst case, the firmware is corrupted and the SSD no longer works. In many mission-critical applications, losing even a few bits of data is simply not acceptable.
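A common pattern for power-safe metadata is to never overwrite the newest valid copy: alternate between two separately erased regions, tag each record with a sequence number and a checksum, and on startup accept only the intact record with the highest sequence number. The sketch below models the two regions as byte strings and simulates a torn write by truncation; the record layout and the names `TwoSlotStore`, `encode`, and `decode` are hypothetical, not an actual SSD firmware format.

```python
import struct
import zlib

# Hypothetical record layout: sequence number, CRC32 of payload, then payload.
HEADER = struct.Struct("<II")


def encode(seq: int, payload: bytes) -> bytes:
    return HEADER.pack(seq, zlib.crc32(payload)) + payload


def decode(record: bytes):
    """Return (seq, payload) if the record is complete and intact, else None."""
    if len(record) < HEADER.size:
        return None
    seq, crc = HEADER.unpack_from(record)
    payload = record[HEADER.size:]
    return (seq, payload) if zlib.crc32(payload) == crc else None


class TwoSlotStore:
    """Alternates between two regions so the newest valid copy is never overwritten."""

    def __init__(self) -> None:
        self.slots = [b"", b""]

    def load(self):
        records = [r for r in map(decode, self.slots) if r is not None]
        return max(records, default=None)          # highest sequence number wins

    def commit(self, payload: bytes, cut_at=None) -> None:
        latest = self.load()
        seq = latest[0] + 1 if latest else 1
        record = encode(seq, payload)
        if cut_at is not None:
            record = record[:cut_at]               # simulate power loss mid-write
        self.slots[seq % 2] = record               # the other slot still holds `latest`


store = TwoSlotStore()
store.commit(b"mapping v1")
store.commit(b"mapping v2")
store.commit(b"mapping v3", cut_at=10)             # power lost during this write
print(store.load())
```

After the interrupted write, recovery falls back to the previous complete version instead of loading a half-written one, so only the in-flight update is lost.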

Swissbit has tested commonly available SSDs and has observed all of these failure types in power-off tests.

5. Wrong firmware architecture.

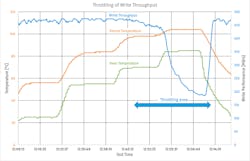

Speed matters, at least for consumer drives. However, speed tests are usually performed when the drives are new, empty, and freshly formatted. What’s often not considered is how much performance remains when the drive is 100% full, has been overwritten many times, or is running at high temperatures. Many existing firmware architectures are optimized for performance specifications rather than for maximum endurance, retention, or sustained performance over the complete operating range.
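The effect of fill level on sustained performance can be explored with a toy model: a page-mapped flash translation layer with greedy garbage collection under uniform random overwrites. Every valid page relocated during garbage collection is an extra flash write, so write amplification (flash writes divided by host writes) rises as the drive fills and free space shrinks, and sustained write speed falls accordingly. The block counts, fill levels, and greedy policy are assumptions for illustration; real firmware uses different policies, overprovisioning, and caching.

```python
import random


def simulate_write_amplification(num_blocks: int, pages_per_block: int,
                                 utilization: float, host_writes: int,
                                 seed: int = 1) -> float:
    """Toy page-mapped FTL with greedy garbage collection (illustrative model).

    utilization: fraction of physical pages exposed to the host as capacity.
    Assumes utilization * num_blocks < num_blocks - 2 so GC always finds a victim.
    Returns write amplification = flash page writes / host page writes.
    """
    rng = random.Random(seed)
    logical_pages = int(num_blocks * pages_per_block * utilization)
    location = {}                                 # logical page -> physical block
    valid = [set() for _ in range(num_blocks)]    # valid logical pages per block
    used = [0] * num_blocks                       # programmed pages per block
    free = list(range(num_blocks))                # erased blocks
    active = free.pop()                           # block currently being filled
    flash_writes = 0

    def program(lpn: int) -> None:
        nonlocal active, flash_writes
        if used[active] == pages_per_block:       # active block full: open a fresh one
            active = free.pop()
        old = location.get(lpn)
        if old is not None:
            valid[old].discard(lpn)               # the previous copy becomes stale
        valid[active].add(lpn)
        location[lpn] = active
        used[active] += 1
        flash_writes += 1

    def collect() -> None:
        # Greedy victim: the full, inactive block holding the fewest valid pages.
        candidates = [b for b in range(num_blocks)
                      if b != active and b not in free and used[b] == pages_per_block]
        victim = min(candidates, key=lambda b: len(valid[b]))
        for lpn in list(valid[victim]):
            program(lpn)                          # relocation = extra flash writes
        used[victim] = 0                          # erase
        free.append(victim)

    def host_write(lpn: int) -> None:
        while len(free) < 2:                      # keep a small reserve of erased blocks
            collect()
        program(lpn)

    for lpn in range(logical_pages):              # precondition: fill the drive once
        host_write(lpn)
    for _ in range(host_writes):                  # warm up to steady state
        host_write(rng.randrange(logical_pages))
    start = flash_writes
    for _ in range(host_writes):                  # measured phase
        host_write(rng.randrange(logical_pages))
    return (flash_writes - start) / host_writes


for u in (0.7, 0.8, 0.9):
    wa = simulate_write_amplification(64, 32, u, 20000)
    print(f"fill {u:.0%}: write amplification ≈ {wa:.2f}")
```

A fresh, empty drive in a benchmark sits at the low end of this curve; the same drive kept nearly full spends a growing share of its flash bandwidth moving old data.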

Choosing an SSD that isn’t optimized for long-term use can lead to unpleasant surprises once the drive is past its early life (Fig. 3).

Conclusion

Selecting the right SSD or NAND flash product depends on many criteria. Particularly for industrial use or other demanding applications, the decision-making process should include the following aspects: selection of the right components, mechanical construction, firmware architecture, and power fail robustness. Weighing them carefully is the best way to find a data-storage device that reliably stores and retrieves data over a long lifetime.