Intel’s latest crop of 14-nm Xeon processors targets a wide range of applications, from cloud and enterprise clusters to embedded systems. This generation of CPUs was code-named Skylake-SP, for Skylake Scalable Processor. Intel continues to pack more instructions and hardware acceleration into each generation, and the latest emphasis is on more cores.

The new chips employ an on-die mesh architecture (Fig. 1) that replaces the older ring architecture. The mesh is designed to move data more efficiently among the cores, caches, memory controllers, and I/O on each chip, and the platform scales to multisocket systems of up to eight chips. An eight-chip system can run 448 threads simultaneously.
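The 448-thread figure follows directly from the per-chip numbers quoted for the Platinum tier: 28 cores per chip and two threads per core. The short Python sketch below simply multiplies them out; the function name `total_threads` is hypothetical and only for illustration.

```python
# Hardware-thread count for a Skylake-SP system, using the article's figures:
# up to 28 cores per Platinum chip, 2 threads per core, up to 8 sockets.
MAX_SOCKETS = 8


def total_threads(sockets: int, cores_per_chip: int = 28, threads_per_core: int = 2) -> int:
    """Return how many hardware threads a system with `sockets` chips can run at once."""
    if not 1 <= sockets <= MAX_SOCKETS:
        raise ValueError(f"sockets must be between 1 and {MAX_SOCKETS}")
    return sockets * cores_per_chip * threads_per_core


if __name__ == "__main__":
    for n in (1, 2, 4, 8):
        print(f"{n} socket(s): {total_threads(n)} threads")
```

An eight-socket system yields 8 × 28 × 2 = 448 threads; a single chip yields 56.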

The top-end chips support up to six DDR4-2666 memory channels as well as 48 PCI Express Gen 3 lanes. These resources are shared among all cores, so virtual machine (VM) support can scale while every VM retains access to memory and I/O. System memory options support up to 1.5 Tbytes/socket.
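For a rough sense of scale, the sketch below estimates peak theoretical memory bandwidth per socket. It assumes the memory rating means DDR4-2666 (2,666 million transfers/s) and that each channel is 64 bits (8 bytes) wide; sustained bandwidth in real workloads is noticeably lower. The constant and function names are illustrative.

```python
# Peak theoretical DRAM bandwidth for one socket.
# Assumptions: DDR4-2666 = 2,666 million transfers/s per channel,
# 64-bit (8-byte) channels, no protocol or refresh overhead.
CHANNELS = 6
TRANSFERS_PER_SECOND = 2_666_000_000
BYTES_PER_TRANSFER = 8


def peak_bandwidth_gbytes_per_s(channels: int = CHANNELS) -> float:
    """Return peak bandwidth in Gbytes/s (1 Gbyte = 10**9 bytes)."""
    bytes_per_second = channels * TRANSFERS_PER_SECOND * BYTES_PER_TRANSFER
    return bytes_per_second / 1e9


if __name__ == "__main__":
    print(f"{peak_bandwidth_gbytes_per_s():.1f} Gbytes/s per socket")
```

With all six channels populated this works out to about 128 Gbytes/s per socket, or roughly 21.3 Gbytes/s per channel.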

The cache system has been redesigned. Each core has its own 1-Mbyte L2 cache. The shared L3 cache is now non-inclusive, so data held in the L2 caches is not necessarily replicated there. Each chip provides 1.375 Mbytes of L3 cache per core.
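Why non-inclusivity matters can be shown with a back-of-the-envelope capacity calculation. This hypothetical helper uses 1 Mbyte of L2 and 1.375 Mbytes of L3 per core. It treats the non-inclusive case as a best-case upper bound, since a line can still end up in both levels.

```python
# Upper bound on the distinct data a chip's private L2s plus shared L3 can hold.
# Per-core sizes: 1 Mbyte of L2 and 1.375 Mbytes of L3.
L2_PER_CORE_MB = 1.0
L3_PER_CORE_MB = 1.375


def unique_capacity_mb(cores: int, inclusive: bool) -> float:
    l2_total = cores * L2_PER_CORE_MB
    l3_total = cores * L3_PER_CORE_MB
    if inclusive:
        # An inclusive L3 holds a copy of every L2 line, so the L2s add nothing unique.
        return l3_total
    # A non-inclusive L3 need not keep those copies; in the best case
    # (no overlap at all) the two levels add up.
    return l2_total + l3_total


if __name__ == "__main__":
    for inclusive in (True, False):
        label = "inclusive" if inclusive else "non-inclusive"
        print(f"28 cores, {label}: up to {unique_capacity_mb(28, inclusive)} Mbytes")
```

For a 28-core chip, an inclusive design would cap unique cached data at 38.5 Mbytes, while the non-inclusive design allows up to 66.5 Mbytes.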

Intel’s Advanced Vector Extensions 512 (AVX-512) provide 512-bit vector processing for data types ranging from small integers up through double-precision floating point. Chips are available with up to two AVX-512 fused multiply-add (FMA) units per core.
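The register-width arithmetic behind AVX-512 is easy to tabulate. The sketch below assumes each of the two AVX-512 units is an FMA unit that completes one 512-bit fused multiply-add per cycle, and counts an FMA as two floating-point operations. Treat its output as a theoretical ceiling, not measured performance.

```python
# Elements per 512-bit AVX-512 register, plus a rough per-core peak FLOP rate.
# Assumptions: each FMA unit issues one 512-bit FMA per cycle; an FMA = 2 FLOPs.
VECTOR_BITS = 512
ELEMENT_BITS = {
    "int8": 8, "int16": 16, "int32": 32, "int64": 64,
    "float32": 32, "float64": 64,
}


def lanes(dtype: str) -> int:
    """Number of elements of `dtype` that fit in one 512-bit register."""
    return VECTOR_BITS // ELEMENT_BITS[dtype]


def peak_flops_per_cycle(dtype: str, fma_units: int = 2) -> int:
    """Theoretical floating-point operations per cycle per core."""
    if not dtype.startswith("float"):
        raise ValueError("peak FLOPs only apply to floating-point types")
    return fma_units * lanes(dtype) * 2


if __name__ == "__main__":
    for name in ELEMENT_BITS:
        print(f"{name}: {lanes(name)} lanes")
    print("float64 peak:", peak_flops_per_cycle("float64"), "FLOPs/cycle/core")
```

A chip with one FMA unit per core would have half these peak figures, which is why the unit count matters for vector-heavy code.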

The Xeon family is now divided into tiers named after metals instead of numbers (Fig. 2): Platinum, Gold, Silver, and Bronze. Platinum chips support up to 28 cores that can run 56 threads simultaneously. The Platinum and Gold chips include up to three 10.4-Gtransfer/s UltraPath Interconnect (UPI) links for combining chips into multisocket systems. Silver and Bronze target smaller systems with one or two sockets, including many embedded applications.
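To translate the UPI transfer rate into bytes, the sketch below assumes each 10.4-Gtransfer/s link moves 2 bytes of data per transfer in each direction (the commonly quoted 20.8 Gbytes/s per direction). It ignores protocol overhead, so the figures are raw upper bounds. The function name is hypothetical.

```python
# Raw UPI bandwidth for a chip's links.
# Assumptions: 2 data bytes per transfer per direction; protocol overhead ignored.
TRANSFERS_PER_SECOND = 10_400_000_000
BYTES_PER_TRANSFER = 2


def upi_bandwidth_gbytes(links: int = 3, bidirectional: bool = False) -> float:
    """Aggregate raw bandwidth in Gbytes/s across `links` UPI links."""
    per_direction = links * TRANSFERS_PER_SECOND * BYTES_PER_TRANSFER
    total = per_direction * 2 if bidirectional else per_direction
    return total / 1e9


if __name__ == "__main__":
    print(f"1 link:  {upi_bandwidth_gbytes(1):.1f} Gbytes/s per direction")
    print(f"3 links: {upi_bandwidth_gbytes(3):.1f} Gbytes/s per direction")
```

Under that assumption, a chip with three links has about 62.4 Gbytes/s of raw socket-to-socket bandwidth in each direction.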

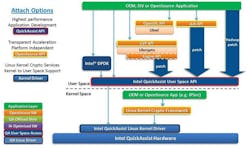

The Xeons are paired with Intel’s C62x-series Platform Controller Hub (PCH), which can include Intel’s QuickAssist Technology (QAT). The QAT stack (Fig. 3) shows the Linux hooks into the chipset’s security-related acceleration hardware. QAT hardware also accelerates data compression.

The new Xeon processors have improved support for artificial intelligence (AI) and machine-learning (ML) algorithms. Intel claims up to two orders of magnitude better performance than older Xeon E5 v3 platforms. Part of the gain comes from software optimization and part from instructions that handle the smaller data sizes common in deep-neural-network (DNN) applications.
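One common way DNN code exploits smaller data sizes is to quantize 32-bit floating-point weights to 8-bit integers. The sketch below shows generic symmetric linear quantization; it is not Intel’s specific method, and the function names are hypothetical. It also shows the payoff in vector terms: a 512-bit register holds 16 fp32 values but 64 int8 values.

```python
# Symmetric linear int8 quantization: a generic technique, shown only to
# illustrate why smaller data types help DNN workloads.
VECTOR_BITS = 512


def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Map floats to int8 codes in [-127, 127]; return the codes and the scale."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes: list[int], scale: float) -> list[float]:
    """Approximately reconstruct the original floats."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.27]
    codes, scale = quantize_int8(weights)
    print(codes)  # [50, -127, 0, 127]
    print("values per 512-bit register:", VECTOR_BITS // 32, "fp32 vs", VECTOR_BITS // 8, "int8")
```

The trade-off is a small rounding error (at most half the scale per value) in exchange for four times the values per register and a quarter of the memory traffic.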

High-end systems can now take advantage of native 100-Gbit/s Omni-Path Fabric interfaces (Fig. 4). Native Omni-Path support was previously available on Xeon Phi chips; now these server CPUs can join that mix. Omni-Path switches allow hundreds of chips or more to be linked into very large systems.

The PCH also provides up to four X722 10GbE/1GbE Ethernet ports. These support iWARP RDMA, which can also carry NVM Express over Fabrics (NVMe-oF) traffic. High-speed Ethernet can be critical for VM migration.

Intel has overhauled its server line with significant performance and feature improvements. Much of the work targets the cloud and enterprise, but most of the features will benefit embedded developers as well.