Intel continues to deliver high-performance Xeon server chips, and its latest Xeon will incorporate an Arria 10 FPGA (Fig. 1). The company has also released an FPGA PCI Express board. Both are tied together with a software framework that lets developers build and deploy FPGA-accelerated solutions in a standard fashion.

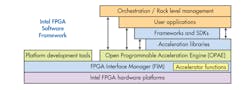

Let’s back up a moment and take a look at Intel’s FPGA framework (Fig. 2), which is based on the open-source Open Programmable Acceleration Engine (OPAE), available on GitHub. OPAE runs on top of the FPGA Interface Manager (FIM), which in turn manages the interface to the underlying FPGA hardware. The FIM handles configuration of the FPGA based on FPGA IP files, which also contain metadata about the hardware requirements and the acceleration functions made available to the software.

User applications, including orchestration and rack-level management tools, can access OPAE directly, or they can make use of frameworks or acceleration libraries. Intel provides a starting point for these, but developers and third parties can provide their own as well.

Generating FPGA IP is still a chore that may require a bit more expertise in tackling the design, but OpenCL-to-FPGA IP development tools can greatly simplify this job. Likewise, using packaged FPGA acceleration code is now no more complex than using a conventional runtime library. The FIM handles configuration and management of the underlying FPGAs, since many applications may be vying for the FPGA. It manages data movement, and the system can handle streaming data. At this point, it does not handle multiple applications using the FPGA simultaneously, since that would be a security nightmare. Sharing is also more challenging in terms of managing and loading FPGA IP, which typically assumes use of a complete FPGA. Stay tuned for enhancements in the future as users demand more functionality.
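The one-application-at-a-time rule is easier to picture with a small model. The Python sketch below is purely illustrative and is not the OPAE or FIM interface: the class `ExclusiveAccelerator`, its `session` method, and the file names are all hypothetical. It assumes that a request made while the FPGA is in use is simply refused, and it stands in for "loading FPGA IP" by recording which image the current owner asked for.

```python
import threading
from contextlib import contextmanager


class ExclusiveAccelerator:
    """Hypothetical manager that hands the whole FPGA to one application at a time."""

    def __init__(self):
        self._lock = threading.Lock()
        self.owner = None          # name of the application currently holding the FPGA
        self.loaded_image = None   # stand-in for the configuration loaded into the FPGA

    @contextmanager
    def session(self, app_name, image_name):
        # Refuse immediately if another application already holds the device.
        if not self._lock.acquire(blocking=False):
            raise RuntimeError(f"FPGA busy: held by {self.owner}")
        self.owner = app_name
        self.loaded_image = image_name  # a real manager would reconfigure the FPGA here
        try:
            yield self
        finally:
            # Release the device even if the application raised an error.
            self.owner = None
            self._lock.release()


if __name__ == "__main__":
    fpga = ExclusiveAccelerator()
    with fpga.session("app-a", "compress.bin"):
        try:
            # A second application asking for the FPGA is turned away.
            with fpga.session("app-b", "encrypt.bin"):
                pass
        except RuntimeError as err:
            print(err)
    # Once app-a is done, app-b can load its own image.
    with fpga.session("app-b", "encrypt.bin") as dev:
        print(dev.loaded_image)
```

Treating the FPGA as a single exclusive resource sidesteps both problems noted above: no two applications ever share the fabric, and each image can assume it owns the entire device.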

The FPGA configuration files are designed for a specific target, so an accelerator would need two files to address the two hardware platforms that Intel just delivered. On the other hand, this is no different than having specific driver files or configurations for hardware platforms running operating systems like Linux. This becomes important in all areas, including the enterprise where identical hardware is not necessarily the norm or a requirement. The approach allows applications to run on any collection of hardware assuming the base requirements are met.
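To make the "one file per target" idea concrete, here is a minimal Python sketch of how a runtime might pick the right configuration file for the hardware it finds. Everything in it is an assumption made for illustration: the `AcceleratorImage` and `Platform` records, the platform names, the file names, and the memory figures are invented and do not reflect the actual OPAE metadata format or the real boards.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class AcceleratorImage:
    """Hypothetical record: one FPGA configuration file plus its metadata."""
    path: str
    target_platform: str   # the hardware platform this file was built for
    function_name: str     # the acceleration function it provides
    min_memory_gb: int     # a sample base requirement


@dataclass(frozen=True)
class Platform:
    """Hypothetical description of the hardware found at run time."""
    name: str
    memory_gb: int


def select_image(images: Iterable[AcceleratorImage],
                 platform: Platform,
                 function_name: str) -> Optional[AcceleratorImage]:
    """Return the first image built for this platform whose requirements are met."""
    for image in images:
        if (image.function_name == function_name
                and image.target_platform == platform.name
                and image.min_memory_gb <= platform.memory_gb):
            return image
    return None


# One accelerator shipped as two files, one per (made-up) target platform.
IMAGES = [
    AcceleratorImage("compress_pcie_card.bin", "pcie-card", "compress", 4),
    AcceleratorImage("compress_integrated.bin", "integrated-xeon", "compress", 0),
]

if __name__ == "__main__":
    card = Platform("pcie-card", memory_gb=8)
    chosen = select_image(IMAGES, card, "compress")
    print(chosen.path if chosen else "no matching image")
```

The application only asks for a function by name; which file gets loaded depends on the platform, much as an operating system picks a driver for the hardware it detects.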

Now back to the hardware announcements.

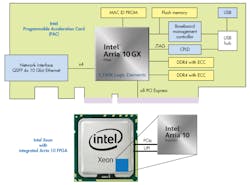

The Intel Programmable Acceleration Card (Fig. 3) includes an Arria 10 GX FPGA with 1150K logic elements, SERDES links to four QSFP 10 Gbit/s Ethernet ports, and an x8 PCI Express interface. The FPGA has a pair of DDR4 memory controllers with access to on-board memory that has ECC support. This configuration is not new. A wide variety of third-party FPGA boards are already in use. In theory, these could also be supported by the Intel FPGA framework. Of course, the amount of work required to do so will vary.

The really new item in the mix is the Xeon with an integrated FPGA. The Arria FPGA is tied to the processor complex using PCI Express and the Intel UltraPath Interconnect (UPI). UPI is in Intel’s latest Xeons, and some Xeon Phi implementations have it as well.

This is not Intel’s first whirl at a CPU/FPGA combination. The Intel E600C combined an Arria II FPGA with an Intel Atom core back in 2010. It didn’t quite take the world by storm, as the FPGA tools and a platform like this latest one were missing. The chips did address some interesting applications, but this was a case of the hardware being ahead of the software.

Developers already using Intel FPGAs and FPGA development tools should find migration to this platform relatively easy. Intel is targeting the enterprise at this point, but the framework is applicable to all embedded applications. Military and avionics would be an ideal space, but certifications would need to include the software framework. Likewise, application areas like automotive and medical will have similar requirements. Still, there are plenty of industrial and commercial applications where access to an FPGA will enhance a product. Even the latest artificial intelligence craze could benefit from easier FPGA integration. Neural network and machine learning accelerators, like Intel’s own Myriad X, provide significant speed-ups, but typically address a subset of the computation. An FPGA could incorporate more of the computation chores or provide a new approach that fixed hardware could not adapt to. For example, hardware that targets deep neural networks (DNN) may not be as suitable for approaches like spiking neural networks (SNN) that are starting to emerge.

The boards and chips will be showing up in 2018. Of course, a select few will be playing with these before then. The software and documentation are coming online now.

There have been other FPGA frameworks in the past, but they lacked the backing of a behemoth like Intel that also has a vested interest in the hardware. This is likely to spur the same type of renaissance that GPUs enjoyed when they were opened to developers. Prior to that, a GPU was a closed platform for driving graphic displays. These days, a GPU is as likely to be a compute-only platform as it is to handle display chores. FPGAs could become as common. Standalone FPGA usage has risen significantly; large and small FPGAs are being used in devices where they tend to be hidden from view and never highlighted, since they often contain the secret sauce for the product.