
Artificial intelligence (AI) is fast becoming one of the most important areas of digital expansion in history. The CEO of Applied Materials recently stated that “the war” for AI leadership will be the “biggest battle of our lifetime.”¹ AI promises to transform almost every industry, including healthcare (diagnosis, treatments), automotive (autonomous driving), manufacturing (robot assembly), and retail (purchasing assistance).

Although the field of AI has been around since the 1950s, only very recently have computing power and AI methods reached a tipping point for major disruption and rapid advancement. Advances in both areas create a tremendous need for much higher memory bandwidth.

Computing Power

Those familiar with computing hardware know that CPUs historically became faster by raising clock speeds with each product generation. However, physics, chiefly the power and heat that come with higher frequencies, now limits how much performance can be gained that way. Instead, the industry has shifted toward multicore architectures: multiple processor cores operating in parallel.

Modern data-center CPUs now feature up to 32 cores. GPUs, traditionally used for graphics applications, were designed from the start with a parallel architecture; these can have over 5,000 simpler (more specialized) cores. GPUs have become major players in AI applications primarily because they’re very fast at matrix multiplication, a key element of machine learning. The shift toward more cores underscores the growing demand for data to be fed into these cores to keep them active—driving the need for much greater memory bandwidth.
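To make the matrix-multiplication point concrete, here is a minimal Python sketch (not from the article) of why the operation parallelizes so naturally: every output row is an independent set of dot products, so rows can be handed to separate workers in any order. The function names are hypothetical, and Python threads only illustrate the decomposition; they will not show the real speedups that hardware cores deliver. Note also that every worker reads all of `b`, a hint of why more cores mean more data to deliver.

```python
from concurrent.futures import ThreadPoolExecutor

def row_times_matrix(row, b):
    """Compute one output row: each entry is the dot product of `row` with one column of b."""
    cols = len(b[0])
    return [sum(row[k] * b[k][j] for k in range(len(row))) for j in range(cols)]

def parallel_matmul(a, b, workers=4):
    """Multiply a (m x k) by b (k x n), handing each output row to a separate worker."""
    if len(a[0]) != len(b):
        raise ValueError("inner dimensions must match")
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Rows do not depend on one another, so they can be computed in any order;
        # pool.map still returns the results in the original row order.
        # Every worker reads the whole of b, so adding workers adds data demand.
        return list(pool.map(lambda row: row_times_matrix(row, b), a))

a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(parallel_matmul(a, b))  # [[19, 22], [43, 50]]
```

A GPU applies the same idea at a much finer grain, assigning small tiles of the output to thousands of cores at once.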

Methods Used in AI

The fastest-advancing area within AI has been machine learning, and more specifically, deep learning. Deep learning is a subset of machine-learning methods based on learning data representations, as opposed to task-specific algorithms. In the past, AI used hand-coded "rules" to make decisions. This had very limited success.

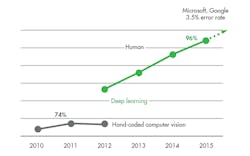

1. Deep neural networks improve the accuracy that’s vital in computer-vision systems. (Source: Nvidia, FMS 2017)

A breakthrough came five years ago with the adoption of deep-learning techniques based on deep neural networks (DNNs). Google Translate, for one, became much more accurate when Google deployed a DNN platform last year, yielding more improvement than in the previous 10 years combined. Likewise, as shown in Figure 1, image recognition improved dramatically when DNNs replaced earlier hand-coded techniques.

Deep learning involves training a DNN on vast amounts of data. Once trained, the AI system can make better predictions from new input. Training involves millions of matrix multiplications, a task well suited to parallel, multicore compute architectures, and every one of those cores must be kept supplied with data. This is why AI needs much greater memory bandwidth.
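The link between matrix math and memory bandwidth can be sketched with the roofline model, a common back-of-the-envelope way to tell whether a computation is limited by compute or by memory. The assumptions here are all illustrative: fp32 operands (4 bytes), each matrix crossing the memory bus exactly once, and a hypothetical 15-TFLOP/s processor. The two bandwidths are the GDDR and four-cube HBM2 figures quoted later in this article.

```python
BYTES_PER_ELEMENT = 4  # assumed fp32 operands
PEAK = 15e12           # hypothetical processor peak, FLOP/s

def matmul_flops(m: int, k: int, n: int) -> int:
    """FLOPs for C[m x n] = A[m x k] @ B[k x n]: one multiply and one add per term."""
    return 2 * m * k * n

def matmul_min_bytes(m: int, k: int, n: int) -> int:
    """Lower bound on memory traffic: read A and B once, write C once."""
    return BYTES_PER_ELEMENT * (m * k + k * n + m * n)

def attainable_flops(m: int, k: int, n: int, peak: float, bandwidth: float) -> float:
    """Roofline estimate: the lesser of peak compute and bandwidth x arithmetic intensity."""
    intensity = matmul_flops(m, k, n) / matmul_min_bytes(m, k, n)  # FLOP per byte moved
    return min(peak, bandwidth * intensity)

for name, bw in [("GDDR, 384 GB/s", 384e9), ("4x HBM2, 1 TB/s", 1024e9)]:
    mv = attainable_flops(4096, 4096, 1, PEAK, bw)     # matrix-vector: little data reuse
    mm = attainable_flops(4096, 4096, 4096, PEAK, bw)  # large square multiply: heavy reuse
    print(f"{name}: mat-vec {mv / 1e9:.0f} GFLOP/s, mat-mat {mm / 1e12:.1f} TFLOP/s")
```

In this model the large square multiply reaches the compute peak with either memory, while the low-reuse matrix-vector product stays far below peak and speeds up almost in proportion to bandwidth. Real kernels fall between these extremes, which is why faster memory keeps more of the cores busy.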

The Need for High Bandwidth Memory

The main memory in today’s computers is DRAM (dynamic random access memory), whose system bandwidth tops out at 136 GB/s. Because of their multicore nature, GPUs use a special form of DRAM called GDDR (graphics double-data-rate DRAM) that can deliver system bandwidth of up to 384 GB/s. But the data-hungry, multicore processing units needed for machine learning require even greater memory bandwidth to keep their cores fed with data. This is where high bandwidth memory (HBM) is beginning to make a critical contribution, one that will grow by orders of magnitude over the next several years.
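Peak figures like these follow from simple arithmetic: channels times bus width in bytes times transfer rate. The sketch below assumes two configurations the article does not specify, eight 64-bit channels of DDR4-2133 and a 384-bit GDDR bus running at 8 Gb/s per pin. They are chosen only because they reproduce the quoted 136 GB/s and 384 GB/s, not because the author necessarily used them.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gt_s: float, channels: int = 1) -> float:
    """Theoretical peak in GB/s: channels x (bus width in bytes) x billions of transfers per second."""
    return channels * (bus_width_bits / 8) * data_rate_gt_s

# Assumed example configurations (not taken from the article):
ddr4 = peak_bandwidth_gb_s(64, 2.133, channels=8)  # 8 channels of 64-bit DDR4-2133
gddr = peak_bandwidth_gb_s(384, 8.0)               # one 384-bit GDDR bus at 8 Gb/s per pin
print(f"DDR4: {ddr4:.1f} GB/s, GDDR: {gddr:.0f} GB/s")
```

These are theoretical peaks; sustained bandwidth in real systems is lower.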

HBM is a specialized form of stacked DRAM that’s integrated with processing units to increase speed while reducing latency, power, and size. These stacks are known as memory "cubes," each of which can contain up to eight DRAM dies.

HBM is now an industry standard via JEDEC (Joint Electron Device Engineering Council), and its current iteration, HBM2, can support 8 GB of memory per cube at 256 GB/s of throughput. This improves system performance and energy efficiency, enhancing the overall effectiveness of data-intensive, high-volume applications that depend on machine learning, parallel computing, and graphics rendering.
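Both per-cube figures can be reconstructed from how a stack is organized. The sketch below assumes commonly cited HBM2 parameters: a 1,024-bit interface per stack at 2 Gb/s per pin, and eight 8-Gb dies in an 8-high stack. Treat the constants as illustrative assumptions, not as a reading of the JEDEC specification.

```python
# Illustrative HBM2 parameters (assumed, not quoted from the article)
INTERFACE_BITS = 1024  # data I/O width of one HBM2 stack ("cube")
PIN_RATE_GBPS = 2.0    # data rate per pin, Gb/s
DIES_PER_STACK = 8     # 8-high stack, the maximum the article mentions
DIE_DENSITY_GBIT = 8   # capacity of each DRAM die, in gigabits

def stack_bandwidth_gb_s(width_bits: int, pin_rate_gbps: float) -> float:
    """Peak bandwidth of one stack; dividing by 8 converts gigabits/s to gigabytes/s."""
    return width_bits * pin_rate_gbps / 8

def stack_capacity_gb(dies: int, die_gbit: int) -> float:
    """Capacity of one stack in gigabytes."""
    return dies * die_gbit / 8

bw = stack_bandwidth_gb_s(INTERFACE_BITS, PIN_RATE_GBPS)
cap = stack_capacity_gb(DIES_PER_STACK, DIE_DENSITY_GBIT)
print(f"one HBM2 cube: {cap:.0f} GB at {bw:.0f} GB/s")  # one HBM2 cube: 8 GB at 256 GB/s
```

The key design choice is width over speed: each HBM2 pin runs slower than a GDDR pin, but a stack has far more of them, which the short connections through the interposer make practical.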

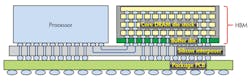

2. How a high-bandwidth-memory structure connects to a processor. (Source: Samsung)

When four HBM2 cubes are connected to a processor through a silicon interposer, the configuration provides 32 GB of memory with a bandwidth of 1 TB/s. Figure 2 shows an example of an HBM structure and how it connects to a processor.
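The four-cube totals are straightforward multiples of the per-cube HBM2 figures. As an added illustration (not a figure from the article), the sketch also computes how long it would take to read every byte of that memory once, assuming the full peak bandwidth could be sustained, which real systems do not quite achieve.

```python
CUBES = 4
GB_PER_CUBE = 8.0      # HBM2 capacity per cube, from the article
GB_S_PER_CUBE = 256.0  # HBM2 bandwidth per cube, from the article

total_capacity_gb = CUBES * GB_PER_CUBE       # 32 GB
total_bandwidth_gb_s = CUBES * GB_S_PER_CUBE  # 1,024 GB/s, i.e., roughly 1 TB/s

# Time to read every byte of the attached HBM once (a full memory sweep) at peak bandwidth
sweep_ms = total_capacity_gb / total_bandwidth_gb_s * 1000

print(f"{total_capacity_gb:.0f} GB, {total_bandwidth_gb_s:.0f} GB/s, full sweep in {sweep_ms:.2f} ms")
```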

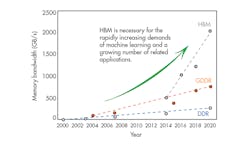

HBM is continuing to rapidly evolve to meet the needs of machine learning. The technology has already gained significant traction in the market, going from virtually no revenue a couple of years ago to what will likely be billions of dollars over the next few years. The next iteration, expected to become available in about three years, is now projected to enable a system bandwidth of 512 GB/s per cube. Figure 3 compares the memory bandwidth that’s anticipated with HBM technology to that of GDDR, the next fastest memory.

3. The graph tracks memory bandwidth progression over time, and how HBM stacks up against GDDR and DDR in the coming years. (Source: Samsung)
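The article does not say how the next iteration will double per-cube bandwidth. The sketch below simply inverts the bandwidth arithmetic to show what two hypothetical routes would demand: keeping HBM2's 1,024-bit interface and raising the per-pin rate, or doubling the interface width. Neither is presented as the actual roadmap.

```python
def required_pin_rate_gbps(target_gb_s: float, width_bits: int) -> float:
    """Per-pin data rate (Gb/s) needed to reach target_gb_s over a bus width_bits wide."""
    return target_gb_s * 8 / width_bits

TARGET_PER_CUBE = 512.0  # projected next-generation bandwidth per cube, from the article

# Hypothetical routes to the target: keep a 1,024-bit interface, or double it.
for width in (1024, 2048):
    rate = required_pin_rate_gbps(TARGET_PER_CUBE, width)
    print(f"{width}-bit interface: {rate:.1f} Gb/s per pin")

# Four cubes per processor, as in the HBM2 configuration described earlier, would then reach:
print(f"4 cubes: {4 * TARGET_PER_CUBE:.0f} GB/s")
```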

Smarter, Faster Networks

HBM is not only being used to speed the flow of local data to processors; designers are also looking at ways to leverage it to speed the movement of data between computing systems.

HBM2 is being designed into high-end switching ASICs (application-specific integrated circuits) that operate at terabit speeds, giving them a significant edge in routing performance. On top of that, new breeds of data-center ASICs used in servers are leveraging HBM, which points toward routing capability much closer to the AI compute nodes than traditional networks provide. Expect smarter, faster networks in the near future that will play a pivotal role in the life-enhancing expansion of machine intelligence.
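One way to see why a terabit switch benefits from HBM is to size its packet-buffer bandwidth. The sketch below uses a deliberately simplified shared-buffer model (an assumption, not a description of any particular ASIC): in the worst case every arriving byte is written to memory once and read back once, so the buffer needs twice the switch's line rate. The switch capacities are hypothetical; the 256-GB/s per-stack figure is the HBM2 number quoted earlier in the article.

```python
import math

HBM2_STACK_GB_S = 256.0  # per-cube HBM2 bandwidth quoted in the article

def buffer_bandwidth_gb_s(switch_capacity_gbps: int) -> float:
    """Worst-case buffer bandwidth in a simplified shared-buffer model:
    every byte arriving at line rate is written once and read once."""
    return 2 * switch_capacity_gbps / 8  # write + read, gigabits/s -> gigabytes/s

def stacks_needed(switch_capacity_gbps: int) -> int:
    """Smallest number of HBM2 stacks whose combined bandwidth covers the buffer traffic."""
    return math.ceil(buffer_bandwidth_gb_s(switch_capacity_gbps) / HBM2_STACK_GB_S)

for capacity in (1_600, 3_200):  # hypothetical switch capacities, Gb/s
    print(f"{capacity / 1000:.1f} Tb/s switch: "
          f"{buffer_bandwidth_gb_s(capacity):.0f} GB/s of buffer bandwidth, "
          f"{stacks_needed(capacity)} HBM2 stack(s)")
```

Real switches often buffer only part of their traffic, so this worst case overstates the requirement. Even so, it shows why DDR-class bandwidth falls short at terabit speeds.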

Doorway to Deep Learning

We are in the “gold rush” era of AI. Recent developments in deep neural networks are enabling major improvements in usable machine intelligence across the globe. Computer vision has already reached a milestone: machines can now learn on their own to recognize a cat simply by being shown lots of images, without being told the distinguishing features of a cat.

Computer vision and language represent the tip of the iceberg. Other applications leveraging machine intelligence range from high-frequency trading in the financial sector to tailoring product recommendations in eCommerce.

HBM is an essential technology for driving the AI revolution. Entire industries are about to be transformed, creating huge opportunities now for businesses that stay ahead of the curve. Make no mistake: the technology is complex. Only a couple of today’s vendors can supply HBM2 in volume and also have the expertise to deliver future AI/HPC generations with the increasing speeds and densities needed to meet growing market demand for deep learning.

However, that’s sufficient to move us into the world of AI in grand fashion. When the stakes are this high, leading corporations know they must secure the memory technology that will give their AI-impacted, networked systems the greatest competitive edge.

Tien Shiah is Product Marketing Manager for High Bandwidth Memory at Samsung Semiconductor Inc. In this capacity, he serves as the company’s product consultant, market expert, and evangelist for HBM in the Americas, focused on providing a clear understanding of the tremendous benefits offered by HBM in the enterprise and client marketplaces. He brings more than 16 years of product marketing experience from the semiconductor and storage industries, and has presented at a number of industry conferences, such as Flash Memory Summit, the Storage Developer Conference, and Dell EMC World. He holds an MBA from McGill University (Montreal, Canada), and an Electrical Engineering degree from the University of British Columbia.

Reference:

1. Interview with Jim Cramer, CNBC, 9/28/17.