PCI Express (PCIe) architecture is the ubiquitous load/store IO technology. Nonetheless, a host of myths and misunderstandings hold many engineers back from applying PCIe technology as broadly and effectively as they could. We’ll take a look at some of the common myths and shine a light on this flexible and efficient IO technology.

1. The PCIe architecture is only for PCs.

The PCIe architecture has been used in all types of computing platforms since its inception, from PCs to mainframes, as well as in many varieties of embedded and industrial platforms. It has also been adopted in phones to meet their increasing performance needs. PCIe-based products are available in many form factors, ranging from an 11.5- × 13-mm BGA SSD for small mobile and embedded platforms to card form factors capable of delivering hundreds of watts of power to high-performance devices.

Several other myths have sprung from this one, as we’ll discuss further below.

2. PCIe architecture is solely an electrical/physical interface.

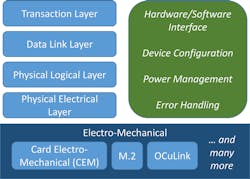

Fig. 1. PCI Express specification layering.

The PCIe Base Specification defines the foundation of PCIe architecture, including detailed specifications for electrical signaling at multiple data rates and link widths. Beyond that, it defines a layered architecture (Fig. 1) that specifies how PCIe technology operates all the way up through the software level and beyond.

- The Physical Layer, through its logical and electrical sub-blocks, defines how the physical configuration of the link is established and, when needed, re-established.

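- The Data Link Layer defines how reliable communication across the link is established and maintained, using sequence numbers, a link CRC (LCRC), and acknowledgment and replay of packets.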

- The Transaction Layer defines packet formatting, processing, and addressing, and supports architectural mechanisms for configuration, power management, and error handling. It also has many other capabilities, including optional advanced features such as virtual channels, Precision Time Measurement (PTM), and more.
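To make “packet formatting” concrete, here is a minimal Python sketch that packs the 3-DW header of a 32-bit Memory Read Request TLP. It is simplified: only the Fmt, Type, TC, Length, Requester ID, Tag, byte-enable, and address fields are filled in, while TD, EP, Attr, AT, 10-bit tags, and 64-bit addressing are left out. The function name `mem_read_header` is hypothetical.

```python
import struct

# Fmt/Type encodings for a Memory Read Request with a 3-DW header (32-bit address).
FMT_3DW_NO_DATA = 0b000
TYPE_MEM = 0b00000


def mem_read_header(requester_id: int, tag: int, address: int,
                    length_dw: int, tc: int = 0) -> bytes:
    """Pack a simplified 12-byte Memory Read Request TLP header (sketch only)."""
    if address % 4:
        raise ValueError("this sketch only handles DW-aligned addresses")
    if not 1 <= length_dw <= 1024:
        raise ValueError("Length must be 1..1024 DW")
    length_field = length_dw & 0x3FF         # a length of 1024 DW is encoded as 0
    first_be = 0xF                           # all four bytes of the first DW
    last_be = 0xF if length_dw > 1 else 0x0  # must be 0 for single-DW requests
    dw0 = (FMT_3DW_NO_DATA << 29) | (TYPE_MEM << 24) | ((tc & 0x7) << 20) | length_field
    dw1 = ((requester_id & 0xFFFF) << 16) | ((tag & 0xFF) << 8) | (last_be << 4) | first_be
    dw2 = address & 0xFFFFFFFC               # bits [1:0] are not part of the address
    # Big-endian packing puts byte 0 (Fmt/Type) first, matching transmission order.
    return struct.pack(">III", dw0, dw1, dw2)


# Read 4 DW from 0xFEDC0000 for Requester ID 0x0100 (bus 1, device 0, function 0).
print(mem_read_header(0x0100, tag=5, address=0xFEDC0000, length_dw=4).hex())
```

A Memory Write Request differs mainly in its Fmt value (0b010, a 3-DW header with data) and in carrying a data payload after the header.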

In addition, all major operating systems support “Class” drivers for many different types of devices. Finally, there are numerous electromechanical form-factor specifications, including many sizes of add-in cards (e.g., Card Electromechanical [CEM] and M.2) and cables (e.g., OCuLink).

3. PCIe-based technology consumes a lot of power.

The PCIe architecture can deliver very high bandwidth, yet it also supports idle power levels in the tens of microwatts. It also supports intermediate power states that trade resume latency against power consumption, according to the requirements of a specific application.

PCIe power-management features operate cooperatively through both autonomous hardware mechanisms and software-managed mechanisms. This enables systems and devices to carefully optimize power consumption and adapt to changing requirements within microseconds. Multiple vendors provide PHY IP and report active power consumption of less than 5 mW per Gb/s per lane (equivalent to 5 pJ/bit) and standby power of less than 10 µW per lane.
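As a back-of-the-envelope check, the sketch below turns those per-lane bounds into a whole-link budget. It assumes the “per Gb/s” figure applies to the raw line rate (so 16 GT/s counts as 16 Gb/s per lane) and that the bounds scale linearly with lane count; real PHY power depends on the implementation, and the function name is hypothetical.

```python
# Vendor-reported upper bounds quoted in the text, per lane.
ACTIVE_MW_PER_GBPS = 5.0    # < 5 mW per Gb/s per lane (1 mW per Gb/s = 1 pJ/bit)
STANDBY_UW_PER_LANE = 10.0  # < 10 uW per lane


def link_power_bounds(rate_gtps: float, lanes: int) -> tuple[float, float]:
    """Return (active_mW, standby_uW) upper bounds for one link's PHY.

    Assumption: the raw line rate in GT/s is used as the Gb/s figure.
    """
    active_mw = ACTIVE_MW_PER_GBPS * rate_gtps * lanes
    standby_uw = STANDBY_UW_PER_LANE * lanes
    return active_mw, standby_uw


active, standby = link_power_bounds(16.0, 4)
print(f"x4 at 16 GT/s: active < {active:.0f} mW, standby < {standby:.0f} uW")
```

For an x4 link at 16 GT/s this gives under 320 mW active and under 40 µW in standby, a ratio of roughly 8,000 to 1.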

4. PCIe architecture cannot support complex topologies.

To establish a robust and simple architecture for most use cases, the baseline topology for the PCIe architecture is a “tree” structure connected to a “Root Port.” However, mature and well-supported techniques enable more complicated structures to be supported—both for improved RAS (reliability, availability, and serviceability) and for higher performance. With moderate development, systems can be implemented with virtually any type of topology to connect computing, storage, and other IO elements. Multiple vendors provide products capable of supporting redundant links and arbitrarily complex topologies.

5. PCIe technology won’t fit into smaller devices.

At its heart, the driving force behind the original development of PCIe architecture was to provide the greatest possible bandwidth from the smallest possible number of wires. This, in turn, enables small devices and connectors and makes efficient use of board routing space. At 16 GT/s (the PCIe 4.0 data rate), the PCIe architecture supplies a raw bandwidth of 8 Gb/s per high-speed signal pin, since each 16-Gb/s differential pair occupies two pins.
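The per-pin figure follows from simple arithmetic. The sketch below assumes one-bit-per-transfer (NRZ) signaling, as used at 16 GT/s, and counts only the high-speed differential signal pins (two per pair, with one transmit pair and one receive pair per lane), not power or ground pins.

```python
PINS_PER_PAIR = 2   # differential signaling: one D+ and one D- pin
PAIRS_PER_LANE = 2  # one transmit pair and one receive pair


def raw_gbps_per_pin(rate_gtps: float) -> float:
    """Raw (pre-encoding) bandwidth carried per high-speed signal pin.

    With NRZ signaling each transfer moves one bit, so a differential pair
    carries rate_gtps Gb/s and each of its two pins accounts for half.
    """
    return rate_gtps / PINS_PER_PAIR


def raw_gbps_per_lane(rate_gtps: float) -> float:
    """Raw bandwidth of one lane, counting both directions."""
    return rate_gtps * PAIRS_PER_LANE


print(raw_gbps_per_pin(16.0))                                      # 8.0
print(raw_gbps_per_lane(16.0) / (PINS_PER_PAIR * PAIRS_PER_LANE))  # 8.0
```

Both views agree: a lane moves 32 Gb/s in total over four signal pins.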

Fig. 2. A 16- × 20-mm BGA for solid-state storage on PCIe.

The PCI Express M.2 specification defines a range of removable modules down to about 5 cm², and soldered-down BGA packages as small as 11.5 × 13 mm for storage applications (see the 16- × 20-mm BGA in Fig. 2). PCIe technology has rich architectural support for power budgeting and management, enabling the optimized thermal management that is essential in constrained systems.

6. The PCIe specification is difficult to implement.

The PCIe ecosystem has a rich support structure, including scores of IP providers, test-equipment manufacturers, component vendors, and verification/simulation tools. In addition, the PCIe architecture has been fine-tuned over the last couple of decades and through thousands of platform implementations. The PHY Interface for the PCI Express Architecture (PIPE) specification provides a fully defined modular interface between the controller and PHY IP blocks, greatly simplifying system-on-chip (SoC) integration.

PCI-SIG holds DevCons around the world annually that help educate our members on PCIe implementation. It also offers a mature and thorough compliance program through which products that have successfully completed rigorous testing procedures are listed on the PCI-SIG Integrators List.

PCIe architecture was specifically developed to enable broad deployment with minimal development costs for complete end-to-end solutions, covering considerations from board routing, electromagnetic interference, power delivery, and thermal management up the stack through operating-system and device-driver software. “Plug and Play” device discovery and configuration was built into the PCIe specification from the outset and is supported in all major operating systems. It enables components from multiple vendors to be rapidly composed into a functioning system with minimal engineering.
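Part of what “Plug and Play” automates is bus numbering during enumeration: software walks the hierarchy depth-first and programs each bridge's primary, secondary, and subordinate bus numbers. The sketch below models only that step. The `Bridge` class is a hypothetical stand-in; real enumeration discovers bridges by reading Vendor ID and Header Type registers in configuration space, and also assigns memory and IO windows.

```python
from dataclasses import dataclass, field


@dataclass
class Bridge:
    """Hypothetical stand-in for a PCI-to-PCI bridge (Root Port or switch port)."""
    name: str
    children: list["Bridge"] = field(default_factory=list)
    primary: int = -1
    secondary: int = -1
    subordinate: int = -1


def assign_buses(bridges: list[Bridge], bus: int, next_bus: int) -> int:
    """Depth-first bus numbering for the bridges found on `bus`.

    Returns the next unused bus number.
    """
    for br in bridges:
        br.primary = bus
        br.secondary = next_bus
        next_bus += 1
        # Number everything below this bridge before moving to its siblings.
        next_bus = assign_buses(br.children, br.secondary, next_bus)
        br.subordinate = next_bus - 1  # highest bus number reachable below it
    return next_bus


# Two Root Ports on bus 0; RP0 leads to a switch with two downstream ports.
dsp0, dsp1 = Bridge("DSP0"), Bridge("DSP1")
usp = Bridge("USP", [dsp0, dsp1])
rp0, rp1 = Bridge("RP0", [usp]), Bridge("RP1")
assign_buses([rp0, rp1], bus=0, next_bus=1)
for b in (rp0, usp, dsp0, dsp1, rp1):
    print(b.name, b.primary, b.secondary, b.subordinate)
```

Here RP0 ends up owning buses 1 through 4, so configuration requests for those buses are forwarded through it, while RP1 owns only bus 5.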

7. PCIe technology has a lot of baggage.

On the contrary, the PCIe architecture provides unequaled flexibility while drawing on mature technology elements suited to almost any need. It offers flexibility in run-time operation within a carefully constructed framework that enables “it just works” solutions for common use cases.

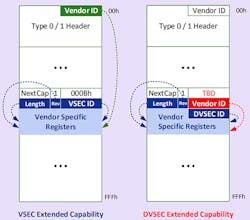

Because the PCIe specification provides a complete interface definition at all levels of the stack (Fig. 1), the non-recurring engineering costs of assembling a platform-level solution can be significantly reduced or even eliminated. Specific platform needs not satisfied by the PCIe architecture itself can be cleanly accommodated through an architectural framework for vendor- and implementation-specific capabilities, such as the Vendor-Specific Extended Capability (VSEC). The PCIe architecture also provides the Designated-Vendor-Specific Extended Capability (DVSEC), which lets a vendor define registers and a programming interface that other vendors can incorporate into their silicon (Fig. 3).

Fig. 3. Comparing the Vendor-Specific Extended Capability (VSEC) with the Designated-Vendor-Specific Extended Capability (DVSEC).
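The practical difference shows up when software walks the extended capability list, which starts at offset 0x100 of configuration space. A VSEC is interpreted in the context of the Function's own Vendor ID, whereas a DVSEC carries its own designated Vendor ID and DVSEC ID, so software can recognize it in any vendor's silicon. The Python sketch below finds DVSEC instances in a raw 4-KiB configuration-space image. The capability IDs (0x000B for VSEC, 0x0023 for DVSEC) and header layouts follow the Base Specification; the function names and the synthetic example values are hypothetical.

```python
import struct

EXT_CAP_START = 0x100
CAP_ID_VSEC = 0x000B
CAP_ID_DVSEC = 0x0023


def read32(cfg: bytes, off: int) -> int:
    return struct.unpack_from("<I", cfg, off)[0]  # configuration space is little-endian


def find_dvsecs(cfg: bytes) -> list[tuple[int, int, int]]:
    """Return (offset, DVSEC Vendor ID, DVSEC ID) for each DVSEC found."""
    found, seen = [], set()
    off = EXT_CAP_START
    while off >= EXT_CAP_START and off + 12 <= len(cfg) and off not in seen:
        seen.add(off)                  # guard against malformed, looping lists
        hdr = read32(cfg, off)
        if hdr in (0, 0xFFFFFFFF):     # no extended capabilities present
            break
        cap_id = hdr & 0xFFFF          # [15:0] capability ID; [19:16] version
        if cap_id == CAP_ID_DVSEC:
            h1 = read32(cfg, off + 4)  # Header 1: Vendor ID [15:0], Rev [19:16], Length [31:20]
            h2 = read32(cfg, off + 8)  # Header 2: DVSEC ID [15:0]
            found.append((off, h1 & 0xFFFF, h2 & 0xFFFF))
        off = (hdr >> 20) & 0xFFC      # [31:20] next capability offset, DW-aligned
    return found


# Synthetic config space: a VSEC at 0x100 chained to a DVSEC at 0x140.
cfg = bytearray(4096)
struct.pack_into("<I", cfg, 0x100, CAP_ID_VSEC | (1 << 16) | (0x140 << 20))
struct.pack_into("<III", cfg, 0x140,
                 CAP_ID_DVSEC | (1 << 16),  # next offset 0 ends the list
                 0xABCD | (0x10 << 20),     # hypothetical vendor ID, 16-byte length
                 0x0007)                    # hypothetical DVSEC ID
print([hex(v) for v in find_dvsecs(bytes(cfg))[0]])
```

The VSEC at 0x100 is skipped because its meaning depends on the Function's Vendor ID, which this generic scan does not consult.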

8. The PCIe architecture is just another IO technology.

Virtually all IO technologies in the industry today require a host controller between a processing element and the IO itself. PCIe, by contrast, is a “load/store” architecture, which makes it more flexible and efficient than controller-based IOs. A load/store architecture maps directly onto the fundamental operations of most processing and memory elements. That means simple operations can complete in a few hundred nanoseconds, while arbitrarily complex operations can still be implemented efficiently.

For example, a PCIe-based product can pass a complex data structure stored in central memory from one processing element to the next simply by passing a pointer to that structure. Alternatively, PCIe can support operation in systems that have no system memory available (e.g., when system memory is disabled to save power).
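In load/store terms, “passing a pointer” means the producer issues one small write containing an address, and the consumer fetches whatever it needs with its own reads. The toy Python model below illustrates that flow. `host_mem`, `Device`, its doorbell register, and the descriptor layout are all hypothetical, and a plain `bytearray` stands in for memory reached over the link.

```python
import struct

host_mem = bytearray(64 * 1024)  # hypothetical stand-in for central memory
DESC_FMT = "<QI"                 # hypothetical descriptor: 64-bit buffer address, 32-bit length


class Device:
    """Hypothetical consumer with a single 64-bit doorbell register."""

    def __init__(self) -> None:
        self.doorbell = 0

    def mmio_write64(self, value: int) -> None:
        # Only the 8-byte pointer crosses the link in this store.
        self.doorbell = value

    def fetch_descriptor(self) -> tuple[int, int]:
        # The consumer dereferences the pointer with its own reads.
        return struct.unpack_from(DESC_FMT, host_mem, self.doorbell)


# Producer: build the structure in memory, then hand over only its address.
struct.pack_into(DESC_FMT, host_mem, 0x1000, 0x2000, 4096)
dev = Device()
dev.mmio_write64(0x1000)
buf_addr, length = dev.fetch_descriptor()
print(hex(buf_addr), length)
```

However large the structure behind it, the handoff itself stays a single small write.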

The PCIe architecture also provides mechanisms for robust, high-performance address-space protection and virtualized addressing. In addition, PCIe technology offers a variety of highly efficient, lightweight mechanisms well suited to message-based communication paradigms.

9. PCIe technology doesn’t support peer-to-peer communication.

The ability for processing elements to communicate directly with each other without the need to pass through a central point—often called “peer-to-peer” communication—has always been supported by PCIe technology and is implemented in many different types of systems.

PCIe switches are required to implement full peer-to-peer support, and most PCIe host silicon (the “Root Complex”) also supports peer-to-peer communication. Address-based and ID-based routing mechanisms support memory read/write and message communication paradigms. Architecturally defined mechanisms, such as Access Control Services (ACS), provide system-level robustness by detecting and blocking inappropriate peer-to-peer communication.
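Address-based peer-to-peer routing can be pictured as a window lookup inside a switch. The sketch below is a simplified model that describes each downstream port by a single inclusive memory window (real ports have separate non-prefetchable and prefetchable windows programmed through Base/Limit registers). The optional `acs_p2p_redirect` flag loosely models the ACS P2P Request Redirect control, which sends such requests upstream so the Root Complex can validate them; the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DownstreamPort:
    """Hypothetical model of a switch downstream port's memory window."""
    name: str
    mem_base: int
    mem_limit: int  # inclusive upper bound


def route_memory_request(ports: list[DownstreamPort], address: int,
                         acs_p2p_redirect: bool = False) -> str:
    """Pick the egress for a memory request arriving from one downstream port."""
    for port in ports:
        if port.mem_base <= address <= port.mem_limit:
            if acs_p2p_redirect:
                return "upstream"  # ACS: let the Root Complex check the request first
            return port.name       # direct peer-to-peer path through the switch
    return "upstream"              # not claimed below this switch


ports = [DownstreamPort("DSP0", 0x9000_0000, 0x90FF_FFFF),
         DownstreamPort("DSP1", 0x9100_0000, 0x91FF_FFFF)]
print(route_memory_request(ports, 0x9100_1000))                         # DSP1
print(route_memory_request(ports, 0x9100_1000, acs_p2p_redirect=True))  # upstream
```

Without the redirect, the request never touches the Root Complex; with it, the system trades a longer path for central validation.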

In addition, the PCIe architecture supports Quality of Service (QoS) management through a system of Traffic Classes, which can be mapped onto independent Virtual Channels. This enables, for example, control-plane traffic and data-plane traffic to flow over the same physical links without interfering with each other.
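Software expresses that mapping through each VC's TC/VC Map, an 8-bit field in which bit n set means Traffic Class n uses that VC; TC0 must always map to VC0. The sketch below builds those bitmaps from a dictionary. The helper name is hypothetical, and sending every unlisted TC to VC0 is a simplifying choice for this example, not a requirement.

```python
def build_tc_vc_maps(tc_to_vc: dict[int, int], num_vcs: int) -> list[int]:
    """Return one 8-bit TC/VC Map value per VC (index = VC number)."""
    maps = [0] * num_vcs
    for tc in range(8):
        vc = tc_to_vc.get(tc, 0)  # sketch choice: unlisted TCs go to VC0
        if not 0 <= vc < num_vcs:
            raise ValueError(f"TC{tc} mapped to nonexistent VC{vc}")
        maps[vc] |= 1 << tc       # each TC lands in exactly one VC's map
    if not maps[0] & 1:
        raise ValueError("TC0 must map to VC0")
    return maps


# Control-plane traffic on TC7 gets its own VC; data-plane TC0-TC6 share VC0.
print([f"{m:#04x}" for m in build_tc_vc_maps({7: 1}, num_vcs=2)])
```

Because the control plane has its own VC, it keeps its own flow-control credits and is not stalled behind bulk data.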

10. The PCIe specification isn’t robust.

At this point you may be thinking, “Great, so PCIe is really flexible, but all that flexibility must make it fragile!?” Wherever practical, errors are handled entirely in hardware; error detection and handling are passed up to the software layer only when truly necessary.
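A concrete example of hardware-only recovery is the Data Link Layer's ACK/NAK protocol. The transmitter numbers each TLP with a 12-bit sequence number and keeps a copy until the receiver acknowledges it, and a NAK (sent, for example, after an LCRC failure) triggers a replay. The Python sketch below models only that bookkeeping; it omits the LCRC itself, the replay timer, and the REPLAY_NUM counter, and the class name is hypothetical.

```python
SEQ_MOD = 4096  # sequence numbers are 12 bits wide


class ReplayBuffer:
    """Simplified transmit-side bookkeeping for Data Link Layer ACK/NAK."""

    def __init__(self) -> None:
        self.next_seq = 0
        self.unacked: dict[int, bytes] = {}  # dicts preserve insertion (send) order

    def send(self, tlp: bytes) -> int:
        seq = self.next_seq
        self.unacked[seq] = tlp              # keep a copy until acknowledged
        self.next_seq = (seq + 1) % SEQ_MOD
        return seq

    def _purge_through(self, seq: int) -> None:
        # An ACK or NAK carrying n confirms every TLP up to and including n.
        if seq not in self.unacked:
            return                           # nothing newly confirmed
        while True:
            oldest = next(iter(self.unacked))
            del self.unacked[oldest]
            if oldest == seq:
                break

    def on_ack(self, seq: int) -> None:
        self._purge_through(seq)

    def on_nak(self, seq: int) -> list[bytes]:
        # Drop what was received, then replay everything after it, in order.
        self._purge_through(seq)
        return list(self.unacked.values())


tx = ReplayBuffer()
for payload in (b"A", b"B", b"C", b"D"):
    tx.send(payload)
tx.on_ack(1)         # TLPs 0 and 1 arrived intact
print(tx.on_nak(2))  # TLP 3 arrived corrupted, so only it is replayed
```

Software never sees this exchange; it happens entirely between the two link partners.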

PCIe technology provides reliable data transport in hardware and requires little to no software involvement to detect and correct most transient link errors. Hardware/software protocols extend this baseline of reliable transport by logging observed errors. For example, architecturally defined capabilities such as Advanced Error Reporting (AER), together with protocol-specific mechanisms, help identify unreliable or failing elements in a system so the issue can be addressed. The PCIe architecture is a proven technology, operating reliably in all types of environmental conditions and applications around the world.
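On the software side, AER records these events in status registers. As an illustration, the sketch below decodes the Correctable Error Status register (offset 0x10 within the AER extended capability, capability ID 0x0001). The bit assignments follow the Base Specification; the function name is hypothetical, and reading the register from real hardware is left out. These status bits are write-1-to-clear, so software typically writes back the value it read.

```python
# Correctable Error Status register bits (AER capability, offset 0x10).
CORRECTABLE_ERRORS = {
    0: "Receiver Error",
    6: "Bad TLP",
    7: "Bad DLLP",
    8: "REPLAY_NUM Rollover",
    12: "Replay Timer Timeout",
    13: "Advisory Non-Fatal Error",
    14: "Corrected Internal Error",
    15: "Header Log Overflow",
}


def decode_correctable(status: int) -> list[str]:
    """List the names of the correctable errors flagged in `status`."""
    return [name for bit, name in sorted(CORRECTABLE_ERRORS.items())
            if status & (1 << bit)]


# Status value with Receiver Error, Bad TLP, and Bad DLLP set.
print(decode_correctable(0x00C1))
```

Bad TLP and Bad DLLP are link-level events that the Data Link Layer recovers from in hardware; AER lets software count them and spot a link that is degrading.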

11. PCIe technology has long latencies.

What precisely is meant by “latency”? Usually, it refers to the critical need for application data to be processed as soon as it’s available. Virtually all other IO technologies, including networking technologies, require a controller such as a network interface controller (NIC). Because NICs are themselves typically connected through PCIe, any path through them adds the NIC’s own latency on top of the PCIe link; communicating over PCIe directly avoids that extra hop.

For workloads such as accelerator offload, it helps to put things in context: CPU-to-GPU communication was one of the driving applications in the development of PCIe technology. PCIe has been, and continues to be, the primary technology used in this space, providing tens of gigabytes per second of bandwidth at extremely low latency, typically in the low hundreds of nanoseconds to service a read request.
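The “tens of gigabytes per second” figure is easy to reproduce for an x16 link at 16 GT/s. The sketch assumes the 128b/130b line encoding used at 8 and 16 GT/s and ignores packet and flow-control overhead, so it gives an upper bound on per-direction throughput; the function name is hypothetical.

```python
def link_bandwidth_gbytes(rate_gtps: float, lanes: int,
                          payload_bits: int = 128, coded_bits: int = 130) -> float:
    """Per-direction bandwidth after line encoding, in GB/s (upper bound)."""
    gbits = rate_gtps * lanes * payload_bits / coded_bits
    return gbits / 8  # 8 bits per byte


print(f"x16 at 16 GT/s: {link_bandwidth_gbytes(16.0, 16):.2f} GB/s per direction")
```

That is about 31.5 GB/s in each direction, or roughly 63 GB/s counting both.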

PCIe is a load/store IO technology, defined in a clean, layered structure, with very broad industry support. PCIe technology provides a rich and flexible set of capabilities that can be tailored to provide quick, efficient, and cost-effective time-to-market solutions. Hopefully this article has helped break down some of the myths that may have been holding you back from using PCIe technology in your applications.

Visit www.pcisig.com for more information.