
A secure system is only as strong as its weakest component, and every step in the manufacturing process is a component in that system. While much has been written about the security of wireless protocols, ICs, and deployed systems, securing the manufacturing process for those systems is often forgotten.

Let’s examine how we might attack an embedded system using a smart lock as an example. If we’re serious about attacking this system, we probably don’t want to compromise just one lock. We want to create a systematic exploit that can be used against any lock and then sold to others who want to bypass one specific lock in the field.

The lock manufacturer has anticipated our attack and spared no expense creating a secure product. From multiple code reviews to anti-side-channel-attack hardware to extensive penetration testing, the product is well-designed and protected. This would be a challenge if we were going to attack the lock itself, but we have another option: attack the contract manufacturer (CM) that assembles and tests the lock.

Firmware images almost always have to be transferred, stored, and programmed in plain text. All we need to do is bribe one of the CM’s employees to give us the image and then swap it for an image we’ve modified. The firmware will be nearly identical, but with a backdoor we can exploit whenever we wish. The CM will then be manufacturing compromised devices for us.

Our exploit requires no special hardware and only a moderate amount of sophistication to develop, making it extremely cheap to create. It also completely bypasses all the time and effort the manufacturer spent to secure its product.

Protecting Firmware Integrity

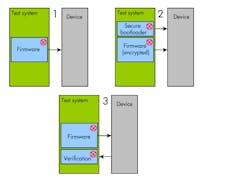

The fundamental problem in manufacturing is that with current embedded processors, it’s very difficult to guarantee the integrity of a firmware image. If the firmware is programmed in plain text, we can easily modify it on the test system as shown in block diagram 1 of Figure 1, where the red marker indicates code vulnerable to attack.

1. Multiple points of attack exist in firmware programming.

If the manufacturer decides to encrypt its code and load it via a secure boot loader, we attack the boot loader, which must be stored and programmed in plain text, as shown in block diagram 2 of Fig. 1. If the manufacturer uses external test hardware to verify the firmware after it’s programmed, we attack both the firmware and the code that checks it, as shown in block diagram 3 of Fig. 1. No matter how many layers are added, we ultimately reach something that must be programmed in plain text and can be attacked.

Manufacturing isn’t the only time that code can be modified. For example, an exploit that results in arbitrary code execution becomes much more valuable if it can permanently install itself by reprogramming the device. A complete solution to the problem of code integrity in manufacturing also addresses other sources of firmware image corruption.

Protecting Firmware Confidentiality

In addition to ensuring that a system is programmed with the intended firmware, it may be necessary to protect the firmware’s confidentiality. For example, to keep competitors from accessing a proprietary algorithm, we need to ensure that the code can’t be obtained by simply copying a file from our CM test/programming system.

Implementing firmware confidentiality can be done in a variety of ways and benefits from other hardware-based security features. However, any confidential boot-loading process that takes place at an untrusted CM will ultimately follow the same pattern.

First, the device is locked so that an untrusted manufacturing site can no longer access or modify its contents. Then the device performs a key exchange with a trusted server using a private key that the contract manufacturer never has access to, normally generated on the device after it’s locked. Once the key exchange is complete, information can be passed confidentially between the trusted server and the device.
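The key exchange can be sketched with textbook Diffie-Hellman. This is a minimal Python illustration, not a production protocol: the modulus `2**127 - 1` and base `3` are toy parameters (an assumption made so the example runs with only the standard library), and `generate_keypair` and `derive_session_key` are hypothetical names. A real device would use a standardized group or an elliptic-curve exchange such as X25519.

```python
import hashlib
import secrets

# Toy Diffie-Hellman parameters (ASSUMPTION, INSECURE): a 127-bit Mersenne prime
# and base 3, chosen only so this sketch runs with the standard library.
P = 2**127 - 1
G = 3


def generate_keypair():
    """Pick a private exponent and return (private, public)."""
    private = secrets.randbelow(P - 3) + 2      # uniform in [2, P - 2]
    public = pow(G, private, P)
    return private, public


def derive_session_key(my_private: int, peer_public: int) -> bytes:
    """Both sides compute G**(a*b) mod P and hash it into a 256-bit key."""
    shared = pow(peer_public, my_private, P)
    return hashlib.sha256(shared.to_bytes(16, "big")).digest()


# 1. The device has been locked; it now generates its key pair on-chip.
#    device_private never leaves the part, so the CM never sees it.
device_private, device_public = generate_keypair()

# 2. The trusted server does the same on its side.
server_private, server_public = generate_keypair()

# 3. Only the public values pass through the untrusted CM test system.
device_key = derive_session_key(device_private, server_public)
server_key = derive_session_key(server_private, device_public)
```

Both ends end up with the same 32-byte key for encrypting the firmware image. An unauthenticated exchange like this one can be intercepted by whoever relays the public values, which here is the CM itself, so a real flow would also need the server to authenticate the device’s public key.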

Confidentiality requires integrity. If attackers can modify the device’s firmware to generate a known private key, then they can trivially decrypt the image sent to that device.
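The dependency on integrity is easy to demonstrate. In this Python sketch, toy Diffie-Hellman parameters are used (an assumption, insecure, chosen so it runs with the standard library), and `backdoored_keygen` and `PLANTED_KEY` are hypothetical. Modified firmware replaces the device’s key generation with a constant. The server still follows the protocol correctly, yet the attacker, seeing only the server’s public value, derives the same key that protects the image.

```python
import hashlib
import secrets

# Toy Diffie-Hellman group (ASSUMPTION, INSECURE, illustration only).
P = 2**127 - 1
G = 3

PLANTED_KEY = 0x1337   # hypothetical value hard-coded into the attacker's firmware


def honest_keygen() -> int:
    return secrets.randbelow(P - 3) + 2


def backdoored_keygen() -> int:
    # Looks like key generation, but always returns a value the attacker knows.
    return PLANTED_KEY


def session_key(private: int, peer_public: int) -> bytes:
    shared = pow(peer_public, private, P)
    return hashlib.sha256(shared.to_bytes(16, "big")).digest()


# The trusted server behaves correctly throughout.
server_private = honest_keygen()
server_public = pow(G, server_private, P)

# Device running attacker-modified firmware.
device_private = backdoored_keygen()
device_public = pow(G, device_private, P)
server_key = session_key(server_private, device_public)   # key protecting the image

# The attacker only needs server_public, which crosses the CM test system anyway.
attacker_key = session_key(PLANTED_KEY, server_public)

# The same trick against a device whose firmware is intact.
honest_private = honest_keygen()
honest_server_key = session_key(server_private, pow(G, honest_private, P))
```

The attacker’s key matches the one the server uses for the compromised device but not the one for the intact device, which is why confidentiality can’t be built on firmware whose integrity isn’t guaranteed.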

Secure Debugging

Another historic challenge in the manufacturing process is the ability to diagnose issues in the field or when products are returned. The IC manufacturer and system developer both need to gain access to locked devices to perform this analysis. Traditionally, this is done by introducing backdoor access, which is a security hole.

The most common solution is to allow “unlock + erase,” whereby a device can be unlocked, but all flash is erased during the unlock process. This approach has several drawbacks. In some cases, the flash contents are needed for debugging but are no longer available. It also opens a security hole: an attacker can erase the device and reprogram it with modified code.

Other approaches provide an unrestricted backdoor that unlocks without erasing, or offer a permanent lock that will protect the part but makes debug of failure impossible. Both options have well-understood drawbacks.

Firmware Integrity Half Measures

We can do many things today to address this problem and make attacking manufacturing processes more difficult and less profitable.

Sampling

The simplest solution is to implement a sampling authentication program at another site. For example, we could pick systems at random and send them to an engineering/development site to read and validate the firmware. If someone tampers with our CM, this sampling will reveal it. To circumvent this check, the attacker must either compromise our engineering site in addition to the CM or know which devices will be sent for verification and exclude them from the attack.
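How much protection sampling buys can be estimated with a short calculation. Assuming units are drawn uniformly at random without replacement, the chance that a sample of k units out of N contains at least one of m tampered units is 1 - C(N - m, k) / C(N, k). The Python sketch below computes it and draws the sample with the operating system’s cryptographic RNG so an insider can’t predict which units will be pulled. The production figures in the example are hypothetical.

```python
import secrets
from math import comb


def choose_sample(serial_numbers: list, k: int) -> list:
    """Pick k units with the OS CSPRNG so the selection can't be predicted."""
    return secrets.SystemRandom().sample(serial_numbers, k)


def detection_probability(total_units: int, compromised: int, sampled: int) -> float:
    """Probability that a uniform random sample (without replacement) includes
    at least one tampered unit: 1 - C(N - m, k) / C(N, k)."""
    if not 0 <= compromised <= total_units or not 0 <= sampled <= total_units:
        raise ValueError("counts must lie between 0 and total_units")
    all_clean = comb(total_units - compromised, sampled) / comb(total_units, sampled)
    return 1.0 - all_clean


# Hypothetical run: 10,000 locks built, 1% tampered, 50 or 300 units sampled.
p50 = detection_probability(10_000, 100, 50)
p300 = detection_probability(10_000, 100, 300)
```

With these made-up numbers, 50 samples catch the attack roughly 40% of the time and 300 samples about 95% of the time. The estimate holds only if the attacker can’t anticipate the selection, which is exactly the condition the text identifies.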

There’s still a technical problem. To authenticate the code at our engineering site, we need to read that code out. Typically, MCUs are locked after production to ensure that memory can’t be modified or read out, which also prevents us from checking that the contents are correct.

Our checking method should assume any device code may be compromised. One option is to have a verification function that computes a simple checksum or hash of the image that we can read out through a standard interface (UART, I2C). Unfortunately, that option relies on potentially compromised code to generate the hash. If an attacker has replaced our image, they can also replace our hashing function to return the expected value for a good image instead of re-computing it based on flash contents.

To make this authentication work, we need to find an operation that can only be accomplished if the entire correct image is present in the device. One solution is to have our verification function simply dump out all of the code. Even better, our function can generate a hash of the image based on a seed the test system randomly generates and passes in. Now the attacker can’t simply store a precomputed hash because the hash value changes based on the seed. To respond with the correct result, the attacker’s code must now have access to the entire original image and correctly compute the hash.
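The seeded hash can be sketched as a simple challenge-response check. In this Python illustration, SHA-256 over `seed + image` stands in for whatever hash the real verification function would compute, and the image contents and function names are hypothetical. It contrasts an honest device, a device holding a modified image, and a backdoored routine that replays a fixed hash of the good image.

```python
import hashlib
import hmac
import secrets

# Hypothetical 16 KiB reference image held by the test system.
GOLDEN_IMAGE = bytes(range(256)) * 64
# Attacker's version: identical except for a small patch at the end.
MODIFIED_IMAGE = GOLDEN_IMAGE[:-4] + b"\xde\xad\xbe\xef"


def expected_response(seed: bytes, image: bytes) -> bytes:
    """Computed by the test system from its own trusted copy of the image."""
    return hashlib.sha256(seed + image).digest()


def device_with_flash(flash: bytes):
    """Honest verification routine: hashes the seed plus whatever is in flash."""
    def respond(seed: bytes) -> bytes:
        return hashlib.sha256(seed + flash).digest()
    return respond


def replaying_device(seed: bytes) -> bytes:
    """Backdoored routine that ignores the seed and returns a hash of the good
    image recorded earlier, which is all a fixed-checksum scheme would need."""
    return hashlib.sha256(GOLDEN_IMAGE).digest()


def authenticate(device, reference: bytes = GOLDEN_IMAGE) -> bool:
    seed = secrets.token_bytes(16)              # fresh, unpredictable challenge
    return hmac.compare_digest(device(seed), expected_response(seed, reference))
```

Only the honest device with the original image passes. An attacker can still pass by keeping a full copy of the original image alongside the modified one, as the text points out; the random seed only ensures that a stored hash is no longer enough.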

Dual-Site Manufacturing

Similar to the sampling program, board assembly and programming could be carried out at one site and testing at another. This approach catches an attack immediately and prevents compromised units from being shipped. However, it shares the sampling method’s main drawback: it requires some way to authenticate the firmware during the test phase. It’s also more expensive to implement than sampling.

It’s tempting to program but not lock the device during manufacturing and then lock after test. This eliminates the need for special verification code, since the contents of the device can simply be read out. However, for most embedded processors, leaving debug unlocked also leaves programming unlocked. Attackers could then compromise only the second (test) site and program their modified firmware there.

Over-the-Air Field Updates

Another way to mitigate an attack on a connected system is to implement and use over-the-air (OTA) updates or some other periodic style of firmware update. In most OTA systems, manufacturing-time modifications will be discovered or overwritten by the next OTA update. Systems that roll out updates regularly greatly reduce the value of a factory compromise, since the compromise survives only until the next update.

A Full Solution for Firmware Integrity

The fully secure solution relies on hardware containing a hard-coded public authentication key and hard-coded instructions to use it. For this purpose, ROM is an excellent solution. Though ROM is notoriously easy to read through physical analysis, it’s difficult to modify in a controlled, non-destructive way.

Firmware loaded into the device must then be signed. Out of reset, the CPU begins execution of ROM and can validate that the flash contents are properly signed using the public authentication key, which is also stored in ROM. If an attacker attempts to load a modified version of the firmware, authentication will fail, and the part will not boot. To get a modified image to boot, the attacker must provide a valid signature for their modified firmware, which can only be generated using a well-protected private key.
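The ROM check can be sketched in a few lines. To stay within Python’s standard library, this illustration uses textbook hash-then-sign RSA over two publicly known Mersenne primes, which is completely insecure; a real boot ROM would use a padded scheme such as RSA-PSS, or ECDSA, with properly generated keys. `rom_boot`, `toy_keypair`, and the firmware contents are hypothetical.

```python
import hashlib

E = 65537


def toy_keypair(a: int, b: int):
    """Textbook RSA built from the Mersenne primes 2**a - 1 and 2**b - 1.
    INSECURE (the primes are public knowledge); demo only."""
    p, q = 2**a - 1, 2**b - 1
    n = p * q
    d = pow(E, -1, (p - 1) * (q - 1))          # modular inverse, Python 3.8+
    return (n, d), (n, E)                       # (private key, public key)


def digest(data: bytes) -> int:
    return int.from_bytes(hashlib.sha256(data).digest(), "big")


def sign(private_key, data: bytes) -> int:
    n, d = private_key
    return pow(digest(data), d, n)


def verify(public_key, data: bytes, signature: int) -> bool:
    n, e = public_key
    return pow(signature, e, n) == digest(data)


# Generated once on a protected signing server; only the public half ships,
# hard-coded into ROM next to the boot code that uses it.
SIGNING_KEY, ROM_PUBLIC_KEY = toy_keypair(521, 607)


def rom_boot(flash_image: bytes, flash_signature: int) -> str:
    """Hypothetical ROM routine executed out of reset, before any flash code runs."""
    if not verify(ROM_PUBLIC_KEY, flash_image, flash_signature):
        return "halt"                           # authentication failed: do not boot
    return "jump to flash"


firmware = b"smart lock firmware v1.0"
signature = sign(SIGNING_KEY, firmware)        # done at release time, not at the CM
```

The design point is that `ROM_PUBLIC_KEY` and `rom_boot` can’t be rewritten at the CM, so a modified image needs a matching signature, and producing one requires `SIGNING_KEY`.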

With a real IC, security measures are more complex to support numerous use cases and to avoid security holes. The hard-coded public key (manufacturer public key) will be the same for all devices since it is not modifiable. This makes it incredibly valuable, providing the root of trust for all devices. The associated private key (manufacturer private key) must be closely guarded by the IC manufacturer and never provided to users to sign their own code.

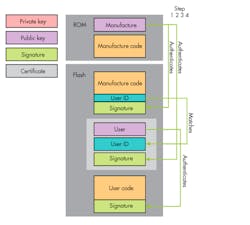

When booted, the manufacturer public key will be used to validate any code provided by the IC manufacturer that resides in flash. This ensures the code or other information provided by the manufacturer isn’t tampered with as shown in step 1 of Figure 2.

2. Public keys are implemented and authenticated in the secure-boot process.

Device users will need their own key pair (user private key and user public key) for signing and authenticating firmware images. To link the user public key into the root of trust, the IC manufacturer must sign the user public key with the manufacturer private key, creating a user certificate. A certificate is simply a public key and some associated signed metadata. When booted, the part authenticates the user certificate using the manufacturer public key, as shown in step 2 of Fig. 2. The user firmware can be authenticated with the user public key in the known-valid user certificate, as shown in step 3 of Fig. 2.
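Steps 1 through 3 of Fig. 2 can be sketched as a two-level chain of signatures. This Python illustration uses textbook RSA over public Mersenne primes purely so it runs with the standard library (insecure, illustration only). The certificate is reduced to a dictionary holding the user public key and the manufacturer’s signature over it, and all names are hypothetical.

```python
import hashlib

E = 65537


def toy_keypair(a: int, b: int):
    """Textbook RSA from Mersenne primes 2**a - 1 and 2**b - 1. INSECURE: demo only."""
    p, q = 2**a - 1, 2**b - 1
    return (p * q, pow(E, -1, (p - 1) * (q - 1))), (p * q, E)   # (private, public)


def digest(data: bytes) -> int:
    return int.from_bytes(hashlib.sha256(data).digest(), "big")


def sign(private_key, data: bytes) -> int:
    n, d = private_key
    return pow(digest(data), d, n)


def verify(public_key, data: bytes, signature: int) -> bool:
    n, e = public_key
    return pow(signature, e, n) == digest(data)


def encode_public(public_key) -> bytes:
    n, e = public_key
    return n.to_bytes((n.bit_length() + 7) // 8, "big") + e.to_bytes(4, "big")


MFR_PRIVATE, MFR_PUBLIC = toy_keypair(521, 607)     # MFR_PUBLIC is hard-coded in ROM
USER_PRIVATE, USER_PUBLIC = toy_keypair(127, 607)   # generated by the device user


def issue_user_certificate(user_public) -> dict:
    """Run by the IC manufacturer: sign the user's public key with the manufacturer key."""
    return {"user_public": user_public,
            "signature": sign(MFR_PRIVATE, encode_public(user_public))}


def secure_boot(mfr_code, mfr_sig, cert, user_fw, user_fw_sig) -> bool:
    # Step 1: manufacturer code in flash, checked against the ROM key.
    if not verify(MFR_PUBLIC, mfr_code, mfr_sig):
        return False
    # Step 2: the user certificate, also checked against the ROM key.
    if not verify(MFR_PUBLIC, encode_public(cert["user_public"]), cert["signature"]):
        return False
    # Step 3: user firmware, checked with the key from the now-trusted certificate.
    return verify(cert["user_public"], user_fw, user_fw_sig)


mfr_code = b"manufacturer boot services"
mfr_sig = sign(MFR_PRIVATE, mfr_code)
cert = issue_user_certificate(USER_PUBLIC)
user_fw = b"smart lock firmware v1.0"
user_fw_sig = sign(USER_PRIVATE, user_fw)
```

A certificate that an attacker signs with their own key fails at step 2, because only `MFR_PUBLIC` is trusted to vouch for user keys.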

An additional step is required to lock a device to a specific user. The system described in steps 1-3 can only ensure that the user certificate was signed by the IC manufacturer. While this prevents a random person from reprogramming the part, another legitimate customer could write their code and their legitimately signed certificate onto the part, and it would boot. This effectively means that if attackers can convince the IC manufacturer that they are a legitimate customer and obtain a signed user certificate, they can get the device to boot their code.

To lock a part to a specific end user, the user certificate must contain the user public key and a user ID so that changing either the key or the ID invalidates the certificate. The IC manufacturer will program the user ID into the manufacturer code area where it’s protected by the manufacturer public key. At boot time, the boot process verifies the user certificate signature and compares the user ID in the certificate against the one in the manufacturer code, as shown in step 4 of Fig. 2.

Here’s what happens when someone attempts to modify each part of the system.

- If the user ID in the manufacturer code is changed, the signature over that area is no longer valid, and the part doesn’t boot.

- If the user ID in the user certificate is changed, it will not match the one in the manufacturer code, and the part will not boot.

- If either the user public key or the user ID in the user certificate is modified, the certificate is invalid, and the part will not boot.

- If the user firmware image is changed, the signature is invalid, and the part will not boot.
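With the user ID and step 4 added to the chain, the tamper cases listed above can be checked directly. This Python sketch uses insecure textbook RSA helpers (public Mersenne primes, standard library only) and hypothetical names. The manufacturer code area and certificate are modeled as dictionaries carrying a 4-byte user ID. Each entry in `results` corresponds to one of the bullets, plus the case of another legitimate customer (customer B) installing its own valid certificate and firmware.

```python
import copy
import hashlib

E = 65537

def toy_keypair(a: int, b: int):
    """Textbook RSA from Mersenne primes 2**a - 1 and 2**b - 1. INSECURE: demo only."""
    p, q = 2**a - 1, 2**b - 1
    return (p * q, pow(E, -1, (p - 1) * (q - 1))), (p * q, E)   # (private, public)

def digest(data: bytes) -> int:
    return int.from_bytes(hashlib.sha256(data).digest(), "big")

def sign(private_key, data: bytes) -> int:
    n, d = private_key
    return pow(digest(data), d, n)

def verify(public_key, data: bytes, signature: int) -> bool:
    n, e = public_key
    return pow(signature, e, n) == digest(data)

def encode_public(public_key) -> bytes:
    n, e = public_key
    return n.to_bytes((n.bit_length() + 7) // 8, "big") + e.to_bytes(4, "big")

MFR_PRIVATE, MFR_PUBLIC = toy_keypair(521, 607)   # MFR_PUBLIC is hard-coded in ROM
A_PRIVATE, A_PUBLIC = toy_keypair(127, 607)       # customer A, who ordered these parts
B_PRIVATE, B_PUBLIC = toy_keypair(89, 607)        # customer B, also a legitimate customer

def cert_bytes(cert: dict) -> bytes:
    # One signature covers the key AND the ID, so neither can change on its own.
    return encode_public(cert["user_public"]) + cert["user_id"].to_bytes(4, "big")

def issue_user_certificate(user_public, user_id: int) -> dict:
    cert = {"user_public": user_public, "user_id": user_id}
    cert["signature"] = sign(MFR_PRIVATE, cert_bytes(cert))
    return cert

def area_bytes(area: dict) -> bytes:
    return area["code"] + area["user_id"].to_bytes(4, "big")

def provision(owner_id: int, cert: dict, user_private, firmware: bytes) -> dict:
    area = {"code": b"manufacturer boot services", "user_id": owner_id}
    return {"mfr_area": area, "mfr_sig": sign(MFR_PRIVATE, area_bytes(area)),
            "cert": cert, "firmware": firmware, "fw_sig": sign(user_private, firmware)}

def secure_boot(image: dict) -> bool:
    area, cert = image["mfr_area"], image["cert"]
    if not verify(MFR_PUBLIC, area_bytes(area), image["mfr_sig"]):           # step 1
        return False
    if not verify(MFR_PUBLIC, cert_bytes(cert), cert["signature"]):          # step 2
        return False
    if not verify(cert["user_public"], image["firmware"], image["fw_sig"]):  # step 3
        return False
    return cert["user_id"] == area["user_id"]                                # step 4

def tampered(image: dict, mutate) -> dict:
    bad = copy.deepcopy(image)      # never modify the known-good image itself
    mutate(bad)
    return bad

cert_a = issue_user_certificate(A_PUBLIC, user_id=1)
cert_b = issue_user_certificate(B_PUBLIC, user_id=2)
good = provision(1, cert_a, A_PRIVATE, b"customer A firmware")
b_fw = b"customer B firmware"

results = {
    "untouched": secure_boot(good),
    "user ID in manufacturer code": secure_boot(
        tampered(good, lambda im: im["mfr_area"].update(user_id=2))),
    "user ID in certificate": secure_boot(
        tampered(good, lambda im: im["cert"].update(user_id=2))),
    "public key in certificate": secure_boot(
        tampered(good, lambda im: im["cert"].update(user_public=B_PUBLIC))),
    "user firmware": secure_boot(
        tampered(good, lambda im: im.update(firmware=b"customer A firmware + backdoor"))),
    "customer B's own valid cert + firmware": secure_boot(
        tampered(good, lambda im: im.update(cert=cert_b, firmware=b_fw,
                                            fw_sig=sign(B_PRIVATE, b_fw)))),
}
```

Only the untouched image boots. Customer B’s case is the one that needs step 4: its certificate and firmware signatures are all valid, and only the ID comparison rejects it.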

We now have a system that will only boot firmware properly signed by the customer who ordered the part from the IC manufacturer. The entire system relies on only two secrets: the manufacturer private key and the user private key, both of which are only accessed to sign new images and are well-protected due to the infrequency of that process.

Additional Mitigations

Even in this system, the certificate hierarchy must be constructed correctly. The manufacturer private key is extremely valuable because it applies to every device built by the IC manufacturer. It’s also accessed too frequently, since it’s constantly being used to sign user certificates.

This can be addressed by creating a different manufacturer public/private key pair for each die so that compromising one key only exposes that die. Similarly, instead of directly signing user certificates with the manufacturer private key, a hierarchy of sub-keys can be developed and used for that operation whereby the sub-key can be revoked by a manufacturer code update if compromised.
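The sub-key hierarchy can be sketched as one more level in the chain plus a revocation list. In this Python illustration (insecure textbook RSA over public Mersenne primes so it runs with the standard library; all names hypothetical), the root key only ever signs sub-keys, a sub-key signs user certificates, and a set of revoked sub-key fingerprints stands in for the list a manufacturer code update would carry.

```python
import hashlib

E = 65537


def toy_keypair(a: int, b: int):
    """Textbook RSA from Mersenne primes 2**a - 1 and 2**b - 1. INSECURE: demo only."""
    p, q = 2**a - 1, 2**b - 1
    return (p * q, pow(E, -1, (p - 1) * (q - 1))), (p * q, E)   # (private, public)


def digest(data: bytes) -> int:
    return int.from_bytes(hashlib.sha256(data).digest(), "big")


def sign(private_key, data: bytes) -> int:
    n, d = private_key
    return pow(digest(data), d, n)


def verify(public_key, data: bytes, signature: int) -> bool:
    n, e = public_key
    return pow(signature, e, n) == digest(data)


def encode_public(public_key) -> bytes:
    n, e = public_key
    return n.to_bytes((n.bit_length() + 7) // 8, "big") + e.to_bytes(4, "big")


ROOT_PRIVATE, ROOT_PUBLIC = toy_keypair(521, 607)  # ROOT_PUBLIC in ROM; signs sub-keys only
SUB1 = toy_keypair(127, 607)                       # (private, public) day-to-day sub-key
SUB2 = toy_keypair(127, 521)                       # replacement sub-key
USER_PRIVATE, USER_PUBLIC = toy_keypair(89, 607)


def certify(signer_private, subject_public) -> dict:
    return {"public": subject_public,
            "signature": sign(signer_private, encode_public(subject_public))}


def fingerprint(public_key) -> bytes:
    return hashlib.sha256(encode_public(public_key)).digest()


def user_key_trusted(subkey_cert: dict, user_cert: dict, revoked: set) -> bool:
    """`revoked` would live in the signed manufacturer code area, so it can't be
    edited without breaking that area's signature."""
    sub_public = subkey_cert["public"]
    if not verify(ROOT_PUBLIC, encode_public(sub_public), subkey_cert["signature"]):
        return False                   # sub-key was never endorsed by the root
    if fingerprint(sub_public) in revoked:
        return False                   # revoked by a manufacturer code update
    return verify(sub_public, encode_public(user_cert["public"]), user_cert["signature"])


sub1_cert = certify(ROOT_PRIVATE, SUB1[1])
user_cert_v1 = certify(SUB1[0], USER_PUBLIC)

# After SUB1 is compromised: ship an update revoking it, re-issue under SUB2.
revoked_after_update = {fingerprint(SUB1[1])}
sub2_cert = certify(ROOT_PRIVATE, SUB2[1])
user_cert_v2 = certify(SUB2[0], USER_PUBLIC)
```

The root private key is now used once per sub-key rather than once per customer. The per-die key pairs mentioned in the same paragraph aren’t modeled here; they would simply mean a different root key pair for each die.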

Provide Secure Unlock

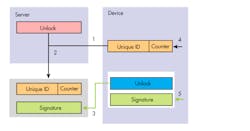

Providing secure debug unlock is a simple task. Each system developer generates a key pair for debug access and programs the public debug key onto the device. The key’s integrity can be established in the same manner as the user’s firmware, preventing anyone from tampering with the public debug key, as shown in step 5 of Figure 3. Each device also receives a unique ID, which is almost universally available on MCUs today.

3. Providing secure debug unlock is a simple, multi-step task.

To unlock the part, its unique ID is read out (1) and signed with the private debug key (2), creating an unlock certificate, which is then fed into the device for authentication against the public debug key (3). If it authenticates, the part is unlocked. This ensures only those with access to the private debug key may generate an unlock certificate, and only those with an unlock certificate may unlock the part.
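The three numbered steps can be sketched as follows. `LockedDevice`, `create_unlock_certificate`, and the example IDs are hypothetical, and the signature helpers are insecure textbook RSA over public Mersenne primes, used only so the sketch runs with Python’s standard library.

```python
import hashlib

E = 65537


def toy_keypair(a: int, b: int):
    """Textbook RSA from Mersenne primes 2**a - 1 and 2**b - 1. INSECURE: demo only."""
    p, q = 2**a - 1, 2**b - 1
    return (p * q, pow(E, -1, (p - 1) * (q - 1))), (p * q, E)   # (private, public)


def digest(data: bytes) -> int:
    return int.from_bytes(hashlib.sha256(data).digest(), "big")


def sign(private_key, data: bytes) -> int:
    n, d = private_key
    return pow(digest(data), d, n)


def verify(public_key, data: bytes, signature: int) -> bool:
    n, e = public_key
    return pow(signature, e, n) == digest(data)


DEBUG_PRIVATE, DEBUG_PUBLIC = toy_keypair(521, 607)   # private half stays on a secure server


class LockedDevice:
    """Hypothetical device model; debug_public is integrity-protected like user firmware."""

    def __init__(self, unique_id: bytes, debug_public):
        self._unique_id = unique_id
        self._debug_public = debug_public
        self.unlocked = False

    def read_unique_id(self) -> bytes:                               # step (1)
        return self._unique_id

    def submit_unlock_certificate(self, certificate: int) -> bool:   # step (3)
        self.unlocked = verify(self._debug_public, self._unique_id, certificate)
        return self.unlocked


def create_unlock_certificate(unique_id: bytes) -> int:             # step (2), on the server
    return sign(DEBUG_PRIVATE, unique_id)


returned_unit = LockedDevice(bytes.fromhex("0011223344556677"), DEBUG_PUBLIC)
other_unit = LockedDevice(bytes.fromhex("8899aabbccddeeff"), DEBUG_PUBLIC)
cert = create_unlock_certificate(returned_unit.read_unique_id())
```

Because the certificate is bound to one unique ID, it opens only the unit it was generated for; presenting it to `other_unit` fails.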

The private debug key can be stored and well-protected on a secure server. Since the device ID doesn’t change, the process of generating an unlock certificate happens only once, and that certificate may be used to unlock the part as long as required. A benefit of this method is that it generates unlock certificates on a per-device basis. That means it’s possible to grant unlock privileges to field service personnel or the IC manufacturer only on the device to be diagnosed.

A drawback to this method is that once a valid unlock certificate is created, anyone with access to that certificate may unlock the device. To mitigate this risk, a counter can be added to the end of the unique ID so that after an unlock certificate is no longer needed, it can be revoked by incrementing the counter via a debugger command. This will cause a new ID to be generated, and the old certificate will no longer be valid.
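The counter can be folded into the same kind of device model. In this Python sketch, the counter is appended to the unique ID before signing and verification. `revoke_unlock_certificates` is a hypothetical stand-in for the debugger command, and the assumption that the counter can only increase (for example, by burning one-time-programmable bits) is flagged in the code. The signature helpers are insecure textbook RSA over public Mersenne primes.

```python
import hashlib

E = 65537


def toy_keypair(a: int, b: int):
    """Textbook RSA from Mersenne primes 2**a - 1 and 2**b - 1. INSECURE: demo only."""
    p, q = 2**a - 1, 2**b - 1
    return (p * q, pow(E, -1, (p - 1) * (q - 1))), (p * q, E)   # (private, public)


def digest(data: bytes) -> int:
    return int.from_bytes(hashlib.sha256(data).digest(), "big")


def sign(private_key, data: bytes) -> int:
    n, d = private_key
    return pow(digest(data), d, n)


def verify(public_key, data: bytes, signature: int) -> bool:
    n, e = public_key
    return pow(signature, e, n) == digest(data)


DEBUG_PRIVATE, DEBUG_PUBLIC = toy_keypair(521, 607)


class LockedDevice:
    def __init__(self, unique_id: bytes, debug_public):
        self._unique_id = unique_id
        self._debug_public = debug_public
        self._unlock_counter = 0   # ASSUMPTION: can only increase (e.g., OTP bits)
        self.unlocked = False

    def read_unlock_id(self) -> bytes:
        """Unique ID with the revocation counter appended."""
        return self._unique_id + self._unlock_counter.to_bytes(4, "big")

    def submit_unlock_certificate(self, certificate: int) -> bool:
        self.unlocked = verify(self._debug_public, self.read_unlock_id(), certificate)
        return self.unlocked

    def revoke_unlock_certificates(self) -> None:
        """Hypothetical debugger command: bump the counter and relock the part."""
        self._unlock_counter += 1
        self.unlocked = False


def create_unlock_certificate(unlock_id: bytes) -> int:
    return sign(DEBUG_PRIVATE, unlock_id)


device = LockedDevice(bytes.fromhex("0011223344556677"), DEBUG_PUBLIC)
old_cert = create_unlock_certificate(device.read_unlock_id())
opened_with_old = device.submit_unlock_certificate(old_cert)
device.revoke_unlock_certificates()                 # diagnosis finished
reopened_with_old = device.submit_unlock_certificate(old_cert)
new_cert = create_unlock_certificate(device.read_unlock_id())
```

After the counter is bumped, the old certificate no longer verifies, while a certificate issued for the new ID still works, so revocation doesn’t prevent future diagnosis.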

The more devices a private key gives access to, the more valuable it becomes. As a result, system developers should change debug unlock keys periodically to limit the number of devices affected in case a private unlock key is compromised.

Other Manufacturing Considerations

Test-Based Security Holes

It’s extremely common for manufacturing requirements to result in the intentional or unintentional introduction of security holes. For example, a system manufacturer may forget to disable the debug interface as part of its board test and ship units with a wide-open debug port. Intentional security holes are even more common. A developer, for instance, may want to provide a way to reopen debug access after locking and put in a secret command or pin state to unlock the part. If discovered, this gives any attacker the same unlock capability in the field. Developers should always take care to implement manufacturing and test processes in a secure way. This includes avoiding intentional security holes and conducting reviews to catch unintentional ones.

The Offshore Process

Servers and test programs are also part of the manufacturing flow and may be vulnerable to attack. Having a secure system and secure manufacturing process won’t help if files are transferred to the CM through an FTP or email server that hasn’t been patched in years. Every place files are stored should be considered part of the system and secured.

Product Development

Just as product manufacturing is often a secondary consideration, product development tends to be overlooked. The measures we’ve examined won’t help if an attacker can commit unnoticed changes to the source code repository. This can happen through an external penetration, either electronic or physical (walking into the building), or by compromising an employee.

Standard IT system security practices and standard coding practices play a huge role in preventing this type of attack. These practices include ensuring all PCs automatically lock when not in use, requiring user logins to access code repositories, performing code reviews on all repository commits, and performing test regressions on release candidates.

Conclusion

Security is increasingly important in embedded systems. Products that were once standalone are now part of a network, increasing both their vulnerability and value. While much has been published in recent years about securing IoT devices, insufficient attention has been paid to ensuring security throughout the design and manufacturing processes.

We’ve demonstrated how historical manufacturing processes can be easily compromised, and we’ve explored simple steps to take today in design and manufacturing to make attacking a CM or engineering site more difficult and less profitable. We’ve also presented hardware improvements that can ensure firmware integrity and provide secure access for failure analysis and field debugging.

Effective security requires everyone, from silicon vendors to design firms to OEMs, to work together to ensure that supply chain security receives the time and attention it deserves. The good news is that new hardware features are being developed to address these issues, and system developers can start implementing simple measures today to create a more secure manufacturing environment.

Josh Norem is Assistant Staff Systems Engineer at Silicon Labs.