Way back when the 5-GHz band was opened for unlicensed Wi-Fi usage, many predicted the death of 2.4 GHz. The 5-GHz band enabled much faster speeds thanks to the increased amount of bandwidth available at the higher frequency. Channels as wide as 160 MHz were introduced (and the industry is mulling the introduction of 320-MHz channels), whereas the entire 2.4-GHz band is only about 80 MHz wide.
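The Shannon-Hartley bound, C = B·log2(1 + SNR), shows why channel width matters. The minimal Python sketch below evaluates it for several widths. The 25-dB SNR is a hypothetical value, held constant so the widths can be compared. Real Wi-Fi throughput falls well below this bound. In practice, spreading a fixed transmit power over a wider channel also lowers the SNR.

```python
import math


def shannon_capacity_mbps(bandwidth_mhz: float, snr_db: float) -> float:
    """Shannon-Hartley bound C = B * log2(1 + SNR); MHz in, Mbit/s out."""
    snr_linear = 10 ** (snr_db / 10)
    return bandwidth_mhz * math.log2(1 + snr_linear)


SNR_DB = 25.0  # hypothetical, held constant across widths for comparison
for width_mhz in (20, 40, 80, 160, 320):
    capacity = shannon_capacity_mbps(width_mhz, SNR_DB)
    print(f"{width_mhz:>3} MHz channel: {capacity:7.1f} Mbit/s upper bound")
```

At a fixed SNR the bound scales linearly with bandwidth. A 160-MHz channel therefore has eight times the ceiling of a 20-MHz one.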

As the FCC and other regulators move to open the 6-GHz band for Wi-Fi use, there’s no doubt that consumer appetite for more bandwidth and higher speeds will be served at the higher frequencies. Internet of Things (IoT) devices, however, don’t care much about bandwidth.

With relatively little information to transfer, IoT devices treat power consumption as their prime consideration. After all, the labor cost of replacing batteries in millions, or even billions, of IoT devices installed in every imaginable product is prohibitive.

To conserve power and prolong battery life, active sensors try to minimize the time they spend transmitting, because the power amplifier consumes more power than anything else in the system. They also reduce their transmit power, since the radio’s power consumption rises with its output power.
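A simple two-state duty-cycle model captures this trade-off. The radio is either transmitting or asleep, and battery life is capacity divided by average current. All numbers below are hypothetical:

- a 220-mAh coin cell
- 10 mA while transmitting, or 6 mA at a reduced output power
- 2 µA asleep
- one packet every 10 seconds

The model ignores receive windows, wake-up energy, and battery self-discharge.

```python
def battery_life_days(capacity_mah: float, tx_current_ma: float, tx_ms_per_packet: float,
                      packets_per_hour: float, sleep_current_ua: float) -> float:
    """Battery life from a two-state (transmit/sleep) duty-cycle model."""
    tx_seconds_per_hour = packets_per_hour * tx_ms_per_packet / 1000.0
    duty = tx_seconds_per_hour / 3600.0  # fraction of time spent transmitting
    avg_current_ma = tx_current_ma * duty + (sleep_current_ua / 1000.0) * (1.0 - duty)
    return capacity_mah / avg_current_ma / 24.0


# Hypothetical sensor: 220-mAh coin cell, 10 mA while transmitting,
# 2 uA asleep, one packet every 10 seconds (360 per hour).
baseline = battery_life_days(220, 10, 5, 360, 2)
longer_tx = battery_life_days(220, 10, 10, 360, 2)   # twice the on-air time per packet
lower_power = battery_life_days(220, 6, 5, 360, 2)   # lower output power, lower PA current
print(f"baseline: {baseline:.0f} days")
print(f"2x transmit time: {longer_tx:.0f} days")
print(f"lower transmit power: {lower_power:.0f} days")
```

With these numbers the transmit term outweighs the sleep term. Cutting either on-air time or transmit current therefore extends battery life noticeably.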

Thus, IoT networks prefer lower frequencies, which propagate farther. They also typically rely on mesh architectures, so that each transmission only needs to reach a fairly nearby receiver: the next hop in the mesh. And they strongly prefer to avoid unnecessary retransmissions, because these drain the battery faster.
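Free-space path loss illustrates why lower frequencies and short mesh hops help. The sketch below evaluates the Friis formula between isotropic antennas, FSPL = 20·log10(4πdf/c). It shows two textbook facts:

- At a fixed distance, the loss rises by about 6.4 dB from 2.4 to 5 GHz (20·log10(5/2.4)).
- Every doubling of distance adds about 6 dB.

868 MHz, a common sub-GHz ISM frequency in Europe, is included for comparison. Walls and floors add further, frequency-dependent losses that this formula ignores.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def fspl_db(distance_m: float, freq_hz: float) -> float:
    """Friis free-space path loss between isotropic antennas, in dB."""
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT_M_S)


for freq_hz in (868e6, 2.4e9, 5.0e9):
    losses = ", ".join(f"{d} m: {fspl_db(d, freq_hz):.1f} dB" for d in (5, 10, 20))
    print(f"{freq_hz / 1e9:.3f} GHz -> {losses}")
```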

Retransmissions and Interference

Why would an IoT device need to retransmit? Because a packet it sent didn’t reach its destination. If that happens because the destination is too far away, the network is simply poorly designed. Far more likely, packets are lost to interference.
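This sketch shows what interference costs in battery terms. It assumes each attempt is lost independently with probability p, so the number of transmissions per packet is geometrically distributed.

- With unlimited retries, a device sends 1/(1 - p) attempts on average.
- With a retry cap of N, it sends 1 + p + ... + p^(N-1).

Transmit energy scales with the number of attempts, so a 50% loss rate roughly doubles it. Real interference tends to be bursty rather than independent, so treat this as first-order intuition only.

```python
from typing import Optional


def expected_attempts(loss_prob: float, max_attempts: Optional[int] = None) -> float:
    """Mean transmissions per packet if each attempt is lost independently with loss_prob."""
    if not 0.0 <= loss_prob < 1.0:
        raise ValueError("loss_prob must be in [0, 1)")
    if max_attempts is None:
        # Unlimited retries: geometric distribution with success probability 1 - loss_prob.
        return 1.0 / (1.0 - loss_prob)
    # Attempt k+1 is sent only if the first k attempts were all lost.
    return sum(loss_prob ** k for k in range(max_attempts))


for p in (0.0, 0.1, 0.3, 0.5):
    print(f"loss {p:.0%}: {expected_attempts(p):.2f} attempts (unlimited), "
          f"{expected_attempts(p, 3):.2f} (max 3)")
```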

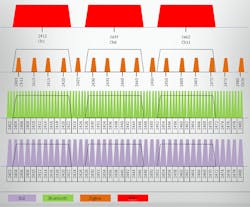

The 2.4-GHz band is extremely crowded with Wi-Fi access points and other devices emitting energy (Fig. 1). Frequency hopping, channel selection, robust modulations and waveforms, protocol-level error correction, and other mechanisms are all designed to counter the effects of interference. Nevertheless, none of these techniques helps if the interferer is too strong.

1. The 2.4-GHz band is extremely crowded with Wi-Fi access points, Bluetooth, and other devices emitting energy.
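Bluetooth Low Energy’s Channel Selection Algorithm #1 with adaptive frequency hopping shows how channel selection works. The simplified Python sketch below follows its basic steps:

- The hop sequence advances by a fixed increment modulo the 37 data channels.
- Any channel marked unused in the channel map is remapped onto the list of used channels.

The channel map is hypothetical. It blocks data channels 0-9 (2404-2422 MHz, roughly the span of Wi-Fi channel 1). See the Bluetooth Core Specification for the normative algorithm. This only steers around interference on other frequencies. It does nothing against an interferer strong enough to saturate the receiver.

```python
from typing import Iterable, List

NUM_DATA_CHANNELS = 37  # BLE data channels 0..36


def csa1_sequence(hop_increment: int, used_channels: Iterable[int], num_events: int) -> List[int]:
    """Simplified BLE Channel Selection Algorithm #1 with a channel map."""
    if not 5 <= hop_increment <= 16:
        raise ValueError("hop_increment must be in 5..16")
    used = sorted(set(used_channels))  # remapping table, ascending order
    used_set = set(used)
    if len(used) < 2:
        raise ValueError("the channel map needs at least two used channels")
    last_unmapped = 0
    sequence = []
    for _ in range(num_events):
        unmapped = (last_unmapped + hop_increment) % NUM_DATA_CHANNELS
        last_unmapped = unmapped
        if unmapped in used_set:
            sequence.append(unmapped)
        else:
            # Unused (e.g. interfered) channel: remap onto the table of used channels.
            sequence.append(used[unmapped % len(used)])
    return sequence


# Hypothetical channel map: data channels 0-9 are marked unused because
# they overlap a busy Wi-Fi channel 1.
good_channels = [ch for ch in range(NUM_DATA_CHANNELS) if ch >= 10]
print(csa1_sequence(hop_increment=7, used_channels=good_channels, num_events=12))
```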

Regulators cap the maximum power a device may emit in the unlicensed (ISM) bands, precisely to prevent any single device from monopolizing the spectrum (and to ensure that the device doesn’t fry us humans). So external interference, while it certainly exists and can be harmful, is exactly what IoT devices are designed to cope with.

But what happens when the receiver (typically an IoT hub of some sort) is blasted with energy right at its antenna? That would be the equivalent of screaming yourself while trying to hear a whisper from afar: you would not be able to hear the whisper. But why would anyone “scream” into the “ears” of the IoT hub? IoT hubs may be standalone devices, but more likely they will be integrated into Wi-Fi access points to avoid the need for separate wiring. That places a powerful 2.4-GHz transmitter right next to the IoT receiver.

BLE, Zigbee, etc.

Check the market. All major enterprise Wi-Fi vendors have integrated BLE (Bluetooth Low Energy) receivers into their product portfolios. All major service providers are rolling out Wi-Fi access points that incorporate IoT receivers in the form of BLE or Zigbee radios. The same is true of consumer solutions from the likes of Google Wi-Fi.

When the co-located 2.4-GHz Wi-Fi radio transmits, the IoT receiver is saturated. It doesn’t matter that the IoT radio operates on a different channel or that it applies any and all of the other interference-avoidance techniques. It’s saturated. Period. Any incoming transmission is lost, and the originating IoT device has to retransmit it. Is this a problem? Maybe not if you have one water sensor under the dishwasher. But if IoT is truly to take off, at home, in the enterprise, and in what’s termed the Industrial IoT, it will be a serious problem.
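A back-of-the-envelope link budget shows why co-location is so punishing. The sketch below converts dBm to milliwatts and compares two signals:

- a co-located Wi-Fi transmitter, attenuated only by antenna-to-antenna isolation
- a weak packet from a distant sensor

Every number is a hypothetical placeholder: 20-dBm Wi-Fi output, 25 dB of isolation, a desired signal of -85 dBm, and a receiver saturation level of -20 dBm. Real values depend on the hardware and the enclosure.

```python
def dbm_to_mw(dbm: float) -> float:
    """Convert a power level in dBm to milliwatts."""
    return 10 ** (dbm / 10)


def required_suppression_db(wifi_tx_dbm: float, isolation_db: float,
                            rx_saturation_dbm: float) -> float:
    """Extra suppression needed to keep the co-located Wi-Fi signal below saturation."""
    interferer_at_rx_dbm = wifi_tx_dbm - isolation_db
    return max(0.0, interferer_at_rx_dbm - rx_saturation_dbm)


# Hypothetical values for illustration only.
WIFI_TX_DBM = 20.0         # co-located 2.4-GHz Wi-Fi transmit power
ISOLATION_DB = 25.0        # antenna-to-antenna isolation inside one enclosure
DESIRED_DBM = -85.0        # weak BLE/Zigbee packet from a distant sensor
RX_SATURATION_DBM = -20.0  # input level at which the IoT receiver saturates (assumed)

interferer_dbm = WIFI_TX_DBM - ISOLATION_DB
ratio = dbm_to_mw(interferer_dbm) / dbm_to_mw(DESIRED_DBM)
print(f"Wi-Fi power at IoT receiver: {interferer_dbm:.0f} dBm")
print(f"Interferer-to-desired power ratio: {ratio:.1e}")
print(f"Extra suppression needed: "
      f"{required_suppression_db(WIFI_TX_DBM, ISOLATION_DB, RX_SATURATION_DBM):.0f} dB")
```

Even with 25 dB of isolation, the Wi-Fi signal in this example arrives 80 dB (a hundred-million-fold) above the desired packet. Once the front end saturates, moving to another channel cannot help.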

In fact, interference between Wi-Fi and Bluetooth is already a serious problem. Today, it’s most noticeable when Wi-Fi and Bluetooth audio streaming try to coexist. Unbeknownst to you, the user, 2.4-GHz Wi-Fi is throttled to minimal throughput whenever a co-located Bluetooth receiver is in use. The problem is likely to grow as personal assistant devices double as Wi-Fi extenders. It will also grow as Wi-Fi access points become more pervasive in cars, where drivers rely heavily on Bluetooth audio streaming to comply with hands-free laws.

Self-Interference Cancellation

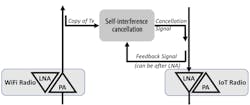

There is a solution. It’s called self-interference cancellation. The “self” denotes that the interference comes from a local source rather than a remote one. This matters because the technique relies on an exact copy of the interfering signal. Such a copy is easy to obtain when the interferer is local and can be tapped directly with a wire. The system “subtracts” this interferer from the signal arriving at the affected receiver, so that only the clean desired signal makes its way into the receiver (Fig. 2).

2. Self-interference cancellation eliminates interference from a local source as opposed to a remote source.
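The subtraction in Fig. 2 can be sketched in a few lines of Python under the simplest possible assumptions. The interferer reaches the IoT antenna through a single unknown complex gain h: a flat channel with no added noise. The canceller has the exact transmitted samples as its reference. It estimates h by least squares, correlating the received samples against the reference, and then subtracts h times the reference. The signals and gain are made up for illustration. This is not Kumu Networks’ implementation.

```python
import cmath
import math
import random


def estimate_gain(rx, ref):
    """Least-squares estimate of a complex gain h in rx = h * ref + (everything else)."""
    num = sum(r * x.conjugate() for r, x in zip(rx, ref))
    den = sum(abs(x) ** 2 for x in ref)
    return num / den


def cancel(rx, ref, h):
    """Subtract the scaled local copy of the interferer from the received samples."""
    return [r - h * x for r, x in zip(rx, ref)]


def power_db(samples):
    """Average power of complex samples, in dB (relative units)."""
    return 10 * math.log10(sum(abs(s) ** 2 for s in samples) / len(samples))


random.seed(1)
N = 4000
# Hypothetical strong local interferer (complex Gaussian samples) ...
ref = [complex(random.gauss(0, 1), random.gauss(0, 1)) for _ in range(N)]
# ... and a weak desired signal (a low-level tone standing in for an IoT packet).
desired = [0.01 * cmath.exp(1j * 0.05 * n) for n in range(N)]
h_true = 3.0 * cmath.exp(1j * 0.7)  # unknown coupling from the Wi-Fi to the IoT antenna
rx = [d + h_true * x for d, x in zip(desired, ref)]

h_hat = estimate_gain(rx, ref)
clean = cancel(rx, ref, h_hat)
before = power_db([h_true * x for x in ref])
after = power_db([c - d for c, d in zip(clean, desired)])
print(f"interference before: {before:.1f} dB, after: {after:.1f} dB")
```

The weak desired signal is uncorrelated with the reference, so it barely disturbs the estimate of h.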

It’s not quite that simple, because interference has the nasty habit of changing when someone places a transmitter in front of a reflective metal cabinet or a refrigerator. In other words, the interference, even if it’s local, is affected by the environment. Kumu Networks has developed self-interference cancellation that’s designed to adapt to the environment and “cancel,” or “subtract,” the interfering signal from the desired signal. This allows Wi-Fi, Bluetooth, Zigbee, and other protocols to coexist in the same narrow 2.4-GHz band, even when the radios are co-located in the same enclosure.
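The article doesn’t describe how Kumu’s adaptive cancellation works. As a stand-in, this sketch uses the normalized least-mean-squares (NLMS) algorithm, a textbook adaptive filter, to show the general idea. An FIR filter driven by the reference copy continuously re-learns a multipath coupling channel, so cancellation recovers after the environment changes. The two channels are hypothetical, with the second mimicking a moved reflector. The step size and tap count are also hypothetical choices.

```python
import cmath
import math
import random


def nlms_cancel(rx, ref, num_taps=4, mu=0.2, eps=1e-6):
    """Normalized-LMS self-interference canceller.

    rx  -- received samples: desired signal plus interference via an unknown channel
    ref -- local copy of the transmitted interferer
    Returns e[n] = rx[n] - y[n], the receiver input after cancellation.
    """
    w = [0j] * num_taps
    out = []
    for n in range(len(rx)):
        # Tap-delay line: newest reference sample first, zeros before the start.
        x = [ref[n - k] if n - k >= 0 else 0j for k in range(num_taps)]
        y = sum(wk * xk for wk, xk in zip(w, x))   # current estimate of the interference
        e = rx[n] - y                               # residual, i.e. the "cleaned" sample
        norm = eps + sum(abs(xk) ** 2 for xk in x)
        # Step each weight along e * conj(x), scaled by input power (the "normalized" part).
        w = [wk + mu * e * xk.conjugate() / norm for wk, xk in zip(w, x)]
        out.append(e)
    return out


def power_db(samples):
    """Average power of complex samples, in dB (relative units)."""
    return 10 * math.log10(sum(abs(s) ** 2 for s in samples) / len(samples))


random.seed(2)
N, SWITCH = 6000, 3000
ref = [complex(random.gauss(0, 1), random.gauss(0, 1)) for _ in range(N)]
desired = [0.01 * cmath.exp(1j * 0.05 * n) for n in range(N)]
# Hypothetical multipath couplings; the second mimics a reflector moved mid-run.
CHANNEL_A = [1.0, 0.4j, -0.2]
CHANNEL_B = [0.8, -0.5, 0.3j]


def interference(n):
    taps = CHANNEL_A if n < SWITCH else CHANNEL_B
    return sum(h * ref[n - k] for k, h in enumerate(taps) if n - k >= 0)


interf = [interference(n) for n in range(N)]
rx = [d + i for d, i in zip(desired, interf)]
clean = nlms_cancel(rx, ref)
residual = [c - d for c, d in zip(clean, desired)]

# Measure suppression in windows well after convergence, before and after the change.
for label, start, stop in (("before change", 2000, 3000), ("after change", 5000, 6000)):
    gain = power_db(interf[start:stop]) - power_db(residual[start:stop])
    print(f"{label}: {gain:.1f} dB of interference suppression")
```

Right after the channel switch, the residual spikes while the weights re-converge. The weak desired signal is uncorrelated with the reference, so the filter learns only the interference path and the output converges toward the desired signal.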

Joel Brand is Vice President of Product Management at Kumu Networks.