Artificial intelligence (AI) is fast becoming one of the most important areas of digital expansion in history. The CEO of Applied Materials recently stated that “the war” for AI leadership will be the “biggest battle of our lifetime.”¹ AI promises to transform almost every industry, including healthcare (diagnosis, treatments), automotive (autonomous driving), manufacturing (robot assembly), and retail (purchasing assistance).

Although the field of AI has been around since the 1950s, only very recently have computing power and the methods used in AI reached a tipping point for major disruption and rapid advancement. Both of these areas have a tremendous need for much higher memory bandwidth.

Computing Power

Those familiar with computing hardware understand that CPUs historically became faster through higher clock speeds with each product generation. However, as material physics will tell you, we’ve reached a limit on how much performance can be gained by raising clock speeds. Instead, the industry has shifted toward multicore architectures, with many processor cores operating in parallel.

Modern data-center CPUs now feature up to 32 cores. GPUs, traditionally used for graphics applications, were designed from the start with a parallel architecture; these can have over 5,000 simpler (more specialized) cores. GPUs have become major players in AI applications primarily because they’re very fast at matrix multiplication, a key element of machine learning. The shift toward more cores underscores the growing demand for data to be fed into these cores to keep them active—driving the need for much greater memory bandwidth.
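To make the link between core count and memory bandwidth concrete, the following is a rough back-of-the-envelope sketch, not a model of any particular chip. It assumes 32-bit values, assumes each matrix crosses the memory bus exactly once (ideal caching), and uses a hypothetical peak of 10,000 GFLOP/s chosen only for illustration. The function names are likewise illustrative.

```python
def matmul_arithmetic_intensity(m, n, k, bytes_per_element=4):
    """FLOPs performed per byte moved for C = A @ B.

    A is m x k, B is k x n, C is m x n. Assumes every matrix is
    transferred to or from memory exactly once (an idealization).
    """
    flops = 2 * m * n * k  # one multiply and one add per term
    bytes_moved = bytes_per_element * (m * k + k * n + m * n)
    return flops / bytes_moved


def required_bandwidth_gb_per_s(peak_gflops, intensity):
    """Memory bandwidth (GB/s) needed to keep the cores busy at peak."""
    return peak_gflops / intensity


if __name__ == "__main__":
    PEAK_GFLOPS = 10_000  # hypothetical accelerator, illustrative only

    # Large square matrix multiply: lots of reuse per byte fetched.
    square = matmul_arithmetic_intensity(1024, 1024, 1024)
    # Matrix-vector multiply: each weight is used once, so little reuse.
    matvec = matmul_arithmetic_intensity(1024, 1, 1024)

    for name, intensity in [("matrix x matrix", square),
                            ("matrix x vector", matvec)]:
        bw = required_bandwidth_gb_per_s(PEAK_GFLOPS, intensity)
        print(f"{name}: {intensity:.2f} FLOP/byte, "
              f"needs about {bw:,.0f} GB/s to stay at peak")
```

Under these assumptions, operations with little data reuse (such as the matrix-vector case) need far more bandwidth per unit of compute than large matrix-matrix products, which is one way to see why adding cores without adding bandwidth leaves them idle.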

Methods Used in AI

The fastest-advancing area within AI has been machine learning, and more specifically, deep learning. Deep learning is a subset of machine-learning methods based on learning data representations, as opposed to task-specific algorithms. In the past, AI relied on hand-coded "rules" to make decisions, an approach that had very limited success.

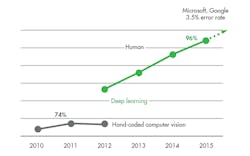

1. Deep neural networks improve the accuracy that’s vital in computer-vision systems. (Source: Nvidia, FMS 2017)