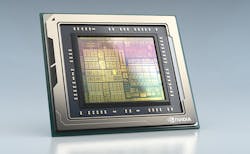

Nvidia’s DRIVE AGX Orin SoC (Fig. 1) delivers seven times the performance of the company’s Jetson AGX Xavier, including more machine-learning (ML) acceleration. The chip, designed to meet ISO 26262 ASIL-D requirements, targets automotive and robotics applications that can take advantage of its ML support. The SoC can handle ADAS chores as well as the demands of self-driving cars.

The CPU complex contains a dozen 64-bit “Hercules” cores based on Arm’s DynamIQ technology. It’s coupled with a GPU that includes CUDA Tensor cores capable of delivering 200 INT8 TOPS. Also in the mix are a programmable vision accelerator (PVA) and Nvidia’s deep-learning accelerator (DLA). The memory subsystem provides 200 GB/s of bandwidth, and there are four 10-Gb/s Ethernet ports.

The video hardware can decode 8K video streams at 30 frames/s and encode 4K streams at 60 frames/s. This includes support for H.264, H.265, and VP9. The chip is built by TSMC using a 7-nm process technology.

Nvidia hasn’t provided all of the details about the chip, but it’s set to deliver 3 TOPS/W. It’s likely to have a TDP that’s twice that of the Jetson AGX Xavier.
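As a rough back-of-the-envelope check, the two figures quoted above (200 INT8 TOPS and 3 TOPS/W) imply a power draw in the mid-60-W range. The sketch below simply divides one by the other. It assumes the efficiency figure applies at peak throughput, which the company hasn't confirmed, and the function name is hypothetical.

```python
def implied_power_watts(peak_tops: float, tops_per_watt: float) -> float:
    """Power implied by a peak-throughput figure and an efficiency figure."""
    if tops_per_watt <= 0:
        raise ValueError("tops_per_watt must be positive")
    return peak_tops / tops_per_watt


# Figures from the article: 200 INT8 TOPS at 3 TOPS/W.
# Assumption: the efficiency number holds at peak throughput.
orin_power = implied_power_watts(200.0, 3.0)
print(f"Implied power at peak: {orin_power:.1f} W")
```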

The DRIVE AGX Orin takes advantage of Nvidia’s CUDA-based, deep-neural-network (DNN) ML development support. This support allows applications to run on any of the company’s platforms, from the compact Jetson Nano through the DRIVE platforms up to its cloud-based systems.

Along with the DRIVE AGX Orin, Nvidia made a number of other announcements at the GTC China show. One involved TensorRT 7, which supports TensorFlow. TensorRT 7 has a new compiler that automatically optimizes ML models to improve performance, sometimes by a factor of ten. It’s designed to cut the latency of models used for real-time interaction to under 300 ms, which is typically needed for human-machine interactions.

TensorRT 7 supports recurrent neural networks (RNNs) used for applications like text-to-speech. It handles models like WaveRNN and Tacotron 2. RNNs are also used for speech recognition and language translation.

In addition, the new compiler is optimized to handle transformer-based models like BERT (Bidirectional Encoder Representations from Transformers). BERT is used for natural language processing tasks.

On top of that, Nvidia is making its cloud-based DRIVE Federated Server available to developers (Fig. 2). The idea is to share the models but not the actual data, which provides privacy for application areas like the medical space. Privacy is important even for self-driving car models.

The client-server model has the company’s software running at both ends of the system. A global model is distributed to the clients, which enhance the model using their own data and return new weights to be incorporated into the global, shared model. Many applications, like self-driving cars, can benefit from the larger effective training dataset this provides.
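The round trip described above can be sketched in a few lines of Python. This is a minimal illustration, not Nvidia's implementation: the article doesn't say how the server merges the returned weights, so the sample-count-weighted average used here (commonly called federated averaging) is an assumption, as are all function names and the toy one-weight model.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair that never leaves its client


def client_update(w: float, data: List[Sample],
                  lr: float = 0.05, steps: int = 20) -> float:
    """Refine the global weight on private data; only the weight is returned."""
    for _ in range(steps):
        # Gradient of mean squared error for the toy model y = w * x.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def server_aggregate(updates: List[Tuple[float, int]]) -> float:
    """Merge client weights, weighting each by its sample count (assumed scheme)."""
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total


def federated_round(global_w: float, clients: List[List[Sample]]) -> float:
    """One round: distribute the model, train locally, merge returned weights."""
    updates = [(client_update(global_w, data), len(data)) for data in clients]
    return server_aggregate(updates)


# Three hypothetical clients whose private data all follow y = 2x.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0)],
    [(0.5, 1.0), (1.5, 3.0), (2.5, 5.0)],
]

global_w = 0.0
for _ in range(10):
    global_w = federated_round(global_w, clients)

print(f"Global weight after 10 rounds: {global_w:.3f}")
```

The server only ever sees weights and sample counts, so the shared model benefits from all six samples even though no client's raw data is uploaded.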