What you’ll learn:

- How smart sensors bring real-time object detection to autonomous vehicles.

- Why early fusion of sensor data improves subsequent machine-learning analysis.

- Why simulation is important to Level 4 autonomous-vehicle development.

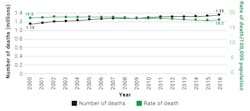

Fueled by the need to improve road safety, consumer demand for smarter driving technologies has soared. More than one million people lose their lives in traffic accidents around the world every year, according to the World Health Organization (WHO) global status report on road safety (Fig. 1). The most common causes of accidents are distracted driving, speeding, driving under the influence, and reckless driving, all of which stem from human error on the part of the driver.

Autonomous-vehicle (AV) technology will play a fundamental role in curbing the number of accidents to make driving safer for everyone. While the industry is still in its infancy, companies are working to develop safe, reliable, and cost-efficient automated driving solutions for the future of transportation.

In addition to the growing interest in using AVs to reduce accidents, the COVID-19 outbreak has led many consumers to avoid public transit and look for safer, more reliable forms of transportation. Self-driving vehicles such as robo-taxis offer one way for people to get around while maintaining social distance. In China, AVs and other self-driving applications have already proven helpful in the fight against the virus by transporting essential medical supplies and food to healthcare workers.

Although the mass deployment of fully autonomous vehicles might take some time, Level 4 AV technology promises to usher in a safer and more intelligent form of transportation today. This article explores the hardware components and software solutions that top automotive OEMs, Tier 1 suppliers, and mobility and logistics companies are leveraging as they test and work to commercialize Level 4 self-driving vehicles.

Smart Sensors Used for Real-Time Object Detection

To operate safely on roadways, an AV might use a number of hardware components: an inertial measurement unit (IMU), a global navigation satellite system (GNSS) receiver, multiple sensors including LiDAR and radar, and high-dynamic-range (HDR) cameras. Some autonomous technology providers combine all of these components into one full-stack driving system that companies can more easily integrate into cars to turn them into AVs (Fig. 2).

LiDAR technology is often seen as the linchpin of autonomous driving, as most companies use it to help vehicles see the world around them. LiDAR assists in vehicle navigation by measuring the vehicle’s surroundings in 3D at high spatial resolution.
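A LiDAR unit typically reports each return as a measured range plus the beam’s horizontal (azimuth) and vertical (elevation) angles; converting those into x, y, z coordinates yields the 3D points that make up a point cloud. The minimal sketch below assumes a sensor frame with x forward, y left, and z up, and the function names are hypothetical, chosen for illustration rather than taken from any vendor SDK.

```python
import math
from typing import List, Tuple

# Hypothetical raw return: (range in meters, azimuth in degrees, elevation in degrees).
Return = Tuple[float, float, float]

def to_cartesian(r: float, azimuth_deg: float, elevation_deg: float) -> Tuple[float, float, float]:
    """Convert one spherical LiDAR return to (x, y, z) in the sensor frame.

    Assumed convention: x forward, y left, z up; azimuth is measured
    counterclockwise from x, elevation upward from the x-y plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = r * math.cos(el)  # projection of the range onto the ground plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), r * math.sin(el))

def to_point_cloud(returns: List[Return]) -> List[Tuple[float, float, float]]:
    """Turn one sweep of raw returns into a list of 3D points."""
    return [to_cartesian(r, az, el) for r, az, el in returns]

if __name__ == "__main__":
    sweep = [(10.0, 0.0, 0.0), (10.0, 90.0, 0.0), (20.0, 0.0, -2.0)]
    for point in to_point_cloud(sweep):
        print(tuple(round(c, 3) for c in point))
```

Real sensors also apply per-beam calibration offsets and motion compensation, which this sketch leaves out.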

One trend we’ve seen is that companies are combining different types of LiDAR sensors to reduce the overall cost and weight of self-driving systems. For instance, AV technology providers might mount a higher-resolution, long-range 64-line LiDAR sensor in the center of a rooftop box to give the vehicle a bird’s-eye view of its surroundings, while using lighter, more affordable 16-line LiDAR units on the sides of the box to cover blind spots.
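A quick way to see why line count matters at long range is to compute how far apart adjacent scan lines land at a given distance. The sketch below assumes both sensors spread their beams evenly over the same 30° vertical field of view; that figure is an illustrative assumption, not the specification of any particular product.

```python
import math

def beam_spacing_deg(lines: int, vertical_fov_deg: float) -> float:
    """Angular gap between adjacent beams, assuming they are spread evenly."""
    if lines < 2:
        raise ValueError("need at least two beams to have a gap")
    return vertical_fov_deg / (lines - 1)

def vertical_gap_m(lines: int, vertical_fov_deg: float, distance_m: float) -> float:
    """Approximate vertical distance between neighboring scan lines at a given range."""
    return distance_m * math.tan(math.radians(beam_spacing_deg(lines, vertical_fov_deg)))

if __name__ == "__main__":
    FOV_DEG = 30.0  # assumed, identical for both sensors to isolate the effect of line count
    for lines in (16, 64):
        gap = vertical_gap_m(lines, FOV_DEG, distance_m=50.0)
        print(f"{lines}-line: {beam_spacing_deg(lines, FOV_DEG):.2f} deg per line, "
              f"~{gap:.2f} m between lines at 50 m")
```

Under these assumptions, the 16-line sensor’s scan lines are roughly 1.7 m apart at 50 m versus roughly 0.4 m for the 64-line sensor, which is consistent with reserving the denser unit for the long-range view and using sparser units for nearby blind spots.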

Radar helps AVs determine the velocity of moving objects. However, although radar can detect a stopped vehicle or cyclist ahead, it can’t pinpoint the object’s precise position, such as whether that vehicle or cyclist is in the same lane as the AV. Combining speed information from radar sensors with 3D position information from LiDAR sensors overcomes this limitation.
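The radar and LiDAR combination described above can be sketched as a simple association step: match each radar detection to the nearest LiDAR object, then take position (and thus lane membership) from LiDAR and speed from radar. Everything here is a hypothetical illustration: the class names, the 2 m association gate, and the assumed 3.7 m lane with the ego vehicle centered in it.

```python
import math
from dataclasses import dataclass
from typing import List, Optional

LANE_HALF_WIDTH_M = 1.85  # assumption: 3.7 m lane, ego vehicle centered in it

@dataclass
class LidarObject:
    x: float  # meters ahead of the ego vehicle
    y: float  # meters to the left (negative = right)

@dataclass
class RadarDetection:
    range_m: float
    azimuth_deg: float       # radar bearing, comparatively coarse
    radial_speed_mps: float  # Doppler speed, negative when closing

@dataclass
class FusedObject:
    x: float
    y: float
    radial_speed_mps: float
    in_ego_lane: bool

def associate(radar: RadarDetection, objects: List[LidarObject],
              max_gap_m: float = 2.0) -> Optional[LidarObject]:
    """Pick the LiDAR object closest to where the radar places the target."""
    az = math.radians(radar.azimuth_deg)
    rx, ry = radar.range_m * math.cos(az), radar.range_m * math.sin(az)
    best = min(objects, key=lambda o: math.hypot(o.x - rx, o.y - ry), default=None)
    if best is None or math.hypot(best.x - rx, best.y - ry) > max_gap_m:
        return None  # no LiDAR object close enough to trust the match
    return best

def fuse(radar: RadarDetection, objects: List[LidarObject]) -> Optional[FusedObject]:
    match = associate(radar, objects)
    if match is None:
        return None
    # Position (and lane) comes from LiDAR; speed comes from radar.
    return FusedObject(match.x, match.y, radar.radial_speed_mps,
                       in_ego_lane=abs(match.y) <= LANE_HALF_WIDTH_M)

if __name__ == "__main__":
    lidar = [LidarObject(30.0, 0.4), LidarObject(31.0, 3.6)]
    radar = RadarDetection(range_m=30.5, azimuth_deg=6.0, radial_speed_mps=0.0)
    print(fuse(radar, lidar))
```

In the usage example, the stopped radar target is matched to the LiDAR object at y = 3.6 m and flagged as outside the ego lane. Production trackers use probabilistic association and filtering rather than a single nearest-neighbor gate.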

Vehicle cameras are useful for gathering detailed information about a vehicle’s surroundings, such as the color of a stoplight or the text on a sign. However, unlike LiDAR systems, cameras don’t measure depth directly, so they have trouble determining an object’s distance: estimating distance from an image depends on correctly recognizing what the object is, which can be difficult. That’s why most AV technology providers combine LiDAR, radar, cameras, GNSS, and IMUs to take advantage of the inherent strengths of each hardware component.
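The dependence of camera ranging on recognition can be seen in the pinhole-camera relation Z = f·H / h: the distance Z follows from the focal length f (in pixels), the object’s image height h (in pixels), and its real-world height H, which is only known once the object has been classified. The typical heights and the 1000-pixel focal length below are illustrative assumptions.

```python
# Assumed typical real-world heights in meters; a real system would use its own priors.
TYPICAL_HEIGHT_M = {"car": 1.5, "pedestrian": 1.7, "cyclist": 1.7}

def estimate_distance_m(label: str, bbox_height_px: float, focal_length_px: float) -> float:
    """Monocular pinhole estimate Z = f * H / h, which needs the object's class to know H."""
    if bbox_height_px <= 0:
        raise ValueError("bounding-box height must be positive")
    return focal_length_px * TYPICAL_HEIGHT_M[label] / bbox_height_px

if __name__ == "__main__":
    f_px = 1000.0  # assumed focal length in pixels
    print(estimate_distance_m("car", 50.0, f_px))
    print(estimate_distance_m("pedestrian", 50.0, f_px))
```

The same 50-pixel-tall box yields 30 m if it is labeled a car but 34 m if it is labeled a pedestrian, so a classification error becomes a ranging error. LiDAR avoids this by measuring range directly.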

The similarities and differences between various self-driving technology solutions usually boil down to a few key factors: cost, power efficiency, accuracy, and customizability. Depending on the use case, a company might need a more cost-effective AV solution built from more affordable hardware components. If accuracy is the top priority, as with AVs used in industrial applications involving heavy machinery, companies might opt for higher-performance solutions such as 64- or 128-line LiDAR sensors, along with components that can withstand harsh conditions.

Given the wide variety of AV use cases, we’ve found that many companies are looking for customizable solutions so that they can mix and match the components that fit their specific application requirements.

Sensor Fusion Is Fundamental for Self-Driving Vehicles

To achieve high reliability, AVs must be able to accurately perceive the surrounding world. This includes distinguishing dynamic objects (e.g., other motorists, cyclists, and pedestrians) from fixed entities (e.g., road debris, traffic lights, and construction equipment). The different hardware components in an AV system all collect valuable data that must be combined and analyzed in real time, a process called sensor fusion, so that the vehicle has the most accurate picture of its surroundings.

An advanced data synchronization (ADS) controller is one type of solution that enables cars to achieve sensor fusion (Fig. 3). ADS controllers synchronize the data gathered by hardware components, such as the point cloud from LiDAR sensors, speed information from radar sensors, and color images from cameras, and immediately pass that information to the car’s self-driving computing system for processing.
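One piece of the synchronization problem can be illustrated in software: pairing each LiDAR sweep with the camera frame captured closest to it in time, and rejecting pairs that are too far apart. This is a minimal sketch assuming all timestamps are already on a shared clock; a hardware ADS controller may instead trigger sensors together, and the function names, the 10 ms tolerance, and the sensor rates are hypothetical.

```python
import bisect
from typing import List, Optional, Tuple

def nearest_frame(camera_ts: List[float], lidar_t: float, tolerance_s: float) -> Optional[int]:
    """Index of the camera frame closest in time to a LiDAR sweep, or None if none is close enough.

    camera_ts must be sorted ascending (seconds on a shared clock).
    """
    i = bisect.bisect_left(camera_ts, lidar_t)
    candidates = [j for j in (i - 1, i) if 0 <= j < len(camera_ts)]
    if not candidates:
        return None
    best = min(candidates, key=lambda j: abs(camera_ts[j] - lidar_t))
    return best if abs(camera_ts[best] - lidar_t) <= tolerance_s else None

def pair_streams(lidar_ts: List[float], camera_ts: List[float],
                 tolerance_s: float = 0.01) -> List[Tuple[float, float]]:
    """Pair each LiDAR timestamp with its nearest camera timestamp within tolerance."""
    pairs = []
    for t in lidar_ts:
        j = nearest_frame(camera_ts, t, tolerance_s)
        if j is not None:
            pairs.append((t, camera_ts[j]))
    return pairs

if __name__ == "__main__":
    lidar = [0.000, 0.100, 0.200]                                 # assumed 10 Hz sweeps
    camera = [0.003, 0.036, 0.069, 0.102, 0.135, 0.168, 0.215]    # assumed ~30 Hz frames
    print(pair_streams(lidar, camera))
```

Here the sweep at 0.200 s is dropped because no camera frame falls within 10 ms of it; discarding poorly aligned pairs keeps moving objects from appearing in different places to different sensors.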

There are different ways to achieve sensor fusion. The traditional method, often called late fusion, combines the information from each individual sensor only after that sensor has detected something on its own. Because information is discarded before the sensors are combined, the fused data can be incomplete, so the result is less accurate and the AV may misjudge what’s happening on the road ahead (Fig. 4). By contrast, deep-learning early fusion combines sensor data at the raw-data level, preserving relevant information across different kinds of sensors, and provides much more accurate information.
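A common raw-level fusion step, shown here only as a general illustration and not as DeepRoute’s method, is to project each LiDAR point into a calibrated camera image and attach the pixel color beneath it, so that every point carries both geometry and appearance before any detection runs. The sketch assumes extrinsic calibration is already done (points are in the camera frame: x right, y down, z forward), and the intrinsics fx, fy, cx, cy in the example are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]  # (x right, y down, z forward) in the camera frame
RGB = Tuple[int, int, int]

def project(p: Point, fx: float, fy: float, cx: float, cy: float) -> Tuple[int, int]:
    """Pinhole projection of a camera-frame point to integer pixel coordinates (u, v)."""
    x, y, z = p
    return round(fx * x / z + cx), round(fy * y / z + cy)

def paint_points(points: List[Point], image: List[List[RGB]],
                 fx: float, fy: float, cx: float, cy: float) -> List[Tuple[float, ...]]:
    """Attach the pixel color under each LiDAR point, producing (x, y, z, r, g, b) rows.

    Points behind the camera or outside the image are dropped.
    """
    height, width = len(image), len(image[0])
    fused = []
    for p in points:
        if p[2] <= 0:
            continue  # behind the image plane
        u, v = project(p, fx, fy, cx, cy)
        if 0 <= u < width and 0 <= v < height:
            fused.append((*p, *image[v][u]))
    return fused

if __name__ == "__main__":
    red, gray = (255, 0, 0), (128, 128, 128)
    image = [[gray] * 4 for _ in range(4)]
    image[1][2] = red  # a red object at pixel (u=2, v=1)
    pts = [(0.0, -1.0, 10.0), (5.0, 0.0, 1.0), (0.0, 0.0, -3.0)]
    print(paint_points(pts, image, fx=10.0, fy=10.0, cx=2.0, cy=2.0))
```

A late-fusion pipeline would instead run separate detectors on the point cloud and the image and merge only their output boxes, so an object each detector misses on its own never reaches the fusion stage.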

For example, after an AV synchronizes temporal and spatial data, DeepRoute’s technology fuses the data at the original (raw) layer and outputs it across eight dimensions, including color, texture, and speed. This approach provides full redundancy and greatly improves both precision and recall. In addition, the deep-learning model can accurately predict the trajectories of vehicles, cyclists, and pedestrians, enhancing the reliability and safety of AVs.
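Precision and recall measure two different failure modes of a detector: precision is the fraction of reported detections that are real objects, TP / (TP + FP), and recall is the fraction of real objects that were detected, TP / (TP + FN). The counts in the example below are made up for illustration and are not DeepRoute results.

```python
from typing import Tuple

def precision_recall(true_positives: int, false_positives: int,
                     false_negatives: int) -> Tuple[float, float]:
    """Precision = TP / (TP + FP); recall = TP / (TP + FN). Empty denominators give 0.0."""
    detected = true_positives + false_positives  # everything the system reported
    actual = true_positives + false_negatives    # everything that was really there
    precision = true_positives / detected if detected else 0.0
    recall = true_positives / actual if actual else 0.0
    return precision, recall

if __name__ == "__main__":
    # Hypothetical counts: 90 correct detections, 10 phantom objects, 30 missed objects.
    print(precision_recall(90, 10, 30))
```

With these hypothetical counts, precision is 0.9 and recall is 0.75. Reporting phantom objects lowers precision, while missing real objects lowers recall; improving both means the fused data is simultaneously more complete and more trustworthy.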

From Data to Driving

All AVs require a high-definition (HD) map and the ability to process positioning information in real time. An HD map is built from precise data gathered by the various hardware components in an AV. These maps need centimeter-level precision that holds even when GNSS signals are weakened by skyscrapers and other tall buildings in cities, so that an AV can recognize its environment and make decisions on its own.
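When GNSS signals drop out between tall buildings, a vehicle still needs a position estimate. The simplest way to bridge such a gap is dead reckoning from wheel speed and IMU yaw rate, sketched below. This is a swapped-in textbook technique, not the article’s method: it drifts over time, which is why production systems correct it by matching sensor data against the HD map. The pose convention and names are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass
class Pose:
    x: float        # meters east
    y: float        # meters north
    heading: float  # radians, counterclockwise from east

def dead_reckon(pose: Pose, speed_mps: float, yaw_rate_rps: float, dt: float) -> Pose:
    """Advance a pose by one time step using wheel speed and IMU yaw rate.

    Uses the midpoint heading over the step, a simple approximation for small dt.
    """
    mid_heading = pose.heading + 0.5 * yaw_rate_rps * dt
    return Pose(
        x=pose.x + speed_mps * dt * math.cos(mid_heading),
        y=pose.y + speed_mps * dt * math.sin(mid_heading),
        heading=pose.heading + yaw_rate_rps * dt,
    )

if __name__ == "__main__":
    pose = Pose(0.0, 0.0, 0.0)  # last good GNSS fix
    for _ in range(10):          # 1 s of GNSS outage at an assumed 10 Hz update rate
        pose = dead_reckon(pose, speed_mps=10.0, yaw_rate_rps=0.0, dt=0.1)
    print(pose)
```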

While the sensors and ADS controller in a vehicle collect and transfer data for synchronization and calibration, the vehicle’s perception software module is responsible for merging all sensing modalities into a precise 3D representation of the vehicle’s surroundings. The perception software outputs the accurate 3D position, velocity, acceleration, and semantics of every object, including object-type labels (car, pedestrian, cyclist, etc.). As the perception module produces this output, the deep-learning model conveys what’s on the road ahead, allowing the vehicle to set the key parameters used to predict its trajectory.
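The perception output described above (position, velocity, acceleration, and label for each object) maps naturally onto a small record type. As a baseline for trajectory prediction, the sketch below extrapolates each object with the constant-acceleration formula p(t) = p0 + v0·t + ½·a·t². The record layout is hypothetical, and this physics baseline is not the deep-learning predictor the article describes; learned models exist precisely because real road users do not move with constant acceleration.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

@dataclass
class PerceivedObject:
    label: str          # e.g. "car", "pedestrian", "cyclist"
    position: Vec3      # meters
    velocity: Vec3      # m/s
    acceleration: Vec3  # m/s^2

def predict_trajectory(obj: PerceivedObject, horizon_s: float, dt: float) -> List[Vec3]:
    """Constant-acceleration extrapolation: p(t) = p0 + v0*t + 0.5*a*t^2 at each step."""
    steps = int(round(horizon_s / dt))
    path = []
    for k in range(1, steps + 1):
        t = k * dt
        path.append(tuple(p + v * t + 0.5 * a * t * t
                          for p, v, a in zip(obj.position, obj.velocity, obj.acceleration)))
    return path

if __name__ == "__main__":
    cyclist = PerceivedObject("cyclist", (10.0, 0.0, 0.0), (5.0, 0.0, 0.0), (0.0, 0.0, 0.0))
    for point in predict_trajectory(cyclist, horizon_s=2.0, dt=0.5):
        print(point)
```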

Using the data from the perception module and information from the vehicle itself (for instance, its speed, current location, and camber angle), the planning and control module decides how the AV should operate and then maneuvers the vehicle.
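To make the control half of that module concrete, the sketch below uses pure pursuit, a classic path-tracking method. The article does not say which controller DeepRoute uses, so treat this as a swapped-in illustration. Given a lookahead point on the planned path in the vehicle frame, pure pursuit computes the curvature of the arc through that point, κ = 2y / ℓ², and converts it to a front-wheel steering angle δ = atan(L·κ) for wheelbase L. The 2.8 m wheelbase in the example is an assumption.

```python
import math

def pure_pursuit_steering(lookahead_x: float, lookahead_y: float, wheelbase_m: float) -> float:
    """Front-wheel steering angle (radians) that arcs the rear axle through a lookahead point.

    (lookahead_x, lookahead_y) is a point on the planned path in the vehicle frame:
    x forward, y left, origin at the rear axle. Positive result = steer left.
    """
    ld_sq = lookahead_x ** 2 + lookahead_y ** 2
    if ld_sq == 0.0:
        return 0.0  # degenerate: the target point is at the vehicle itself
    curvature = 2.0 * lookahead_y / ld_sq  # curvature of the arc through the point
    return math.atan(wheelbase_m * curvature)

if __name__ == "__main__":
    WHEELBASE_M = 2.8  # assumed passenger-car wheelbase
    for target in [(10.0, 0.0), (10.0, 1.0), (10.0, -1.0)]:
        angle = pure_pursuit_steering(*target, WHEELBASE_M)
        print(target, f"{math.degrees(angle):.2f} deg")
```

A planner upstream would choose the path and target speed; this step only turns the chosen path into a steering command.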

AVs are highly dependent on their ability to process data efficiently and in real time. DeepRoute’s proprietary, independently developed inference engine, DeepRoute-Engine, is an example of a high-efficiency engine that reduces the computational load on the computing platform so that the AV can deliver a Level 4 self-driving experience (Fig. 5).
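The article does not describe how DeepRoute-Engine reduces its computational load. As a general illustration of one technique inference engines commonly use, the sketch below performs symmetric int8 quantization: floating-point values are scaled into the range −127 to 127, the expensive multiply-accumulate runs on small integers, and the result is rescaled once at the end. The function names are hypothetical.

```python
from typing import List, Tuple

def quantize(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: q = round(v / scale), scale = max|v| / 127."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    return [max(-127, min(127, round(v / scale))) for v in values], scale

def int8_dot(a: List[float], b: List[float]) -> float:
    """Approximate a float dot product using integer multiply-accumulates."""
    qa, sa = quantize(a)
    qb, sb = quantize(b)
    acc = sum(x * y for x, y in zip(qa, qb))  # integer accumulation (int32 on real hardware)
    return acc * sa * sb                      # rescale the integer result back to float

if __name__ == "__main__":
    a, b = [0.5, -1.2, 3.3], [1.0, 0.25, -0.7]
    exact = sum(x * y for x, y in zip(a, b))
    print(exact, int8_dot(a, b))
```

The integer result differs slightly from the exact one; engines accept that small accuracy loss because integer arithmetic is cheaper in both time and power on most accelerators.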

A cloud platform and infrastructure are also fundamental parts of self-driving technology. The cloud platform supplies the data storage, development environment, tools, and monitoring system that the self-driving system needs. The infrastructure module provides the self-driving operating system with resources and runtime scheduling, visualization systems for testing, and a simulator for virtual testing. These additional modules ensure the system is fully developed and can run safely and reliably.

Automated AV Testing and Simulation

Safety is a top priority among AV technology providers, automotive OEMs, and mobility and logistics companies. Autonomous driving simply won’t succeed if it isn’t safe. Many companies, including DeepRoute, test AVs in a number of different ways to ensure they are safe. For example, companies test autonomous cars on closed tracks to evaluate performance in different scenarios, on public roads to assess performance in real-world conditions, and in simulation. Simulation lets engineers test technology virtually, manipulating specific scenarios such as cornering in the rain to evaluate how AVs will react.
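Simulation makes it cheap to sweep scenario parameters that would be slow or risky to vary on a track. The sketch below enumerates combinations of curve radius and road surface for a cornering test and attaches the flat-curve friction limit v = √(μ·g·r), the speed above which tires can no longer supply the needed centripetal force. The friction coefficients are rough assumptions, and a real simulator would then run the full AV stack through each generated scenario.

```python
import itertools
import math

G = 9.81  # m/s^2
# Assumed tire-road friction coefficients; real values vary widely with tires and surface.
FRICTION = {"dry": 0.7, "wet": 0.4}

def max_cornering_speed_mps(radius_m: float, mu: float) -> float:
    """Flat-curve limit where friction supplies the centripetal force: v = sqrt(mu * g * r)."""
    return math.sqrt(mu * G * radius_m)

def scenario_grid(radii_m, surfaces):
    """Enumerate every (radius, surface) combination for a simulated cornering test."""
    for radius, surface in itertools.product(radii_m, surfaces):
        yield {"radius_m": radius, "surface": surface,
               "v_max_kmh": 3.6 * max_cornering_speed_mps(radius, FRICTION[surface])}

if __name__ == "__main__":
    for s in scenario_grid([30.0, 60.0], ["dry", "wet"]):
        print(f"{s['surface']:>3} curve, r = {s['radius_m']:.0f} m: "
              f"limit ~{s['v_max_kmh']:.0f} km/h")
```

Each generated scenario gives the simulator a concrete test case with a known physical limit, so engineers can check whether the planner slows the vehicle enough before a wet curve.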

As companies evaluate which types of self-driving systems to use, they should carefully consider the objectives of their specific applications. For instance, industrial use cases will have different performance and cost requirements than consumer vehicles. Companies should also evaluate the differences between various solutions and the algorithms they use to process data. We’re excited by the incredible progress that’s already been made in the AV industry and look forward to seeing Level 4 AVs produced on a mass scale to make roadways safer for everyone.

Nianqiu Liu is Vice President and tech lead of the hardware team at DeepRoute.ai.